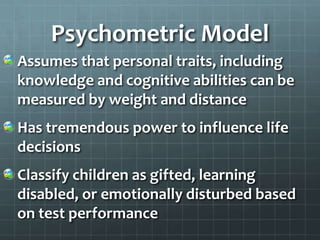

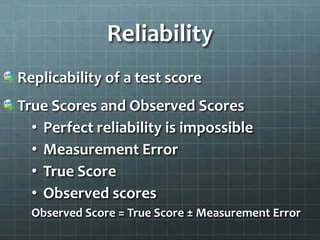

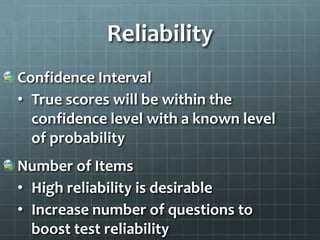

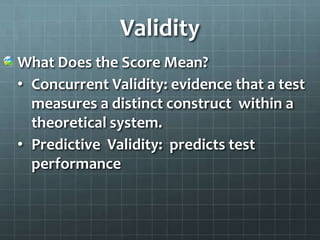

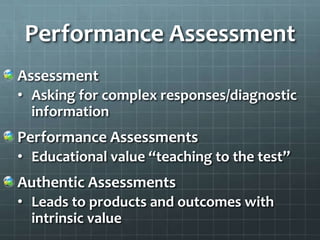

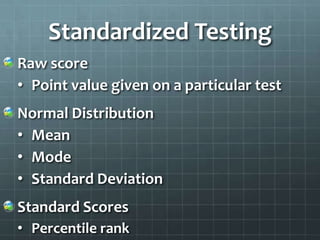

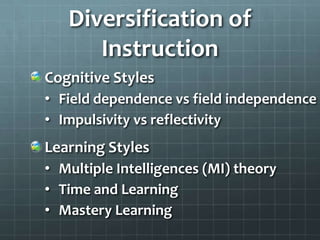

The document covers key concepts in psychometrics: reliability, validity, standardized testing, and individual differences in assessment. Reliability refers to the consistency of test scores and generally improves as the number of test items increases. Validity concerns whether a test actually measures the construct it is intended to measure. Standardized tests yield norm-referenced scores, which locate a test taker relative to a reference group whose scores are taken to follow a normal distribution. Finally, the document outlines theories of cognitive styles, learning styles, and group differences that influence assessment.
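Two of the quantitative claims above can be illustrated with a short sketch. The summary does not name a formula, so this is an assumption: the link between test length and reliability is shown here with the Spearman-Brown prediction formula, which holds only if the added items are parallel to the existing ones. The norm-referenced example assumes a hypothetical norm group with mean 100 and standard deviation 15. The function names and all input values are illustrative and do not come from the document.

```python
from statistics import NormalDist


def spearman_brown(reliability: float, length_factor: float) -> float:
    """Predict reliability after changing test length by `length_factor`.

    Assumes the added items are parallel to the existing ones
    (Spearman-Brown prediction formula).
    """
    return (length_factor * reliability) / (1 + (length_factor - 1) * reliability)


def percentile_rank(raw_score: float, norm_mean: float, norm_sd: float) -> float:
    """Convert a raw score to a percentile rank in a normal norm group."""
    # Standardize the raw score against the norm group (z-score).
    z = (raw_score - norm_mean) / norm_sd
    # The standard normal CDF gives the share of the norm group scoring below z.
    return 100 * NormalDist().cdf(z)


if __name__ == "__main__":
    # Hypothetical test with reliability 0.60, doubled in length.
    print(f"{spearman_brown(0.60, 2):.2f}")  # prints 0.75

    # Hypothetical raw score one standard deviation above the norm mean.
    print(f"{percentile_rank(115, 100, 15):.1f}")  # prints 84.1
```

Under these assumptions, doubling a test with reliability 0.60 raises the predicted reliability to about 0.75, and a score one standard deviation above the mean falls at roughly the 84th percentile of the norm group.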