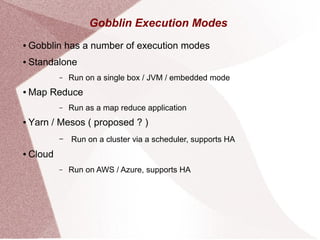

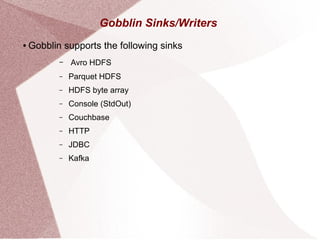

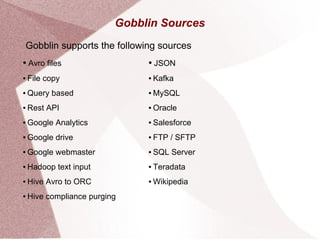

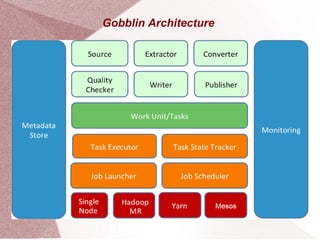

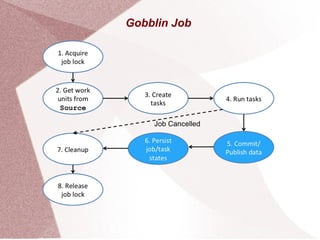

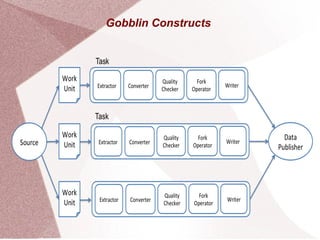

Apache Gobblin is a big data integration framework that simplifies data ingestion, replication, organization, and lifecycle management for both streaming and batch workloads. It runs in several execution modes, including standalone, MapReduce, and cloud-based deployments, and ships with a range of sources and sinks. A Gobblin job is assembled from pluggable constructs; the runtime handles concerns such as scheduling and error handling, and the job's configuration is defined in properties files.
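To make the properties-based configuration concrete, here is a minimal sketch that parses a small job definition using only the JDK's `java.util.Properties`. It does not call Gobblin itself. The class `JobConfigSketch`, the job values, and the source class `com.example.ingest.ExampleSource` are hypothetical, and the keys shown (`job.name`, `job.group`, `source.class`, `writer.output.format`) are assumed to follow Gobblin's naming conventions, so check them against the documentation for your version.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.Properties;

// Illustrative only: parses a properties-style job definition with the JDK,
// without depending on Gobblin itself.
class JobConfigSketch {

    // Example job file contents. The keys are assumed to match Gobblin's
    // conventions; the values and the source class are hypothetical.
    static final String JOB_FILE = String.join("\n",
        "job.name=ExampleIngestJob",
        "job.group=Examples",
        "# Hypothetical pluggable source implementation",
        "source.class=com.example.ingest.ExampleSource",
        "writer.output.format=AVRO",
        "");

    // Parse properties-format text into a Properties object.
    static Properties load(String text) throws IOException {
        Properties props = new Properties();
        props.load(new StringReader(text));
        return props;
    }

    public static void main(String[] args) throws IOException {
        Properties props = load(JOB_FILE);
        // Iteration order of property names is not guaranteed.
        for (String key : props.stringPropertyNames()) {
            System.out.println(key + " = " + props.getProperty(key));
        }
    }
}
```

In this sketch the pluggable construct is selected by class name in the configuration rather than in code, which is the general idea behind defining jobs through properties files: swapping a source or output format means editing a key, not recompiling the job.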