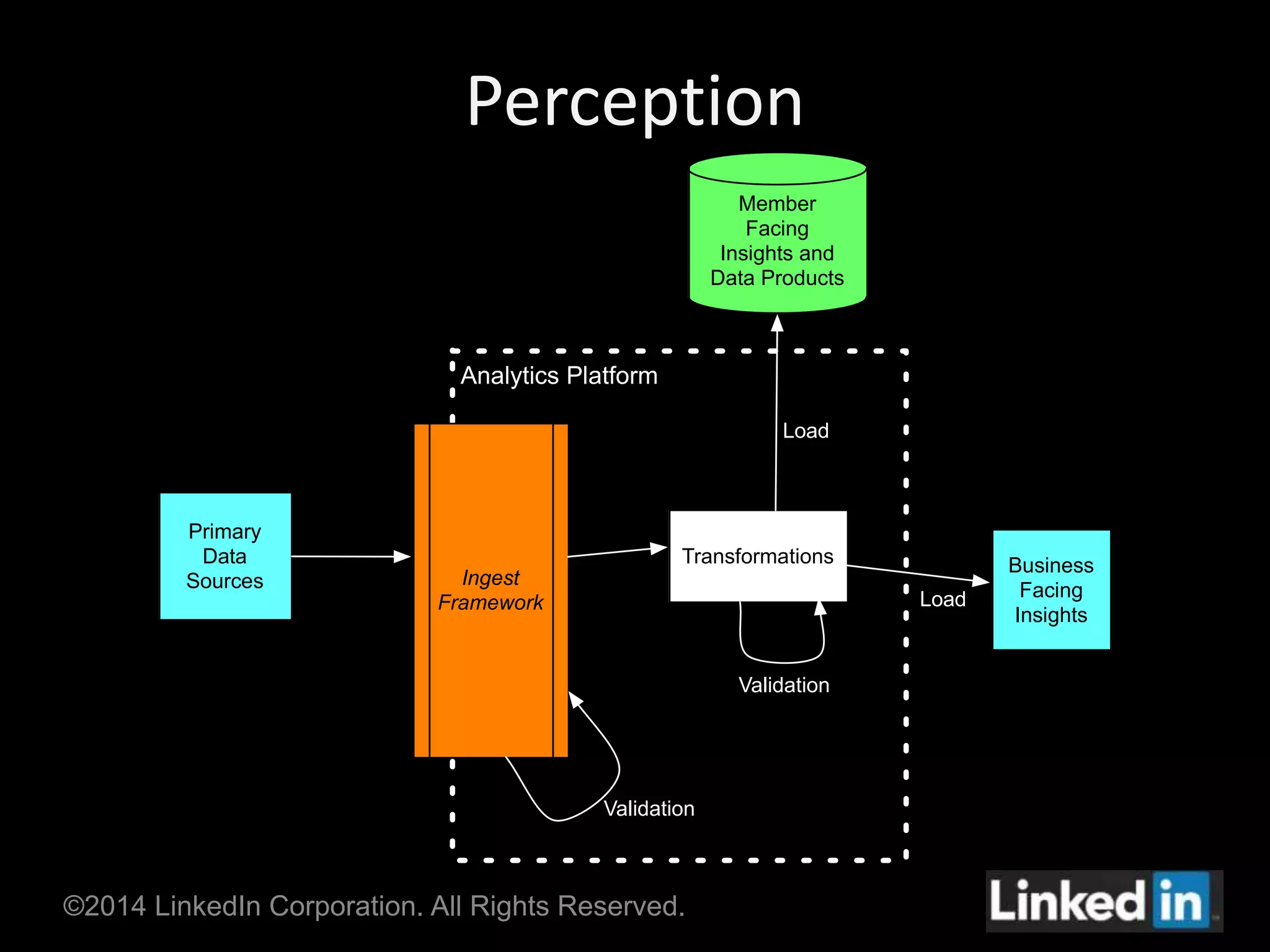

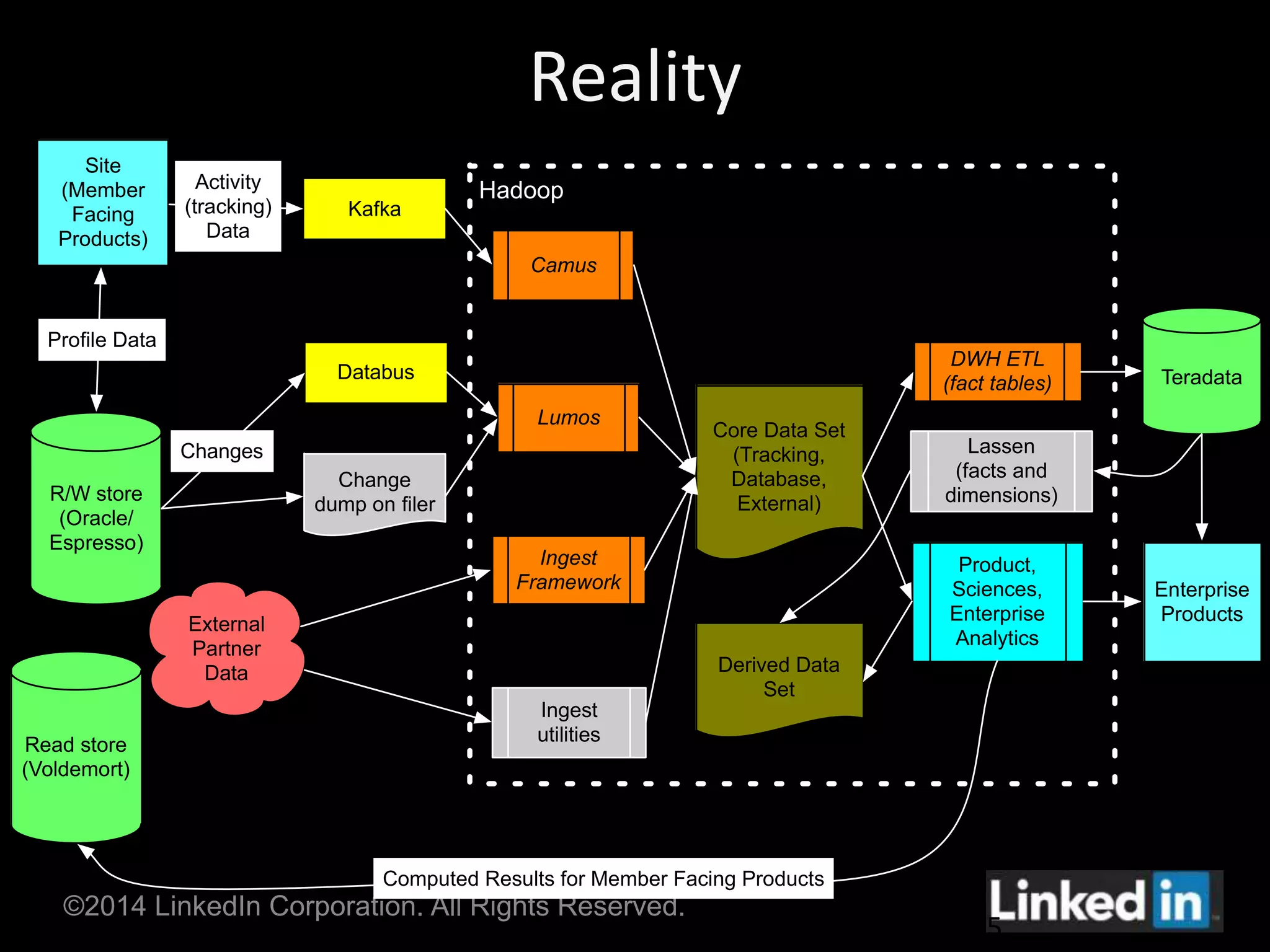

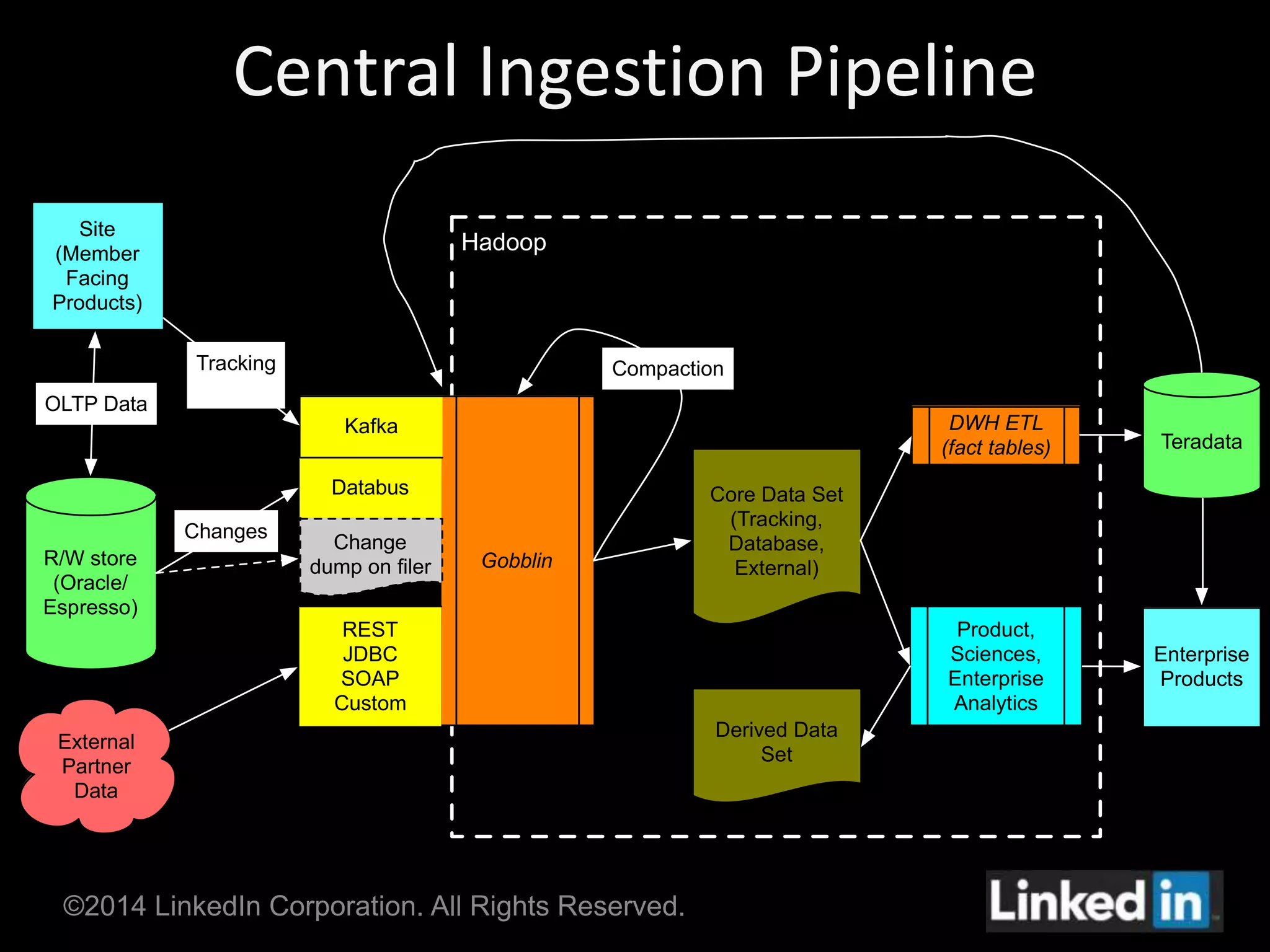

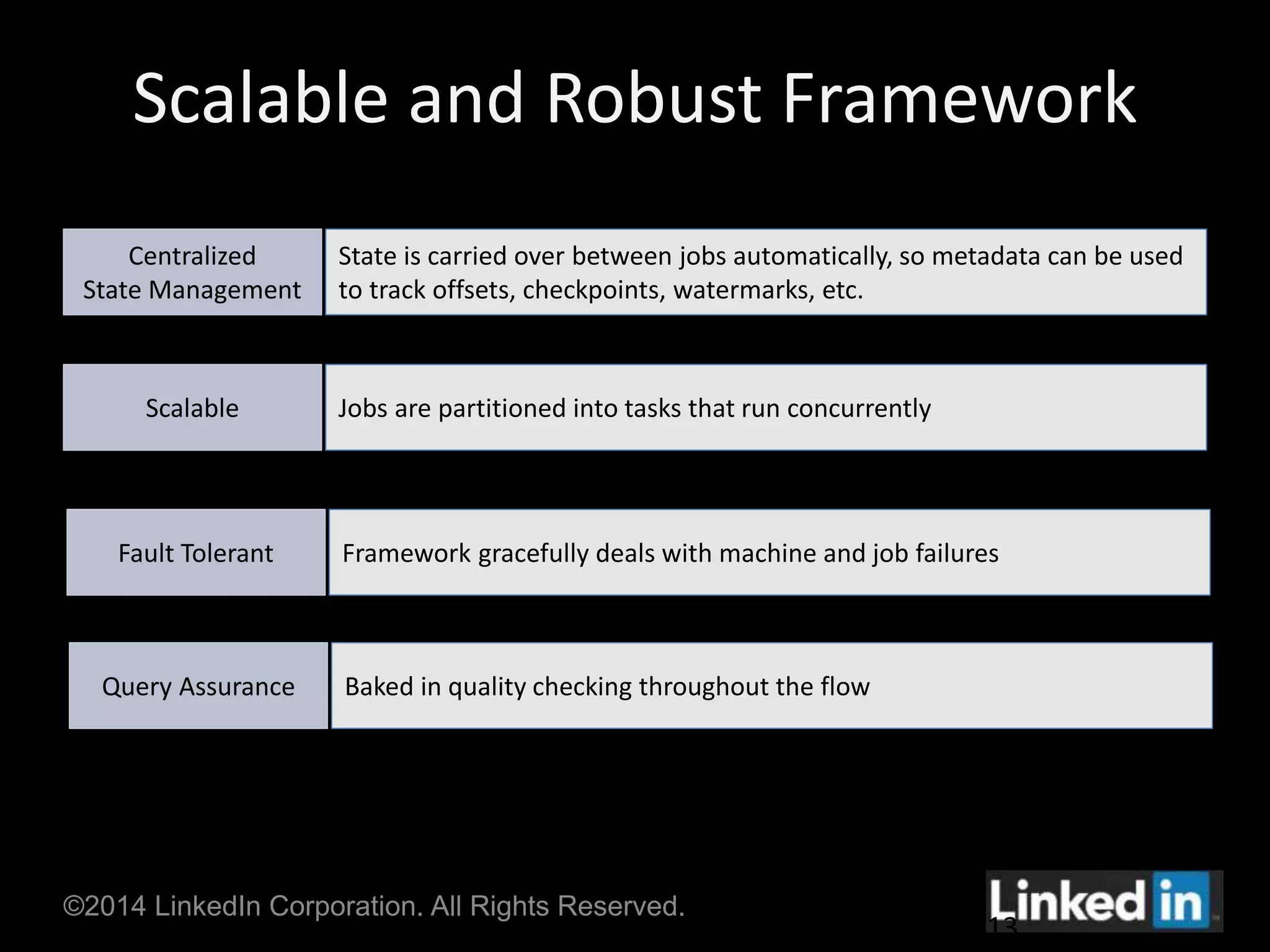

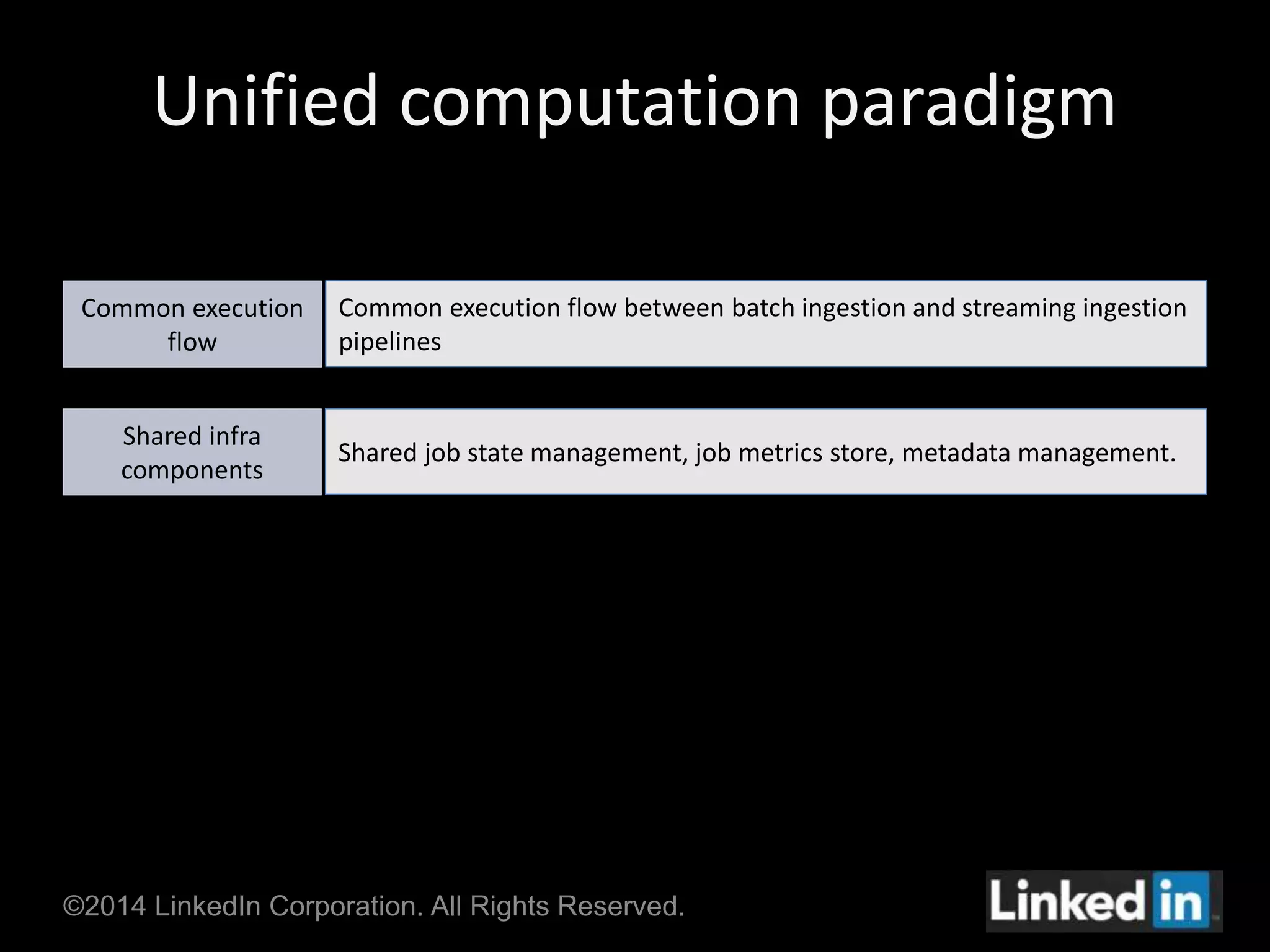

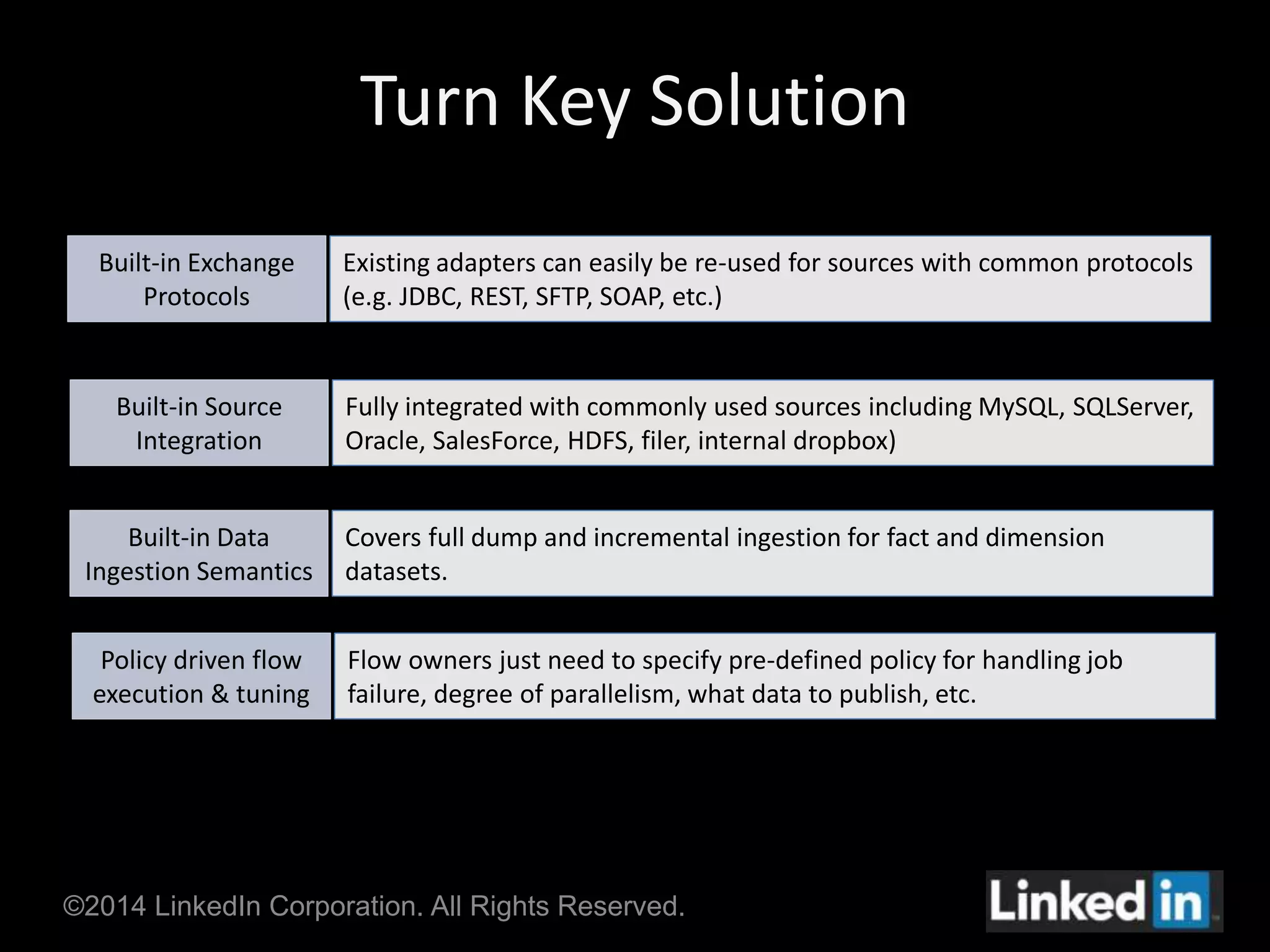

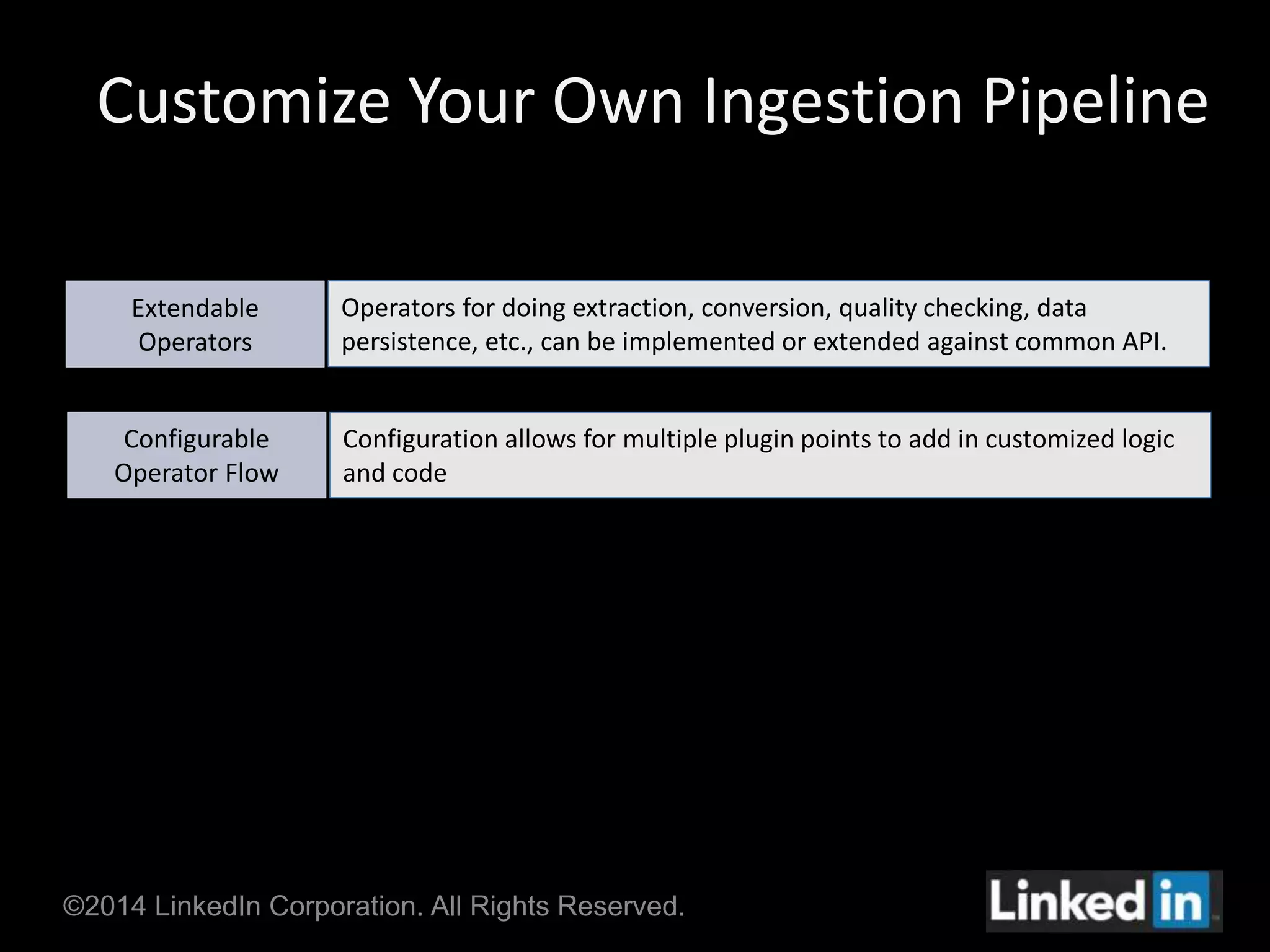

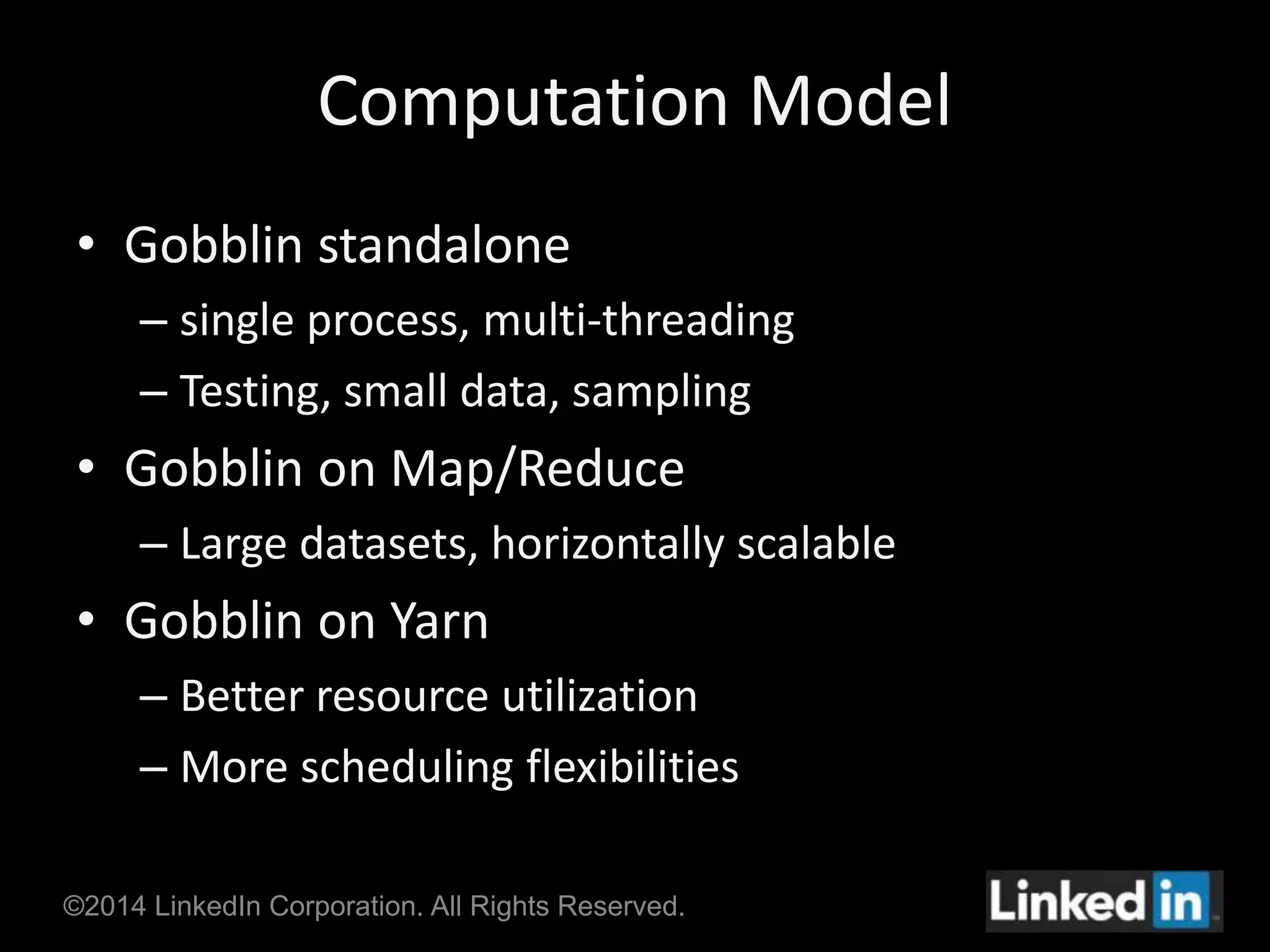

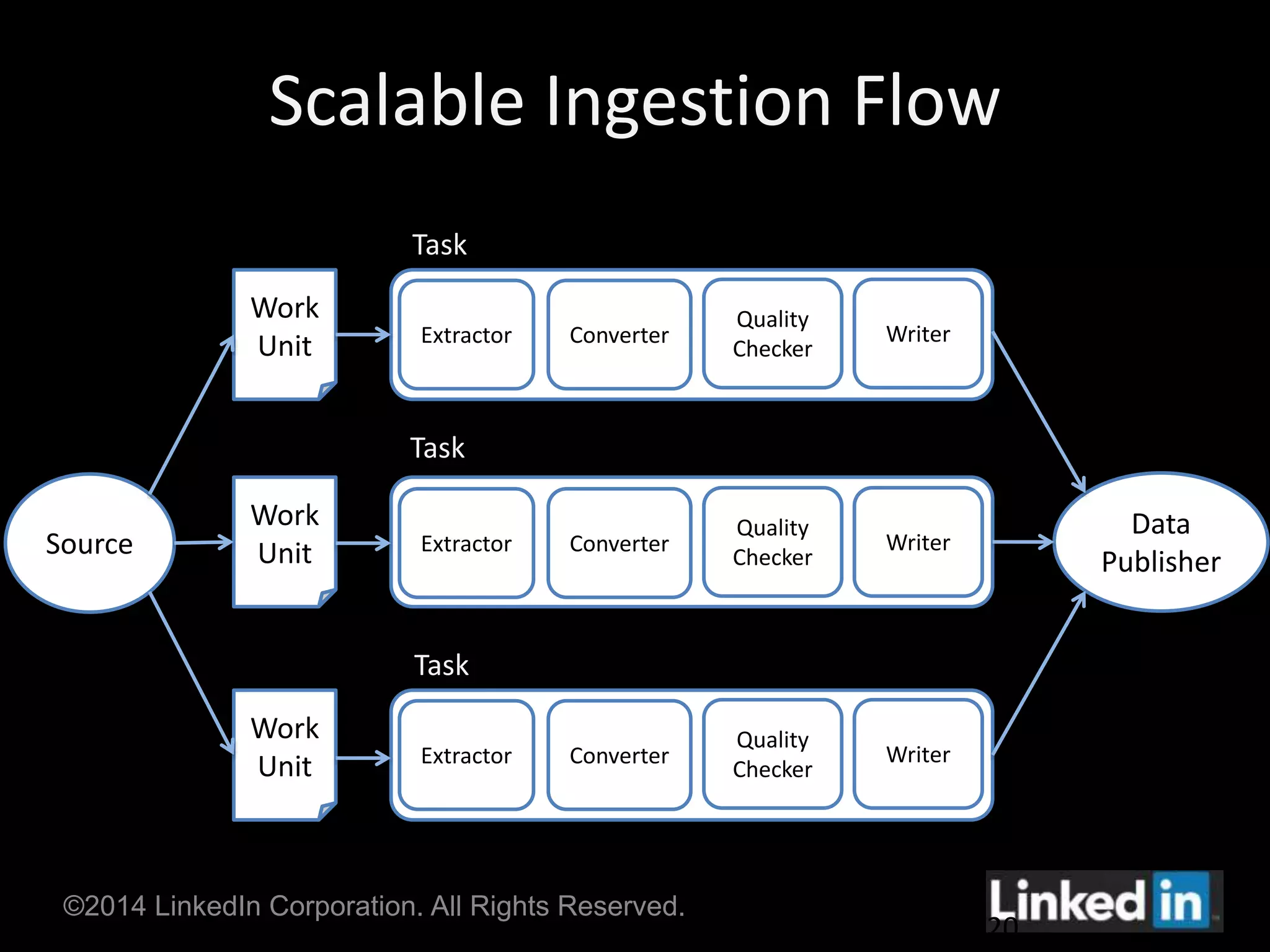

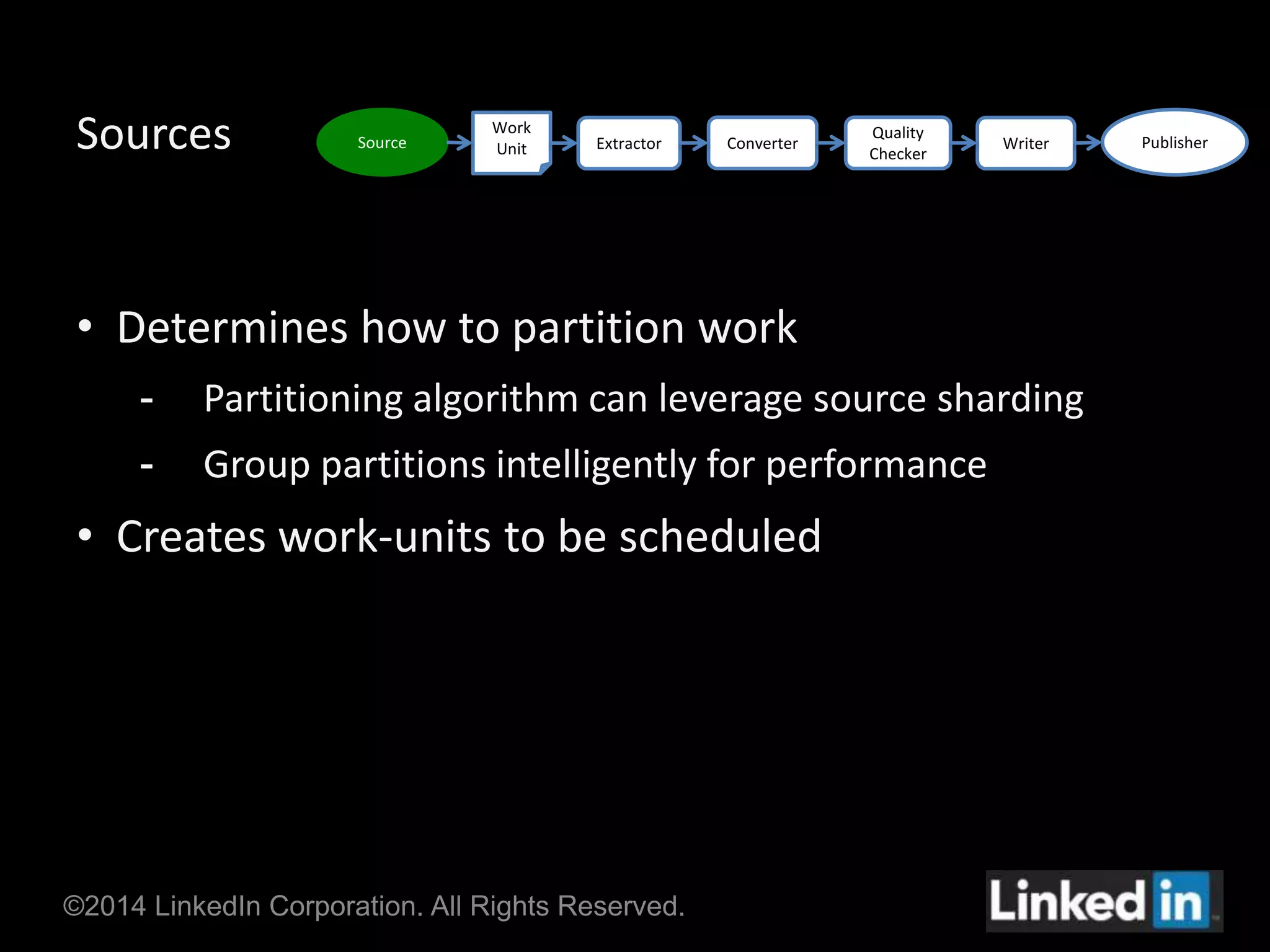

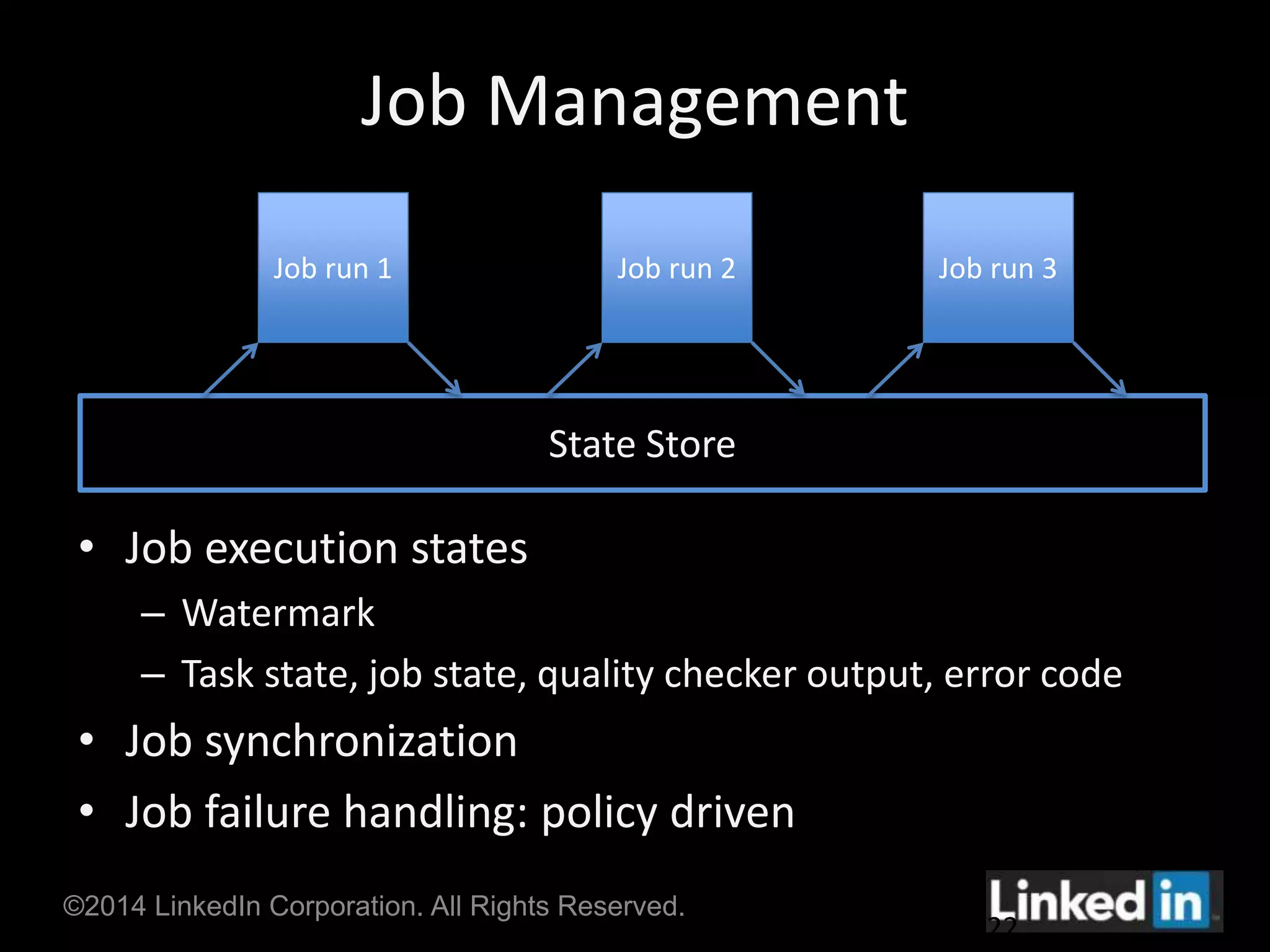

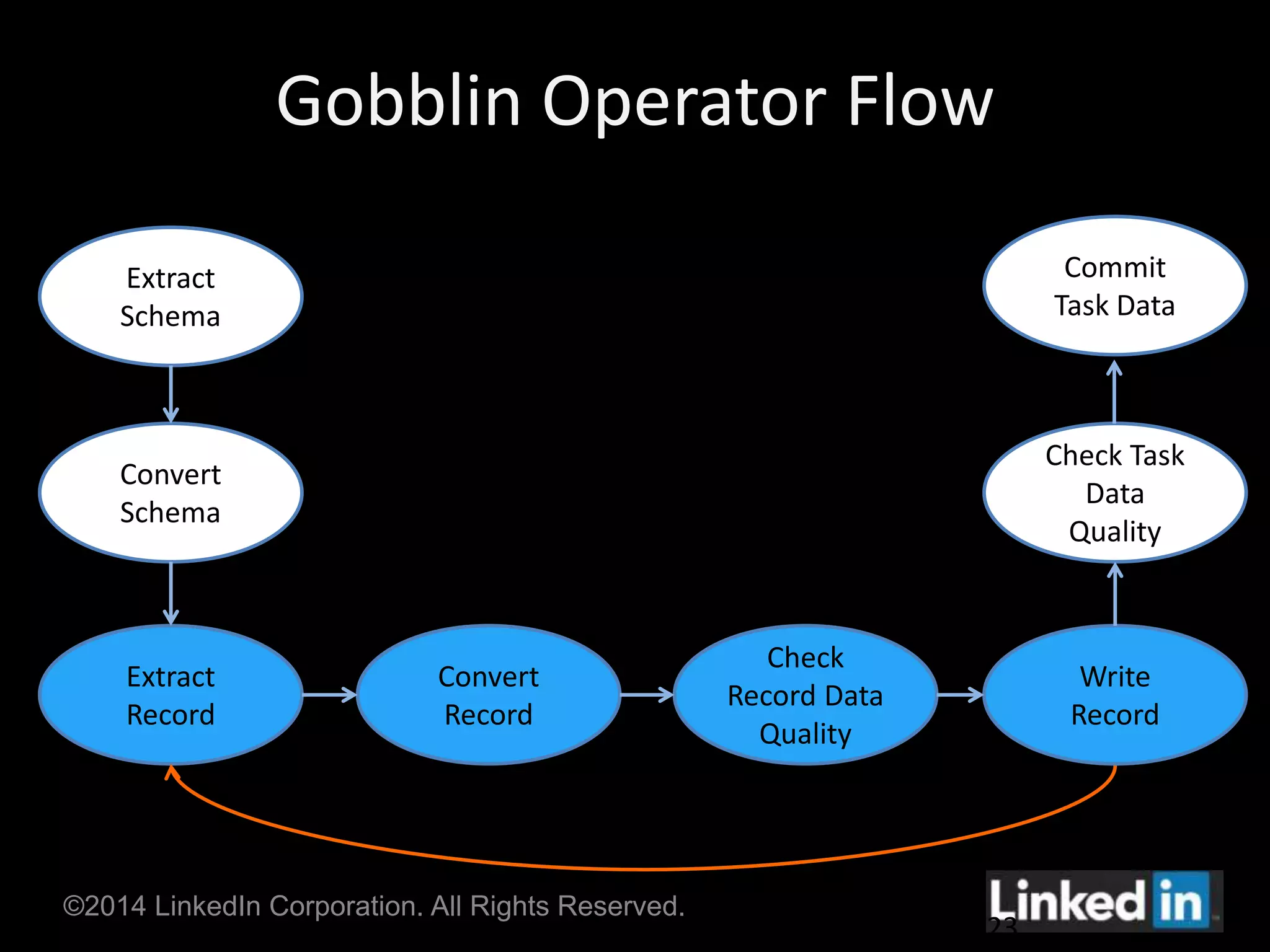

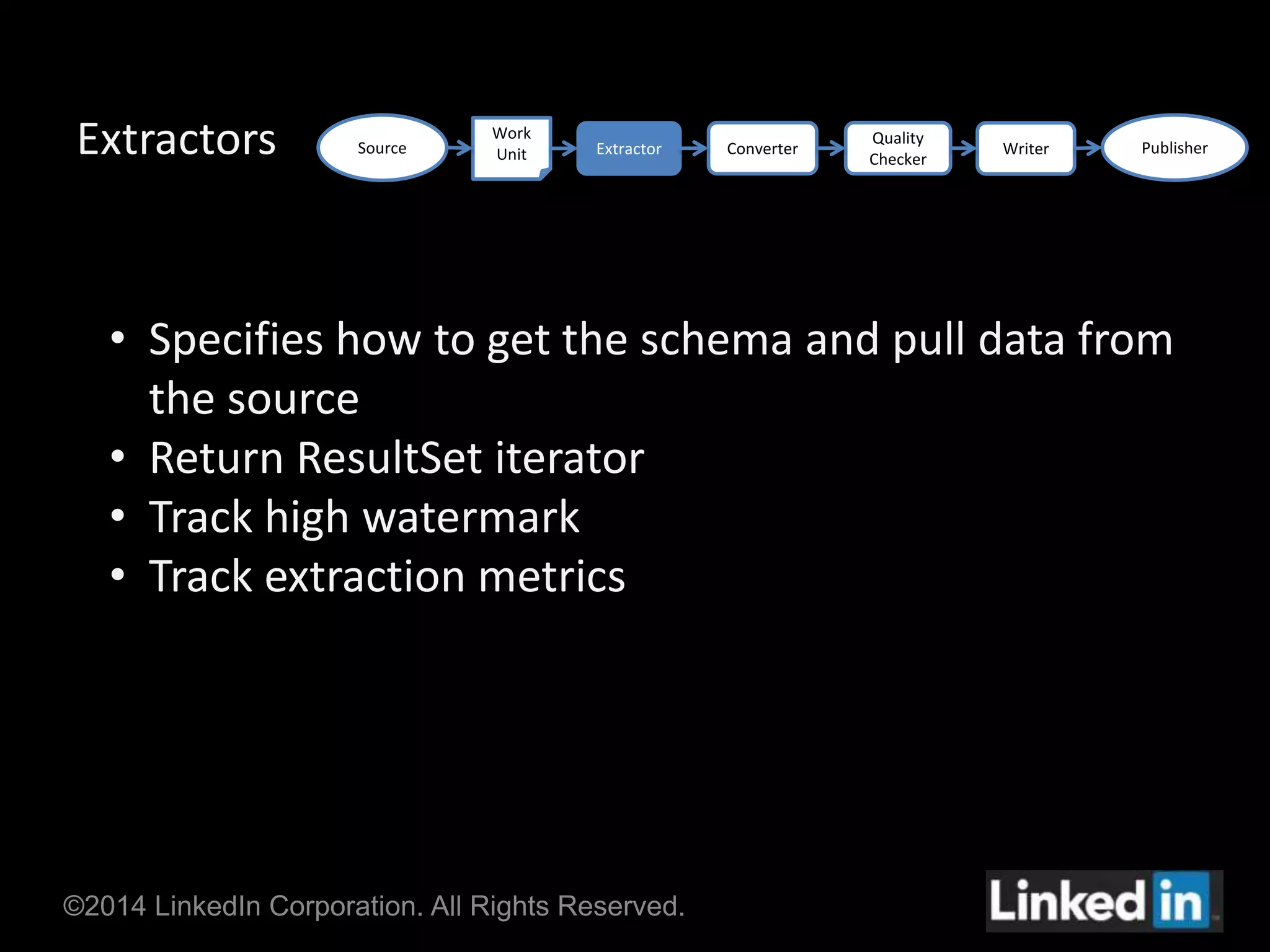

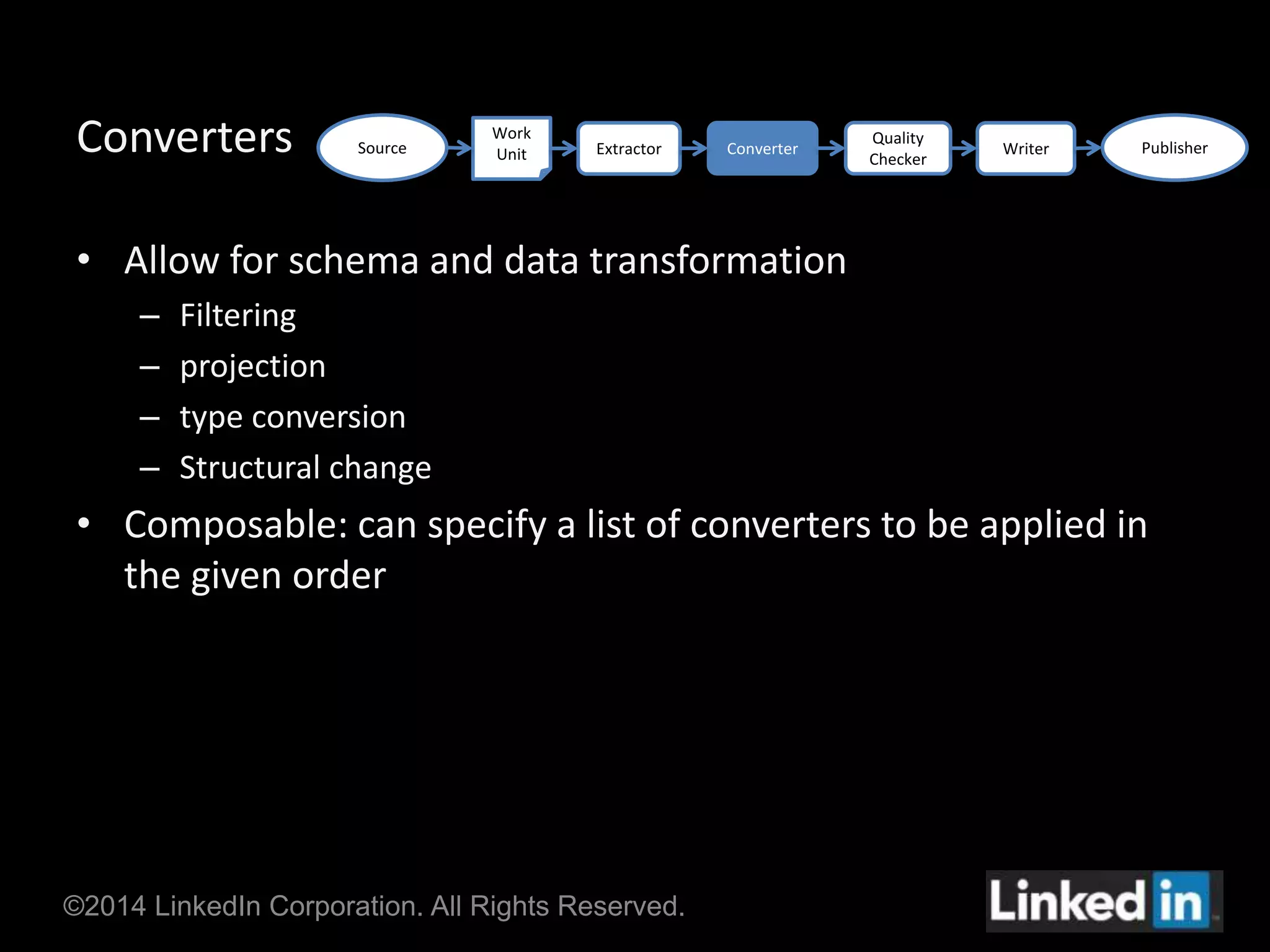

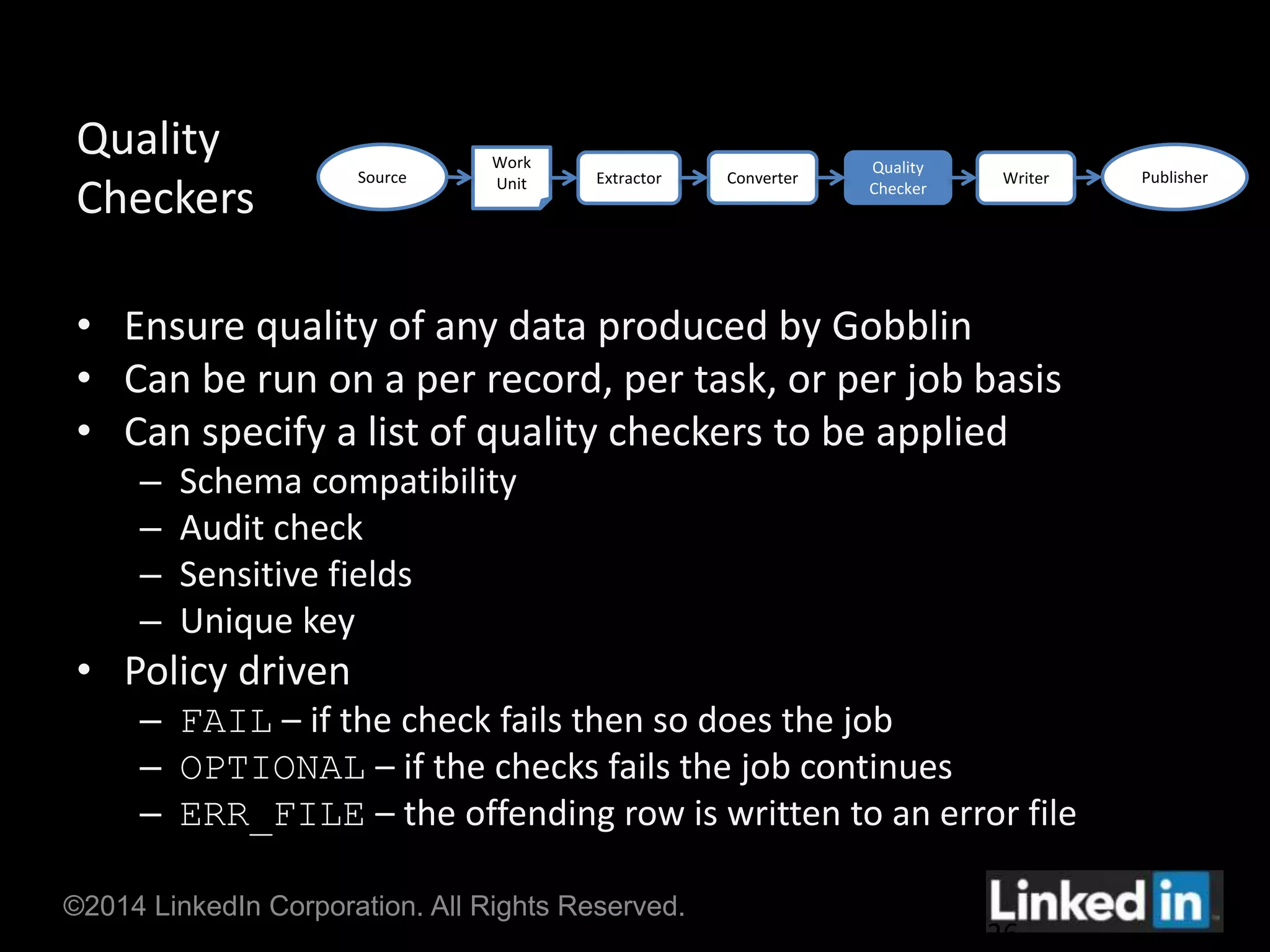

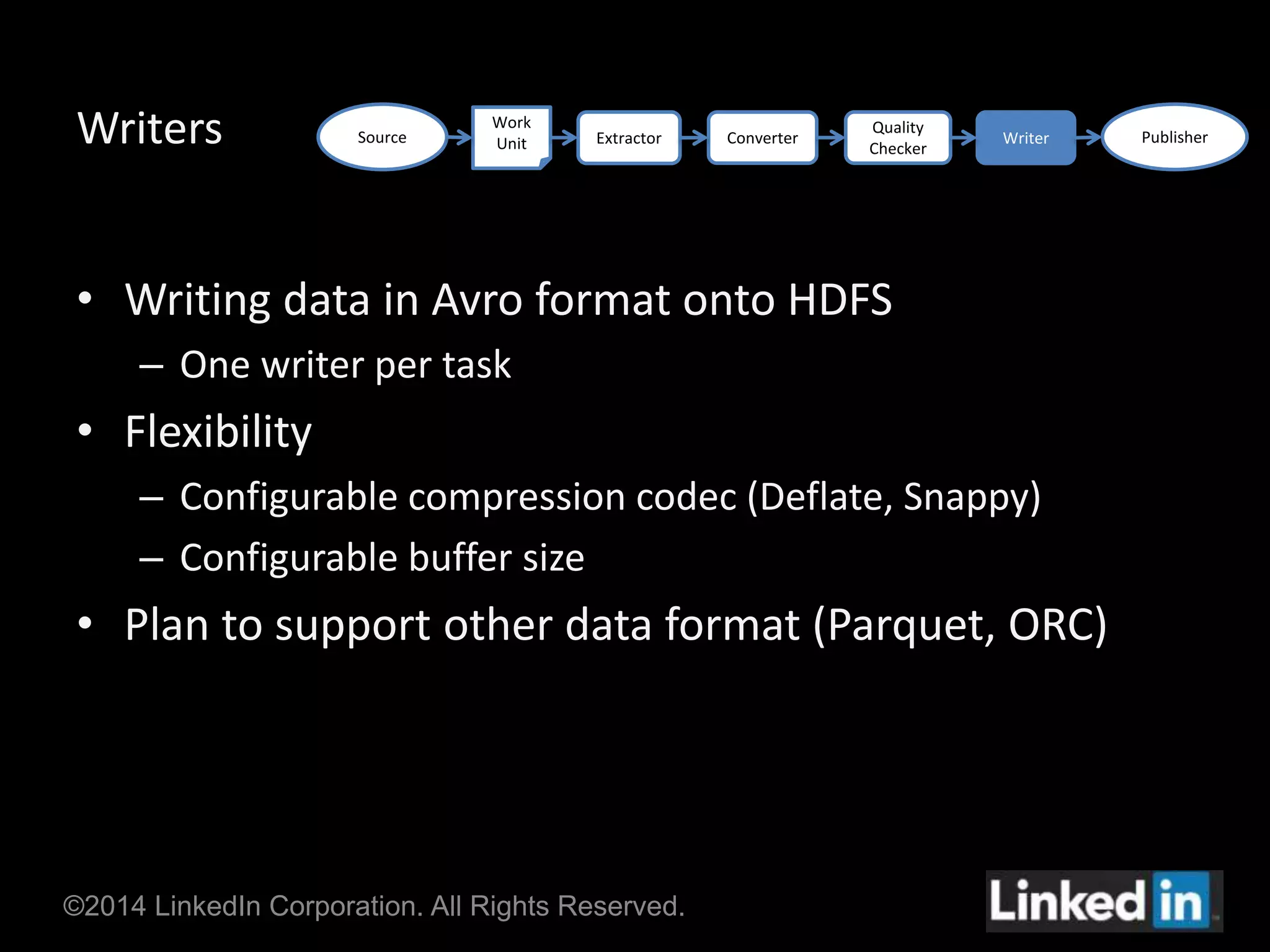

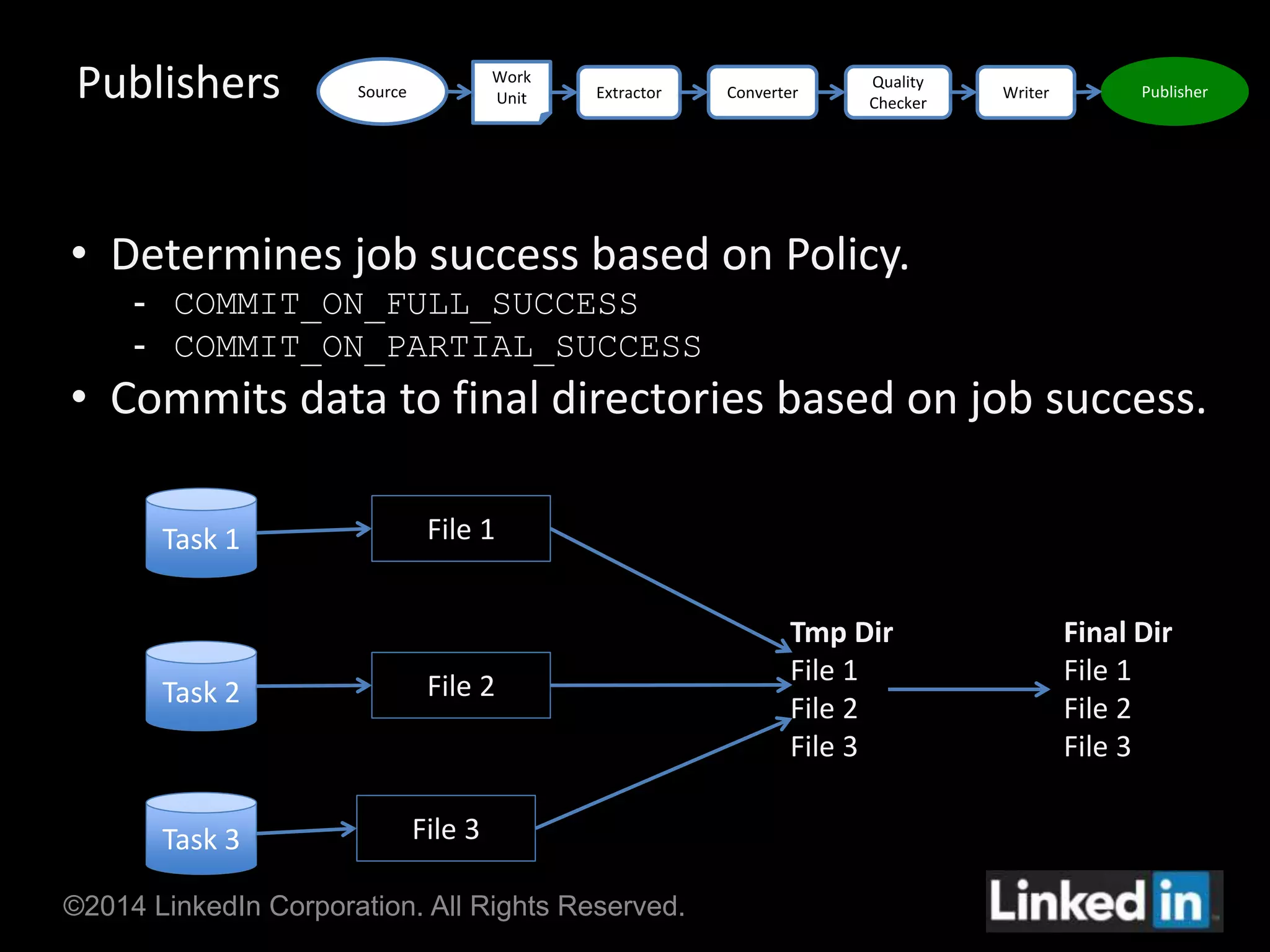

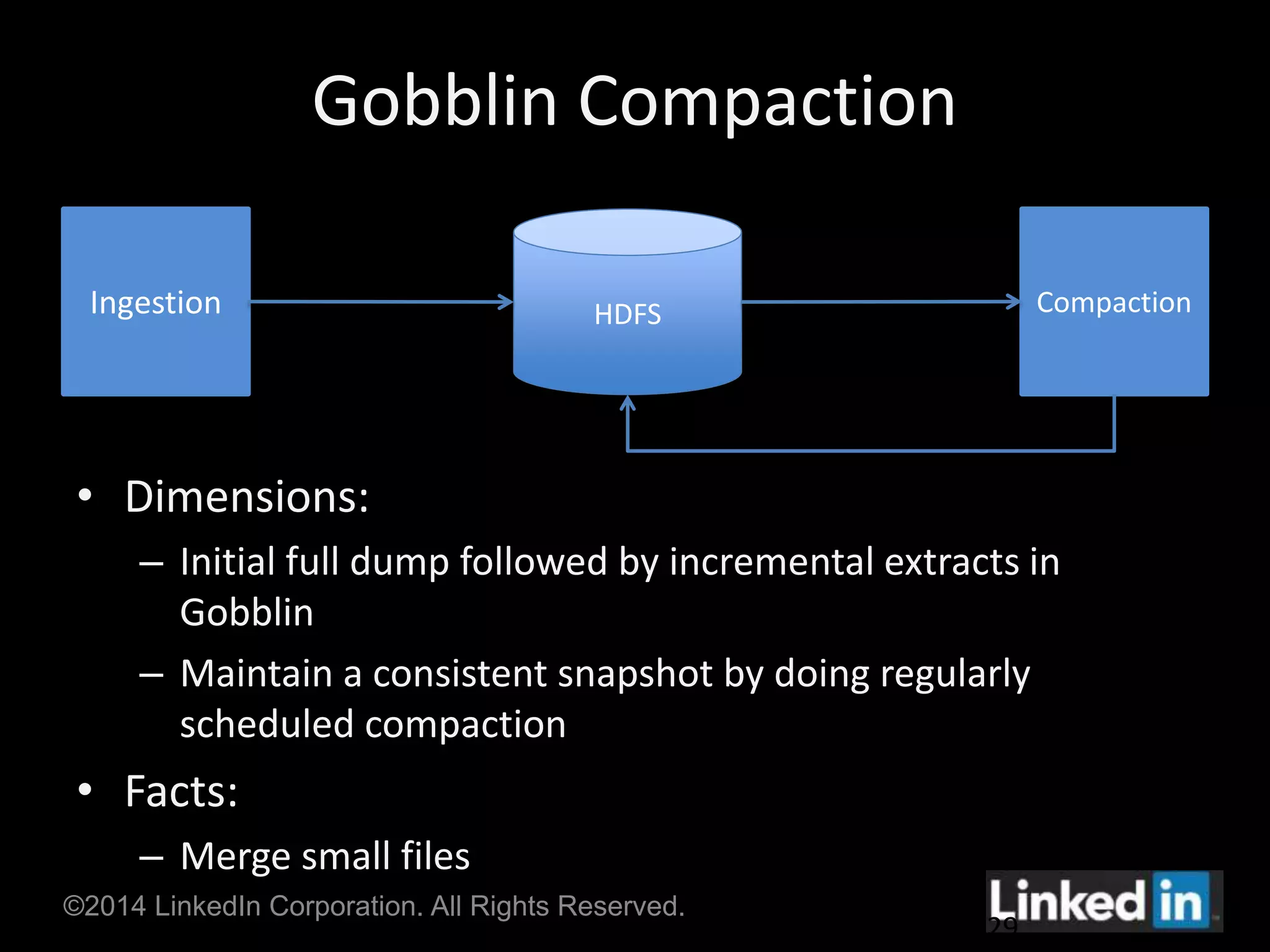

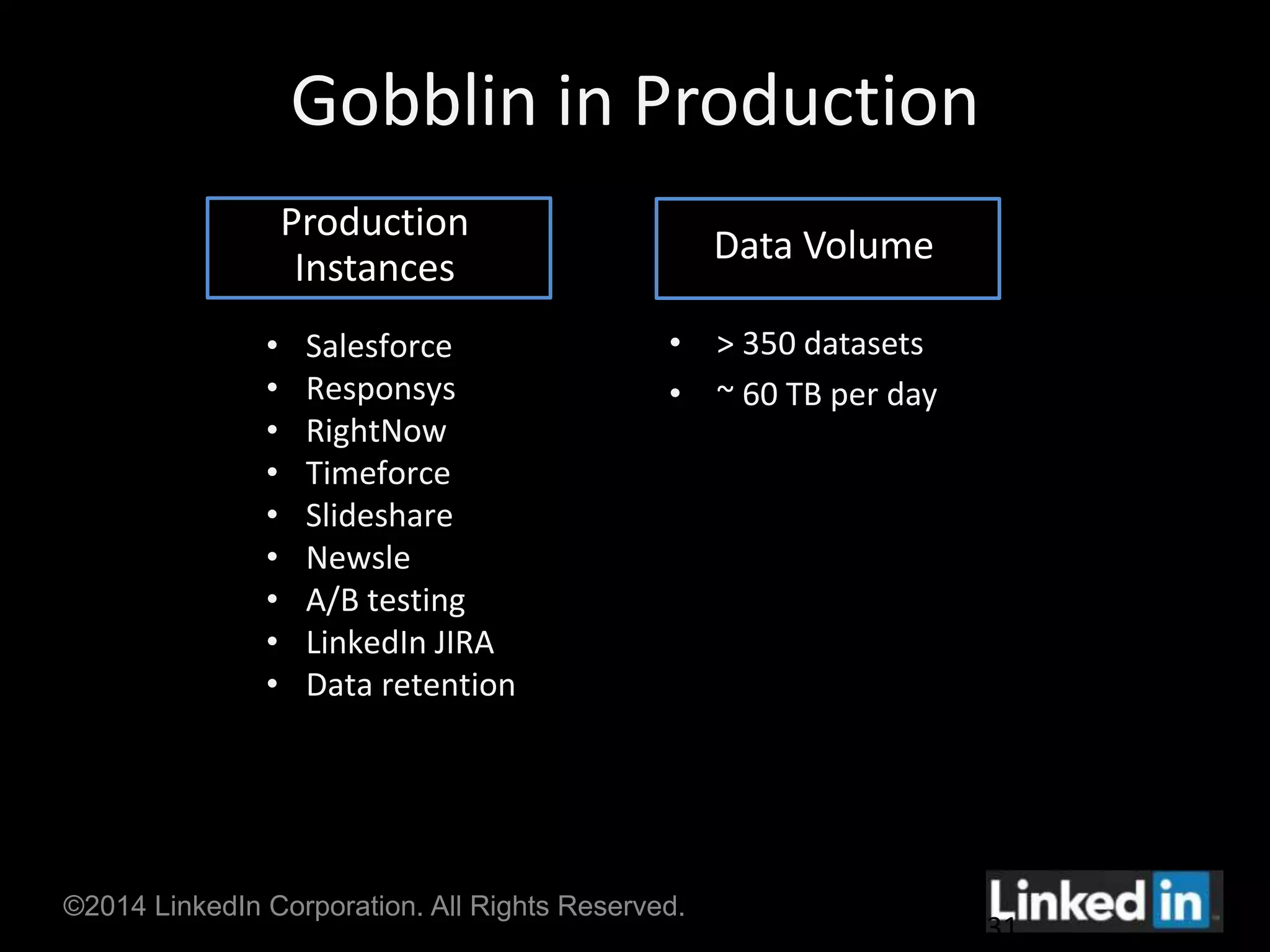

Gobblin is a unified data ingestion framework, developed at LinkedIn, for ingesting large volumes of data from diverse sources into Hadoop. It provides a scalable, fault-tolerant workflow that extracts data, applies transformations, runs quality checks, and writes the output. Gobblin addresses the challenge of operating many heterogeneous ingestion pipelines by standardizing common ingestion tasks and metadata handling behind pluggable components such as sources, extractors, converters, quality checkers, writers, and publishers.
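The extract, transform, check, and write flow can be illustrated with a minimal Python sketch. This is not Gobblin's API: Gobblin itself is written in Java and defines these roles as interfaces (for example `Extractor`, `Converter`, and `DataWriter`), and its converters may emit several records per input. The `Pipeline` class and its field names below are hypothetical and only show how pluggable stages compose. Each converter here maps one record to one record.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterable, List

Record = Dict[str, object]


@dataclass
class Pipeline:
    """Hypothetical extract -> convert -> quality-check -> write chain.

    Each stage is a plain callable, so stages can be swapped independently.
    This mirrors the idea of pluggable components, not Gobblin's real classes.
    """

    extractor: Callable[[], Iterable[Record]]
    converters: List[Callable[[Record], Record]] = field(default_factory=list)
    quality_checks: List[Callable[[Record], bool]] = field(default_factory=list)
    writer: Callable[[Record], None] = print

    def run(self) -> Dict[str, int]:
        stats = {"extracted": 0, "written": 0, "rejected": 0}
        for record in self.extractor():
            stats["extracted"] += 1
            # Apply each converter in order (one record in, one record out).
            for convert in self.converters:
                record = convert(record)
            # A record is written only if every quality check passes.
            if all(check(record) for check in self.quality_checks):
                self.writer(record)
                stats["written"] += 1
            else:
                stats["rejected"] += 1
        return stats


# Usage: trim names, reject records without an id, collect output in a list.
sink: List[Record] = []
pipeline = Pipeline(
    extractor=lambda: [{"id": 1, "name": " Ada "}, {"id": None, "name": "Bob"}],
    converters=[lambda r: {**r, "name": str(r["name"]).strip()}],
    quality_checks=[lambda r: r["id"] is not None],
    writer=sink.append,
)
stats = pipeline.run()
print(stats)  # {'extracted': 2, 'written': 1, 'rejected': 1}
```

Because each stage is just a callable, a different source or sink can be plugged in without touching the other stages. That separation is the property the paragraph attributes to Gobblin's component model.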