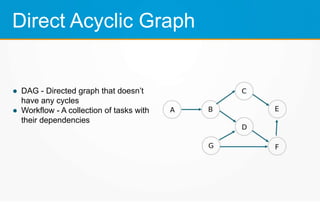

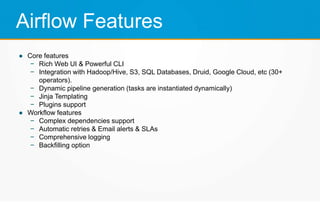

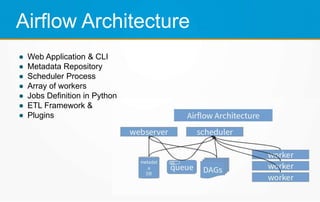

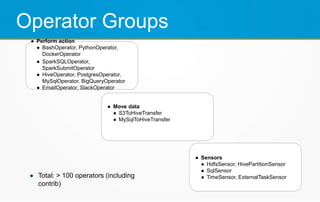

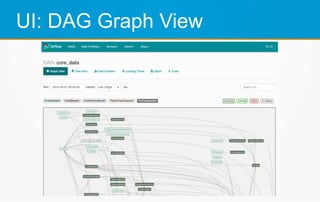

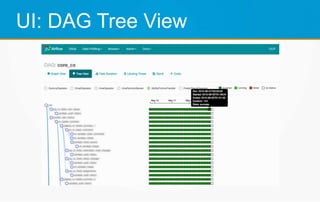

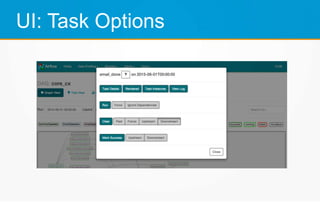

Apache Airflow is an open-source workflow management platform originally developed at Airbnb and now a project of the Apache Software Foundation. It lets users define and manage data pipelines as directed acyclic graphs (DAGs) of tasks. Tasks are built from operators, which perform actions or move data between systems, and sensors, which monitor external systems. Airflow provides a rich web UI, a CLI, and integrations with databases, Hadoop, AWS, and other systems. It is scalable and supports dynamic task generation, templating, alerting, retries, and distributed execution across clusters.
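To make the idea of a pipeline as a DAG of tasks with retries concrete, here is a minimal sketch in plain Python. It deliberately uses only the standard library (`graphlib`, Python 3.9+) rather than Airflow's own API, so it illustrates the concept and is not how Airflow is implemented. The names `run_pipeline`, `extract`, `transform`, and `load` are hypothetical and chosen only for illustration.

```python
from graphlib import TopologicalSorter


def run_pipeline(tasks, deps, retries=1):
    """Run callables in dependency order, retrying each failed task.

    tasks:   task name -> zero-argument callable
    deps:    task name -> set of upstream task names that must finish first
    retries: extra attempts allowed per task after its first failure
    """
    # static_order() raises graphlib.CycleError if deps contains a cycle,
    # which enforces the "acyclic" part of a DAG.
    order = list(TopologicalSorter(deps).static_order())
    attempts = {}
    for name in order:
        for attempt in range(1, retries + 2):
            attempts[name] = attempt
            try:
                tasks[name]()
                break  # task succeeded, move on to the next one
            except Exception:
                if attempt == retries + 1:
                    raise  # retries exhausted, fail the whole pipeline
    return order, attempts


# Hypothetical three-step pipeline; transform fails once to exercise retries.
log = []
flaky_calls = {"count": 0}


def extract():
    log.append("extract")


def transform():
    flaky_calls["count"] += 1
    if flaky_calls["count"] == 1:
        raise RuntimeError("transient failure")
    log.append("transform")


def load():
    log.append("load")


tasks = {"extract": extract, "transform": transform, "load": load}
deps = {"extract": set(), "transform": {"extract"}, "load": {"transform"}}

order, attempts = run_pipeline(tasks, deps, retries=2)
print(order)
print(attempts)
```

In Airflow itself, tasks are declared with operators and sensors inside a DAG definition, and dependencies are typically expressed with the `>>` operator between tasks. Scheduling, retries, and distributed execution are then handled by the platform rather than by a loop like the one above.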