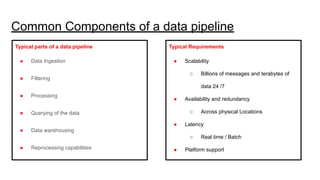

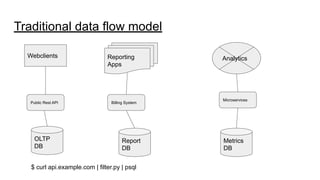

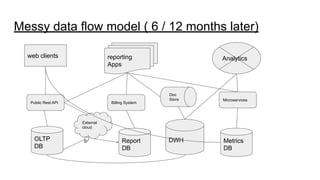

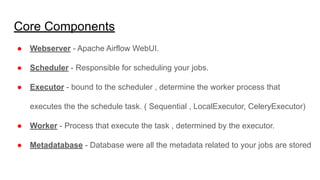

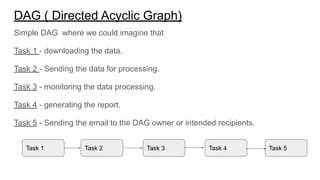

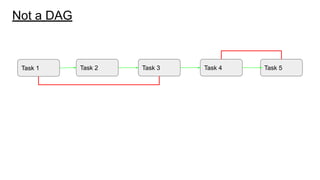

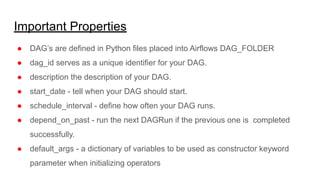

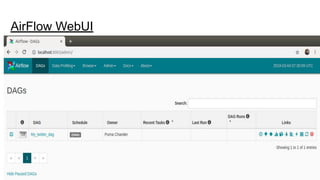

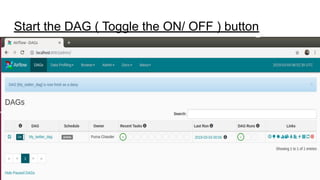

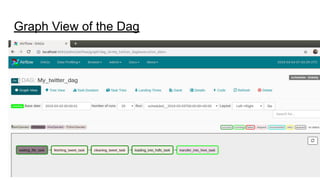

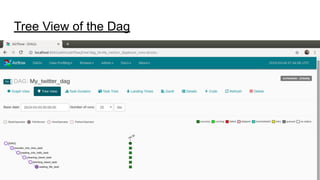

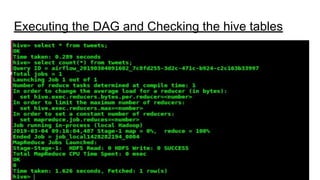

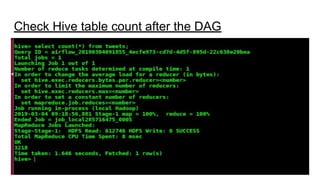

This document gives an overview of building data pipelines with Apache Airflow. It explains what a data pipeline is, covers common components such as data ingestion and processing, and outlines the problems with traditional data flows. It then introduces Apache Airflow and its features, including fault tolerance and pipelines defined in Python code. It explains Airflow's core components (the web server, scheduler, executor, and worker processes) and defines the key concepts of DAGs (directed acyclic graphs), operators, tasks, and workflows. Finally, it demonstrates Airflow with an example DAG that extracts and cleans tweets.
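
To make the DAG, operator, and task concepts concrete, here is a minimal sketch of what a two-task tweet pipeline might look like. It is not the code from the slides: the sample tweets, the cleaning rules, the names `tweets_pipeline`, `extract_tweets`, `clean_tweet`, `clean_tweets`, and `build_dag` are all hypothetical, and the DAG definition assumes Airflow 2.4 or later (where `DAG` accepts `schedule` and `PythonOperator` lives in `airflow.operators.python`).

```python
import re
from datetime import datetime

# Hypothetical sample data standing in for a real tweet source.
SAMPLE_TWEETS = [
    "Loving #Airflow!   https://example.com/a",
    "  @bob pipelines are   fun  ",
]


def extract_tweets():
    """Extract step: return raw tweet texts (hard-coded for illustration)."""
    return list(SAMPLE_TWEETS)


def clean_tweet(text):
    """Cleaning rule (an assumption): drop URLs and @mentions, collapse whitespace."""
    text = re.sub(r"https?://\S+", "", text)
    text = re.sub(r"@\w+", "", text)
    return re.sub(r"\s+", " ", text).strip()


def clean_tweets(ti):
    """Clean step: pull the extract task's return value from XCom and clean it."""
    raw = ti.xcom_pull(task_ids="extract_tweets")
    return [clean_tweet(t) for t in raw]


def build_dag():
    """Wire the two callables into a DAG (assumes Airflow 2.4+ is installed)."""
    # Imported lazily so the helper functions above can run without Airflow.
    from airflow import DAG
    from airflow.operators.python import PythonOperator

    with DAG(
        dag_id="tweets_pipeline",
        start_date=datetime(2024, 1, 1),
        schedule="@daily",
        catchup=False,
    ) as dag:
        extract = PythonOperator(
            task_id="extract_tweets", python_callable=extract_tweets
        )
        clean = PythonOperator(
            task_id="clean_tweets", python_callable=clean_tweets
        )
        # The >> operator declares that clean runs only after extract succeeds.
        extract >> clean
    return dag
```

Each `PythonOperator` instance becomes one task, and the `>>` dependency is what makes the pair a graph. Airflow discovers DAG objects at module level, so a real DAG file built this way would also assign the result, for example `dag = build_dag()`.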