The document discusses algorithm analysis and determining the efficiency of algorithms. It introduces key concepts such as:

- Algorithms must be correct and efficient to solve problems.

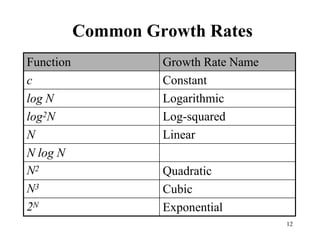

- The time and space complexity of algorithms are analyzed to compare their efficiency. Common growth rates include constant, logarithmic, linear, quadratic, and exponential time.

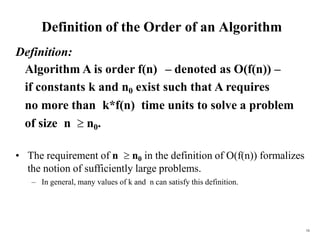

- The asymptotic worst-case time complexity of an algorithm (its "order") is expressed using Big O notation, such as O(n) for linear time. Higher-order functions grow faster, so an algorithm of higher order takes longer on large inputs.
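
To make the growth rates listed above concrete, here is a minimal sketch in Python that counts basic steps for one toy routine per growth rate. The function names and the choice of "step" are assumptions made for illustration; they do not come from the summarized document.

```python
# Illustrative step counters, one per common growth rate.
# All names here are hypothetical; each function returns how many
# basic steps a toy algorithm of that order performs on input size n.

def constant_steps(n):
    # O(1): the work does not depend on n.
    return 1

def logarithmic_steps(n):
    # O(log n): halve the problem until one element remains.
    steps = 0
    while n > 1:
        n //= 2
        steps += 1
    return steps

def linear_steps(n):
    # O(n): touch each element once.
    steps = 0
    for _ in range(n):
        steps += 1
    return steps

def quadratic_steps(n):
    # O(n^2): compare every element with every element.
    steps = 0
    for _ in range(n):
        for _ in range(n):
            steps += 1
    return steps

def exponential_steps(n):
    # O(2^n): two recursive calls per level, as when enumerating
    # every subset of an n-element set.
    if n == 0:
        return 1
    return exponential_steps(n - 1) + exponential_steps(n - 1)

if __name__ == "__main__":
    for n in (4, 8, 16):
        print(n, constant_steps(n), logarithmic_steps(n),
              linear_steps(n), quadratic_steps(n), exponential_steps(n))
```

Doubling n leaves the constant count unchanged, adds one step to the logarithmic count, doubles the linear count, quadruples the quadratic count, and squares the exponential count. This is why the order of an algorithm matters more than constant factors once inputs get large.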