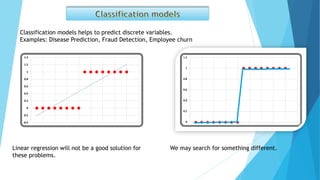

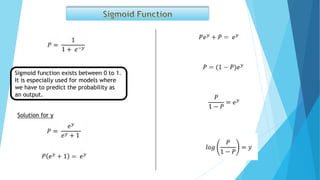

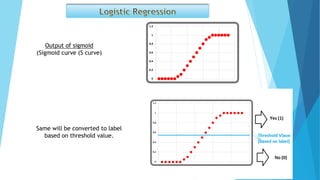

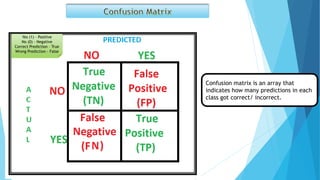

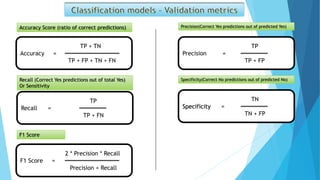

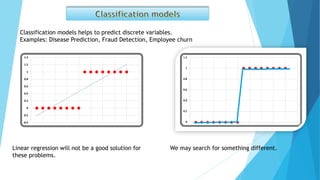

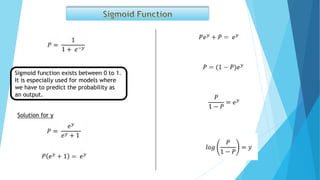

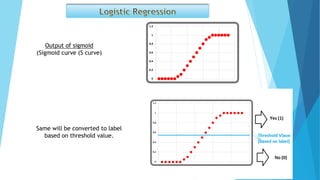

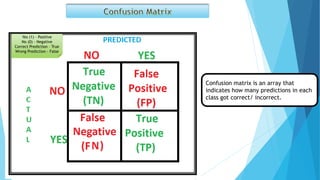

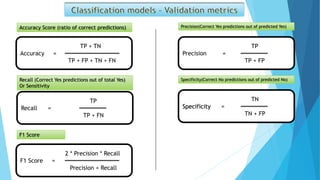

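Classification models predict discrete outcomes, for example whether a patient has a disease, whether a transaction is fraudulent, or whether an employee will leave. The sigmoid function maps any real-valued input to a value between 0 and 1, so its output can be read as a probability; this makes it a natural fit for models that predict probabilities. A confusion matrix counts correct and incorrect predictions for each class, and those counts are used to calculate metrics such as accuracy, precision, recall, and F1 score.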
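The summary can be made concrete with a minimal Python sketch. It is an illustration under stated assumptions, not code taken from the slides: the function names (`sigmoid`, `confusion_counts`, `metrics`), the 0.5 decision threshold, and the sample scores and labels are all made up for this example.

```python
import math


def sigmoid(z: float) -> float:
    """Map any real number to a value strictly between 0 and 1."""
    # Branch on the sign of z so math.exp never receives a large positive argument.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def confusion_counts(y_true, y_pred):
    """Return (tp, fp, fn, tn) for binary labels, where 1 is the positive class."""
    tp = fp = fn = tn = 0
    for actual, predicted in zip(y_true, y_pred):
        if actual == 1 and predicted == 1:
            tp += 1
        elif actual == 0 and predicted == 1:
            fp += 1
        elif actual == 1 and predicted == 0:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def metrics(tp, fp, fn, tn):
    """Compute accuracy, precision, recall, and F1 from confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical raw model scores and true labels, for illustration only.
scores = [2.0, -1.0, 0.5, -3.0, 1.5, -0.2]
y_true = [1, 0, 0, 0, 1, 1]

# Assumed decision rule: predict the positive class when the probability is at least 0.5.
y_pred = [1 if sigmoid(z) >= 0.5 else 0 for z in scores]

tp, fp, fn, tn = confusion_counts(y_true, y_pred)
print(tp, fp, fn, tn)  # 2 1 1 2
print(metrics(tp, fp, fn, tn))
```

With this sample data there are two true positives, one false positive, one false negative, and two true negatives, so accuracy, precision, recall, and F1 all come out to 2/3. On imbalanced data, such as fraud detection, accuracy typically diverges from precision and recall, which is why the confusion matrix is reported alongside it.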