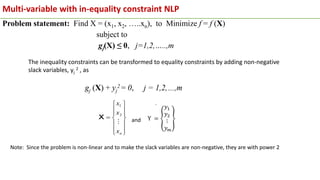

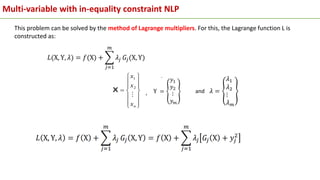

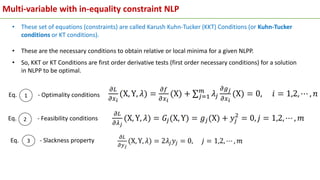

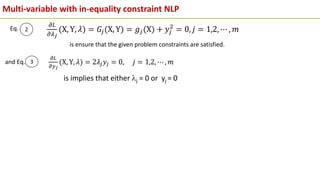

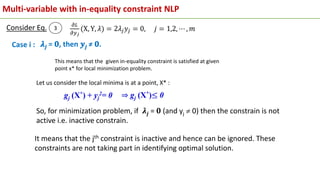

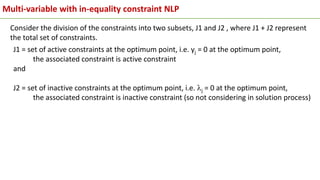

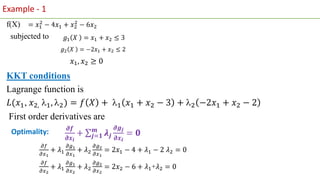

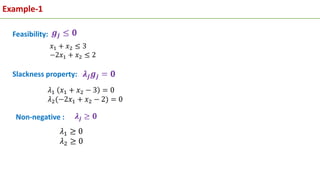

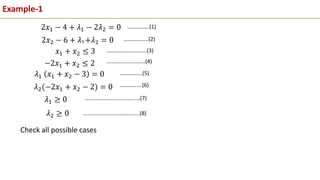

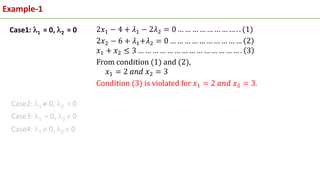

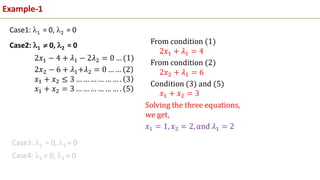

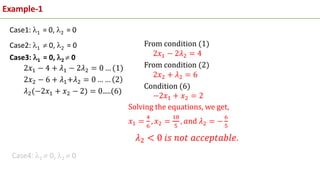

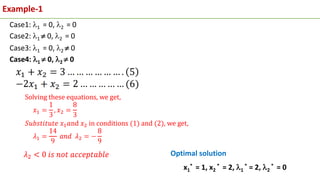

The document discusses multi-variable optimization with inequality constraints, focusing on the non-linear programming (NLP) problem of minimizing a function subject to such constraints. It extends the method of Lagrange multipliers to this setting through the Karush-Kuhn-Tucker (KKT) conditions, which any local minimum must satisfy (under a suitable constraint qualification). Through several examples, it also distinguishes active constraints, which hold with equality at the optimum, from inactive constraints, whose multipliers are zero.
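The KKT conditions can be checked numerically at a candidate point. The sketch below assumes the standard form: minimize f(x) subject to g_j(x) ≤ 0, with stationarity written as ∇f(x) + Σ μ_j ∇g_j(x) = 0. The function name `kkt_check`, the tolerance, and the example problem are illustrative assumptions and are not taken from the slides. Because the KKT conditions are only necessary, a passing check marks a KKT point, not a proven minimum.

```python
from typing import Callable, List, Sequence

Vector = List[float]


def kkt_check(
    x: Sequence[float],
    mu: Sequence[float],
    grad_f: Callable[[Sequence[float]], Vector],
    gs: Sequence[Callable[[Sequence[float]], float]],
    grad_gs: Sequence[Callable[[Sequence[float]], Vector]],
    tol: float = 1e-8,
) -> dict:
    """Check the KKT conditions for min f(x) s.t. g_j(x) <= 0 at the pair (x, mu).

    Hypothetical helper for illustration only.
    """
    n = len(x)
    g_vals = [g(x) for g in gs]

    # Stationarity: grad f(x) + sum_j mu_j * grad g_j(x) = 0
    residual = list(grad_f(x))
    for mu_j, grad_g in zip(mu, grad_gs):
        gg = grad_g(x)
        for i in range(n):
            residual[i] += mu_j * gg[i]
    stationarity = all(abs(r) <= tol for r in residual)

    # Primal feasibility: every constraint g_j(x) <= 0
    primal = all(gv <= tol for gv in g_vals)
    # Dual feasibility: every multiplier mu_j >= 0
    dual = all(m >= -tol for m in mu)
    # Complementary slackness: mu_j * g_j(x) = 0, so inactive constraints get mu_j = 0
    slackness = all(abs(m * gv) <= tol for m, gv in zip(mu, g_vals))
    # Active constraints: g_j(x) = 0 within tolerance
    active = [j for j, gv in enumerate(g_vals) if abs(gv) <= tol]

    return {
        "stationarity": stationarity,
        "primal_feasibility": primal,
        "dual_feasibility": dual,
        "complementary_slackness": slackness,
        "active": active,
    }


# Hypothetical example: minimize (x1 - 2)^2 + (x2 - 1)^2
# subject to g1(x) = x1 + x2 - 2 <= 0 and g2(x) = -x1 <= 0.
def grad_f(x):
    return [2 * (x[0] - 2), 2 * (x[1] - 1)]


def g1(x):
    return x[0] + x[1] - 2


def g2(x):
    return -x[0]


gs = [g1, g2]
grad_gs = [lambda x: [1.0, 1.0], lambda x: [-1.0, 0.0]]

result = kkt_check([1.5, 0.5], [1.0, 0.0], grad_f, gs, grad_gs)
print(result)
```

At x = (1.5, 0.5) with μ = (1, 0), all four conditions hold: g1 is active with a positive multiplier, while g2 is inactive (g2 = -1.5) and its multiplier is zero, as complementary slackness requires. By contrast, the unconstrained minimizer (2, 1) satisfies stationarity with μ = (0, 0) but violates g1, so it fails primal feasibility.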