This document summarizes key points about equality-constrained minimization problems and Newton's method for solving them. It discusses:

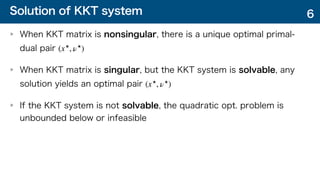

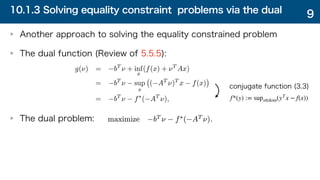

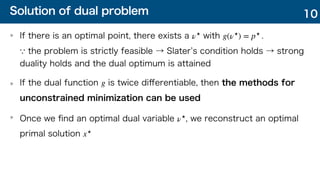

1) Equality-constrained minimization problems and their equivalent forms, obtained by eliminating the equality constraints or by solving the dual problem.

2) Newton's method extended to handle equality constraints: the Newton step is defined by the linearized optimality (KKT) conditions and, from a feasible point, it is a descent direction that preserves feasibility ($A\Delta x_{\text{nt}} = 0$).

3) An infeasible start Newton method that computes steps reducing the norm of the primal-dual residual. Once a full step ($t = 1$) is taken, all later iterates are primal feasible, so the iterates become feasible within a finite number of steps.
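Items 2) and 3) can be illustrated together, because the infeasible start method reduces to the feasible-start Newton method once $Ax = b$ holds. The sketch below is a minimal Python/NumPy version, assuming a twice-differentiable convex $f$ given by hypothetical `grad` and `hess` callables and a full-row-rank $A$. Each iteration solves the primal-dual KKT system $\begin{bmatrix} \nabla^2 f(x) & A^T \\ A & 0 \end{bmatrix} \begin{bmatrix} \Delta x \\ \Delta\nu \end{bmatrix} = -\begin{bmatrix} \nabla f(x) + A^T\nu \\ Ax - b \end{bmatrix}$ and backtracks on $\lVert r(x,\nu) \rVert_2$. The helper names, the test objective $\sum_i e^{x_i} - c^T x$, and the parameter values (`alpha`, `beta`, `tol`) are illustrative assumptions, not taken from the slides.

```python
import numpy as np


def kkt_residual(grad, A, b, x, nu):
    """Stacked primal-dual residual r(x, nu) = (grad f(x) + A^T nu, A x - b)."""
    return np.concatenate([grad(x) + A.T @ nu, A @ x - b])


def infeasible_start_newton(grad, hess, A, b, x0, nu0,
                            tol=1e-10, alpha=0.01, beta=0.5, max_iter=50):
    """Infeasible start Newton method with backtracking on ||r||_2 (a sketch)."""
    x = np.asarray(x0, dtype=float)
    nu = np.asarray(nu0, dtype=float)
    p, n = A.shape
    for _ in range(max_iter):
        r = kkt_residual(grad, A, b, x, nu)
        r_norm = np.linalg.norm(r)
        if np.allclose(A @ x, b) and r_norm <= tol:
            break
        # Primal-dual Newton step: [[H, A^T], [A, 0]] [dx; dnu] = -r
        K = np.block([[hess(x), A.T], [A, np.zeros((p, p))]])
        step = np.linalg.solve(K, -r)
        dx, dnu = step[:n], step[n:]
        # Backtracking line search on the residual norm, trying t = 1 first;
        # the t > 1e-12 guard is only a numerical safeguard.
        t = 1.0
        while (np.linalg.norm(kkt_residual(grad, A, b, x + t * dx, nu + t * dnu))
               > (1 - alpha * t) * r_norm and t > 1e-12):
            t *= beta
        x, nu = x + t * dx, nu + t * dnu
    return x, nu


# Hypothetical test problem: minimize sum(exp(x)) - c^T x subject to A x = b.
A = np.array([[1.0, 1.0, 1.0, 1.0],
              [1.0, -1.0, 0.0, 2.0]])
b = np.array([1.0, 0.0])
c = np.array([0.5, -0.2, 0.1, 0.3])
grad = lambda x: np.exp(x) - c
hess = lambda x: np.diag(np.exp(x))

# x0 = 0 is infeasible (A @ 0 != b), so the method starts off the constraint set.
x_star, nu_star = infeasible_start_newton(grad, hess, A, b, np.zeros(4), np.zeros(2))
print("primal residual:", np.linalg.norm(A @ x_star - b))
print("dual residual:  ", np.linalg.norm(grad(x_star) + A.T @ nu_star))
```

Both printed residuals should be close to zero. Solving the full KKT system with `np.linalg.solve` keeps the sketch short; for large sparse problems a structured factorization of the KKT matrix would usually be preferred.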

![10.2.3 Newton's method and elimination (1/3)](https://image.slidesharecdn.com/boydchap10-210325051007/85/Boyd-chap10-20-320.jpg)

Newton's method for the equality-constrained problem coincides with Newton's method for the reduced problem

$$
\text{minimize} \quad \hat f(z) = f(Fz + \hat x),
$$

where $\hat x$ is any solution of $Ax = b$ and the range of $F$ is the null space of $A$. The Hessian of the reduced problem is

$$
\nabla^2 \hat f(z) = F^T \nabla^2 f(Fz + \hat x) F.
$$

The two conditions for the Newton step to be defined are equivalent: the Newton step for the reduced problem is defined (i.e., the Hessian of the reduced problem is invertible) if and only if the Newton step for the equality-constrained problem is defined (i.e., the KKT matrix

$$
\begin{bmatrix} \nabla^2 f(x) & A^T \\ A & 0 \end{bmatrix}
$$

is invertible).
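The equivalence with elimination can be checked numerically. In the sketch below (Python/NumPy; the random data and helper names are hypothetical), `H` and `g` stand in for $\nabla^2 f(x)$ and $\nabla f(x)$ at a feasible point $x = Fz + \hat x$, and $F$ is taken as an orthonormal null-space basis of $A$ from the SVD, assuming $A$ has full row rank. The step from the KKT system should equal $F\Delta z$, where $\Delta z$ is the Newton step for $\hat f$; the reason is that $F^T A^T = (AF)^T = 0$, so multiplying the first block row of the KKT system by $F^T$ gives exactly the reduced Newton equation $F^T H F \, \Delta z = -F^T g$.

```python
import numpy as np


def kkt_newton_step(H, g, A):
    """Feasible-start Newton step from [[H, A^T], [A, 0]] [dx; w] = [-g; 0]."""
    n, p = H.shape[0], A.shape[0]
    K = np.block([[H, A.T], [A, np.zeros((p, p))]])
    sol = np.linalg.solve(K, np.concatenate([-g, np.zeros(p)]))
    return sol[:n]


def null_space_basis(A):
    """Orthonormal F with range(F) = null(A); assumes A has full row rank."""
    p = A.shape[0]
    _, _, Vt = np.linalg.svd(A)  # full_matrices=True by default, so Vt is n x n
    return Vt[p:].T


def reduced_newton_step(H, g, F):
    """Newton step for f_hat(z) = f(F z + x_hat), mapped back to x-space as F dz."""
    H_red = F.T @ H @ F  # Hessian of the reduced problem
    g_red = F.T @ g      # gradient of the reduced problem
    dz = np.linalg.solve(H_red, -g_red)
    return F @ dz


# Random stand-ins for the Hessian and gradient of f at a feasible point.
rng = np.random.default_rng(0)
n, p = 5, 2
A = rng.standard_normal((p, n))
M = rng.standard_normal((n, n))
H = M @ M.T + np.eye(n)  # positive definite, so both Newton steps are defined
g = rng.standard_normal(n)

dx_kkt = kkt_newton_step(H, g, A)
dx_red = reduced_newton_step(H, g, null_space_basis(A))
print(np.allclose(dx_kkt, dx_red), np.allclose(A @ dx_kkt, 0.0))
```

Both checks should print `True`: the two steps agree, and the KKT step stays in the null space of $A$, so it preserves feasibility.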

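![Full step feasibility property](https://image.slidesharecdn.com/boydchap10-210325051007/85/Boyd-chap10-32-320.jpg)

The analysis tracks the residual under a step length $t \in [0,1]$. The infeasible start Newton step satisfies $A\Delta x_{\text{nt}} = -(Ax - b)$, so the primal residual after the next step is

$$
r_{\text{pri}}^{+} = A(x + t\Delta x_{\text{nt}}) - b = (1 - t)\, r_{\text{pri}}.
$$

After $k$ steps, the primal residual is

$$
r_{\text{pri}}^{(k)} = \left( \prod_{i=0}^{k-1} \bigl(1 - t^{(i)}\bigr) \right) r_{\text{pri}}^{(0)}.
$$

This implies that the primal residual at each step points in the direction of $r_{\text{pri}}^{(0)}$ and is scaled down by the factor $1 - t^{(i)}$ at each step. Once a full step ($t = 1$) is taken, all future iterates are primal feasible.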
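The residual recursion only uses the fact that each step satisfies $A\Delta x = -(Ax - b)$, so it can be checked without any particular objective. The Python/NumPy sketch below is hypothetical: it substitutes the minimum-norm solution of that linear system (from `np.linalg.lstsq`) for the actual Newton step, uses random data, and picks arbitrary step sizes, one of which is a full step. It then compares each residual with $\prod_{i<k}(1 - t^{(i)})\, r_{\text{pri}}^{(0)}$.

```python
import numpy as np


def primal_residual_history(A, b, x0, step_sizes):
    """Track r_pri = A x - b under x := x + t * dx, where each dx solves A dx = -(A x - b).

    Stand-in for the Newton step: the minimum-norm solution of A dx = -r_pri.
    The primal residual depends only on that linear constraint, not on f.
    """
    x = np.asarray(x0, dtype=float)
    history = [A @ x - b]
    for t in step_sizes:
        r = A @ x - b
        dx = np.linalg.lstsq(A, -r, rcond=None)[0]  # exact solution since A has full row rank
        x = x + t * dx
        history.append(A @ x - b)
    return history


rng = np.random.default_rng(1)
A = rng.standard_normal((3, 6))
b = rng.standard_normal(3)
step_sizes = [0.5, 0.25, 0.8, 1.0, 0.3]  # the fourth step is a full step (t = 1)

history = primal_residual_history(A, b, np.zeros(6), step_sizes)
r0 = history[0]
for k, r_k in enumerate(history):
    scale = np.prod([1.0 - t for t in step_sizes[:k]])  # product of (1 - t_i) for i < k
    print(k, np.allclose(r_k, scale * r0), np.linalg.norm(r_k))
```

Every row should report `True`, and the residual norm drops to round-off level from the full step onward, matching the claim that all later iterates stay primal feasible.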