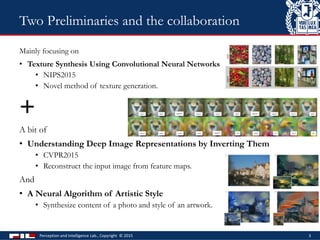

This document summarizes three papers on neural representations that were presented at a seminar:

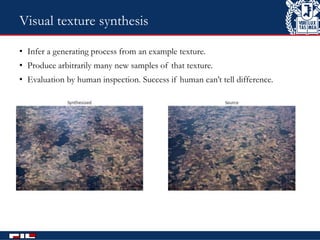

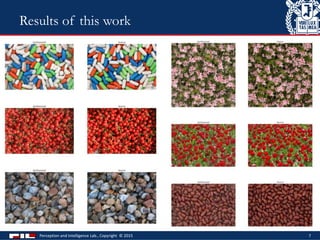

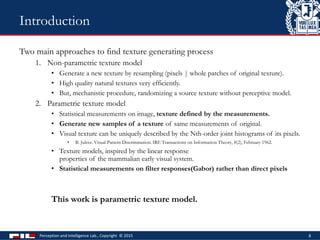

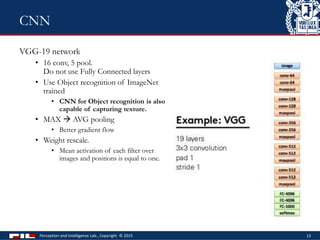

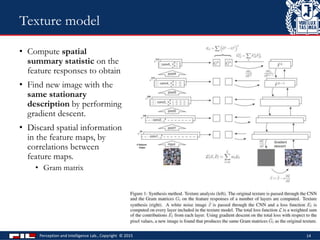

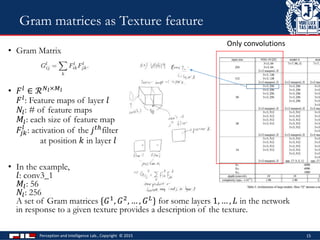

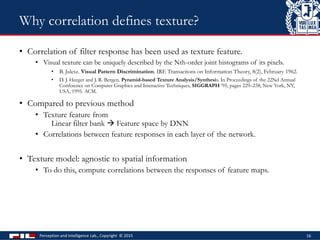

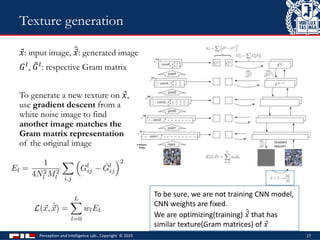

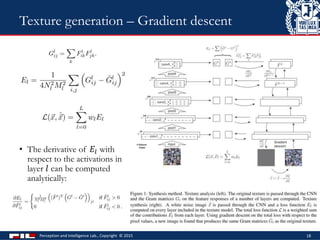

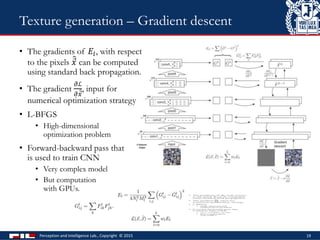

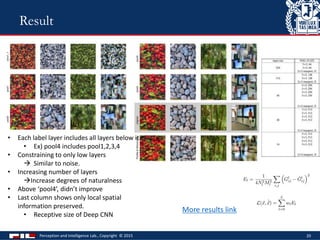

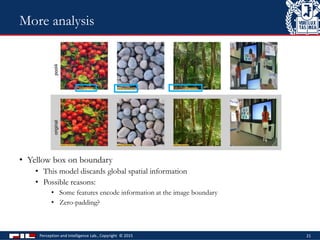

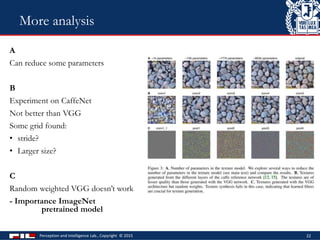

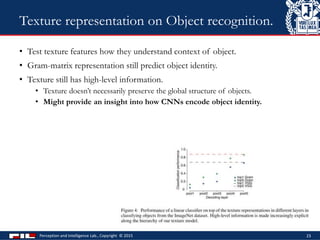

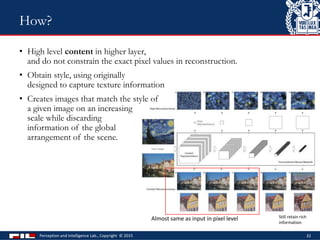

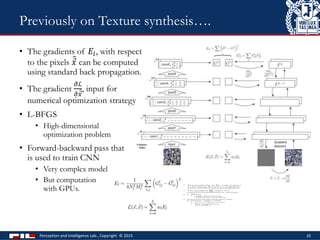

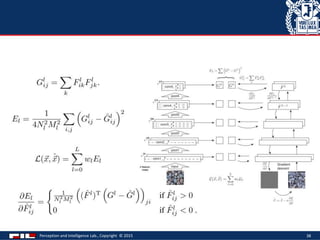

1. Texture synthesis using convolutional neural networks (CNNs): new texture samples are generated to match a source texture by matching the Gram matrices of CNN feature maps.

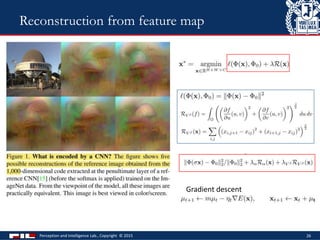

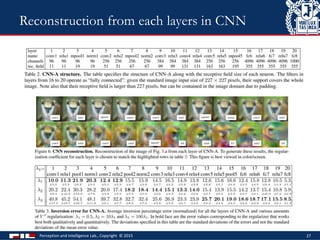

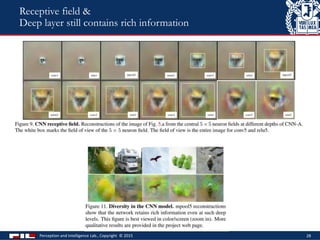

2. Reconstructing images from the feature maps of CNNs trained on object recognition, in order to understand what those neural representations encode.

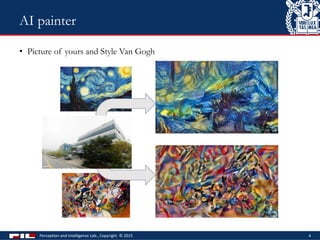

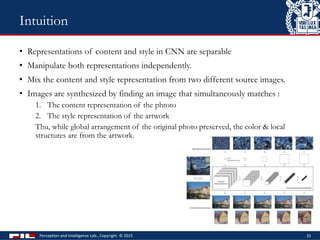

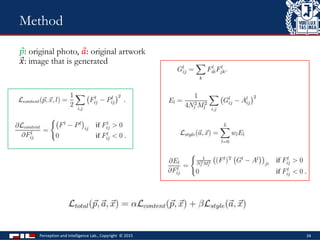

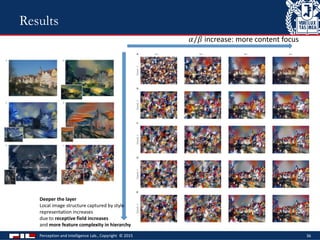

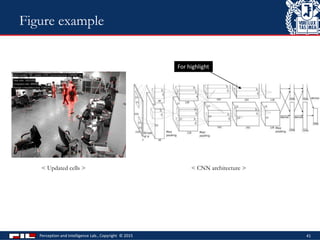

3. A neural algorithm of artistic style that combines the content of one image with the style of another, using CNN representations of content and style.
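
The Gram-matrix idea behind items 1 and 3 can be illustrated with a minimal NumPy sketch. It assumes a feature map is already available as an array of shape `(channels, height, width)`; no actual CNN is involved. The function names `gram_matrix`, `style_loss`, and `content_loss` are hypothetical, and the normalisation constants follow a commonly cited form that this summary does not state, so treat them as assumptions.

```python
import numpy as np


def gram_matrix(features):
    """Channel-by-channel correlations of one feature map.

    features: array of shape (channels, height, width).
    Returns an array of shape (channels, channels).
    """
    c, h, w = features.shape
    flat = features.reshape(c, h * w)  # one row per channel
    return flat @ flat.T               # sums over all spatial positions


def style_loss(feat_a, feat_b):
    """Squared difference between the Gram matrices of two feature maps.

    The 1 / (4 * C^2 * (H*W)^2) scaling is an assumed normalisation.
    """
    c, h, w = feat_a.shape
    diff = gram_matrix(feat_a) - gram_matrix(feat_b)
    return float(np.sum(diff ** 2) / (4.0 * c ** 2 * (h * w) ** 2))


def content_loss(feat_a, feat_b):
    """Squared difference between the raw feature maps (position-sensitive)."""
    return 0.5 * float(np.sum((feat_a - feat_b) ** 2))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    feats = rng.standard_normal((3, 4, 5))  # stand-in for CNN activations

    # Shuffle the spatial positions identically in every channel.
    perm = rng.permutation(4 * 5)
    shuffled = feats.reshape(3, -1)[:, perm].reshape(3, 4, 5)

    print("style loss:  ", style_loss(feats, shuffled))
    print("content loss:", content_loss(feats, shuffled))
```

Because the Gram matrix sums over all spatial positions, shuffling those positions leaves it unchanged up to rounding error, while the position-wise content loss grows. This is one way to see why Gram matrices suit texture and style, whereas raw feature maps suit content.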