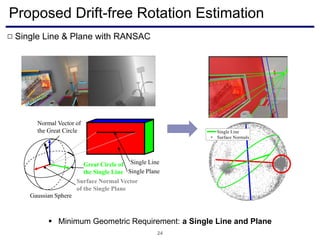

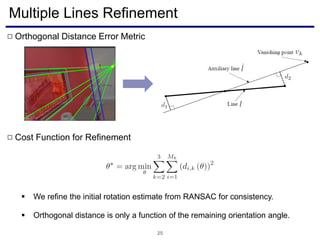

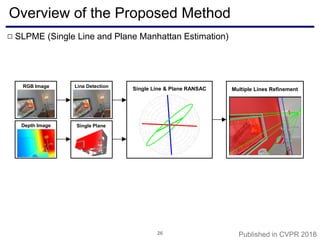

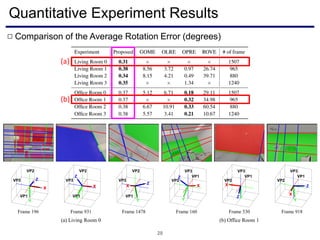

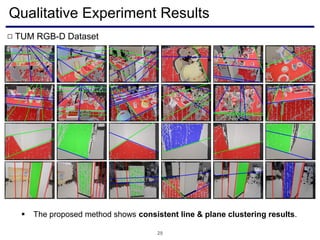

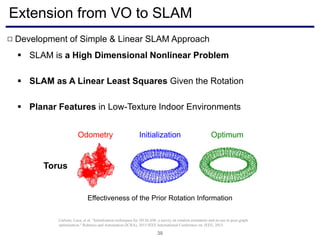

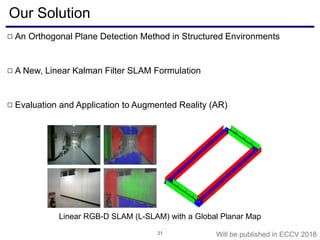

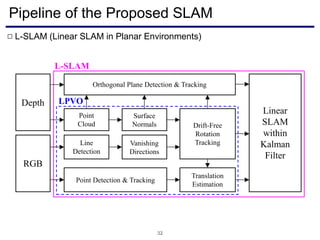

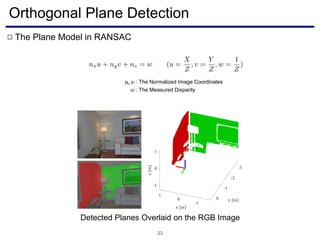

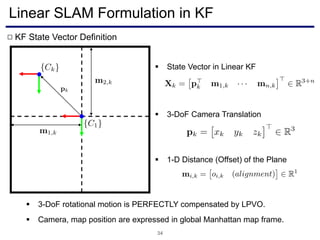

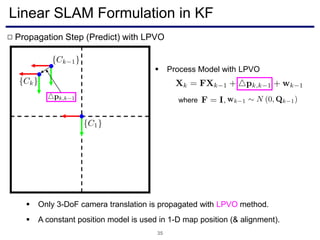

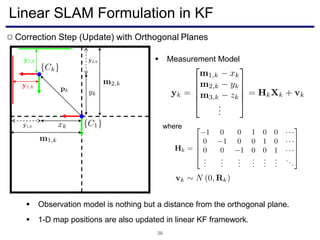

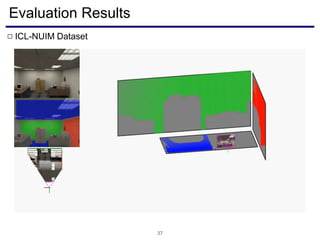

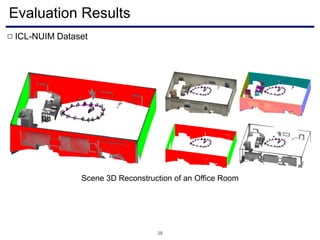

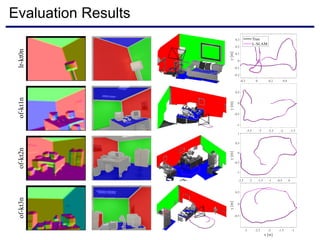

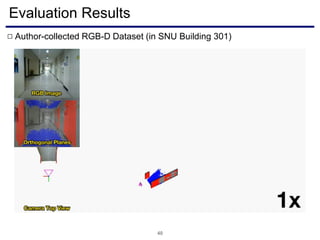

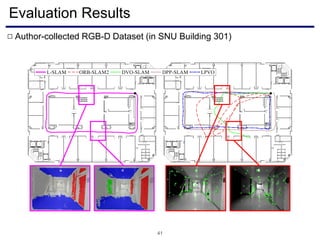

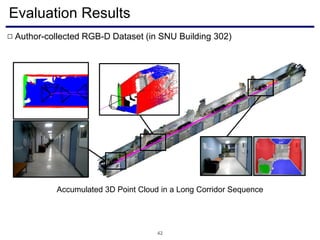

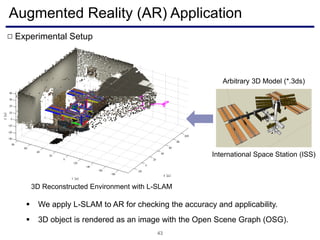

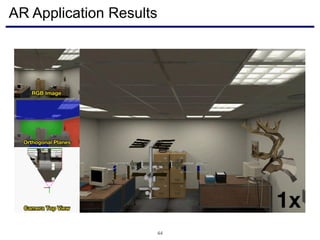

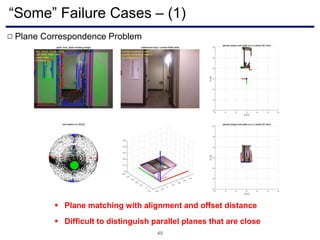

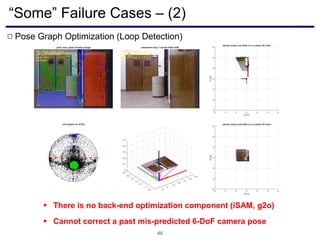

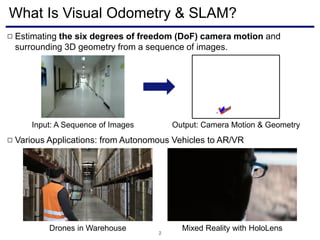

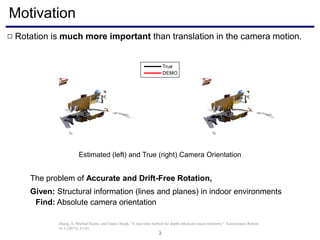

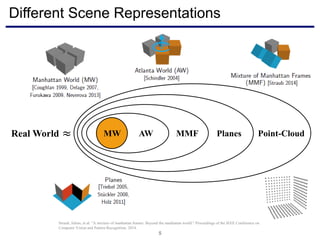

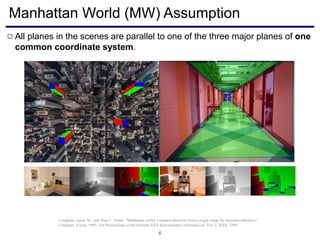

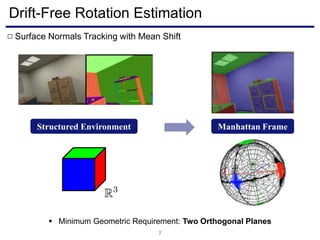

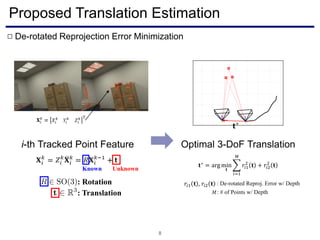

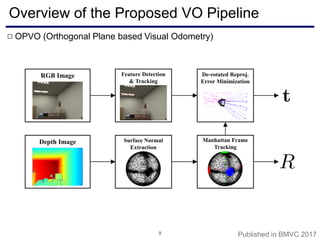

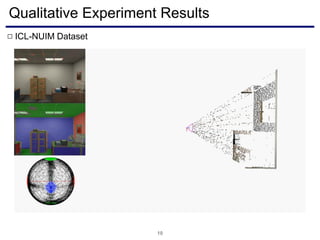

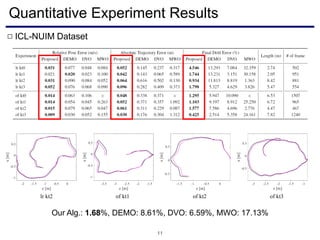

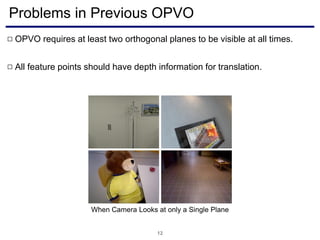

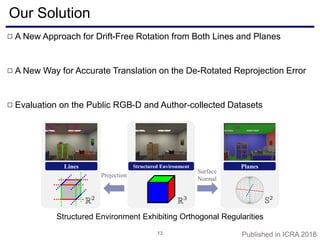

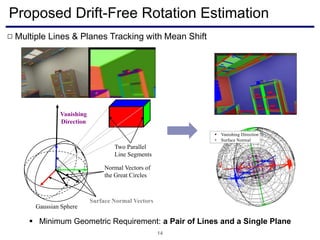

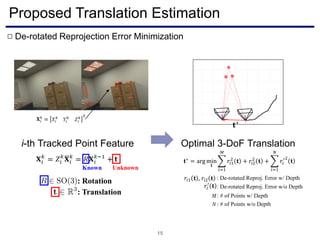

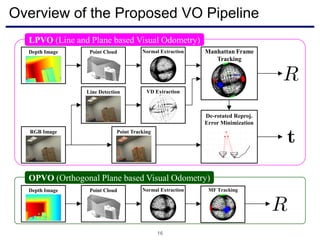

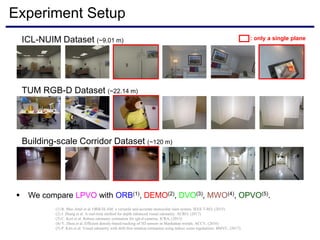

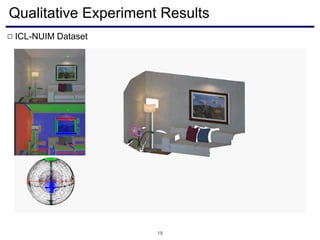

The document describes an approach to visual odometry (VO) and simultaneous localization and mapping (SLAM) that exploits the structural regularity of indoor environments, focusing on drift-free rotation estimation from lines and planes. It presents several algorithms and evaluates them on multiple datasets, reporting improved accuracy in camera orientation and translation. Key contributions include linear SLAM formulations and their application to augmented reality, addressing limitations of traditional VO and SLAM techniques.

![Slide 22: Quantitative Analysis with True Data. Plot of translation error [m] and rotation error [deg] against frame index. Annotations: rotation error causes failure; average rotation error is ~0.2 deg; on average, 5x more accurate; 15 Hz @ 10 FPS.](https://image.slidesharecdn.com/visualodometryslamutilizingindoorstructuredenvironments-181113050523/85/Visual-odometry-slam-utilizing-indoor-structured-environments-22-320.jpg)