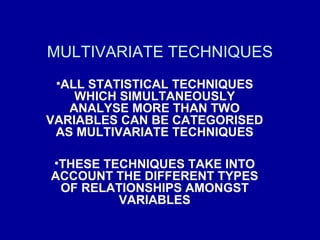

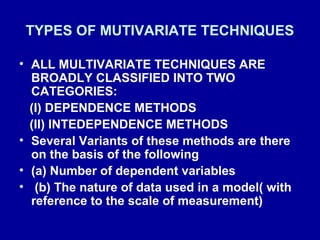

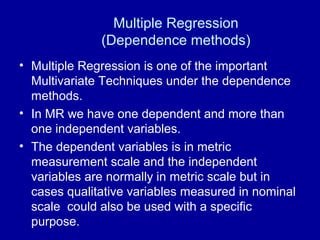

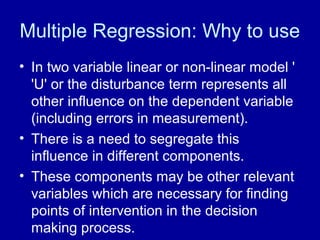

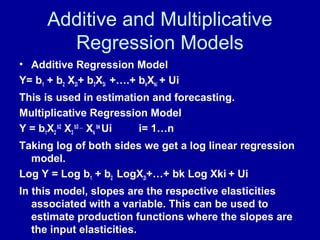

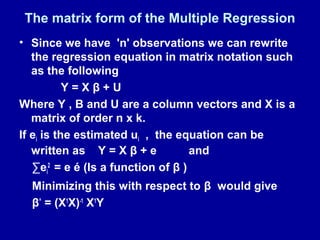

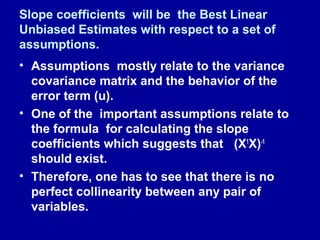

This document discusses multivariate techniques, specifically multiple regression. It defines multivariate techniques as those that analyze more than two variables simultaneously, accounting for relationships among variables. Multiple regression is described as a dependence method that uses one dependent variable and multiple independent variables. The document provides details on additive and multiplicative regression models, the matrix form of multiple regression equations, and assumptions of the technique. It also outlines how to interpret multiple regression output, including significance of slope coefficients and the adjusted R-squared statistic.

![Slide: adjusted R-squared](https://image.slidesharecdn.com/106856-170122204001/85/10685-6-1-multivar_mr_srm-bm-i-12-320.jpg)

- Consider the Venn diagram discussed earlier.
- If explanatory variables are added to the model one after another, $R^2$ is expected to increase; it never decreases.
- The adjusted $R^2$ corrects $R^2$ for the number of parameters in the model. Hence, it is a better estimate of the coefficient of determination.
- The following formulas are used to calculate $R^2$ and the adjusted $R^2$:

$$R^2 = 1 - \frac{RSS}{TSS} = 1 - \frac{\sum u_i^2}{\sum y_i^2}$$

$$\bar{R}^2 = 1 - \frac{\sum u_i^2 / (n-k)}{\sum y_i^2 / (n-1)}$$

where $u_i$ are the residuals, $y_i = Y_i - \bar{Y}$ are the deviations of the dependent variable from its mean, $n$ is the number of observations, and $k$ is the number of parameters including the intercept term. (See p. 217, 4th ed., DNG.)
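The two formulas can be checked numerically with a minimal sketch in pure Python, so that no statistics library has to be assumed. The helper names (`solve`, `ols_fit`, `sums_of_squares`, `r_squared`, `adjusted_r_squared`) and the data are hypothetical. OLS is fitted through the normal equations $(X'X)b = X'y$. The extra regressor `x2` is built to be orthogonal to the intercept, to `x1` and to the residuals, so adding it leaves RSS unchanged and isolates the effect of the extra parameter.

```python
# Hypothetical sketch: R^2 and adjusted R^2 for OLS fits, pure Python.

def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x

def ols_fit(X, y):
    """OLS coefficients from the normal equations (X'X) b = X'y."""
    k = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(k)]
    return solve(xtx, xty)

def sums_of_squares(X, y):
    """Return (RSS, TSS) for the OLS fit of y on the columns of X."""
    b = ols_fit(X, y)
    fitted = [sum(bj * xj for bj, xj in zip(b, row)) for row in X]
    rss = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))  # sum of u_i^2
    ybar = sum(y) / len(y)
    tss = sum((yi - ybar) ** 2 for yi in y)  # sum of y_i^2, y_i = Y_i - mean(Y)
    return rss, tss

def r_squared(X, y):
    rss, tss = sums_of_squares(X, y)
    return 1 - rss / tss

def adjusted_r_squared(X, y):
    n, k = len(X), len(X[0])  # k counts the intercept column
    rss, tss = sums_of_squares(X, y)
    return 1 - (rss / (n - k)) / (tss / (n - 1))

# Hypothetical data: y = 1 + 2*x1 + residuals; x2 is unrelated to y by construction.
x1 = [1, 2, 3, 4, 5, 6]
x2 = [5, -1, -4, -4, -1, 5]
y = [2.5, 5.7, 7.4, 8.6, 10.3, 13.5]

X_small = [[1.0, a] for a in x1]                  # intercept + x1 (k = 2)
X_big = [[1.0, a, b] for a, b in zip(x1, x2)]     # intercept + x1 + x2 (k = 3)

r2_small, r2_big = r_squared(X_small, y), r_squared(X_big, y)
adj_small, adj_big = adjusted_r_squared(X_small, y), adjusted_r_squared(X_big, y)
print(f"k=2: R2={r2_small:.4f}, adjusted R2={adj_small:.4f}")
print(f"k=3: R2={r2_big:.4f}, adjusted R2={adj_big:.4f}")
```

With these numbers $R^2$ stays at about 0.9749 for both models, while the adjusted $R^2$ falls from about 0.9687 ($k = 2$) to about 0.9582 ($k = 3$). The extra parameter uses up a degree of freedom in $n - k$ without reducing RSS, which is exactly what the adjustment penalizes.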