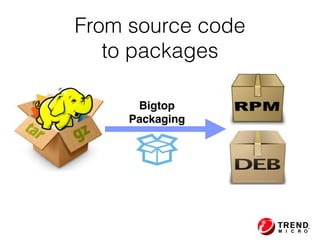

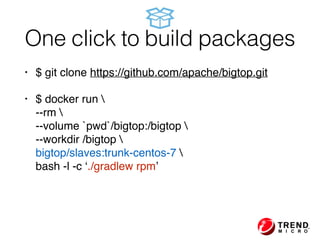

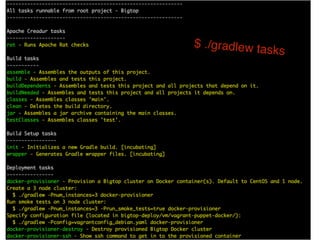

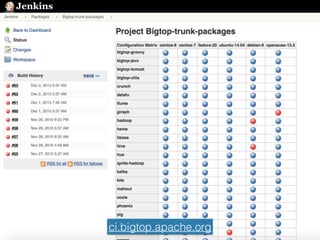

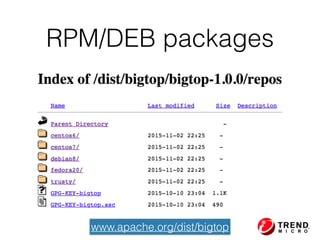

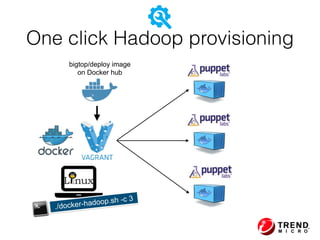

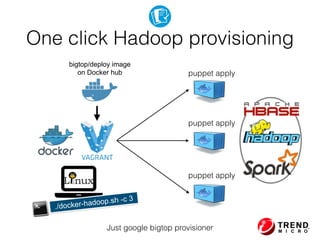

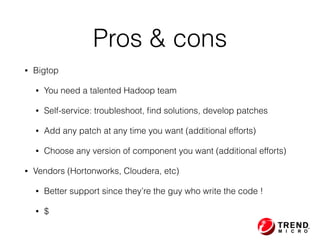

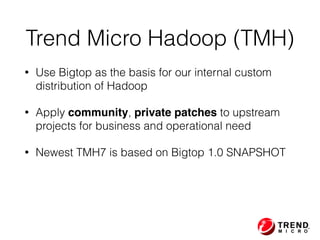

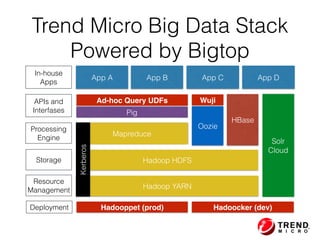

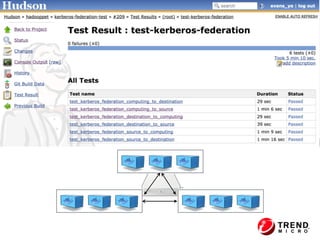

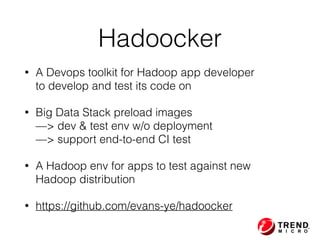

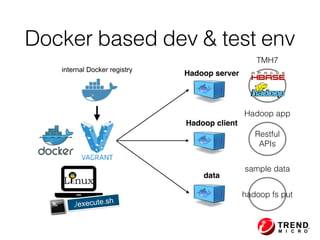

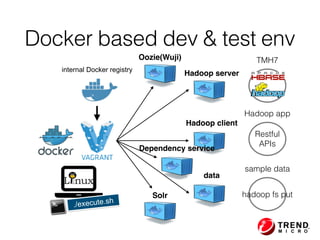

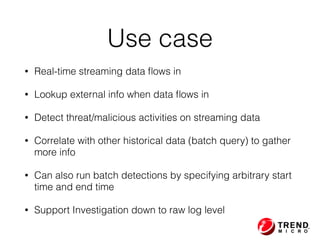

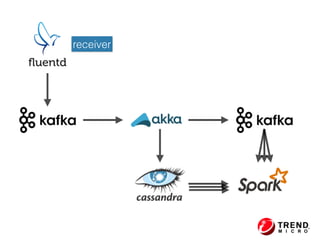

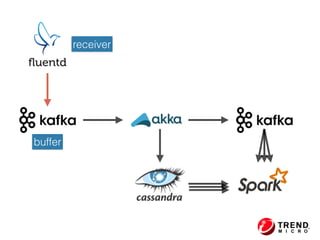

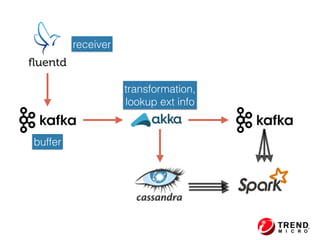

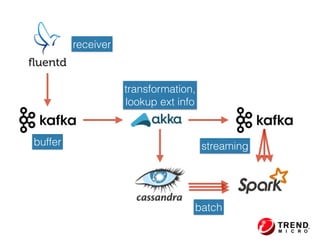

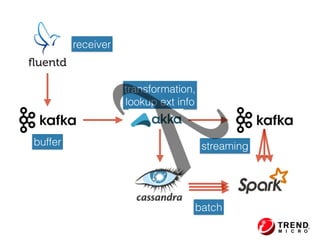

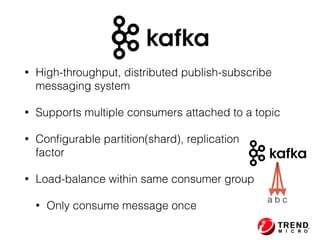

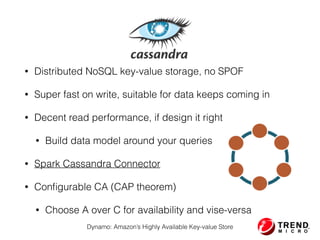

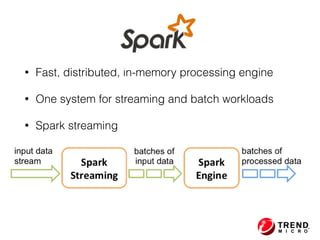

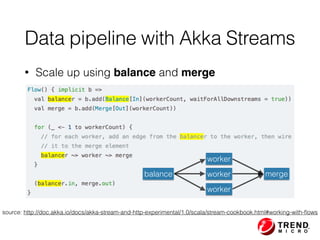

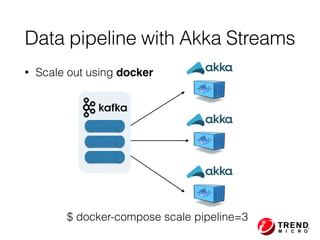

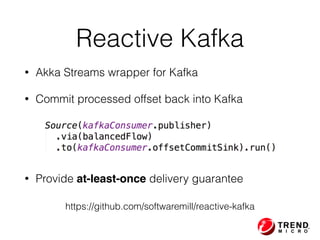

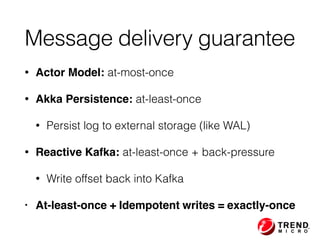

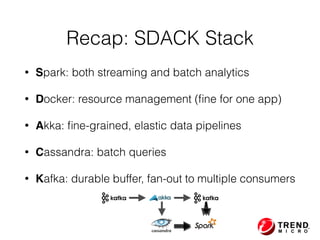

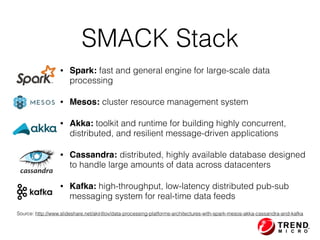

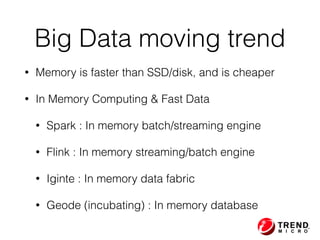

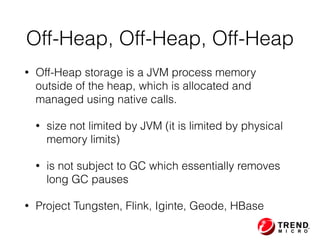

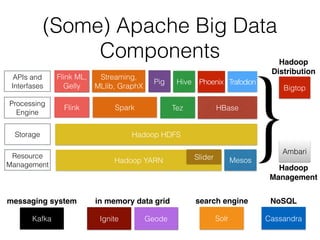

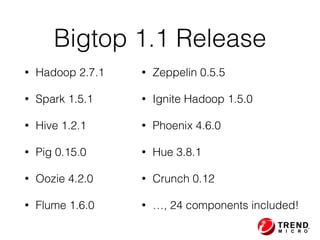

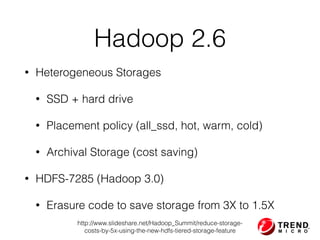

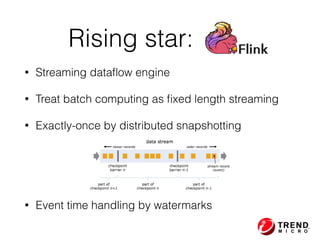

The document presents insights from Evans Ye at the 2015 Big Data Conference, covering the Trend Micro Big Data Platform and Apache Bigtop. It discusses the advantages of using Bigtop to build custom big data stacks, Bigtop's features for deployment and testing, and the integration of technologies such as Hadoop, Spark, and Kafka. It also outlines the evolving big data landscape and future trends, including in-memory computing solutions and Bigtop's roadmap for upcoming releases.