Coder Name: Rebecca Oquendo

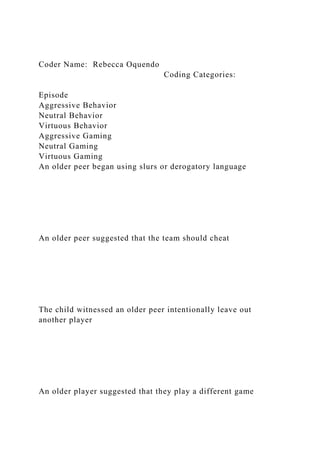

Coding Categories:

| Episode | Aggressive Behavior | Neutral Behavior | Virtuous Behavior | Aggressive Gaming | Neutral Gaming | Virtuous Gaming |
|---|---|---|---|---|---|---|
| An older peer began using slurs or derogatory language | | | | | | |
| An older peer suggested that the team should cheat | | | | | | |
| The child witnessed an older peer intentionally leave out another player | | | | | | |
| An older player suggested that they play a different game | | | | | | |
| The child lost the game with older players on their team | | | | | | |
| The child witnessed an older player curse every time a mistake was made | | | | | | |

Index:

- In this case, aggressive behavior consists of mimicking older members' undesired behaviors or becoming especially angry or agitated in the game. Neutral behavior is playing as the child usually would, without mimicking older players' behaviors or trying to fit in with their more aggressive styles. Virtuous behavior is steering the game away from aggression, voicing an opinion about the excessive aggression, or finding a way to express the gaming experience in a positive way. The same definitions apply to the corresponding "gaming" categories.
- Each category is scored from 1 to 7 according to how strongly the child's dialogue tended in that direction, for both behavior and gaming. A score of 1 indicates little to no effort in that direction, and a score of 7 indicates extreme effort in that category.

1. What are the different types of attributes? Provide examples of each type of attribute.
2. Describe the components of a decision tree. Give an example problem and provide an example of each component in your decision tree.
3. Conduct research on the Internet and find an article on data mining. The article must be less than 5 years old. Summarize the article in your own words. Make sure that you use APA formatting for this assignment.

Questions from attached files:

1. Obtain one of the data sets available at the UCI Machine Learning Repository and apply as many of the different visualization techniques described in the chapter as possible. The bibliographic notes and book Web site provide pointers to visualization software.
2. Identify at least two advantages and two disadvantages of using color to visually represent information.
3. What are the arrangement issues that arise with respect to three-dimensional plots?
4. Discuss the advantages and disadvantages of using sampling to reduce the number of data objects that need to be displayed. Would simple random sampling (without replacement) be a good approach to sampling? Why or why not?
5. Describe how you would create visualizations to display information that describes the following types of systems.
   - a) Computer networks. Be sure to include both the static aspects of the network, such as connectivity, and the dynamic aspects, such as traffic.
   - b) The distribution of specific plant and animal species around the world for a specific moment in time.
   - c) The use of computer resources, such as processor time, main memory, and disk, for a set of benchmark database programs.
   - d) The change in occupation of workers in a particular country over the last thirty years. Assume that you have yearly information about each person that also includes gender and level of education.

   Be sure to address the following issues:

   - Representation. How will you map objects, attributes, and relationships to visual elements?
   - Arrangement. Are there any special considerations that need to be taken into account with respect to how visual elements are displayed? Specific examples might be the choice of viewpoint, the use of transparency, or the separation of certain groups of objects.
   - Selection. How will you handle a large number of attributes and data objects?
6. Describe one advantage and one disadvantage of a stem and leaf plot with respect to a standard histogram.
7. How might you address the problem that a histogram depends on the number and location of the bins?
8. Describe how a box plot can give information about whether the value of an attribute is symmetrically distributed. What can you say about the symmetry of the distributions of the attributes shown in Figure 3.11?
9. Compare sepal length, sepal width, petal length, and petal width, using Figure 3.12.

10. Comment on the use of a box plot to explore a data set with four attributes: age, weight, height, and income.
11. Give a possible explanation as to why most of the values of petal length and width fall in the buckets along the diagonal in Figure 3.9.
12. Use Figures 3.14 and 3.15 to identify a characteristic shared by the petal width and petal length attributes.
13. Simple line plots, such as that displayed in Figure 2.12 on page 56, which shows two time series, can be used to effectively display high-dimensional data. For example, in Figure 2.12 it is easy to tell that the frequencies of the two time series are different. What characteristic of time series allows the effective visualization of high-dimensional data?
14. Describe the types of situations that produce sparse or dense data cubes. Illustrate with examples other than those used in the book.
15. How might you extend the notion of multidimensional data analysis so that the target variable is a qualitative variable? In other words, what sorts of summary statistics or data visualizations would be of interest?
16. Construct a data cube from Table 3.14. Is this a dense or sparse data cube? If it is sparse, identify the cells that are empty.
17. Discuss the differences between dimensionality reduction based on aggregation and dimensionality reduction based on techniques such as PCA and SVD.

01/27/2020 Introduction to Data Mining, 2nd Edition, Tan, Steinbach, Karpatne, Kumar

Data Mining: Data

Lecture Notes for Chapter 2, Introduction to Data Mining, 2nd Edition, by Tan, Steinbach, Kumar

What is Data?

- A collection of data objects and their attributes.
- An attribute is a property or characteristic of an object.
  - Examples: eye color of a person, temperature, etc.
  - Attribute is also known as variable, field, characteristic, dimension, or feature.
- A collection of attributes describes an object.
  - Object is also known as record, point, case, sample, entity, or instance.

| Tid | Refund | Marital Status | Taxable Income | Cheat |
|---|---|---|---|---|
| 1 | Yes | Single | 125K | No |
| 2 | No | Married | 100K | No |
| 3 | No | Single | 70K | No |
| 4 | Yes | Married | 120K | No |
| 5 | No | Divorced | 95K | Yes |
| 6 | No | Married | 60K | No |
| 7 | Yes | Divorced | 220K | No |
| 8 | No | Single | 85K | Yes |
| 9 | No | Married | 75K | No |
| 10 | No | Single | 90K | Yes |

Columns are attributes; rows are objects.

A More Complete View of Data: … other attributes (objects).

Attribute Values

- Attribute values are numbers or symbols assigned to an attribute for a particular object.
  - The same attribute can be mapped to different attribute values.
  - Different attributes can be mapped to the same set of values.
  - But the properties of the attribute values can be different.

Measurement of Length

- The way an attribute is measured may not match the attribute's properties.

[Figure: five objects, A to E, measured on two different scales]

- This scale preserves the ordering and additivity properties of length.
- This scale preserves only the ordering property of length.

Types of Attributes

- There are different types of attributes:
  - Nominal. Examples: ID numbers, eye color, zip codes.
  - Ordinal. Examples: ratings on a scale from 1-10, grades, height in {tall, medium, short}.
  - Interval. Examples: calendar dates, temperatures in Celsius or Fahrenheit.
  - Ratio. Examples: temperature in Kelvin, length, counts, elapsed time (e.g., time to run a race).

Properties of Attribute Values

- The type of an attribute depends on which of the following properties/operations it possesses:
  - Distinctness: =, ≠
  - Order: <, >
  - Differences are meaningful: +, -
  - Ratios are meaningful: *, /
- Nominal attribute: distinctness.
- Ordinal attribute: distinctness and order.
- Interval attribute: distinctness, order, and meaningful differences.
- Ratio attribute: all 4 properties/operations.

Difference Between Ratio and Interval

- Is it meaningful to say that a temperature of 10° is twice that of 5° on:
  - the Celsius scale?
  - the Fahrenheit scale?
  - the Kelvin scale?
- If Bill's height is three inches above average and Bob's height is six inches above average, would we say that Bob is twice as tall as Bill?
- Is this situation analogous to that of temperature?

| Category | Attribute Type | Description | Examples | Operations |
|---|---|---|---|---|
| Categorical (Qualitative) | Nominal | Nominal attribute values only distinguish. (=, ≠) | zip codes, employee ID numbers, eye color, sex: {male, female} | mode, entropy, contingency correlation, χ² test |
| Categorical (Qualitative) | Ordinal | Ordinal attribute values also order objects. (<, >) | hardness of minerals, {good, better, best}, grades, street numbers | median, percentiles, rank correlation, run tests, sign tests |
| Numeric (Quantitative) | Interval | For interval attributes, differences between values are meaningful. (+, -) | calendar dates, temperature in Celsius or Fahrenheit | mean, standard deviation, Pearson's correlation, t and F tests |
| Numeric (Quantitative) | Ratio | For ratio variables, both differences and ratios are meaningful. (*, /) | temperature in Kelvin, monetary quantities, counts, age, mass, length, current | geometric mean, harmonic mean, percent variation |

This categorization of attributes is due to S. S. Stevens.
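The temperature question above can be checked numerically. The following is a minimal Python sketch. The helper names (`celsius_to_fahrenheit`, `celsius_to_kelvin`, `ratio`) and the meters-to-feet factor are illustrative assumptions, not part of the notes.

It shows that the apparent 2:1 ratio between 10°C and 5°C disappears under an interval transformation (new_value = a * old_value + b). A ratio-scale change of units (new_value = a * old_value) leaves ratios unchanged.

```python
# Hypothetical helpers illustrating permissible transformations (not from the notes).
METERS_TO_FEET = 3.28084  # approximate conversion factor


def celsius_to_fahrenheit(c: float) -> float:
    """Interval-scale change: new_value = a * old_value + b with a = 9/5, b = 32."""
    return 9.0 / 5.0 * c + 32.0


def celsius_to_kelvin(c: float) -> float:
    """Move to a scale with a true zero (absolute zero), i.e., a ratio scale."""
    return c + 273.15


def ratio(a: float, b: float) -> float:
    return a / b


hot_c, cold_c = 10.0, 5.0

# Celsius: the numbers look like a 2:1 ratio, but that depends on where 0 is placed.
print(ratio(hot_c, cold_c))
# Fahrenheit: the same two temperatures give a different ratio (50 / 41).
print(ratio(celsius_to_fahrenheit(hot_c), celsius_to_fahrenheit(cold_c)))
# Kelvin: the physically meaningful ratio, close to 1.
print(ratio(celsius_to_kelvin(hot_c), celsius_to_kelvin(cold_c)))

# Ratio scale: changing units (new_value = a * old_value) keeps ratios intact.
print(ratio(6.0 * METERS_TO_FEET, 3.0 * METERS_TO_FEET))
```

Differences behave differently from ratios: they survive the interval transformation up to the scale factor, since 50 - 41 = 9 = (9/5) * 5.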

| Category | Attribute Type | Transformation | Comments |
|---|---|---|---|
| Categorical (Qualitative) | Nominal | Any permutation of values | If all employee ID numbers were reassigned, would it make any difference? |
| Categorical (Qualitative) | Ordinal | An order-preserving change of values, i.e., new_value = f(old_value), where f is a monotonic function | An attribute encompassing the notion of good, better, best can be represented equally well by the values {1, 2, 3} or by {0.5, 1, 10}. |
| Numeric (Quantitative) | Interval | new_value = a * old_value + b, where a and b are constants | Thus, the Fahrenheit and Celsius temperature scales differ in terms of where their zero value is and the size of a unit (degree). |
| Numeric (Quantitative) | Ratio | new_value = a * old_value | Length can be measured in meters or feet. |

Discrete and Continuous Attributes

- Discrete attribute
  - Has only a finite or countably infinite set of values.
  - Examples: zip codes, counts, or the set of words in a collection of documents.
  - Often represented as integer variables.
  - Note: binary attributes are a special case of discrete attributes.
- Continuous attribute
  - Has real numbers as attribute values.
  - Examples: temperature, height, or weight.
  - Practically, real values can only be measured and represented using a finite number of digits.
  - Continuous attributes are typically represented as floating-point variables.

Asymmetric Attributes

- Only presence (a non-zero attribute value) is regarded as important.
- Would we ever say the following? "I see our purchases are very similar since we didn't buy most of the same things."
- … ordinary binary attribute.
  - Association analysis uses asymmetric attributes.
- Asymmetric attributes typically arise from objects that are sets.

Some Extensions and Critiques

- … "… ordinal, interval, and ratio typologies are misleading." The American Statistician 47, no. 1 (1993): 65-72.
- … "… and regression. A second course in statistics." Addison-Wesley Series in Behavioral Science: Quantitative Methods. Reading, Mass.: Addison-Wesley, 1977.
- … "… for cartography." Cartography and Geographic Information Systems 25, no. 4 (1998): 231-242.

Critiques

- Asymmetric binary
- Cyclical
- Multivariate
- Partially ordered
- Partial membership
- Relationships between the data
- This can complicate recognition of the proper attribute type.
- Treating one attribute type as another may be approximately correct.

Critiques …

- May unnecessarily restrict operations and results; … may be justified.
- Transformations are common but don't preserve scales; … a new scale with better statistical properties.

More Complicated Examples

- Nominal, ordinal, or interval?
- Nominal, ordinal, or ratio?
- Interval or ratio?

Key Messages for Attribute Types

- The operations you choose should be "meaningful" for the type of data you have.
  - Distinctness, order, meaningful intervals, and meaningful ratios are only four properties of data.
  - The data type you see (often numbers or strings) may not capture all the properties or may suggest properties that are not present.
  - Analysis may depend on these other properties of the data.
  - Many times what is meaningful is measured by statistical significance.
  - But in the end, what is meaningful is measured by the domain.

Types of Data Sets

- Record
  - Data Matrix
  - Document Data
  - Transaction Data
- Graph
  - World Wide Web
  - Molecular Structures
- Ordered
  - Spatial Data
  - Temporal Data
  - Sequential Data
  - Genetic Sequence Data

Important Characteristics of Data

- Dimensionality (number of attributes)
- Sparsity
- Resolution
- Size

Record Data

- Data that consists of a collection of records, each of which consists of a fixed set of attributes (for example, the Tid/Refund/Marital Status/Taxable Income/Cheat table above).

Data Matrix

- If data objects have the same fixed set of numeric attributes, then the data objects can be thought of as points in a multi-dimensional space, where each dimension represents a distinct attribute.
- Such a data set can be represented by an m by n matrix, where there are m rows, one for each object, and n columns, one for each attribute.

| Projection of x Load | Projection of y Load | Distance | Load | Thickness |
|---|---|---|---|---|
| 10.23 | 5.27 | 15.22 | 2.7 | 1.2 |
| 12.65 | 6.25 | 16.22 | 2.2 | 1.1 |

Document Data

- Each document is represented as a term vector.
  - Each term is a component (attribute) of the vector.
  - The value of each component is the number of times the corresponding term occurs in the document.

Transaction Data

- Each transaction involves a set of items.
- For example, consider a grocery store. The set of products purchased by a customer during one shopping trip constitutes a transaction, while the individual products that were purchased are the items.
- Transaction data can be represented as record data.

| TID | Items |
|---|---|
| 1 | Bread, Coke, Milk |
| 2 | Beer, Bread |
| 3 | Beer, Coke, Diaper, Milk |
| 4 | Beer, Bread, Diaper, Milk |
| 5 | Coke, Diaper, Milk |
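Transaction data can be represented as record data by making each item an asymmetric binary attribute. The following is a minimal Python sketch under two assumptions of our own: items are plain strings, and the attributes are ordered alphabetically. The function name `to_binary_records` is hypothetical.

```python
from typing import Dict, List, Set, Tuple

# The five transactions from the table above (TID -> set of items).
transactions: Dict[int, Set[str]] = {
    1: {"Bread", "Coke", "Milk"},
    2: {"Beer", "Bread"},
    3: {"Beer", "Coke", "Diaper", "Milk"},
    4: {"Beer", "Bread", "Diaper", "Milk"},
    5: {"Coke", "Diaper", "Milk"},
}


def to_binary_records(tx: Dict[int, Set[str]]) -> Tuple[List[str], Dict[int, List[int]]]:
    """Return the item list (the attributes) and one 0/1 row per transaction."""
    items = sorted(set().union(*tx.values()))  # fixed, alphabetical attribute order
    rows = {tid: [1 if item in basket else 0 for item in items] for tid, basket in tx.items()}
    return items, rows


items, rows = to_binary_records(transactions)
print(items)  # ['Beer', 'Bread', 'Coke', 'Diaper', 'Milk']
for tid, row in rows.items():
    print(tid, row)
```

Each row records only which items are present. This is why similarity measures that ignore 0-0 matches, such as Jaccard, suit this kind of data.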

Graph Data

[Figure: a generic graph with weighted edges, and the Benzene Molecule: C6H6]

Ordered Data

[Figure: sequence data; labels "An element of the sequence" and "Items/Events"]

Ordered Data

GGTTCCGCCTTCAGCCCCGCGCC CGCAGGGCCCGCCCCGCGCCGTC GAGAAGGGCCCGCCTGGCGGGCG GGGGGAGGCGGGGCCGCCCGAGC CCAACCGAGTCCGACCAGGTGCC CCCTCTGCTCGGCCTAGACCTGA GCTCATTAGGCGGCAGCGGACAG GCCAAGTAGAACACGCGAAGCGC TGGGCTGCCTGCTGCGACCAGGG

Ordered Data: Temporal Data

[Figure: Average Monthly Temperature of land and ocean]

Data Quality

"The most important point is that poor data quality is an unfolding disaster. Poor data quality costs the typical company at least ten percent (10%) of revenue; twenty percent (20%) is probably a better estimate." (Thomas C. Redman, DM Review, August 2004)

For example, suppose a classification model for detecting people who are loan risks is built using poor data:

- Some credit-worthy candidates are denied loans.
- More loans are given to individuals that default.

Data Quality …

- What can we do about these problems?
- Examples of data quality problems:
  - Noise and outliers
  - Missing values
  - Duplicate data
  - Wrong data
  - Fake data

Noise

- Examples: distortion of a person's voice when talking on a poor phone, and "snow" on a television screen.

[Figure: Two Sine Waves; Two Sine Waves + Noise]

Outliers

- Outliers are data objects with characteristics that are considerably different than most of the other data objects in the data set.
  - Case 1: Outliers are noise that interferes with data analysis.
  - Case 2: Outliers are the goal of our analysis.

Missing Values

- Reasons for missing values:
  - Information is not collected (e.g., people decline to give their age and weight).
  - Attributes may not be applicable to all cases (e.g., annual income is not applicable to children).
- Handling missing values:
  - Eliminate data objects or variables.
  - Estimate missing values.
  - Ignore the missing value during analysis.

Missing Values …

- Missing completely at random (MCAR)
  - Missingness of a value is independent of attributes.
  - Fill in values based on the attribute.
  - Analysis may be unbiased overall.
- Missing at random (MAR)
  - Missingness is related to other variables.
  - Fill in values based on other values.
  - Almost always produces a bias in the analysis.
- Missing not at random (MNAR)
  - Missingness is related to unobserved measurements.
  - Informative or non-ignorable missingness.
- It is not possible to know the situation from the data.
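The notes list strategies for handling missing values. The following minimal Python sketch shows two of them: eliminating data objects with a missing value, and estimating missing values. The ages and the function names are hypothetical.

Mean imputation is only one simple way to estimate a missing value. It fills in values from the attribute alone. As the notes point out, this is most defensible under MCAR and can bias the analysis otherwise.

```python
from statistics import mean
from typing import List, Optional

# Hypothetical ages with two missing entries (None); illustrative only.
ages: List[Optional[float]] = [23.0, None, 31.0, 27.0, None, 35.0]


def drop_missing(values: List[Optional[float]]) -> List[float]:
    """Eliminate data objects whose value is missing."""
    return [v for v in values if v is not None]


def impute_mean(values: List[Optional[float]]) -> List[float]:
    """Estimate each missing value with the mean of the observed values.

    This simple fill uses only the attribute itself, so it is most defensible
    when values are missing completely at random (MCAR)."""
    fill = mean(drop_missing(values))
    return [fill if v is None else v for v in values]


print(drop_missing(ages))  # [23.0, 31.0, 27.0, 35.0]
print(impute_mean(ages))   # [23.0, 29.0, 31.0, 27.0, 29.0, 35.0]
```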

Duplicate Data

- A data set may include data objects that are duplicates, or almost duplicates, of one another.
  - Major issue when merging data from heterogeneous sources.
- Examples:
  - Same person with multiple email addresses.
- Process of dealing with duplicate data issues.

Similarity and Dissimilarity Measures

- Similarity measure
  - Numerical measure of how alike two data objects are.
  - Is higher when objects are more alike.
  - Often falls in the range [0, 1].
- Dissimilarity measure
  - Numerical measure of how different two data objects are.
  - Lower when objects are more alike.
  - Minimum dissimilarity is often 0.
  - Upper limit varies.

Similarity/Dissimilarity for Simple Attributes

The following table shows the similarity and dissimilarity between two objects, x and y, with respect to a single, simple attribute.

[Table: similarity and dissimilarity for a single simple attribute]

Euclidean Distance

$$d(\mathbf{x}, \mathbf{y}) = \sqrt{\sum_{k=1}^{n} (x_k - y_k)^2}$$

where $n$ is the number of dimensions (attributes), and $x_k$ and $y_k$ are, respectively, the $k$th attributes (components) of data objects $\mathbf{x}$ and $\mathbf{y}$.

Euclidean Distance: Example

[Figure: points p1 to p4 plotted in the x-y plane]

| point | x | y |
|---|---|---|
| p1 | 0 | 2 |
| p2 | 2 | 0 |
| p3 | 3 | 1 |
| p4 | 5 | 1 |

Distance Matrix

| | p1 | p2 | p3 | p4 |
|---|---|---|---|---|
| p1 | 0 | 2.828 | 3.162 | 5.099 |
| p2 | 2.828 | 0 | 1.414 | 3.162 |
| p3 | 3.162 | 1.414 | 0 | 2 |
| p4 | 5.099 | 3.162 | 2 | 0 |

Minkowski Distance

Minkowski distance is a generalization of Euclidean distance:

$$d(\mathbf{x}, \mathbf{y}) = \left( \sum_{k=1}^{n} |x_k - y_k|^r \right)^{1/r}$$

where $r$ is a parameter, $n$ is the number of dimensions (attributes), and $x_k$ and $y_k$ are, respectively, the $k$th attributes (components) of data objects $\mathbf{x}$ and $\mathbf{y}$.

Minkowski Distance: Examples

- $r = 1$: City block (Manhattan, taxicab, $L_1$ norm) distance.
  - A common example of this for binary vectors is the Hamming distance, which is just the number of bits that are different between two binary vectors.
- $r = 2$: Euclidean distance.
- $r \to \infty$: "supremum" ($L_{\max}$ norm, $L_\infty$ norm) distance.
  - This is the maximum difference between any component of the vectors.
- These distances are defined for all numbers of dimensions.

Minkowski Distance: Distance Matrices (points p1 to p4 as above)

$L_1$:

| | p1 | p2 | p3 | p4 |
|---|---|---|---|---|
| p1 | 0 | 4 | 4 | 6 |
| p2 | 4 | 0 | 2 | 4 |
| p3 | 4 | 2 | 0 | 2 |
| p4 | 6 | 4 | 2 | 0 |

$L_2$:

| | p1 | p2 | p3 | p4 |
|---|---|---|---|---|
| p1 | 0 | 2.828 | 3.162 | 5.099 |
| p2 | 2.828 | 0 | 1.414 | 3.162 |
| p3 | 3.162 | 1.414 | 0 | 2 |
| p4 | 5.099 | 3.162 | 2 | 0 |

$L_\infty$:

| | p1 | p2 | p3 | p4 |
|---|---|---|---|---|
| p1 | 0 | 2 | 3 | 5 |
| p2 | 2 | 0 | 1 | 3 |
| p3 | 3 | 1 | 0 | 2 |
| p4 | 5 | 3 | 2 | 0 |

Mahalanobis Distance

$$\text{mahalanobis}(\mathbf{x}, \mathbf{y}) = (\mathbf{x} - \mathbf{y})^{T}\, \Sigma^{-1}\, (\mathbf{x} - \mathbf{y})$$

where $\Sigma$ is the covariance matrix of the data.

For the red points in the figure, the Euclidean distance is 14.7 and the Mahalanobis distance is 6.

[Figure: scatter plot of correlated data with two red points]

Mahalanobis Distance: Example

Covariance matrix:

$$\Sigma = \begin{bmatrix} 0.3 & 0.2 \\ 0.2 & 0.3 \end{bmatrix}$$

- A: (0.5, 0.5)
- B: (0, 1)
- C: (1.5, 1.5)

Mahal(A, B) = 5 and Mahal(A, C) = 4.

[Figure: points A, B, and C]
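The L1, L2, and L∞ distance matrices and the Mahalanobis example above can be reproduced with a short Python sketch. `minkowski` and `mahalanobis` are our own hypothetical helper names. The Mahalanobis helper assumes NumPy is available. It follows the squared form used in these notes, with no square root, which is what yields Mahal(A, B) = 5 and Mahal(A, C) = 4.

```python
import math
from typing import Dict, Sequence, Tuple

import numpy as np


def minkowski(x: Sequence[float], y: Sequence[float], r: float) -> float:
    """Minkowski distance: r = 1 city block, r = 2 Euclidean, r = math.inf supremum."""
    diffs = [abs(a - b) for a, b in zip(x, y)]
    if math.isinf(r):
        return float(max(diffs))
    return sum(d ** r for d in diffs) ** (1.0 / r)


def mahalanobis(x: Sequence[float], y: Sequence[float], cov) -> float:
    """(x - y)^T Sigma^-1 (x - y), the squared form that gives 5 and 4 in the example."""
    d = np.asarray(x, dtype=float) - np.asarray(y, dtype=float)
    return float(d @ np.linalg.inv(np.asarray(cov, dtype=float)) @ d)


points: Dict[str, Tuple[float, float]] = {"p1": (0, 2), "p2": (2, 0), "p3": (3, 1), "p4": (5, 1)}
for r in (1, 2, math.inf):
    print(f"r = {r}")
    for a in points:
        print(a, [round(minkowski(points[a], points[b], r), 3) for b in points])

cov = [[0.3, 0.2], [0.2, 0.3]]
A, B, C = (0.5, 0.5), (0.0, 1.0), (1.5, 1.5)
print(round(mahalanobis(A, B, cov), 6), round(mahalanobis(A, C, cov), 6))  # 5.0 4.0
```

SciPy's `scipy.spatial.distance.mahalanobis(u, v, VI)` takes the inverse covariance matrix and returns the square root of this quantity. For this example it would therefore report √5 and 2 rather than 5 and 4.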

Common Properties of a Distance

Distances, such as the Euclidean distance, have some well-known properties.

1. $d(\mathbf{x}, \mathbf{y}) \geq 0$ for all $\mathbf{x}$ and $\mathbf{y}$, and $d(\mathbf{x}, \mathbf{y}) = 0$ if and only if $\mathbf{x} = \mathbf{y}$. (Positive definiteness)
2. $d(\mathbf{x}, \mathbf{y}) = d(\mathbf{y}, \mathbf{x})$ for all $\mathbf{x}$ and $\mathbf{y}$. (Symmetry)
3. $d(\mathbf{x}, \mathbf{z}) \leq d(\mathbf{x}, \mathbf{y}) + d(\mathbf{y}, \mathbf{z})$ for all points $\mathbf{x}$, $\mathbf{y}$, and $\mathbf{z}$. (Triangle Inequality)

Here $d(\mathbf{x}, \mathbf{y})$ is the distance (dissimilarity) between points (data objects) $\mathbf{x}$ and $\mathbf{y}$. A distance that satisfies these properties is a metric.

Common Properties of a Similarity

Similarities also have some well-known properties.

1. $s(\mathbf{x}, \mathbf{y}) = 1$ (or maximum similarity) only if $\mathbf{x} = \mathbf{y}$. (Does not always hold, e.g., cosine.)
2. $s(\mathbf{x}, \mathbf{y}) = s(\mathbf{y}, \mathbf{x})$ for all $\mathbf{x}$ and $\mathbf{y}$. (Symmetry)

Here $s(\mathbf{x}, \mathbf{y})$ is the similarity between points (data objects) $\mathbf{x}$ and $\mathbf{y}$.

Similarity Between Binary Vectors

- Suppose objects $\mathbf{x}$ and $\mathbf{y}$ have only binary attributes. Compute similarities using the following quantities:
  - $f_{01}$ = the number of attributes where x was 0 and y was 1
  - $f_{10}$ = the number of attributes where x was 1 and y was 0
  - $f_{00}$ = the number of attributes where x was 0 and y was 0
  - $f_{11}$ = the number of attributes where x was 1 and y was 1
- Simple Matching Coefficient: SMC = number of matches / number of attributes = $(f_{11} + f_{00}) / (f_{01} + f_{10} + f_{11} + f_{00})$
- Jaccard Coefficient: J = number of 11 matches / number of non-zero attributes = $f_{11} / (f_{01} + f_{10} + f_{11})$
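Both coefficients can be computed directly from the four counts. Below is a minimal Python sketch with hypothetical function names. It is applied to the x and y vectors from the SMC-versus-Jaccard example in these notes.

The notes do not say what Jaccard should return for two all-zero vectors, where the formula becomes 0/0. Returning 0.0 in that case is our own assumption.

```python
from typing import Sequence, Tuple


def binary_counts(x: Sequence[int], y: Sequence[int]) -> Tuple[int, int, int, int]:
    """Return (f01, f10, f00, f11) for two equal-length 0/1 vectors."""
    pairs = list(zip(x, y))
    f01 = sum(1 for a, b in pairs if (a, b) == (0, 1))
    f10 = sum(1 for a, b in pairs if (a, b) == (1, 0))
    f00 = sum(1 for a, b in pairs if (a, b) == (0, 0))
    f11 = sum(1 for a, b in pairs if (a, b) == (1, 1))
    return f01, f10, f00, f11


def smc(x: Sequence[int], y: Sequence[int]) -> float:
    """Simple matching coefficient: matches / all attributes."""
    f01, f10, f00, f11 = binary_counts(x, y)
    return (f11 + f00) / (f01 + f10 + f11 + f00)


def jaccard(x: Sequence[int], y: Sequence[int]) -> float:
    """Jaccard coefficient: 1-1 matches / attributes where at least one vector is 1."""
    f01, f10, f00, f11 = binary_counts(x, y)
    denom = f01 + f10 + f11
    # Assumed convention: two all-zero vectors get 0.0 (the formula would be 0/0).
    return f11 / denom if denom else 0.0


x = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]
y = [0, 0, 0, 0, 0, 0, 1, 0, 0, 1]
print(binary_counts(x, y))       # (2, 1, 7, 0)
print(smc(x, y), jaccard(x, y))  # 0.7 0.0
```

The gap between 0.7 and 0.0 comes from the seven 0-0 matches. SMC counts them; Jaccard ignores them, which suits asymmetric attributes.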

SMC versus Jaccard: Example

- x = 1 0 0 0 0 0 0 0 0 0
- y = 0 0 0 0 0 0 1 0 0 1
- $f_{01} = 2$ (the number of attributes where x was 0 and y was 1)
- $f_{10} = 1$ (the number of attributes where x was 1 and y was 0)
- $f_{00} = 7$ (the number of attributes where x was 0 and y was 0)
- $f_{11} = 0$ (the number of attributes where x was 1 and y was 1)
- SMC $= (f_{11} + f_{00}) / (f_{01} + f_{10} + f_{11} + f_{00}) = (0 + 7) / (2 + 1 + 0 + 7) = 0.7$
- J $= f_{11} / (f_{01} + f_{10} + f_{11}) = 0 / (2 + 1 + 0) = 0$

Cosine Similarity

If $\mathbf{d}_1$ and $\mathbf{d}_2$ are two document vectors, then

$$\cos(\mathbf{d}_1, \mathbf{d}_2) = \frac{\langle \mathbf{d}_1, \mathbf{d}_2 \rangle}{\|\mathbf{d}_1\| \, \|\mathbf{d}_2\|}$$

Here $\langle \mathbf{d}_1, \mathbf{d}_2 \rangle$ is the inner product (vector dot product) of vectors $\mathbf{d}_1$ and $\mathbf{d}_2$, and $\|\mathbf{d}\|$ is the length of vector $\mathbf{d}$.

Example:

- d1 = 3 2 0 5 0 0 0 2 0 0
- d2 = 1 0 0 0 0 0 0 1 0 2
- $\langle \mathbf{d}_1, \mathbf{d}_2 \rangle = 3 \cdot 1 + 2 \cdot 0 + 0 \cdot 0 + 5 \cdot 0 + 0 \cdot 0 + 0 \cdot 0 + 0 \cdot 0 + 2 \cdot 1 + 0 \cdot 0 + 0 \cdot 2 = 5$
- $\|\mathbf{d}_1\| = (3 \cdot 3 + 2 \cdot 2 + 0 \cdot 0 + 5 \cdot 5 + 0 \cdot 0 + 0 \cdot 0 + 0 \cdot 0 + 2 \cdot 2 + 0 \cdot 0 + 0 \cdot 0)^{0.5} = (42)^{0.5} = 6.481$
- $\|\mathbf{d}_2\| = (1 \cdot 1 + 0 \cdot 0 + 0 \cdot 0 + 0 \cdot 0 + 0 \cdot 0 + 0 \cdot 0 + 0 \cdot 0 + 1 \cdot 1 + 0 \cdot 0 + 2 \cdot 2)^{0.5} = (6)^{0.5} = 2.449$
- $\cos(\mathbf{d}_1, \mathbf{d}_2) = 5 / (6.481 \times 2.449) = 0.3150$

Extended Jaccard Coefficient (Tanimoto)

- A variation of Jaccard for continuous or count attributes.
- Reduces to Jaccard for binary attributes.
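Below is a minimal Python sketch of the cosine computation above, plus the extended Jaccard (Tanimoto) coefficient. The function names are hypothetical.

This copy of the notes does not give the Tanimoto formula. The sketch assumes the standard form x·y / (‖x‖² + ‖y‖² − x·y). For 0/1 vectors this form equals f11 / (f01 + f10 + f11), the ordinary Jaccard coefficient.

```python
import math
from typing import Sequence


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def cosine(d1: Sequence[float], d2: Sequence[float]) -> float:
    """<d1, d2> / (||d1|| ||d2||); both vectors must be non-zero."""
    return dot(d1, d2) / (math.sqrt(dot(d1, d1)) * math.sqrt(dot(d2, d2)))


def tanimoto(x: Sequence[float], y: Sequence[float]) -> float:
    """Extended Jaccard: x.y / (||x||^2 + ||y||^2 - x.y); not both vectors zero."""
    xy = dot(x, y)
    return xy / (dot(x, x) + dot(y, y) - xy)


d1 = [3, 2, 0, 5, 0, 0, 0, 2, 0, 0]
d2 = [1, 0, 0, 0, 0, 0, 0, 1, 0, 2]
print(round(cosine(d1, d2), 4))    # 0.315
print(round(tanimoto(d1, d2), 4))  # 5 / 43, about 0.1163

# For 0/1 vectors the extended Jaccard reduces to the ordinary Jaccard coefficient.
print(tanimoto([1, 1, 0, 1], [1, 0, 1, 1]))  # 0.5
```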

Correlation

- Correlation measures the linear relationship between objects.

Visually Evaluating Correlation

[Figure: scatter plots showing the similarity from -1 to 1]

Drawback of Correlation

- x = (-3, -2, -1, 0, 1, 2, 3)
- y = (9, 4, 1, 0, 1, 4, 9), i.e., $y_i = x_i^2$
- mean(x) = 0, mean(y) = 4, std(x) = 2.16, std(y) = 3.74

$$\text{corr}(\mathbf{x}, \mathbf{y}) = \frac{(-3)(5) + (-2)(0) + (-1)(-3) + (0)(-4) + (1)(-3) + (2)(0) + (3)(5)}{6 \times 2.16 \times 3.74} = 0$$

Comparison of Proximity Measures

- Similarity measures tend to be specific to the type of attribute and data.
  - Record data, images, graphs, sequences, 3D-protein structure, etc. tend to have different measures.
- However, one can talk about various properties that you would like a proximity measure to have:
  - Symmetry is a common one.
  - Tolerance to noise and outliers is another.
  - Ability to find more types of patterns?
  - Many others possible.
- The measure must be applicable to the data and produce results that agree with domain knowledge.
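The drawback-of-correlation example can be checked numerically. Below is a minimal Python sketch using the standard library. `pearson` is a hypothetical helper implementing the sample correlation with an (n - 1) divisor, matching the 6 in the denominator above.

```python
from statistics import mean, stdev
from typing import Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Sample correlation: sum of products of deviations / ((n - 1) * s_x * s_y)."""
    n = len(x)
    mx, my = mean(x), mean(y)
    covariance = sum((a - mx) * (b - my) for a, b in zip(x, y)) / (n - 1)
    return covariance / (stdev(x) * stdev(y))


x = [-3, -2, -1, 0, 1, 2, 3]
y = [v ** 2 for v in x]  # y depends on x exactly, but not linearly
print(y)                                       # [9, 4, 1, 0, 1, 4, 9]
print(round(stdev(x), 2), round(stdev(y), 2))  # 2.16 3.74
print(pearson(x, y))                           # 0.0
```

A correlation of 0 here does not mean x and y are unrelated. It only means there is no linear relationship, which is the drawback the slide illustrates.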

Information Based Measures

- Information theory is a well-developed and fundamental discipline with broad applications.
- Some similarity measures are based on information theory:
  - Mutual information in various versions
  - Maximal Information Coefficient (MIC) and related measures
  - General and can handle non-linear relationships
  - Can be complicated and time intensive to compute

Information and Probability

- Information relates to possible outcomes of an event, such as the transmission of a message, the flip of a coin, or the measurement of a piece of data.
- The more certain an outcome, the less information it contains, and vice versa.
  - For example, if a coin has two heads, then an outcome of heads provides no information.

- More quantitatively, the information is related to the probability of an outcome.
  - The smaller the probability of an outcome, the more information it provides, and vice versa.
- Entropy is the commonly used measure.

Entropy

For a variable (event) $X$ with $n$ possible values (outcomes) $x_1, x_2, \ldots, x_n$, each outcome having probability $p_1, p_2, \ldots, p_n$, the entropy of $X$, $H(X)$, is given by

$$H(X) = -\sum_{i=1}^{n} p_i \log_2 p_i$$

and is measured in bits. Thus, entropy is a measure of how many bits it takes to represent an observation of $X$ on average.

Entropy Examples

For a coin with probability $p$ of heads and probability $q = 1 - p$ of tails:

$$H = -p \log_2 p - q \log_2 q$$

- For $p = 0.5$, $q = 0.5$ (fair coin), $H = 1$.
- For $p = 1$ or $q = 1$, $H = 0$.
- What is the entropy of a fair …-sided die?

Entropy for Sample Data: Example

| Hair Color | Count | p | $-p \log_2 p$ |
|---|---|---|---|
| Black | 75 | 0.75 | 0.3113 |
| Brown | 15 | 0.15 | 0.4105 |
| Blond | 5 | 0.05 | 0.2161 |
| Red | 0 | 0.00 | 0 |
| Other | 5 | 0.05 | 0.2161 |
| Total | 100 | 1.0 | 1.1540 |

Maximum entropy is $\log_2 5 = 2.3219$.

Entropy for Sample Data

Suppose we have a number of observations ($m$) of some attribute $X$, e.g., the hair color of students in the class. There are $n$ different possible values, and the number of observations in the $i$th category is $m_i$. Then, for this sample,

$$H(X) = -\sum_{i=1}^{n} \frac{m_i}{m} \log_2 \frac{m_i}{m}$$
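The sample-entropy formula can be applied to the hair-color counts above with a few lines of Python. `entropy_from_counts` is a hypothetical name. Categories with a count of 0 are skipped, following the usual convention that 0 · log₂ 0 = 0.

```python
import math
from typing import Dict


def entropy_from_counts(counts: Dict[str, int]) -> float:
    """H(X) = -sum (m_i/m) log2 (m_i/m); categories with zero count contribute 0."""
    m = sum(counts.values())
    h = 0.0
    for m_i in counts.values():
        if m_i > 0:
            p = m_i / m
            h -= p * math.log2(p)
    return h


hair = {"Black": 75, "Brown": 15, "Blond": 5, "Red": 0, "Other": 5}
print(round(entropy_from_counts(hair), 4))    # 1.154
print(round(math.log2(len(hair)), 4))         # 2.3219, the maximum for 5 values
print(entropy_from_counts({"H": 1, "T": 1}))  # fair coin: 1.0
```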

Mutual Information

Formally, $I(X, Y) = H(X) + H(Y) - H(X, Y)$ …

Data Mining: Exploring Data

Lecture Notes for Chapter 3, Introduction to Data Mining, by Tan, Steinbach, Kumar

(C) Vipin Kumar, Parallel Issues in Data Mining, VECPAR 2002

What Is Data Exploration?

A preliminary exploration of the data to better understand its characteristics.

- Key motivations of data exploration include:
  - Helping to select the right tool for preprocessing or analysis.
  - Making use of humans' abilities to recognize patterns. People can recognize patterns not captured by data analysis tools.
- Related to the area of Exploratory Data Analysis (EDA):
  - Created by statistician John Tukey.
  - Seminal book is Exploratory Data Analysis by Tukey.
  - A nice online introduction can be found in Chapter 1 of the NIST Engineering Statistics Handbook: http://www.itl.nist.gov/div898/handbook/index.htm

Techniques Used in Data Exploration

- In EDA, as originally defined by Tukey:
  - The focus was on visualization.
  - Clustering and anomaly detection were viewed as exploratory techniques.
  - In data mining, clustering and anomaly detection are major areas of interest, and not thought of as just exploratory.
- In our discussion of data exploration, we focus on:
  - Summary statistics
  - Visualization
  - Online Analytical Processing (OLAP)

Iris Sample Data Set

- Many of the exploratory data techniques are illustrated with the Iris Plant data set.
  - Can be obtained from the UCI Machine Learning Repository: http://www.ics.uci.edu/~mlearn/MLRepository.html
  - From the statistician Ronald Fisher.
  - Three flower types (classes): Setosa, Virginica, Versicolour.
  - Four (non-class) attributes: sepal width and length, petal width and length.

[Figure: Virginica. Robert H. Mohlenbrock. USDA NRCS. 1995. Northeast wetland flora: Field office guide to plant species. Northeast National Technical Center, Chester, PA. Courtesy of USDA NRCS Wetland Science Institute.]

Summary Statistics

- Summary statistics are numbers that summarize properties of the data.
  - Summarized properties include frequency, location, and spread.
  - Examples: location (mean), spread (standard deviation).
  - Most summary statistics can be calculated in a single pass through the data.

Frequency and Mode

- The frequency of an attribute value is the percentage of time the value occurs in the data set.
  - For example, given the attribute 'gender' and a representative population of people, the gender 'female' occurs about 50% of the time.
- The mode of an attribute is the most frequent attribute value.
- The notions of frequency and mode are typically used with categorical data.

Percentiles

- For continuous data, the notion of a percentile is more useful.
- Given an ordinal or continuous attribute $x$ and a number $p$ between 0 and 100, the $p$th percentile is a value $x_p$ of $x$ such that $p\%$ of the observed values of $x$ are less than $x_p$.
- For instance, the 50th percentile is the value $x_{50\%}$ such that 50% of all values of $x$ are less than $x_{50\%}$.

Measures of Location: Mean and Median

- The mean is the most common measure of the location of a set of points.
- However, the mean is very sensitive to outliers.
- Thus, the median or a trimmed mean is also commonly used.

Measures of Spread: Range and Variance

- Range is the difference between the max and min.
- The variance or standard deviation is the most common measure of the spread of a set of points.
- However, this is also sensitive to outliers, so other measures are often used.
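Several of the summary statistics above can be sketched with Python's standard library. `frequencies`, `mode_of`, and `trimmed_mean` are hypothetical helpers. The trimmed-mean convention used here, dropping `int(n * fraction)` values from each end, is one of several in use. The data are made up to show how a single outlier pulls the mean, range, and standard deviation but barely moves the median or trimmed mean.

```python
from collections import Counter
from statistics import mean, median, stdev
from typing import Dict, Hashable, List, Sequence


def frequencies(values: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Fraction of the data set taken by each value."""
    n = len(values)
    return {v: c / n for v, c in Counter(values).items()}


def mode_of(values: Sequence[Hashable]) -> Hashable:
    """Most frequent value (ties broken by first occurrence)."""
    return Counter(values).most_common(1)[0][0]


def trimmed_mean(values: Sequence[float], fraction: float) -> float:
    """Mean after dropping the lowest and highest `fraction` of the sorted values."""
    s: List[float] = sorted(values)
    k = int(len(s) * fraction)
    return mean(s[k:len(s) - k])


eye_color = ["brown", "blue", "brown", "green", "brown"]
print(frequencies(eye_color))  # {'brown': 0.6, 'blue': 0.2, 'green': 0.2}
print(mode_of(eye_color))      # brown

# Hypothetical measurements with one outlier (1000).
x = [10, 12, 11, 13, 12, 1000]
print(mean(x), median(x), trimmed_mean(x, 0.2))  # the mean is pulled far above the others
print(max(x) - min(x), stdev(x))                 # range and standard deviation are inflated too
```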

Visualization

Visualization is the conversion of data into a visual or tabular format so that the characteristics of the data and the relationships among data items or attributes can be analyzed or reported.

- Visualization of data is one of the most powerful and appealing techniques for data exploration.
  - Humans have a well-developed ability to analyze large amounts of information that is presented visually.
  - Can detect general patterns and trends.
  - Can detect outliers and unusual patterns.

Example: Sea Surface Temperature

- The following shows the Sea Surface Temperature (SST) for July 1982.
  - Tens of thousands of data points are summarized in a single figure.

[Figure: Sea Surface Temperature (SST), July 1982]

- 58. Representation. Representation is the mapping of information to a visual format. Data objects, their attributes, and the relationships among data objects are translated into graphical elements such as points, lines, shapes, and colors. Example: objects are often represented as points, and their attribute values can be represented as the position of the points or as characteristics of the points, e.g., color, size, and shape. If position is used, then the relationships of points, i.e., whether they form groups or whether a point is an outlier, are easily perceived. Arrangement. Arrangement is the placement of visual elements within a display, and it can make a large difference in how easy it is to understand the data.

- 59. Selection. Selection is the elimination or the de-emphasis of certain objects and attributes. Selection may involve choosing a subset of attributes: dimensionality reduction is often used to reduce the number of dimensions to two or three, or, alternatively, pairs of attributes can be considered. Selection may also involve choosing a subset of objects: a region of the screen can only show so many points, so the data can be sampled, but the sample should preserve points in sparse areas. Visualization Techniques: Histograms. A histogram usually shows the distribution of values of a single variable. Divide the values into bins and show a bar plot of the number of objects in each bin; the height of each bar indicates the number of objects. The shape of a histogram depends on the number of bins. Example: Petal Width (10 and 20 bins, respectively).
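A minimal Python sketch of equal-width binning on made-up petal-width-like values (not the actual Iris measurements), showing that the same data can produce differently shaped histograms for different numbers of bins. `equal_width_histogram` is a hypothetical helper; `numpy.histogram` offers a library equivalent.

```python
def equal_width_histogram(values, num_bins):
    """Count the values falling into `num_bins` equal-width bins between min and max."""
    lo, hi = min(values), max(values)
    if hi == lo:                        # all values identical: everything in one bin
        return [len(values)] + [0] * (num_bins - 1)
    width = (hi - lo) / num_bins
    counts = [0] * num_bins
    for v in values:
        # The maximum value would land just past the last bin, so clamp it into that bin.
        counts[min(int((v - lo) / width), num_bins - 1)] += 1
    return counts

# Made-up petal-width-like measurements (not the actual Iris data).
widths = [0.2, 0.2, 0.3, 0.4, 1.0, 1.3, 1.3, 1.5, 1.8, 2.1, 2.3, 2.5]
print(equal_width_histogram(widths, 3))   # [4, 4, 4]
print(equal_width_histogram(widths, 6))   # [4, 0, 3, 1, 2, 2]
```

With 3 bins the distribution looks uniform; with 6 bins a gap between the small and the larger values becomes visible.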

- 60. Two-Dimensional Histograms. These show the joint distribution of the values of two attributes. Example: petal width and petal length. What does this tell us? Visualization Techniques: Box Plots. Box plots, invented by J. Tukey, are another way of displaying the distribution of data. The following figure shows the basic parts of a box plot: [Figure: box plot marking outliers and the 10th, 25th, 50th, 75th, and 90th percentiles]

- 61. Example of Box Plots. Box plots can be used to compare attributes. Visualization Techniques: Scatter Plots. In a scatter plot, attribute values determine the position of each point. Two-dimensional scatter plots are most common, but there can also be three-dimensional scatter plots. Often, additional attributes can be displayed by using the size, shape, and color of the markers that represent the objects.

- 62. It is useful to have arrays of scatter plots, which can compactly summarize the relationships of several pairs of attributes (see the example on the next slide). Scatter Plot Array of Iris Attributes. Visualization Techniques: Contour Plots. Contour plots are useful when a continuous attribute is measured on a spatial grid. They partition the plane into regions of similar values, and the contour lines that form the boundaries of these regions connect points with equal values. The most common example is contour maps of elevation, but contour plots can also display temperature, rainfall, air pressure, etc. An example for Sea Surface Temperature (SST) is provided on the next slide.
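A Python sketch of the quantities drawn in the box plot figure, assuming the figure's convention that the whiskers end at the 10th and 90th percentiles and that points beyond them are drawn individually (many plotting tools instead default to whiskers at 1.5 times the interquartile range). Percentiles use the nearest-rank convention, and the data are made up.

```python
import math

def percentile(values, p):
    """Nearest-rank percentile: smallest value with at least p% of the data <= it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]

def box_plot_summary(values):
    """Percentiles shown by the box plot, plus the points drawn beyond the whiskers."""
    p10, p25, p50, p75, p90 = (percentile(values, p) for p in (10, 25, 50, 75, 90))
    outside = sorted(v for v in values if v < p10 or v > p90)
    return {"p10": p10, "p25": p25, "median": p50, "p75": p75, "p90": p90,
            "outside": outside}

data = list(range(1, 20)) + [60]   # made-up values with one extreme point
print(box_plot_summary(data))
```

Under this convention, roughly 10% of the data always falls beyond each whisker, so the individually drawn points are not necessarily extreme; here they are 1, 19, and 60.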

- 63. Contour Plot Example: SST Dec, 1998 (Celsius). Visualization Techniques: Matrix Plots. A matrix plot can plot the data matrix, which can be useful when objects are sorted according to class. Typically, the attributes are normalized to prevent one attribute from dominating the plot. Plots of similarity or distance matrices can also be useful for visualizing the relationships between objects. Examples of matrix plots are presented on the next two slides.
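A minimal NumPy sketch of the preprocessing behind matrix plots, assuming a small made-up data matrix whose rows are already sorted by class. Each attribute is standardized (z-scored) so that no attribute dominates, and an attribute correlation matrix and an object-to-object distance matrix are computed. Drawing them, for example as heat maps, is left to whatever plotting library is at hand.

```python
import numpy as np

# Made-up data matrix: rows are objects (sorted by class), columns are attributes.
X = np.array([
    [5.0, 3.4, 1.5, 0.2],
    [4.8, 3.1, 1.6, 0.2],
    [6.4, 3.2, 4.5, 1.5],
    [6.9, 3.1, 4.9, 1.5],
])

# Standardize each attribute (column) so that no attribute dominates because of its scale.
Z = (X - X.mean(axis=0)) / X.std(axis=0)

# Attribute-by-attribute correlation matrix, as in a correlation-matrix plot.
R = np.corrcoef(X, rowvar=False)

# Object-by-object Euclidean distance matrix on the standardized data.
D = np.linalg.norm(Z[:, np.newaxis, :] - Z[np.newaxis, :, :], axis=-1)

print(np.round(R, 2))
print(np.round(D, 2))
```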

- 64. Visualization of the Iris Data Matrix (color scale: standard deviation). Visualization of the Iris Correlation Matrix. Visualization Techniques: Parallel Coordinates. Parallel coordinates are used to plot the attribute values of high-dimensional data. Instead of using perpendicular axes, they use a set of parallel axes. The attribute values of each object are plotted as a point on each corresponding coordinate axis, and the points are connected by a line, so each object is represented as a line. Often, the lines representing a distinct class of objects group together, at least for some attributes.

- 65. The ordering of attributes is important in seeing such groupings. Parallel Coordinates Plots for Iris Data. Other Visualization Techniques. Star plots: a similar approach to parallel coordinates, but the axes radiate from a central point, and the line connecting the values of an object is a polygon. Chernoff faces: an approach created by Herman Chernoff that associates each attribute with a characteristic of a face. The values of each attribute determine the appearance of the corresponding facial characteristic, so each object becomes a separate face. This relies on humans’ ability to distinguish faces.

- 66. Star Plots for Iris Data (Setosa, Versicolour, Virginica). Chernoff Faces for Iris Data (Setosa, Versicolour, Virginica).

- 67. OLAP. On-Line Analytical Processing (OLAP) was proposed by E. F. Codd, the father of the relational database. Relational databases put data into tables, while OLAP uses a multidimensional array representation. Such representations of data previously existed in statistics and other fields. There are a number of data analysis and data exploration operations that are easier with such a data representation. Creating a Multidimensional Array. There are two key steps in converting tabular data into a multidimensional array. First, identify which attributes are to be the dimensions and which attribute is to be the target attribute whose values appear as entries in the multidimensional array. The attributes used as dimensions must have discrete values. The target value is typically a count or continuous value, e.g., the cost of an item; there can also be no target variable at all except the count of objects that have the same set of attribute values.

- 68. Second, find the value of each entry in the multidimensional array by summing the values (of the target attribute) or the count of all objects that have the attribute values corresponding to that entry. Example: Iris data. We show how the attributes petal length, petal width, and species type can be converted to a multidimensional array. First, we discretized the petal width and length to have categorical values: low, medium, and high. We get the following table; note the count attribute. Example: Iris data (continued). Each unique tuple of petal width, petal length, and species type identifies one element of the array. This element is assigned the corresponding count value. The figure illustrates the result.

- 69. All non-specified tuples are 0. Example: Iris data (continued). Slices of the multidimensional array are shown by the following cross-tabulations. What do these tables tell us? OLAP Operations: Data Cube. The key operation of OLAP is the formation of a data cube. A data cube is a multidimensional representation of data, together with all possible aggregates. By all possible aggregates, we mean the aggregates that result by selecting a proper subset of the dimensions and summing over all remaining dimensions. For example, if we choose the species type dimension of the Iris data and sum over all other dimensions, the result will be a one-dimensional array with three entries, each of which gives the number of flowers of each type.

- 70. Data Cube Example. Consider a data set that records the sales of products at a number of company stores at various dates. This data can be represented as a three-dimensional array. There are three two-dimensional aggregates ($\binom{3}{2} = 3$), three one-dimensional aggregates, and one zero-dimensional aggregate (the overall total). Data Cube Example (continued). The following table shows one of the two-dimensional aggregates, along with two of the one-dimensional aggregates and the overall total.

- 71. OLAP Operations: Slicing and Dicing. Slicing is selecting a group of cells from the entire multidimensional array by specifying a specific value for one or more dimensions. Dicing involves selecting a subset of cells by specifying a range of attribute values; this is equivalent to defining a subarray from the complete array. In practice, both operations can also be accompanied by aggregation over some dimensions. OLAP Operations: Roll-up and Drill-down. Attribute values often have a hierarchical structure. Each date is associated with a year, month, and week. A location is associated with a continent, country, state (province, etc.), and city. Products can be divided into various categories, such as clothing, electronics, and furniture. Note that these categories often nest and form a tree or lattice: a year contains months, which contain days, and a country contains states, which contain cities.

- 72. OLAP Operations: Roll-up and Drill-down (continued). This hierarchical structure gives rise to the roll-up and drill-down operations. For sales data, we can aggregate (roll up) the sales across all the dates in a month. Conversely, given a view of the data where the time dimension is broken into months, we could split the monthly sales totals (drill down) into daily sales totals. Likewise, we can drill down or roll up on the location or product ID attributes. Data Mining Classification: Basic Concepts and Techniques. Lecture Notes for Chapter 3

- 73. Introduction to Data Mining, 2nd Edition, by Tan, Steinbach, Karpatne, Kumar (02/03/2020). Classification: Definition. Given a collection of records (training set), each record is characterized by a tuple $(x, y)$, where $x$ is the attribute set and $y$ is the class label. Task: learn a model that maps each attribute set $x$ into one of the predefined class labels $y$. Examples of Classification Task:

| Task | Attribute set, x | Class label, y |
|---|---|---|
| Categorizing email messages | Features extracted from email message header and content | spam or non-spam |
| Identifying tumor cells | Features extracted from x-rays or MRI scans | malignant or benign cells |
| Cataloging galaxies | Features extracted from telescope images | elliptical, spiral, or irregular-shaped galaxies |

- 74. General Approach for Building Classification Model. A model is learned from the training set (records with known class labels) and then applied to the test set (records whose class label, shown as ?, is unknown).

Training set:

| Tid | Attrib1 | Attrib2 | Attrib3 | Class |
|---|---|---|---|---|
| 1 | Yes | Large | 125K | No |
| 2 | No | Medium | 100K | No |
| 3 | No | Small | 70K | No |
| 4 | Yes | Medium | 120K | No |
| 5 | No | Large | 95K | Yes |
| 6 | No | Medium | 60K | No |
| 7 | Yes | Large | 220K | No |
| 8 | No | Small | 85K | Yes |
| 9 | No | Medium | 75K | No |
| 10 | No | Small | 90K | Yes |

Test set:

| Tid | Attrib1 | Attrib2 | Attrib3 | Class |
|---|---|---|---|---|
| 11 | No | Small | 55K | ? |
| 12 | Yes | Medium | 80K | ? |
| 13 | Yes | Large | 110K | ? |
| 14 | No | Small | 95K | ? |
| 15 | No | Large | 67K | ? |

- 76. Classification Techniques. Base classifiers: decision tree based methods, rule-based methods, nearest-neighbor, neural networks, deep learning, naïve Bayes and Bayesian belief networks, and support vector machines. Ensemble classifiers: boosting, bagging, and random forests.

- 77. Example of a Decision Tree. Training data:

| ID | Home Owner | Marital Status | Annual Income | Defaulted Borrower |
|---|---|---|---|---|
| 1 | Yes | Single | 125K | No |
| 2 | No | Married | 100K | No |
| 3 | No | Single | 70K | No |
| 4 | Yes | Married | 120K | No |
| 5 | No | Divorced | 95K | Yes |
| 6 | No | Married | 60K | No |
| 7 | Yes | Divorced | 220K | No |
| 8 | No | Single | 85K | Yes |
| 9 | No | Married | 75K | No |
| 10 | No | Single | 90K | Yes |

- 78. Model: decision tree, with splitting attributes Home Owner, MarSt, and Income. Home Owner = Yes → NO; Home Owner = No → MarSt. MarSt = Married → NO; MarSt = Single or Divorced → Income. Income < 80K → NO; Income > 80K → YES.

- 79. Another Example of Decision Tree, fit to the same training data. MarSt = Married → NO; MarSt = Single or Divorced → Home Owner. Home Owner = Yes → NO; Home Owner = No → Income. Income < 80K → NO; Income > 80K → YES. There could be more than one tree that fits the same data!

- 80. Apply Model to Test Data. Using the first tree (Home Owner at the root), classify the following test record, starting from the root of the tree:

| Home Owner | Marital Status | Annual Income | Defaulted Borrower |
|---|---|---|---|
| No | Married | 80K | ? |

- 84. Apply Model to Test Data (continued). At each node, follow the branch that matches the test record: Home Owner = No leads to the MarSt node, and MarSt = Married leads to a leaf labeled NO, so Annual Income is never tested. Assign Defaulted to “No”.

- 87. Decision Tree Classification Task. This is the general approach for building a classification model, with a decision tree as the learned model: the tree is learned from the training set (Tid 1-10) and then applied to the test set (Tid 11-15).
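A minimal Python sketch of applying the first decision tree above (Home Owner at the root) as nested conditions. The dictionary keys `home_owner`, `marital_status`, and `annual_income` are hypothetical names chosen for this example, and because the slides only write "< 80K" and "> 80K", sending an income of exactly 80K down the "< 80K" branch is an assumption.

```python
def classify(record):
    """Apply the first decision tree from the slides; annual_income is in thousands."""
    if record["home_owner"] == "Yes":
        return "No"                            # Home Owner = Yes -> leaf NO
    if record["marital_status"] == "Married":
        return "No"                            # MarSt = Married -> leaf NO
    # MarSt = Single or Divorced -> test Annual Income.
    # Assumption: an income of exactly 80K follows the "< 80K" branch.
    return "No" if record["annual_income"] <= 80 else "Yes"

# Loan training data: (Home Owner, Marital Status, Annual Income in K, Defaulted Borrower)
training = [("Yes", "Single", 125, "No"), ("No", "Married", 100, "No"),
            ("No", "Single", 70, "No"), ("Yes", "Married", 120, "No"),
            ("No", "Divorced", 95, "Yes"), ("No", "Married", 60, "No"),
            ("Yes", "Divorced", 220, "No"), ("No", "Single", 85, "Yes"),
            ("No", "Married", 75, "No"), ("No", "Single", 90, "Yes")]

correct = sum(
    classify({"home_owner": h, "marital_status": m, "annual_income": inc}) == label
    for h, m, inc, label in training
)
print(f"training accuracy: {correct}/{len(training)}")   # 10/10

test_record = {"home_owner": "No", "marital_status": "Married", "annual_income": 80}
print(classify(test_record))                              # No
```

The tree classifies all ten training records correctly and, as in the walk-through, assigns Defaulted = No to the test record without ever looking at its income.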

- 89. Decision Tree Induction. Many algorithms exist: Hunt’s Algorithm (one of the earliest), CART, ID3, C4.5, SLIQ, and SPRINT. General Structure of Hunt’s Algorithm. Let $D_t$ be the set of training records that reach a node $t$. General procedure: if $D_t$ contains records that all belong to the same class $y_t$, then $t$ is a leaf node labeled as $y_t$; if $D_t$ contains records that belong to more than one class, use an attribute test to split the data into smaller subsets, and recursively apply the procedure to each subset.

- 91. Hunt’s Algorithm, applied to the loan training data above. Each pair gives the number of records with Defaulted = No and with Defaulted = Yes at a node. The tree starts as a single leaf, Defaulted = No (7,3). Splitting on Home Owner gives Yes: Defaulted = No (3,0) and No: Defaulted = No (4,3). The Home Owner = No node is then split on Marital Status: Married gives Defaulted = No (3,0), and Single or Divorced gives Defaulted = Yes (1,3). Finally, the Single or Divorced node is split on Annual Income: < 80K gives Defaulted = No (1,0), and ≥ 80K gives Defaulted = Yes (0,3).

- 97. Design Issues of Decision Tree Induction. How should training records be split? This requires a method for specifying the test condition and a measure for evaluating the goodness of a test condition. How should the splitting procedure stop? Stop splitting if all the records belong to the same class or have identical attribute values, or use early termination. Methods for Expressing Test Conditions. These depend on the attribute type (binary, nominal, ordinal, or continuous) and on the number of ways to split (2-way split or multi-way split).

- 98. Test Condition for Nominal Attributes. Multi-way split: use as many partitions as distinct values. Binary split: divide the values into two subsets. Test Condition for Ordinal Attributes. Multi-way split: use as many partitions as distinct values. Binary split: divide the values into two subsets while preserving the order property among attribute values; the figure shows a grouping that violates the order property.

- 99. Test Condition for Continuous Attributes. Splitting Based on Continuous Attributes. There are different ways of handling continuous attributes. One is discretization to form an ordinal categorical attribute: ranges can be found by equal interval bucketing, equal frequency bucketing (percentiles), or clustering, and the discretization can be done once at the beginning or repeated at each node.
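A compact Python sketch of Hunt’s recursive procedure under stated assumptions: the candidate attribute tests are hand-written and hypothetical (one binary test per attribute, mirroring the splits in the slides), each test is used at most once on a path, and at each node the sketch picks the test with the lowest weighted Gini index, a criterion introduced in the following slides. Real algorithms such as CART or C4.5 search over many more candidate splits.

```python
from collections import Counter

def gini(labels):
    """Gini index of a list of class labels."""
    n = len(labels)
    return 1 - sum((count / n) ** 2 for count in Counter(labels).values())

def partition(records, labels, test):
    """Group records (and their labels) by the outcome of an attribute test."""
    groups = {}
    for record, label in zip(records, labels):
        recs, labs = groups.setdefault(test(record), ([], []))
        recs.append(record)
        labs.append(label)
    return groups

def hunt(records, labels, tests):
    """Return a leaf label or a nested dict {test_name: {outcome: subtree}}."""
    majority = Counter(labels).most_common(1)[0][0]
    if len(set(labels)) == 1 or not tests:
        return majority                       # pure node, or no tests left: make a leaf
    best = None
    for name, test in tests.items():
        groups = partition(records, labels, test)
        if len(groups) < 2:
            continue                          # this test does not separate the records
        score = sum(len(labs) / len(labels) * gini(labs) for _, labs in groups.values())
        if best is None or score < best[0]:
            best = (score, name, groups)
    if best is None:
        return majority                       # identical attribute values: stop here
    _, name, groups = best
    remaining = {k: t for k, t in tests.items() if k != name}
    return {name: {outcome: hunt(recs, labs, remaining)
                   for outcome, (recs, labs) in groups.items()}}

# Loan training data: (Home Owner, Marital Status, Annual Income in K) and Defaulted.
records = [("Yes", "Single", 125), ("No", "Married", 100), ("No", "Single", 70),
           ("Yes", "Married", 120), ("No", "Divorced", 95), ("No", "Married", 60),
           ("Yes", "Divorced", 220), ("No", "Single", 85), ("No", "Married", 75),
           ("No", "Single", 90)]
labels = ["No", "No", "No", "No", "Yes", "No", "No", "Yes", "No", "Yes"]

# Hypothetical candidate tests, one per attribute.
tests = {
    "Home Owner": lambda r: r[0],
    "Marital Status": lambda r: "Married" if r[1] == "Married" else "Single/Divorced",
    "Income <= 80K": lambda r: r[2] <= 80,
}

tree = hunt(records, labels, tests)
print(tree)
```

With the Gini criterion, the sketch picks Marital Status at the root (weighted Gini 0.3, versus about 0.343 for Home Owner and for Income), so it grows the tree from "Another Example of Decision Tree" rather than the Home Owner-first tree of the Hunt’s Algorithm illustration; as the slides note, both fit the training data.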

- 100. How to Determine the Best Split. Before splitting, there are 10 records of class 0 and 10 records of class 1. Which test condition is the best? How to Determine the Best Split (continued). Greedy approach: nodes with a purer class distribution are preferred. This requires a measure of node impurity: a node with a mixed class distribution has a high degree of impurity, while a node dominated by one class has a low degree of impurity.

- 101. Measures of Node Impurity. Gini index: $\text{Gini Index} = 1 - \sum_{i=0}^{c-1} p_i(t)^2$. Entropy: $\text{Entropy} = -\sum_{i=0}^{c-1} p_i(t)\log_2 p_i(t)$. Misclassification error: $\text{Classification error} = 1 - \max_i[p_i(t)]$. Here $p_i(t)$ is the frequency of class $i$ at node $t$, and $c$ is the total number of classes. Finding the Best Split. (1) Compute the impurity measure (P) before splitting. (2) Compute the impurity measure (M) after splitting: compute the impurity measure of each child node, and let M be the weighted impurity of the child nodes. (3) Choose the attribute test condition that produces the highest gain, Gain = P - M, or equivalently, the lowest impurity measure after splitting (M).
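A minimal Python sketch of the three impurity measures and of Gain = P - M, computed from the per-class record counts at a node. The function names are illustrative, and `gain` assumes that the children partition the parent's records.

```python
import math

def gini(counts):
    """Gini index of a node from its per-class record counts."""
    n = sum(counts)
    return 1 - sum((c / n) ** 2 for c in counts)

def entropy(counts):
    """Entropy in bits; p * log2(1/p) equals -p * log2(p), and empty classes contribute 0."""
    n = sum(counts)
    return sum((c / n) * math.log2(n / c) for c in counts if c > 0)

def classification_error(counts):
    """1 minus the frequency of the majority class."""
    return 1 - max(counts) / sum(counts)

def gain(parent, children, measure):
    """Gain = P - M, where M weights each child's impurity by its share of the records."""
    n = sum(parent)
    m = sum(sum(child) / n * measure(child) for child in children)
    return measure(parent) - m

for counts in ([0, 6], [1, 5], [2, 4], [3, 3]):
    print(counts, round(gini(counts), 3), round(entropy(counts), 3),
          round(classification_error(counts), 3))

# One candidate split of a parent with 7 records of class C1 and 3 of class C2.
print(gain([7, 3], [[3, 0], [4, 3]], gini))
```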

- 102. Finding the Best Split (example). Before splitting, the parent node has class counts C0: N00 and C1: N01, with impurity P. Test A? splits it into Node N1 (C0: N10, C1: N11) and Node N2 (C0: N20, C1: N21), with child impurities M11 and M12 and weighted impurity M1. Test B? splits it into Node N3 (C0: N30, C1: N31) and Node N4 (C0: N40, C1: N41), with child impurities M21 and M22 and weighted impurity M2. Compare Gain = P - M1 versus P - M2.

- 103. Measure of Impurity: GINI. Gini index for a given node $t$: $\text{Gini Index} = 1 - \sum_{i=0}^{c-1} p_i(t)^2$, where $p_i(t)$ is the frequency of class $i$ at node $t$, and $c$ is the total number of classes. It reaches a maximum of $1 - 1/c$ when records are equally distributed among all classes, implying the least beneficial situation for classification, and a minimum of 0 when all records belong to one class, implying the most beneficial situation for classification.

- 104. Measure of Impurity: GINI (continued). Gini index for a given node $t$: $\text{Gini Index} = 1 - \sum_{i=0}^{c-1} p_i(t)^2$. For a 2-class problem with class frequencies $(p, 1-p)$: $\text{GINI} = 1 - p^2 - (1-p)^2 = 2p(1-p)$. Examples for nodes with six records: C1 = 0, C2 = 6 gives Gini = 0.000; C1 = 2, C2 = 4 gives Gini = 0.444; C1 = 3, C2 = 3 gives Gini = 0.500; C1 = 1, C2 = 5 gives Gini = 0.278.

- 105. Computing Gini Index of a Single Node, with $\text{Gini Index} = 1 - \sum_{i=0}^{c-1} p_i(t)^2$. For C1 = 0, C2 = 6: P(C1) = 0/6 = 0, P(C2) = 6/6 = 1, and Gini = $1 - P(C1)^2 - P(C2)^2 = 1 - 0 - 1 = 0$. For C1 = 1, C2 = 5: P(C1) = 1/6, P(C2) = 5/6, and Gini = $1 - (1/6)^2 - (5/6)^2 = 0.278$. For C1 = 2, C2 = 4: P(C1) = 2/6, P(C2) = 4/6, and Gini = $1 - (2/6)^2 - (4/6)^2 = 0.444$.

- 106. Computing Gini Index for a Collection of Nodes. When a node $p$ is split into $k$ partitions (children), $GINI_{split} = \sum_{i=1}^{k} \frac{n_i}{n} GINI(i)$, where $n_i$ is the number of records at child $i$ and $n$ is the number of records at parent node $p$. Choose the attribute that minimizes the weighted average Gini index of the children. The Gini index is used in decision tree algorithms such as CART, SLIQ, and SPRINT. Binary Attributes: Computing GINI Index. A binary attribute splits the records into two partitions (child nodes). Effect of weighting partitions: larger and purer partitions are sought. Example: test B? sends records to Node N1 (Yes) or Node N2 (No).

- 107. The parent has C1 = 7 and C2 = 5, so Gini = 0.486. N1 has C1 = 5, C2 = 1, and N2 has C1 = 2, C2 = 4. Gini(N1) = $1 - (5/6)^2 - (1/6)^2 = 0.278$ and Gini(N2) = $1 - (2/6)^2 - (4/6)^2 = 0.444$. The weighted Gini of N1 and N2 is $6/12 \times 0.278 + 6/12 \times 0.444 = 0.361$, so Gain = 0.486 - 0.361 = 0.125.
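The binary attribute example above, sketched in Python with the children stored as a count matrix (one row of class counts per child node). `split_gini` is an illustrative name, not a library function.

```python
def gini(counts):
    """Gini index of a node from its per-class record counts."""
    n = sum(counts)
    return 1 - sum((c / n) ** 2 for c in counts)

def split_gini(count_matrix):
    """Weighted Gini of the children; count_matrix[i] holds the class counts of child i."""
    n = sum(sum(child) for child in count_matrix)
    return sum(sum(child) / n * gini(child) for child in count_matrix)

parent = [7, 5]             # C1, C2 before splitting on B?
children = [[5, 1],         # Node N1 (B? = Yes)
            [2, 4]]         # Node N2 (B? = No)

parent_gini = gini(parent)
children_gini = split_gini(children)
print(round(parent_gini, 3), round(children_gini, 3), round(parent_gini - children_gini, 3))
```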

- 108. Categorical Attributes: Computing Gini Index. For each distinct value, gather counts for each class in the dataset, and use the count matrix to make decisions. Multi-way split:

| CarType | Family | Sports | Luxury |
|---|---|---|---|
| C1 | 1 | 8 | 1 |
| C2 | 3 | 0 | 7 |

Gini = 0.163. Two-way split (find the best partition of values):

| CarType | {Sports, Luxury} | {Family} |
|---|---|---|
| C1 | 9 | 1 |
| C2 | 7 | 3 |

Gini = 0.468.

| CarType | {Sports} | {Family, Luxury} |
|---|---|---|
| C1 | 8 | 2 |
| C2 | 0 | 10 |

Gini = 0.167. Which of these is the best?

- 109. Continuous Attributes: Computing Gini Index. Use binary decisions based on one value. There are several choices for the splitting value: the number of possible splitting values equals the number of distinct values. Each splitting value has a count matrix associated with it, i.e., the class counts in each of the partitions, $A \le v$ and $A > v$. A simple method to choose the best $v$ is, for each $v$, to scan the database to gather the count matrix and compute its Gini index. This is computationally inefficient because it repeats work.

- 110. Example: splitting the loan training data above on Annual Income at 80K.

| Annual Income | ≤ 80 | > 80 |
|---|---|---|
| Defaulted = Yes | 0 | 3 |
| Defaulted = No | 3 | 4 |
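A Python sketch of the search the categorical slide poses ("find the best partition of values"): it scores the multi-way split and every two-way grouping of the CarType values with the weighted Gini index. Enumerating all groupings is only practical for a few distinct values, since $k$ values have $2^{k-1} - 1$ two-way groupings. The helper names are illustrative.

```python
from itertools import combinations

def gini(counts):
    n = sum(counts)
    return 1 - sum((c / n) ** 2 for c in counts)

def split_gini(groups):
    """Weighted Gini of a split; each group is a list of per-class counts."""
    n = sum(sum(g) for g in groups)
    return sum(sum(g) / n * gini(g) for g in groups)

# (C1, C2) counts for each CarType value, from the multi-way count matrix above.
counts = {"Family": (1, 3), "Sports": (8, 0), "Luxury": (1, 7)}

def merged(values):
    """Class counts of a group of attribute values."""
    return [sum(counts[v][i] for v in values) for i in range(2)]

multiway = split_gini([merged([v]) for v in counts])

names = list(counts)
binary, seen = [], set()
for r in range(1, len(names)):
    for left in combinations(names, r):
        right = tuple(v for v in names if v not in left)
        key = frozenset([left, right])
        if key in seen:                  # {A} | {B, C} and {B, C} | {A} are the same split
            continue
        seen.add(key)
        binary.append((left, right, split_gini([merged(left), merged(right)])))

best = min(binary, key=lambda split: split[2])
for left, right, score in binary:
    print(set(left), "vs", set(right), round(score, 3))
print("multi-way", round(multiway, 3), "best two-way:", best)
```

The best two-way grouping is {Sports} vs {Family, Luxury} with a weighted Gini of 1/6 (about 0.167); the multi-way split scores 0.1625, shown as 0.163 on the slide.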

- 111. Continuous Attributes: Computing Gini Index (continued). For efficient computation, for each attribute: sort the attribute on values; linearly scan these values, each time updating the count matrix and computing the Gini index; and choose the split position that has the least Gini index. Sorted values of Annual Income, with class Cheat: 60 (No), 70 (No), 75 (No), 85 (Yes), 90 (Yes), 95 (Yes), 100 (No), 120 (No), 125 (No), 220 (No).

| Split position | Yes (≤) | Yes (>) | No (≤) | No (>) | Gini |
|---|---|---|---|---|---|
| 55 | 0 | 3 | 0 | 7 | 0.420 |
| 65 | 0 | 3 | 1 | 6 | 0.400 |
| 72 | 0 | 3 | 2 | 5 | 0.375 |
| 80 | 0 | 3 | 3 | 4 | 0.343 |
| 87 | 1 | 2 | 3 | 4 | 0.417 |
| 92 | 2 | 1 | 3 | 4 | 0.400 |
| 97 | 3 | 0 | 3 | 4 | 0.300 |
| 110 | 3 | 0 | 4 | 3 | 0.343 |
| 122 | 3 | 0 | 5 | 2 | 0.375 |
| 172 | 3 | 0 | 6 | 1 | 0.400 |
| 230 | 3 | 0 | 7 | 0 | 0.420 |
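A Python sketch of the sort-then-scan procedure on the Annual Income data: after one sort, each step moves a single record to the $A \le v$ side and updates the counts incrementally instead of rescanning the database. As assumptions of this sketch, candidate positions are exact midpoints between adjacent distinct values (so 97.5 where the slide shows 97), and the two end positions, which leave one side empty, are skipped.

```python
def gini(yes, no):
    """Gini index of a two-class node given its class counts."""
    n = yes + no
    return 0.0 if n == 0 else 1 - (yes / n) ** 2 - (no / n) ** 2

def scan_splits(values, labels, positive="Yes"):
    """Sort once, then sweep left to right, updating the counts of the A <= v side."""
    pairs = sorted(zip(values, labels))
    n = len(pairs)
    total_yes = sum(label == positive for _, label in pairs)
    left_yes = left_n = 0
    results = []
    for i in range(n - 1):
        value, label = pairs[i]
        left_n += 1
        left_yes += int(label == positive)
        next_value = pairs[i + 1][0]
        if next_value == value:
            continue                           # no split position between equal values
        split = (value + next_value) / 2       # midpoint between adjacent sorted values
        right_n, right_yes = n - left_n, total_yes - left_yes
        weighted = ((left_n / n) * gini(left_yes, left_n - left_yes)
                    + (right_n / n) * gini(right_yes, right_n - right_yes))
        results.append((split, weighted))
    return min(results, key=lambda r: r[1]), results

income = [125, 100, 70, 120, 95, 60, 220, 85, 75, 90]            # Annual Income (K)
defaulted = ["No", "No", "No", "No", "Yes", "No", "No", "Yes", "No", "Yes"]
best, all_splits = scan_splits(income, defaulted)
print(best)   # (split position, weighted Gini) of the best split
```

The minimum falls between 95 and 100 (at the midpoint 97.5) with a weighted Gini of 0.3, matching the best position in the table.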

(Figure labels: Split Positions, Sorted Values)

02/03/2020 Introduction to Data Mining, 2nd Edition

Measure of Impurity: Entropy
- Entropy at a given node $t$:
  $$\text{Entropy}(t) = -\sum_{i=0}^{c-1} p_i(t) \log_2 p_i(t)$$
  where $p_i(t)$ is the frequency of class $i$ at node $t$, and $c$ is the total number of classes.
  - Maximum of $\log_2 c$ when records are equally distributed among all classes, implying the least beneficial situation for classification
  - Minimum of 0 when all records belong to one class, implying the most beneficial situation for classification
- Entropy-based computations are quite similar to the Gini index computations.

Computing Entropy of a Single Node
- C1 = 0, C2 = 6: P(C1) = 0/6 = 0, P(C2) = 6/6 = 1; Entropy = −0 log2 0 − 1 log2 1 = −0 − 0 = 0 (taking 0 log2 0 = 0)
- C1 = 1, C2 = 5: P(C1) = 1/6, P(C2) = 5/6; Entropy = −(1/6) log2 (1/6) − (5/6) log2 (5/6) = 0.65
- C1 = 2, C2 = 4: P(C1) = 2/6, P(C2) = 4/6; Entropy = −(2/6) log2 (2/6) − (4/6) log2 (4/6) = 0.92

Computing Information Gain After Splitting
- Information gain:
  $$\text{Gain}_{\text{split}} = \text{Entropy}(p) - \sum_{i=1}^{k} \frac{n_i}{n} \text{Entropy}(i)$$
  where parent node $p$, with $n$ records, is split into $k$ partitions (children), and $n_i$ is the number of records in child node $i$.
  - Choose the split that achieves the most reduction (maximizes GAIN).
  - Used in the ID3 and C4.5 decision tree algorithms
  - Information gain is the mutual information between the class variable and the splitting variable.

Problem with large number of partitions
- Node impurity measures tend to prefer splits that result in a large number of partitions, each being small but pure.
  - Customer ID has the highest information gain because the entropy of all the children is zero.

Gain Ratio
- Gain ratio:
  $$\text{Gain Ratio} = \frac{\text{Gain}_{\text{split}}}{\text{SplitINFO}}, \qquad \text{SplitINFO} = -\sum_{i=1}^{k} \frac{n_i}{n} \log_2 \frac{n_i}{n}$$
  where parent node $p$ is split into $k$ partitions (children), and $n_i$ is the number of records in child node $i$.
  - Adjusts information gain by the entropy of the partitioning (SplitINFO). Higher-entropy partitioning (a large number of small partitions) is penalized!
  - Used in the C4.5 algorithm
  - Designed to overcome the disadvantage of information gain

Gain Ratio: example (20 records split on CarType)

| CarType partitions | C1 counts | C2 counts | Gini | SplitINFO |
|---|---|---|---|---|
| {Sports, Luxury} / {Family} | 9 / 1 | 7 / 3 | 0.468 | 0.72 |
| {Sports} / {Family, Luxury} | 8 / 2 | 0 / 10 | 0.167 | 0.97 |
| Family / Sports / Luxury | 1 / 8 / 1 | 3 / 0 / 7 | 0.163 | 1.52 |

Measure of Impurity: Classification Error
- Classification error at a node $t$:
  $$\text{Error}(t) = 1 - \max_i [p_i(t)]$$
  - Maximum of $1 - 1/c$ when records are equally distributed among all classes, implying the least interesting situation
  - Minimum of 0 when all records belong to one class, implying the most interesting situation

Computing Error of a Single Node
- C1 = 0, C2 = 6: P(C1) = 0/6 = 0, P(C2) = 6/6 = 1; Error = 1 − max(0, 1) = 1 − 1 = 0
- C1 = 1, C2 = 5: P(C1) = 1/6, P(C2) = 5/6; Error = 1 − max(1/6, 5/6) = 1 − 5/6 = 1/6
- C1 = 2, C2 = 4: P(C1) = 2/6, P(C2) = 4/6; Error = 1 − max(2/6, 4/6) = 1 − 4/6 = 1/3

Comparison among Impurity Measures
- For a 2-class problem: (figure comparing the impurity measures)

Misclassification Error vs Gini Index
- Test A? splits the parent (C1 = 7, C2 = 3, Gini = 0.42) into node N1 (Yes: C1 = 3, C2 = 0) and node N2 (No: C1 = 4, C2 = 3).
  - Gini(N1) = 1 − (3/3)² − (0/3)² = 0
  - Gini(N2) = 1 − (4/7)² − (3/7)² = 0.489
  - Gini(Children) = 3/10 × 0 + 7/10 × 0.489 = 0.342
- Gini improves, but the misclassification error remains the same (0.3 before and after the split)!

Misclassification Error vs Gini Index (continued)
- Parent: C1 = 7, C2 = 3, Gini = 0.42
- Split 1: N1 (C1 = 3, C2 = 0), N2 (C1 = 4, C2 = 3), Gini = 0.342
- Split 2: N1 (C1 = 3, C2 = 1), N2 (C1 = 4, C2 = 2), Gini = 0.416
- Misclassification error for all three cases = 0.3!

Decision Tree Based Classification
- Advantages:
  - Inexpensive to construct
  - Extremely fast at classifying unknown records
  - Easy to interpret for small-sized trees
  - Robust to noise (especially when methods to avoid overfitting are employed)
  - Can easily handle redundant or irrelevant attributes (unless the attributes are interacting)
- Disadvantages:
  - The space of possible decision trees is exponentially large. Greedy approaches are often unable to find the best tree.
  - Does not take into account interactions between attributes
  - Each decision boundary involves only a single attribute

Handling interactions
- (Figure: + and o instances plotted over attributes X and Y)
- Class +: 1000 instances; class o: 1000 instances
- Entropy (X): 0.99; Entropy (Y): 0.99
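
The impurity and split-evaluation arithmetic in these slides can be checked with a short script. This is a minimal sketch in plain Python (standard library only). The function names (`entropy`, `gini`, `classification_error`, `weighted_impurity`, `information_gain`, `split_info`, `gain_ratio`) are illustrative and do not come from the book or any library. A node is a list of class counts such as `[C1, C2]`, and a split is a list of such lists. The CarType parent counts `[10, 10]` are read off the example table.

```python
from math import log2


def _plogp_sum(fractions):
    """Return -sum(f * log2 f), skipping zero fractions (0 * log2 0 is taken as 0)."""
    return sum(-f * log2(f) for f in fractions if f > 0)


def entropy(counts):
    """Entropy(t) of a node, given its class counts, e.g. [C1, C2]."""
    n = sum(counts)
    return _plogp_sum(c / n for c in counts)


def gini(counts):
    """Gini index: 1 minus the sum of squared class frequencies."""
    n = sum(counts)
    return 1.0 - sum((c / n) ** 2 for c in counts)


def classification_error(counts):
    """Error(t) = 1 - max_i p_i(t)."""
    return 1.0 - max(counts) / sum(counts)


def weighted_impurity(children, measure):
    """Impurity of a split: sum over children of (n_i / n) * measure(child)."""
    n = sum(sum(child) for child in children)
    return sum(sum(child) / n * measure(child) for child in children)


def information_gain(parent, children):
    """Gain_split = Entropy(parent) - weighted entropy of the children."""
    return entropy(parent) - weighted_impurity(children, entropy)


def split_info(children):
    """SplitINFO: entropy of the partition sizes n_i / n."""
    n = sum(sum(child) for child in children)
    return _plogp_sum(sum(child) / n for child in children)


def gain_ratio(parent, children):
    """Information gain divided by SplitINFO."""
    return information_gain(parent, children) / split_info(children)


# Single nodes from the slides, as [C1, C2] counts
for node in ([0, 6], [1, 5], [2, 4]):
    print(node, round(entropy(node), 2), round(classification_error(node), 3))

# CarType splits: one [C1, C2] list per partition (parent has 10 C1 and 10 C2)
car_type_splits = {
    "{Sports, Luxury} / {Family}": [[9, 7], [1, 3]],
    "{Sports} / {Family, Luxury}": [[8, 0], [2, 10]],
    "Family / Sports / Luxury": [[1, 3], [8, 0], [1, 7]],
}
for name, children in car_type_splits.items():
    print(name,
          round(weighted_impurity(children, gini), 3),
          round(split_info(children), 2),
          round(gain_ratio([10, 10], children), 3))
```

The printed entropy, error, weighted Gini, and SplitINFO values should agree with the slides up to rounding in the last digit. The slides do not list gain-ratio values for CarType, so the last column is only an illustration of how SplitINFO penalizes the three-way split.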

Handling interactions (continued)
- Class +: 1000 instances; class o: 1000 instances
- Adding Z as a noisy attribute generated from a uniform distribution (figure panels plot the data over X, Y, and Z)
- Entropy (X): 0.99; Entropy (Y): 0.99; Entropy (Z): 0.98
- Attribute Z will be chosen for splitting!

Limitations of single attribute-based decision boundaries
- Both positive (+) and negative (o) classes are generated from skewed Gaussians with centers at (8,8) and (12,12), respectively.

02/05/2020 Introduction to Data Mining, 2nd Edition

Data Mining: Model Overfitting

Introduction to Data Mining, 2nd Edition, by Tan, Steinbach, Karpatne, Kumar

Classification Errors
- Training errors (apparent errors): errors committed on the training set
- Test errors: errors committed on the test set
- Generalization errors: expected error of a model over a random selection of records from the same distribution

Example Data Set
- Two-class problem:
  - Class +: 5400 instances
    - 5000 instances generated from a Gaussian centered at (10,10)
    - 400 noisy instances added
  - Class o: 5400 instances
    - Generated from a uniform distribution
- 10% of the data used for training and 90% of the data used for testing

Increasing number of nodes in Decision Trees
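
The example data set above can be re-created roughly as follows. This is only a sketch: the slide gives the class sizes, the Gaussian center, and the 10%/90% split, and nothing else. The standard deviation `sigma`, the square `[low, high]²` used for the uniform draws, and the choice to draw the 400 noisy + instances uniformly as well are all assumptions. The function names are hypothetical.

```python
import random


def make_example_data(seed=0, sigma=1.0, low=0.0, high=20.0):
    """Hypothetical re-creation of the two-class example data set.

    Assumed (not stated on the slide): the Gaussian's standard deviation
    `sigma`, and the square [low, high] x [low, high] used for the uniform
    draws of both the noisy '+' instances and the 'o' class.
    """
    rng = random.Random(seed)
    data = []
    # '+': 5000 instances from a Gaussian centered at (10, 10)
    data += [(rng.gauss(10, sigma), rng.gauss(10, sigma), "+") for _ in range(5000)]
    # '+': 400 noisy instances (assumed uniform over the square)
    data += [(rng.uniform(low, high), rng.uniform(low, high), "+") for _ in range(400)]
    # 'o': 5400 instances from a uniform distribution
    data += [(rng.uniform(low, high), rng.uniform(low, high), "o") for _ in range(5400)]
    return data


def split_train_test(data, train_fraction=0.1, seed=0):
    """Shuffle, then use train_fraction of the records for training and the rest for testing."""
    rng = random.Random(seed)
    shuffled = list(data)
    rng.shuffle(shuffled)
    n_train = round(train_fraction * len(shuffled))
    return shuffled[:n_train], shuffled[n_train:]


data = make_example_data()
train, test = split_train_test(data)
print(len(train), len(test))
```

This yields 1,080 training and 9,720 test records (10% and 90% of 10,800). Fitting trees of increasing size to `train` and scoring them on `test` is one way to produce training- and test-error curves like the ones discussed next.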

Decision Tree with 4 nodes
- (Figure panels: Decision Tree; Decision boundaries on Training data)

Decision Tree with 50 nodes
- (Figure panels: Decision Tree; Decision boundaries on Training data)

Which tree is better?
- (Figure panels: Decision Tree with 4 nodes; Decision Tree with 50 nodes)

Model Overfitting
- Underfitting: when the model is too simple, both training and test errors are large.
- Overfitting: when the model is too complex, training error is small but test error is large.
- As the model becomes more and more complex, test errors can start increasing even though training error may be decreasing.

Model Overfitting
- Using twice the number of data instances
- Increasing the size of the training data reduces the difference between training and testing errors at a given model size.
- (Figure panels: two decision trees with 50 nodes)

Reasons for Model Overfitting
- Limited training size
- High model complexity
  - Multiple comparison procedure

Effect of Multiple Comparison Procedure
- Consider the task of predicting whether the stock market will rise or fall in the next 10 trading days.
- Random guessing: P(correct) = 0.5
- Make 10 random guesses in a row:

| Day | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Guess | Up | Down | Down | Up | Down | Down | Up | Up | Up | Down |

$$P(\#\text{correct} \geq 8) = \frac{\binom{10}{8} + \binom{10}{9} + \binom{10}{10}}{2^{10}} = 0.0547$$

Effect of Multiple Comparison Procedure
- Approach:
  - Get 50 analysts.
  - Each analyst makes 10 random guesses.
  - Choose the analyst that makes the largest number of correct predictions.
- Probability that at least one analyst makes at least 8 correct predictions:

$$P(\#\text{correct} \geq 8) = 1 - (1 - 0.0547)^{50} = 0.9399$$
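
Both probabilities can be checked with a short computation. The sketch below makes the same assumptions as the slides: each guess is correct with probability 0.5 independently of the others, and the 50 analysts guess independently. `p_at_least` is a hypothetical helper name.

```python
from math import comb


def p_at_least(k, n=10, p=0.5):
    """P(at least k correct out of n independent guesses, each correct with probability p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


p_one = p_at_least(8)            # a single analyst making 10 random guesses
p_any = 1 - (1 - p_one) ** 50    # at least one of 50 independent analysts
print(f"{p_one:.4f} {p_any:.4f}")  # prints: 0.0547 0.9399
```

The jump from about 5% for one analyst to about 94% for the best of 50 is the multiple-comparison effect. When you pick the best of many candidates, a chance result can look like skill.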

Effect of Multiple Comparison Procedure
- Many algorithms employ the following greedy strategy:
  - Initial model: $M$
  - Alternative model: $M' = M \cup \gamma$, where $\gamma$ is a component to be added to the model (e.g., a test condition of a decision tree)
  - Keep $M'$ if the improvement $\Delta(M, M') > \alpha$
- If many alternatives are available, one may inadvertently add irrelevant components to the model, resulting in model overfitting.

Effect of Multiple Comparison: Example
- Use an additional 100 noisy variables generated from a uniform distribution, along with X and Y, as attributes.

- Use 30% of the data for training and 70% of the data for testing.
- (Comparison figure panel: using only X and Y as attributes)

Notes on Overfitting
- Overfitting results in decision trees that are more complex than necessary.
- Training error does not provide a good estimate of how well the tree will perform on previously unseen records.
- We need ways of estimating generalization errors.

Model Selection
- Performed during model building
- Its purpose is to ensure that the model is not overly complex (to avoid overfitting).
- Need to estimate the generalization error:
  - Using a validation set
  - Incorporating model complexity
  - Estimating statistical bounds

Model Selection: Using Validation Set
- Divide the training data into two parts:
  - Training set: used for model building
  - Validation set: used for estimating the generalization error
- Drawback:
  - Less data is available for training.

Model Selection: Incorporating Model Complexity
- Rationale: Occam's Razor
  - Given two models with similar generalization errors, one should prefer the simpler model over the more complex model.
  - A complex model has a greater chance of being fitted accidentally.
  - Therefore, one should include model complexity when evaluating a model:
    $$\text{Gen. Error(Model)} = \text{Train. Error(Model, Train. Data)} + \alpha \times \text{Complexity(Model)}$$

Estimating the Complexity of Decision Trees
- Pessimistic error estimate of a decision tree $T$ with $k$ leaf nodes:
  $$e_{\text{gen}}(T) = \text{err}(T) + \Omega \times \frac{k}{N_{\text{train}}}$$
  - err(T): error rate on all training records
  - Ω: trade-off hyper-parameter (similar to α)
  - k: number of leaf nodes
  - N_train: total number of training records

Estimating the Complexity of Decision Trees: Example
- e(T_L) = 4/24, e(T_R) = 6/24, with Ω = 1 (T_L has 7 leaf nodes and T_R has 4):
  - e_gen(T_L) = 4/24 + 1 × 7/24 = 11/24 = 0.458
  - e_gen(T_R) = 6/24 + 1 × 4/24 = 10/24 = 0.417

Estimating the Complexity of Decision Trees
- Resubstitution estimate:
  - Uses the training error as an optimistic estimate of the generalization error
  - Referred to as the optimistic error estimate
- e(T_L) = 4/24, e(T_R) = 6/24

Minimum Description Length (MDL)
- Cost(Model, Data) = Cost(Data|Model) + α × Cost(Model)
  - Cost is the number of bits needed for encoding.
  - Search for the least costly model.
- Cost(Data|Model) encodes the misclassification errors.
- Cost(Model) uses node encoding (number of children) plus splitting condition encoding.
- (Figure: a decision tree with test nodes A?, B?, C?; party A holds records X1, …, Xn with class labels y, while party B holds the same records with unknown labels "?".)

Estimating Statistical Bounds
- Upper bound on the error rate of a node with N records and training error rate e, where α is the confidence level and $z_{\alpha/2}$ is the standardized value from a standard normal distribution:
  $$e'(N, e, \alpha) = \frac{e + \frac{z_{\alpha/2}^2}{2N} + z_{\alpha/2}\sqrt{\frac{e(1-e)}{N} + \frac{z_{\alpha/2}^2}{4N^2}}}{1 + \frac{z_{\alpha/2}^2}{N}}$$
- Before splitting: e = 2/7, e'(7, 2/7, 0.25) = 0.503; total e'(T) = 7 × 0.503 = 3.521
- After splitting:
  - e(T_L) = 1/4, e'(4, 1/4, 0.25) = 0.537
  - e(T_R) = 1/3, e'(3, 1/3, 0.25) = 0.650
  - Total e'(T) = 4 × 0.537 + 3 × 0.650 = 4.098, which is larger than 3.521

- Therefore, do not split.

Model Selection for Decision Trees
- Pre-pruning (early stopping rule)
  - Stop the algorithm before it becomes a fully grown tree.
  - Typical stopping conditions for a node:
    - Stop if all instances belong to the same class.
    - Stop if all the attribute values are the same.
  - More restrictive conditions:
    - Stop if the number of instances is less than some user-specified threshold.
    - Stop if the class distribution of instances is independent of the available features.
    - Stop if expanding the current node does not improve impurity measures (e.g., Gini or information gain).
    - Stop if the estimated generalization error falls below a certain threshold.

Model Selection for Decision Trees
- Post-pruning
  - Grow the decision tree to its entirety.
  - Subtree replacement:
    - Trim the nodes of the decision tree in a bottom-up fashion.
    - If the generalization error improves after trimming, replace the sub-tree by a leaf node.
    - The class label of the leaf node is determined from the majority class of instances in the sub-tree.
  - Subtree raising

Example of Post-Pruning
- Test A? splits a node (Class = Yes: 20, Class = No: 10, Error = 10/30) into children A1, A2, A3, A4 with (Yes, No) counts of (8, 4), (3, 4), (4, 1), and (5, 1).
- Training error (before splitting) = 10/30; pessimistic error = (10 + 0.5)/30 = 10.5/30
- Training error (after splitting) = 9/30; pessimistic error (after splitting) = (9 + 4 × 0.5)/30 = 11/30
- Since 11/30 > 10.5/30: PRUNE!

Examples of Post-pruning
- (figure)
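
The pessimistic error estimate, the statistical-bounds example, and the post-pruning comparison above all come down to a few lines of arithmetic. The sketch below rests on several assumptions. It takes z_{α/2} ≈ 1.15 for α = 0.25, the standard normal value that reproduces the slide's 0.503, 0.537, and 0.650. It writes the bound as the Wilson score upper limit. The function names are hypothetical, not taken from the book.

```python
from math import sqrt


def pessimistic_error(train_errors, n_leaves, n_records, omega):
    """e_gen(T) = err(T) + omega * k / N_train, where err(T) = train_errors / N_train."""
    return (train_errors + omega * n_leaves) / n_records


def error_upper_bound(n, e, z):
    """Upper bound e'(N, e, alpha) on a node's error rate (Wilson score upper limit).

    n: records at the node, e: training error rate,
    z: the standard normal value z_{alpha/2} for the chosen alpha.
    """
    numerator = e + z * z / (2 * n) + z * sqrt(e * (1 - e) / n + z * z / (4 * n * n))
    return numerator / (1 + z * z / n)


# Complexity example: T_L has 4 errors and 7 leaves, T_R has 6 errors and 4 leaves; N = 24, omega = 1
print(round(pessimistic_error(4, 7, 24, 1), 3), round(pessimistic_error(6, 4, 24, 1), 3))  # prints: 0.458 0.417

# Statistical-bound example with alpha = 0.25 (z = 1.15 assumed for z_{0.125})
z = 1.15
before = 7 * error_upper_bound(7, 2 / 7, z)
after = 4 * error_upper_bound(4, 1 / 4, z) + 3 * error_upper_bound(3, 1 / 3, z)
print(round(before, 3), round(after, 3), "split" if after < before else "do not split")

# Post-pruning example: 30 records, penalty of 0.5 per leaf
leaf_error = pessimistic_error(10, 1, 30, 0.5)     # node collapsed to a single leaf
subtree_error = pessimistic_error(9, 4, 30, 0.5)   # subtree with four leaves A1..A4
print("prune" if subtree_error >= leaf_error else "keep subtree")
```

The totals can differ from the slide in the third decimal (about 4.097 here versus 4.098). That is because the slide rounds each per-node bound before multiplying. The decisions ("do not split", "prune") are the same.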

Model Evaluation
- Purpose:
  - To estimate the performance of a classifier on previously unseen data (test set)
- Holdout
  - Reserve k% for training and (100 − k)% for testing
  - Random subsampling: repeated holdout
- Cross-validation
  - Partition the data into k disjoint subsets
  - k-fold: train on k − 1 partitions, test on the remaining one
  - Leave-one-out: k = n

Cross-validation Example
- 3-fold cross-validation (figure)

Variations on Cross-validation
- Repeated cross-validation
  - Perform cross-validation a number of times
  - Gives an estimate of the variance of the generalization error
- Stratified cross-validation
  - Guarantee the same percentage of class labels in training and test
  - Important when classes are imbalanced and the sample is small
- Use a nested cross-validation approach for model selection and evaluation

During quarantine I've been watching my boyfriend play his video games more often lately. The lobbies he's in tend to be pretty mature, and even toxic at times, despite the games usually being targeted at a young audience (Fortnite, for example). Without adult supervision during their gaming, I've noticed that many young children tend to say and do things that they and their parents would consider inappropriate, even if they have been taught better. I would want to see how many children are influenced by having older gamers in their lobby and how this affects their speech and gaming decisions. In this setting, I would be an unacknowledged observer: rather than playing and seeing how children's behavior changes toward older community members, I would simply be observing through my boyfriend's gameplay. According to the text, observing the children without informing them that they are being observed could break some ethical rules, but on