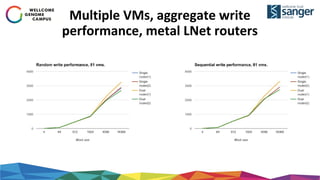

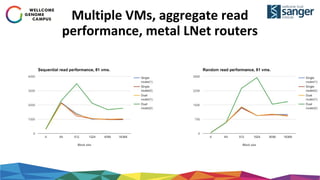

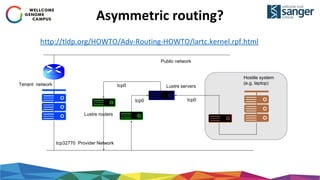

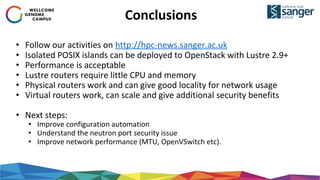

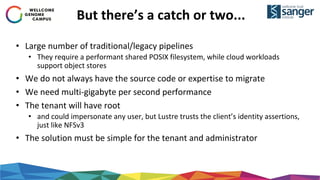

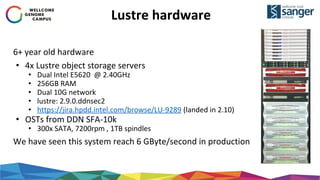

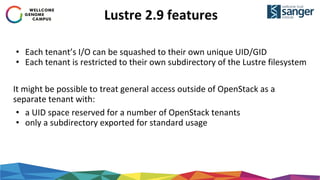

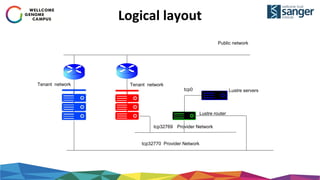

The document discusses the implementation of secure multi-tenant Lustre access in an OpenStack environment, highlighting performance and security considerations. It outlines the critical configuration steps for integrating Lustre filesystems with OpenStack while addressing challenges such as legacy pipelines and the need for high-performance shared storage. Key findings indicate that both physical and virtual Lustre routers can deliver acceptable performance while providing enhanced security and fault isolation for tenants.

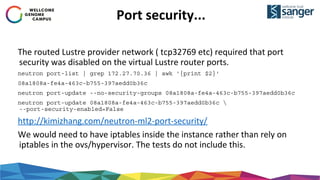

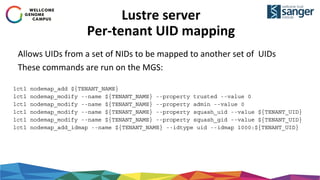

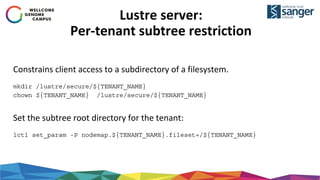

![Slide 11: Lustre server, map nodemap to network](https://image.slidesharecdn.com/wellcome-trust-sanger-sc17-user-group-171213163551/85/Experiences-in-Providing-Secure-Mult-Tenant-Lustre-Access-to-OpenStack-11-320.jpg)

Lustre server: map nodemap to network

Add the tenant network range to the Lustre nodemap:

`lctl nodemap_add_range --name ${TENANT_NAME} --range [0-255].[0-255].[0-255].[0-255]@tcp${TENANT_UID}`

The following command adds a route via a Lustre network router. It is run on all MDS and OSS nodes (or the route is added to `/etc/modprobe.d/lustre.conf`):

`lnetctl route add --net tcp${TENANT_UID} --gateway ${LUSTRE_ROUTER_IP}@tcp`

In the same way, a similar command is needed on each client using TCP.
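The two server-side commands on this slide can be collected into a small dry-run helper. This is only a sketch: it prints the commands rather than executing them, so it can be reviewed on a machine without Lustre installed. The function names and the values assigned to `TENANT_NAME`, `TENANT_UID` and `LUSTRE_ROUTER_IP` are hypothetical placeholders, not taken from the talk. The `options lnet routes=...` line is an assumption about what the `/etc/modprobe.d/lustre.conf` alternative mentioned on the slide might look like; the slide does not show it.

```shell
#!/bin/sh
# Dry-run sketch: prints the commands from the slide instead of running them.
# All concrete values below are hypothetical examples.

# Print the command that adds the tenant network range to the nodemap.
# $1 = tenant name, $2 = tenant UID (used as the LNet tcp network number)
nodemap_range_cmd() {
    printf 'lctl nodemap_add_range --name %s --range [0-255].[0-255].[0-255].[0-255]@tcp%s\n' "$1" "$2"
}

# Print the command that adds a route to the tenant network via the router.
# $1 = tenant UID, $2 = IP address of the Lustre network router
route_cmd() {
    printf 'lnetctl route add --net tcp%s --gateway %s@tcp\n' "$1" "$2"
}

# Assumed persistent form for /etc/modprobe.d/lustre.conf (not shown on the slide).
# $1 = tenant UID, $2 = IP address of the Lustre network router
modprobe_route_line() {
    printf 'options lnet routes="tcp%s %s@tcp"\n' "$1" "$2"
}

TENANT_NAME="tenant1"          # hypothetical tenant name
TENANT_UID="1001"              # hypothetical tenant UID
LUSTRE_ROUTER_IP="192.0.2.10"  # hypothetical router address (documentation range)

nodemap_range_cmd "$TENANT_NAME" "$TENANT_UID"
route_cmd "$TENANT_UID" "$LUSTRE_ROUTER_IP"
modprobe_route_line "$TENANT_UID" "$LUSTRE_ROUTER_IP"
```

Once the printed commands look right, the nodemap line would be run on the Lustre server as described on the slide, and the route line on every MDS and OSS. The client-side counterpart is not reproduced here because the slide does not give it.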