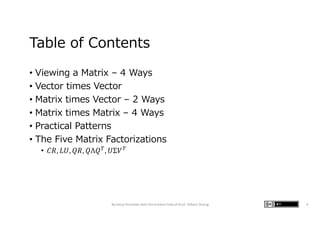

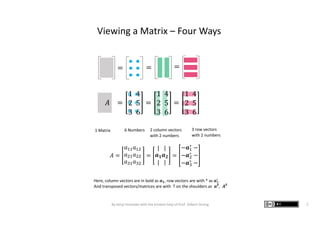

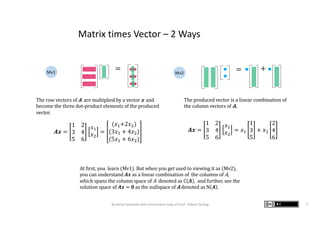

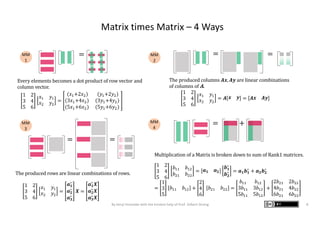

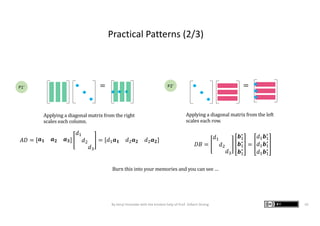

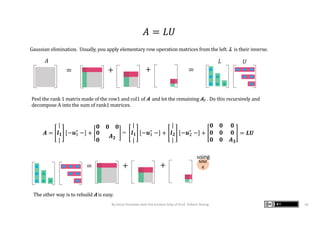

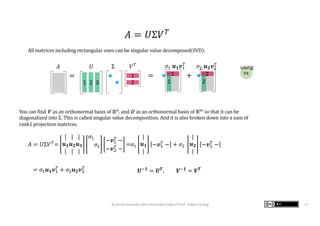

This document presents graphic notes on Professor Gilbert Strang's book 'Linear Algebra for Everyone'. The notes use visual illustrations to build an intuitive understanding of linear algebra. They cover matrix operations, different ways of carrying out matrix multiplication, and several matrix factorizations, including the SVD. The document also links to complementary MIT course materials.
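
As a small illustration of what "different multiplication methods" can mean, the sketch below computes the same product AB in two ways: entry by entry as row-times-column dot products, and as a sum of column-times-row rank-one matrices. The choice of these two viewpoints, the function names `matmul_dot` and `matmul_outer`, and the sample matrices are assumptions made for illustration; they are not taken from the notes themselves.

```python
def matmul_dot(A, B):
    """Row-times-column view: entry (i, j) is row i of A dotted with column j of B."""
    n, m, p = len(A), len(B), len(B[0])
    return [[sum(A[i][k] * B[k][j] for k in range(m)) for j in range(p)]
            for i in range(n)]


def matmul_outer(A, B):
    """Column-times-row view: AB is the sum over k of (column k of A)(row k of B)."""
    n, m, p = len(A), len(B), len(B[0])
    C = [[0] * p for _ in range(n)]
    for k in range(m):
        # Add the rank-one matrix built from column k of A and row k of B.
        for i in range(n):
            for j in range(p):
                C[i][j] += A[i][k] * B[k][j]
    return C


# Hypothetical sample matrices, chosen only to keep the arithmetic easy to check.
A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]

print(matmul_dot(A, B))    # [[19, 22], [43, 50]]
print(matmul_outer(A, B))  # same result, built from two rank-one pieces
```

Both functions perform the same multiplications and only group them differently, which is why the results agree. The column-times-row grouping is the one that connects most directly to factorizations such as the SVD, where a matrix is written as a sum of rank-one pieces.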