This document provides an overview of key linear algebra concepts:

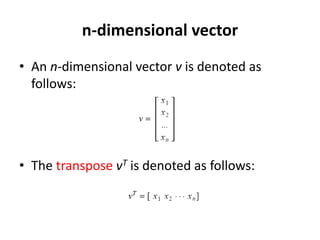

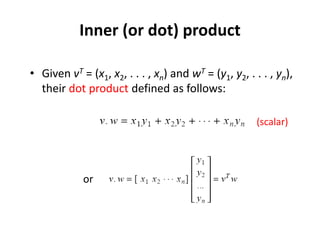

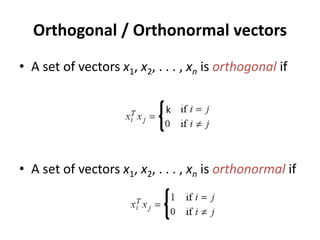

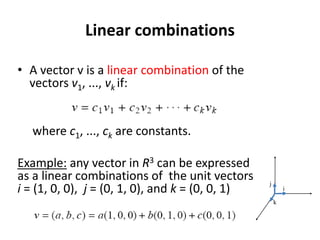

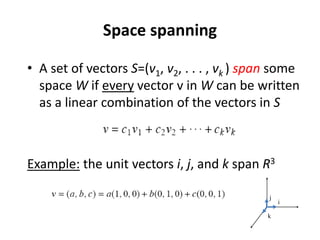

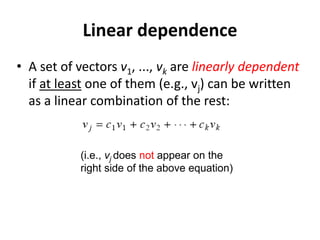

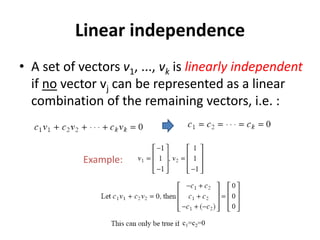

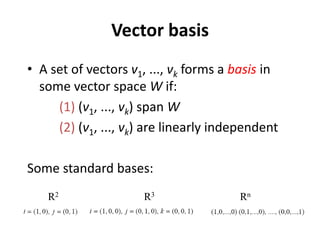

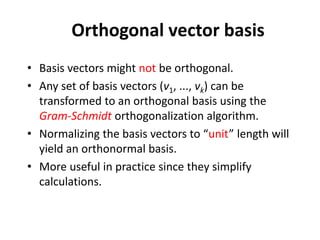

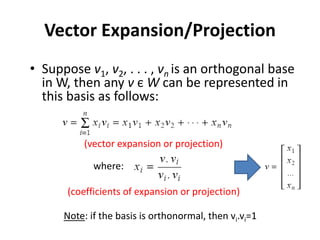

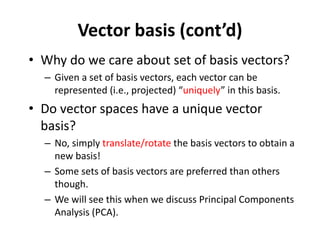

1) It defines vectors, inner products, orthogonality, linear combinations, and vector spaces.

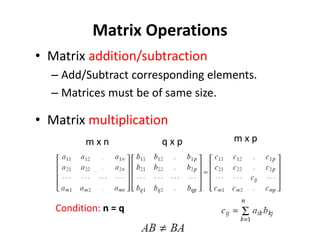

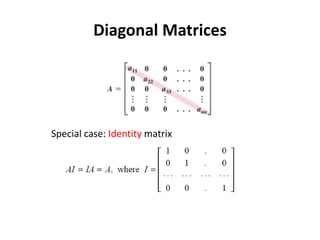

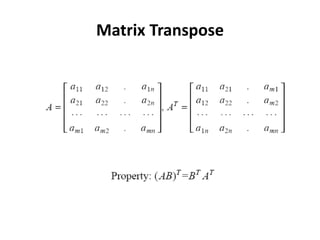

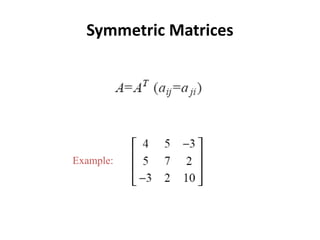

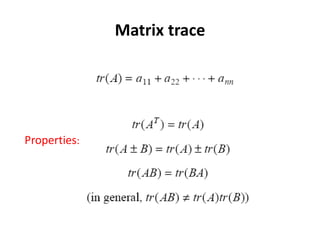

2) It covers matrix operations such as addition, multiplication, and transposition, along with the properties of diagonal, symmetric, and identity matrices.

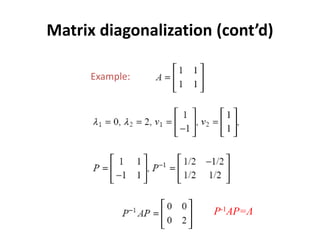

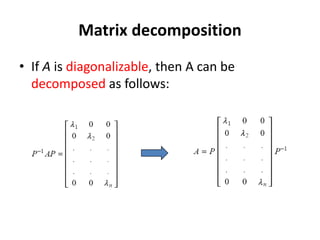

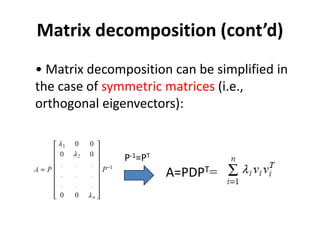

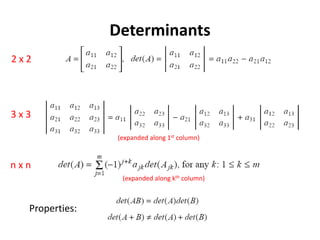

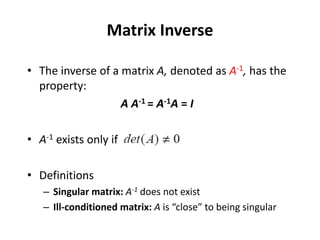

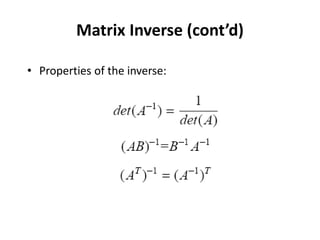

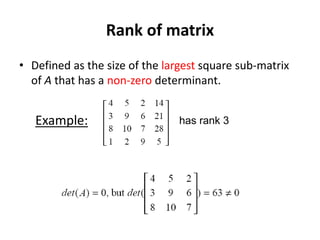

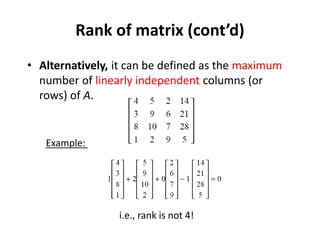

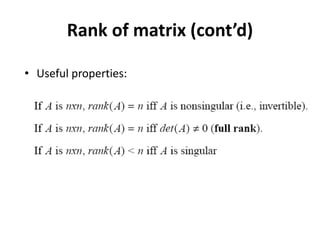

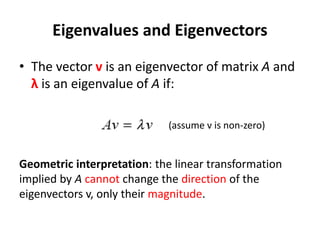

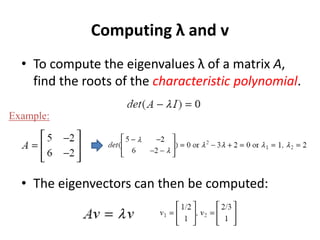

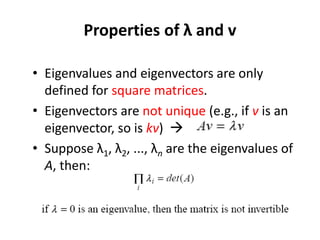

3) It discusses matrix inverses, determinants, rank, eigenvalues and eigenvectors, matrix diagonalization, and matrix decomposition.

4) Key concepts are illustrated with examples, including how vectors can be expressed as linear combinations of basis vectors and how matrices can be diagonalized using eigenvectors.
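As a minimal sketch of the linear-combination idea mentioned in the overview, the snippet below expresses a vector in terms of an orthogonal basis using inner products. The basis `b1`, `b2`, the vector `x`, and the helper `inner` are hypothetical choices made for illustration; they are not taken from the slides.

```python
def inner(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


# Hypothetical orthogonal basis of R^2 and a vector to expand (illustrative values).
b1 = [1.0, 1.0]
b2 = [1.0, -1.0]
x = [3.0, 1.0]

# Two vectors are orthogonal when their inner product is zero.
is_orthogonal = inner(b1, b2) == 0.0

# For an orthogonal basis, the coefficient along b is <x, b> / <b, b>.
c1 = inner(x, b1) / inner(b1, b1)
c2 = inner(x, b2) / inner(b2, b2)

# Rebuild x as the linear combination c1*b1 + c2*b2.
reconstructed = [c1 * p + c2 * q for p, q in zip(b1, b2)]

print(c1, c2, reconstructed)  # 2.0 1.0 [3.0, 1.0]
```

The projection formula for the coefficients only applies because the basis is orthogonal; for a general basis the coefficients would come from solving a linear system instead.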

![Matrix diagonalization](https://image.slidesharecdn.com/linearalgebrareview-220814143548-ce3eb8cd/85/LinearAlgebraReview-ppt-27-320.jpg)

Matrix diagonalization

• Given an $n \times n$ matrix $A$, find $P$ such that $P^{-1} A P = \Lambda$, where $\Lambda$ is diagonal.

• Solution: set $P = [v_1 \; v_2 \; \dots \; v_n]$, where $v_1, v_2, \dots, v_n$ are the eigenvectors of $A$. Then $P^{-1} A P = \Lambda$, with the eigenvalues of $A$ on the diagonal of $\Lambda$.
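
The diagonalization recipe can be checked by hand on a small case. The sketch below uses a hypothetical symmetric 2 x 2 matrix whose eigenvectors are known in closed form (eigenvalue 3 with eigenvector (1, 1), eigenvalue 1 with eigenvector (1, -1)); the matrix and the helpers `matmul` and `inverse_2x2` are assumptions made for illustration, not part of the slides. It assumes the eigenvectors are linearly independent, so that the matrix of eigenvectors is invertible.

```python
def matmul(X, Y):
    """Product of two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]


def inverse_2x2(M):
    """Inverse of a 2 x 2 matrix via the adjugate formula; requires det != 0."""
    (a, b), (c, d) = M
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]


# Hypothetical example matrix (illustrative values).
A = [[2.0, 1.0],
     [1.0, 2.0]]

# Columns of P are the eigenvectors v1 = (1, 1) and v2 = (1, -1).
P = [[1.0, 1.0],
     [1.0, -1.0]]

# Lambda = P^{-1} A P should be diagonal, with the eigenvalues on the diagonal.
Lam = matmul(inverse_2x2(P), matmul(A, P))

print(Lam)  # [[3.0, 0.0], [0.0, 1.0]]
```

The order of the eigenvalues on the diagonal follows the order of the eigenvector columns in `P`; swapping the columns swaps the diagonal entries.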