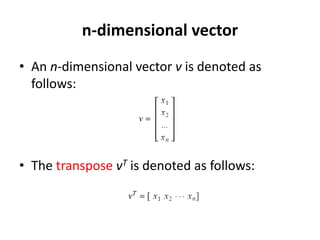

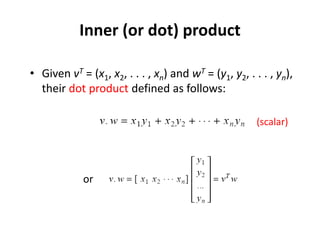

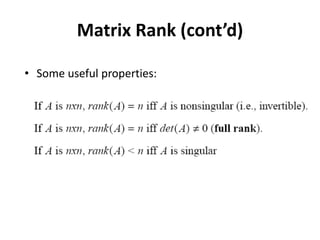

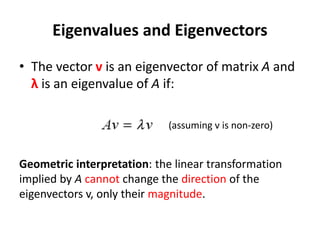

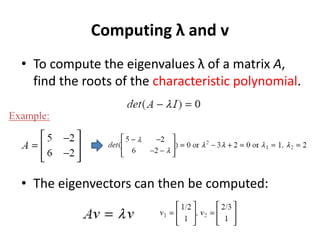

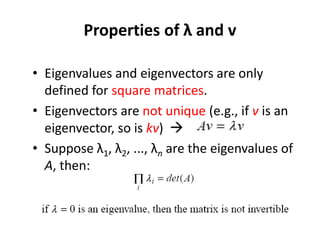

Vectors and matrices are the fundamental objects of linear algebra. Vectors can be added, scaled, and combined linearly. A set of vectors may be orthogonal, and it is either linearly independent or linearly dependent. Matrices represent linear transformations and can be added, multiplied, inverted (when square and nonsingular), and decomposed. Important matrix concepts include rank, eigenvalues and eigenvectors, and diagonalization.
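The vector operations named above can be illustrated with a minimal sketch in plain Python. The helper names `add`, `scale`, and `dot` and the sample vectors are assumptions chosen for illustration, not taken from the slides. The independence check uses the 2 x 2 determinant, which only applies to two vectors in the plane.

```python
def add(u, v):
    """Component-wise sum of two vectors of equal length."""
    return [a + b for a, b in zip(u, v)]

def scale(c, v):
    """Multiply every component of v by the scalar c."""
    return [c * a for a in v]

def dot(u, v):
    """Dot product; zero means the vectors are orthogonal."""
    return sum(a * b for a, b in zip(u, v))

def det2(u, v):
    """Determinant of the 2 x 2 matrix whose columns are u and v."""
    return u[0] * v[1] - u[1] * v[0]

u = [1, 2]
v = [-2, 1]
w = scale(2, u)                         # w is a multiple of u

combo = add(scale(3, u), scale(2, v))   # linear combination 3u + 2v
orthogonal = dot(u, v) == 0             # u and v are perpendicular
independent = det2(u, v) != 0           # nonzero determinant: independent
independent_uw = det2(u, w) != 0        # zero determinant: dependent

print(combo, orthogonal, independent, independent_uw)
# prints [-1, 8] True True False
```

Here `u` and `v` are orthogonal and independent, while `u` and `w` are dependent because one is a scalar multiple of the other.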

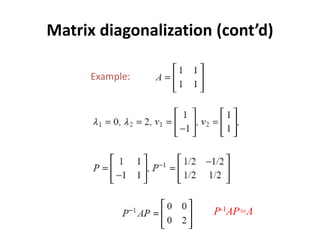

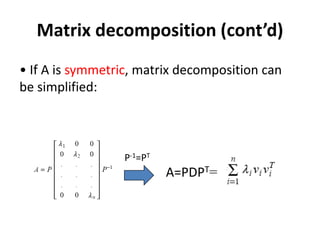

![Matrix diagonalization

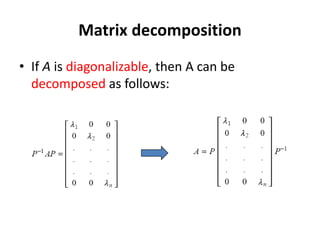

• Given an n x n matrix A, find P such that:

P-1AP=Λ where Λ is diagonal

• Solution: set P = [v1 v2 . . . vn], where v1,v2 ,. . .

vn are the eigenvectors of A: eigenvalues of A

P-1AP=Λ](https://image.slidesharecdn.com/linearalgebrareview-230326162403-578585b0/85/LinearAlgebraReview-ppt-29-320.jpg)
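As a concrete instance of P⁻¹AP = Λ, the following is a minimal sketch for the 2 x 2 case in plain Python. It is not a general algorithm: it uses the closed-form roots of the characteristic polynomial, and it assumes real, distinct eigenvalues and a nonzero upper-right entry. The helper names `matmul`, `inverse`, and `diagonalize` and the sample matrix are hypothetical, chosen for illustration.

```python
import math

def matmul(x, y):
    """Product of two 2 x 2 matrices given as nested lists."""
    return [[sum(x[i][k] * y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def inverse(m):
    """Inverse of a 2 x 2 matrix via the adjugate formula."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]

def diagonalize(a_mat):
    """Return (P, Lam) such that inverse(P) * A * P equals Lam.

    Assumption: A is 2 x 2 with real, distinct eigenvalues and b != 0.
    """
    (a, b), (c, d) = a_mat
    trace = a + d
    det = a * d - b * c
    disc = trace * trace - 4 * det       # discriminant of the characteristic polynomial
    if disc <= 0 or b == 0:
        raise ValueError("sketch handles only distinct real eigenvalues with b != 0")
    root = math.sqrt(disc)
    lam1 = (trace + root) / 2
    lam2 = (trace - root) / 2
    # For an eigenvalue lam, v = (b, lam - a) satisfies (A - lam*I) v = 0.
    v1 = (b, lam1 - a)
    v2 = (b, lam2 - a)
    P = [[v1[0], v2[0]], [v1[1], v2[1]]]  # eigenvectors as columns
    Lam = [[lam1, 0.0], [0.0, lam2]]      # eigenvalues on the diagonal
    return P, Lam

A = [[4.0, 1.0], [2.0, 3.0]]
P, Lam = diagonalize(A)
result = matmul(inverse(P), matmul(A, P))  # should match Lam up to rounding
```

For this sample matrix the eigenvalues are 5 and 2, with eigenvectors (1, 1) and (1, -2), so `result` agrees with `Lam` up to floating-point rounding. For larger matrices a numerical library routine would normally replace the closed-form step.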