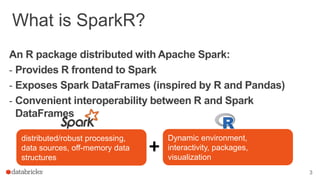

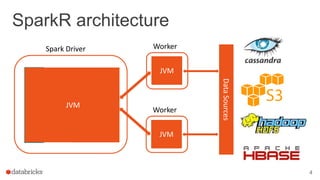

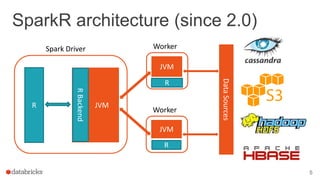

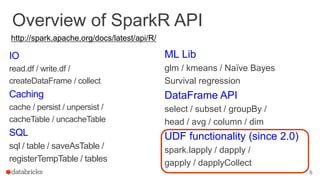

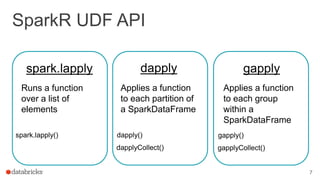

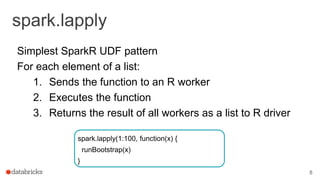

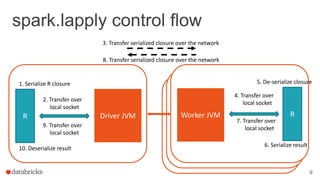

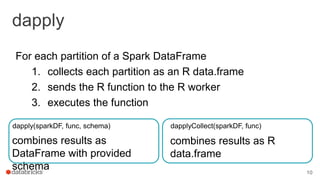

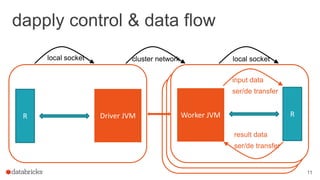

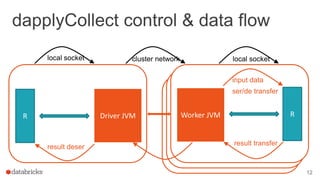

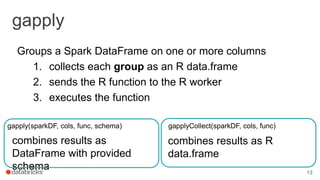

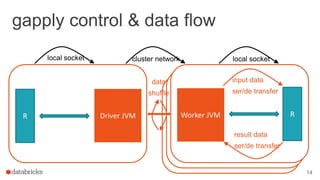

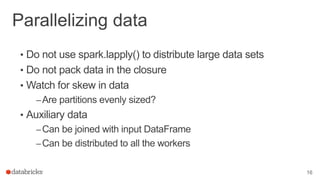

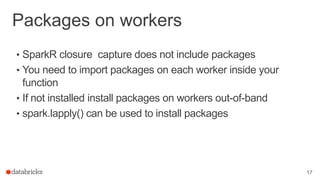

The document discusses SparkR, an R package shipped with Apache Spark that provides interoperability between R data frames and Spark DataFrames. It covers SparkR's architecture, its API for data manipulation and machine learning, and techniques for parallelizing computations with functions such as `spark.lapply`, `dapply`, and `gapply`. It also addresses debugging and managing package installations on SparkR workers.
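The three parallelization functions differ mainly in what unit of work each R worker receives. The sketch below is not taken from the slides. It assumes a local Spark installation with the SparkR package on the R library path, a `local[2]` master, and a hypothetical toy data frame with columns `grp` and `v`. The output columns `v2` and `total` are also illustrative. `spark.lapply` applies a function to each element of a local list. `dapply` applies a function to each partition of a SparkDataFrame. `gapply` applies a function to each group of rows that share a key.

```r
library(SparkR)

# Assumption: a local Spark installation; starts (or reuses) a SparkR session
sparkR.session(master = "local[2]")

# spark.lapply: runs the function on each element of a local list in
# parallel on the workers and returns the results as a local R list
squares <- spark.lapply(1:4, function(x) x^2)

# Hypothetical toy data, converted from an R data.frame to a SparkDataFrame
# (createDataFrame uses a single partition by default)
local_df <- data.frame(grp = c("a", "a", "b"), v = c(1, 2, 10),
                       stringsAsFactors = FALSE)
df <- createDataFrame(local_df)

# dapply: the function receives one partition as an R data.frame and must
# return a data.frame matching the declared output schema
dschema <- structType(structField("grp", "string"),
                      structField("v", "double"),
                      structField("v2", "double"))
doubled <- dapply(df, function(p) {
  p$v2 <- p$v * 2
  p
}, dschema)

# gapply: the function receives the grouping key and all rows for that key;
# output columns are matched to the schema by position
gschema <- structType(structField("grp", "string"),
                      structField("total", "double"))
totals <- gapply(df, "grp", function(key, x) {
  data.frame(key, sum(x$v), stringsAsFactors = FALSE)
}, gschema)

# collect() brings the results back to the driver as local R data.frames
doubled_local <- collect(doubled)
totals_local <- collect(totals)
totals_local <- totals_local[order(totals_local$grp), ]

sparkR.session.stop()
```

The explicit schema lets Spark plan the result without running R code first. When the result is small enough to fit on the driver, `dapplyCollect` and `gapplyCollect` skip the schema and return a local data.frame directly.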