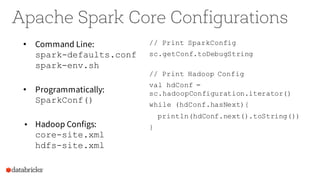

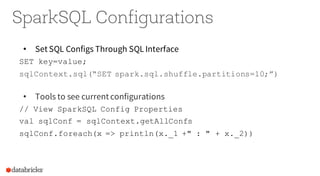

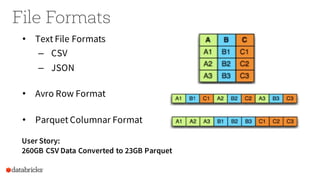

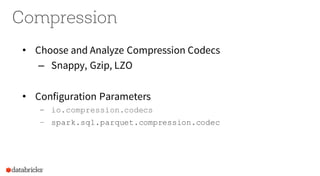

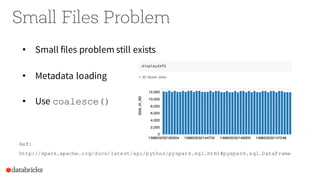

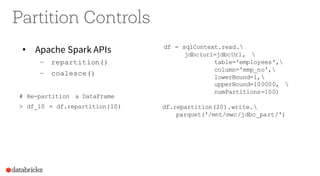

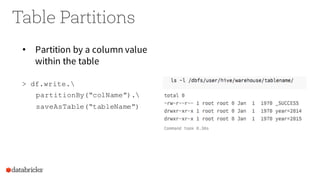

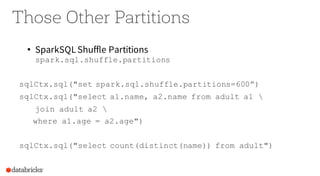

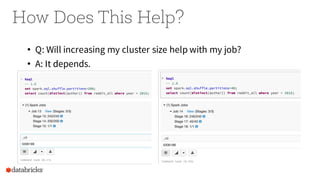

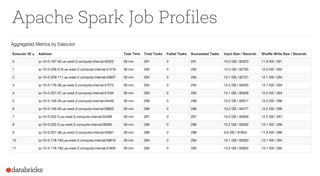

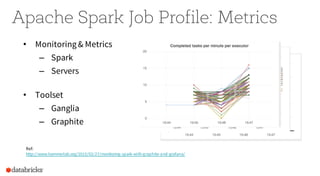

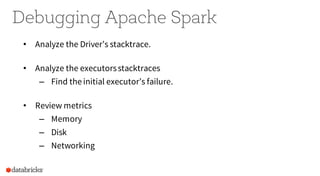

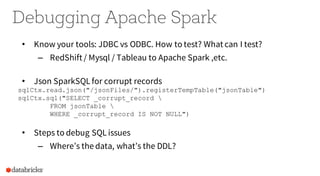

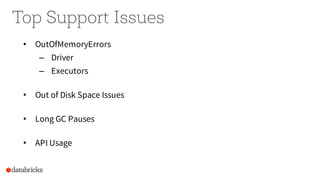

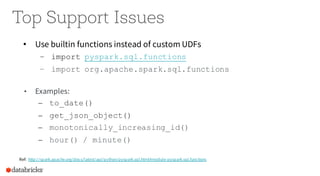

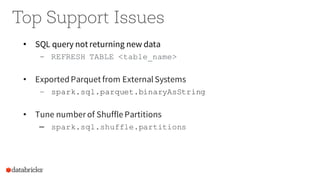

Operational Tips for Deploying Apache Spark provides an overview of Apache Spark configuration, pipeline design best practices, and debugging techniques. It discusses three ways to configure Spark: through command-line options, programmatically, and through Hadoop configuration. It also covers file formats, compression codecs, partitioning, and monitoring of Spark jobs, and offers tips on common issues such as OutOfMemoryErrors, debugging SQL queries, and tuning shuffle partitions.
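
As a rough illustration of the command-line route, the sketch below assembles a `spark-submit` invocation from plain dictionaries. It is not taken from the slides: the helper name `spark_submit_args`, the application name `job.py`, and all setting values are hypothetical. It relies on two documented Spark behaviours: `--conf key=value` sets a Spark property, and properties prefixed with `spark.hadoop.` are copied into the Hadoop configuration.

```python
from typing import Dict, List


def spark_submit_args(
    app: str,
    conf: Dict[str, str],
    hadoop_conf: Dict[str, str],
) -> List[str]:
    """Build a spark-submit argument list (hypothetical helper, for illustration)."""
    args = ["spark-submit"]
    # Plain Spark properties are passed as --conf key=value.
    for key, value in sorted(conf.items()):
        args += ["--conf", f"{key}={value}"]
    # Hadoop properties are passed with the "spark.hadoop." prefix, which
    # Spark strips when copying them into the Hadoop Configuration.
    for key, value in sorted(hadoop_conf.items()):
        args += ["--conf", f"spark.hadoop.{key}={value}"]
    args.append(app)
    return args


if __name__ == "__main__":
    # Illustrative values only; suitable settings depend on the cluster and job.
    command = spark_submit_args(
        "job.py",
        {"spark.executor.memory": "4g", "spark.sql.shuffle.partitions": "400"},
        {"fs.s3a.connection.maximum": "100"},
    )
    print(" ".join(command))
```

The same keys can also be set programmatically on a `SparkConf` or session builder before the application starts; keeping them in one dictionary makes it easier to see which settings a given run actually used.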