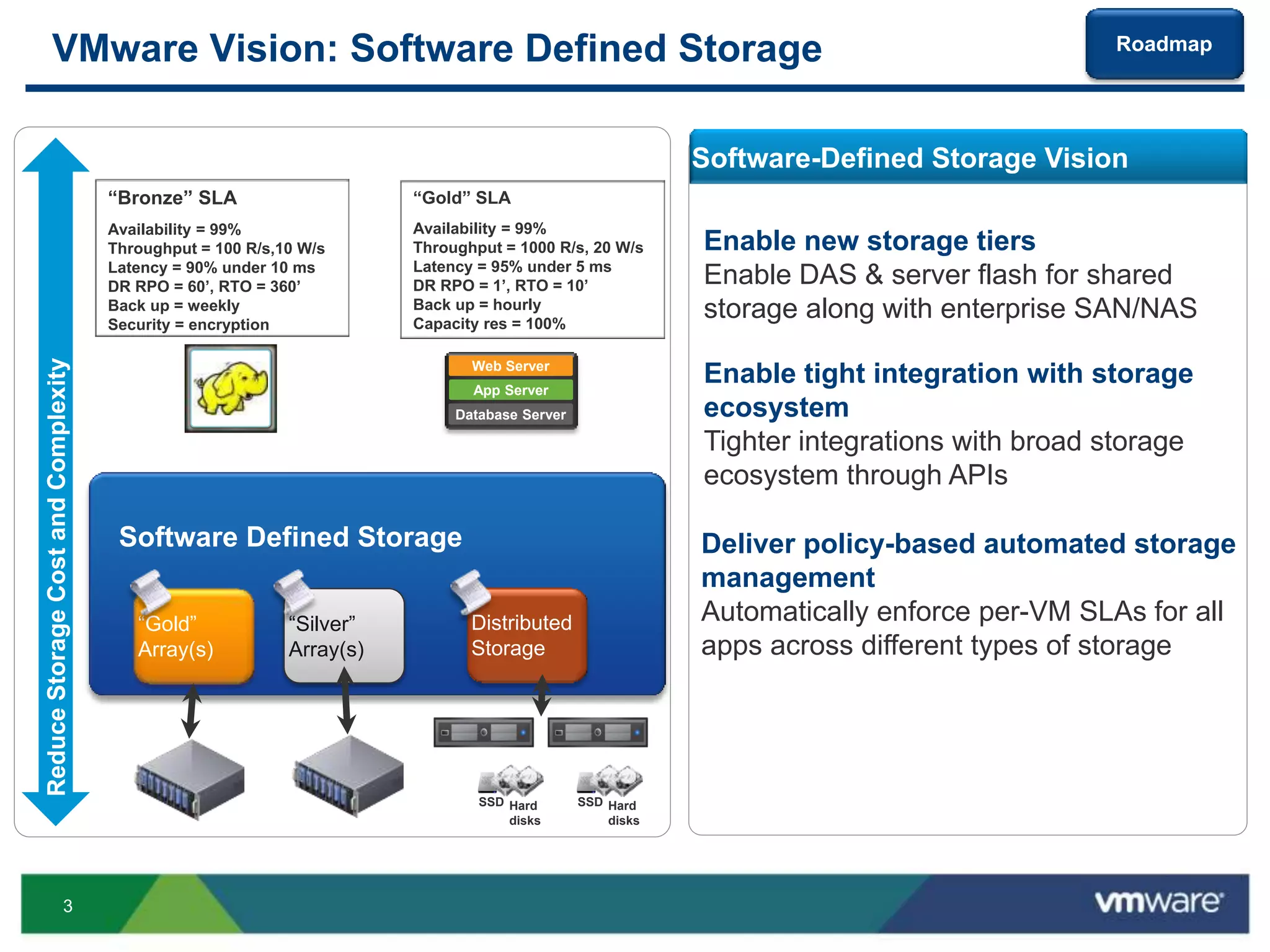

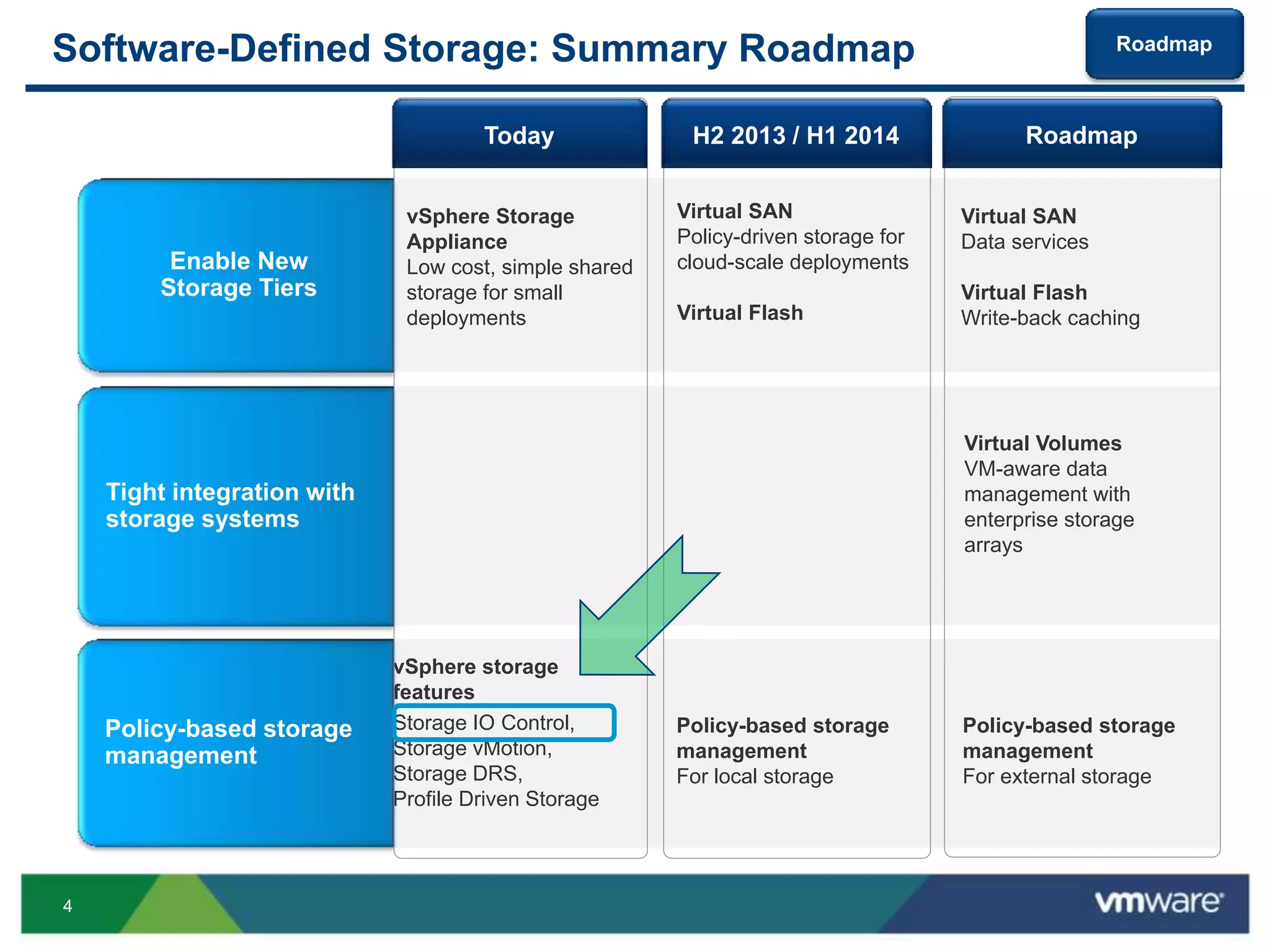

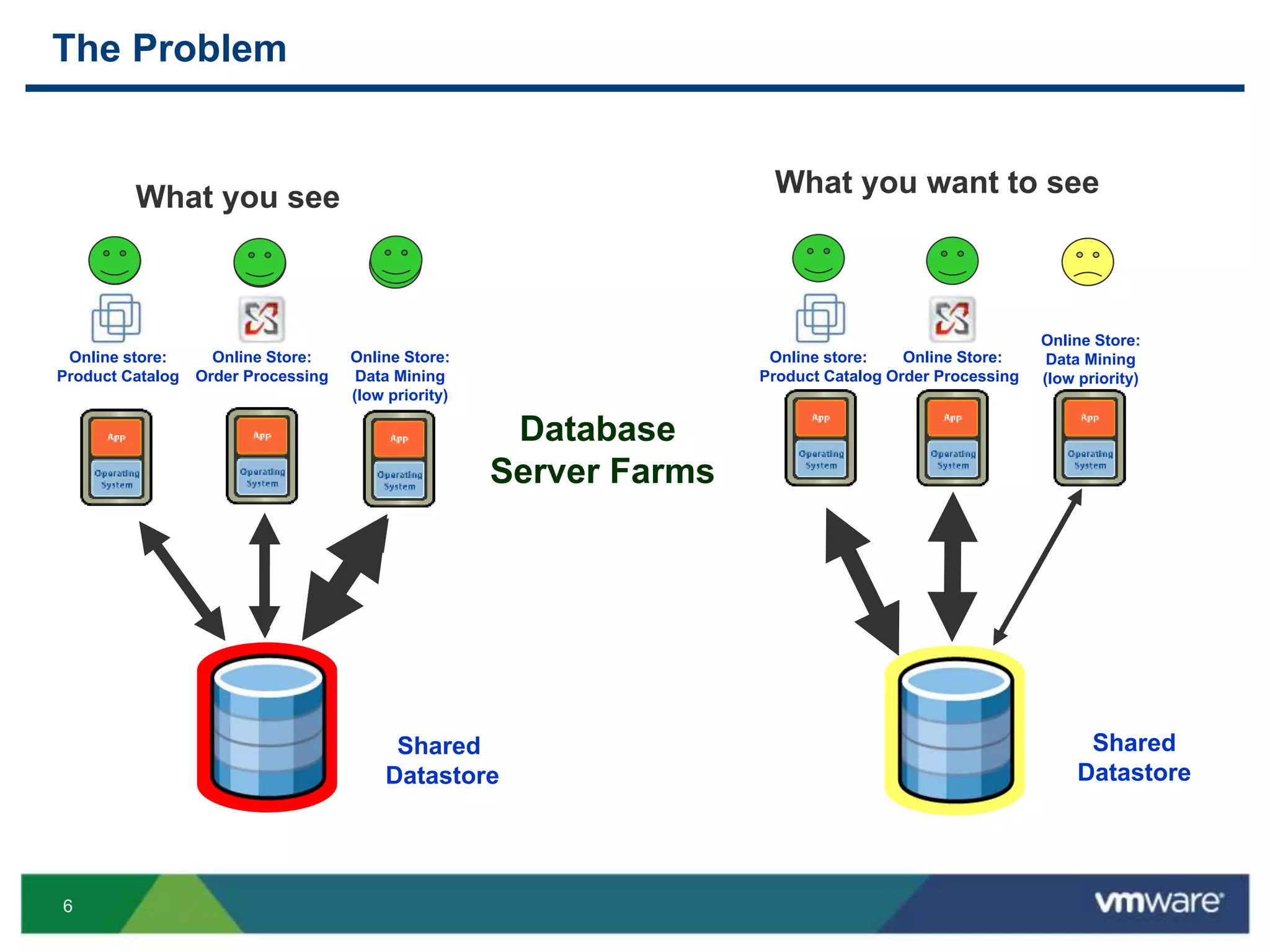

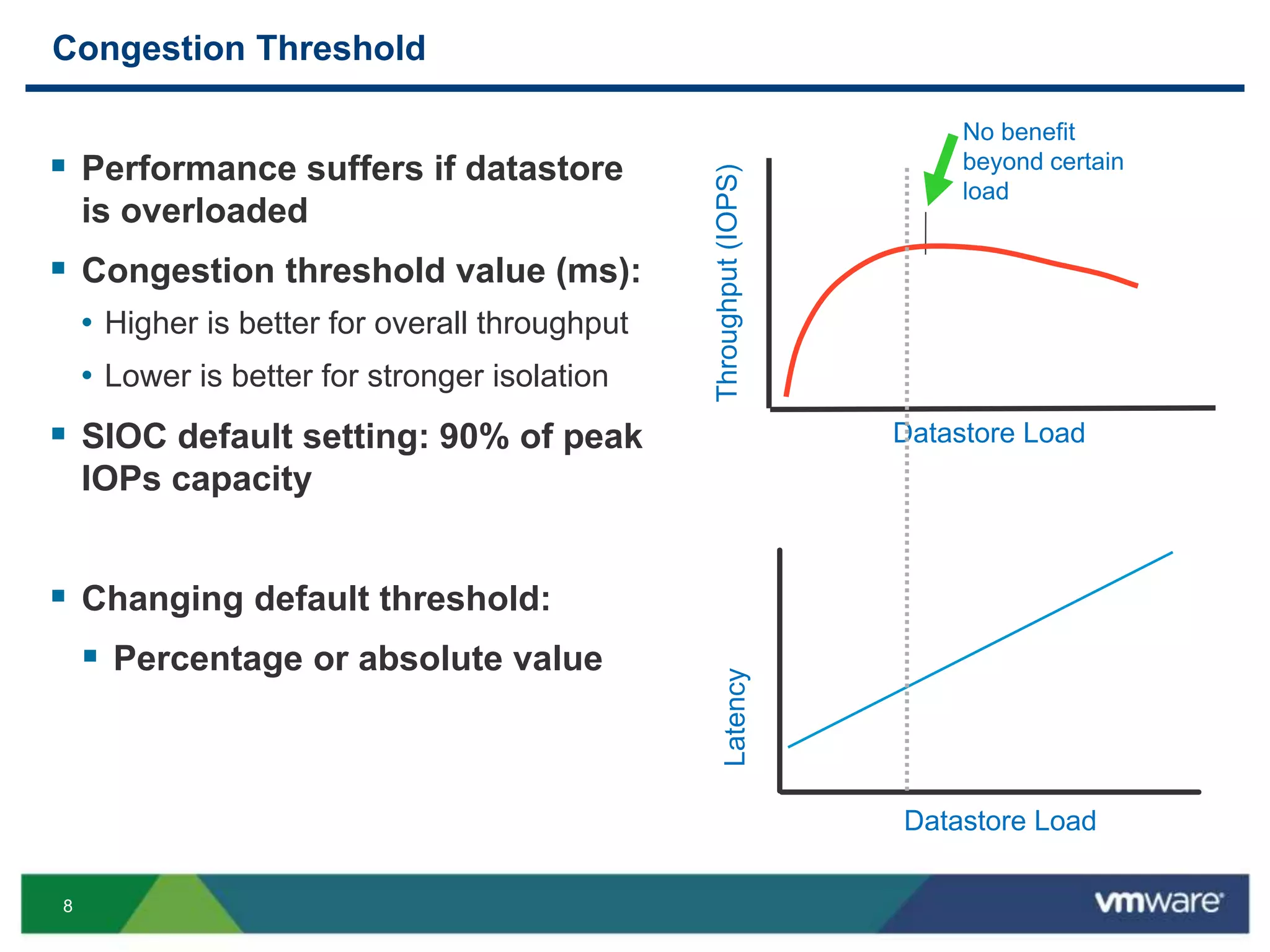

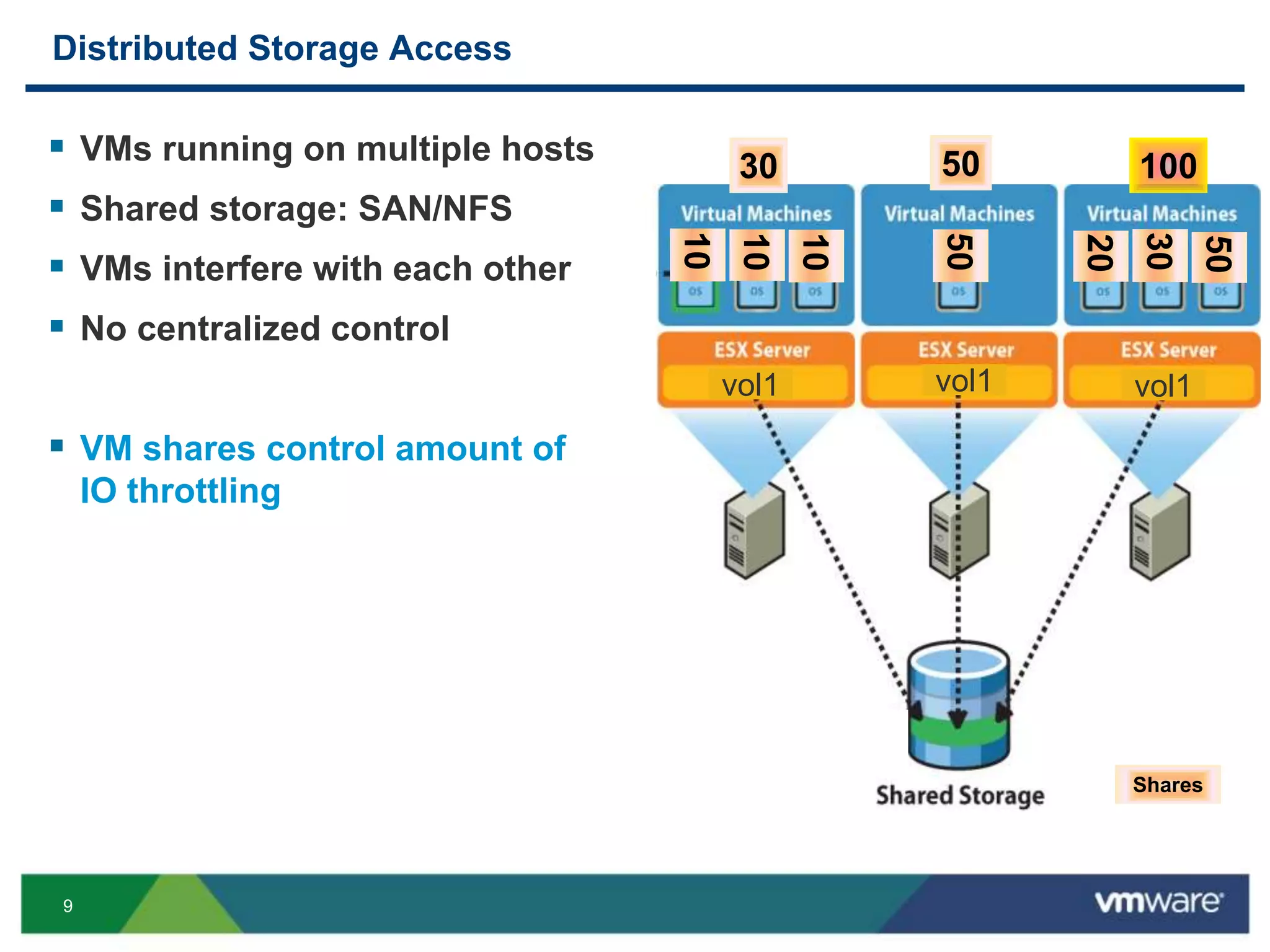

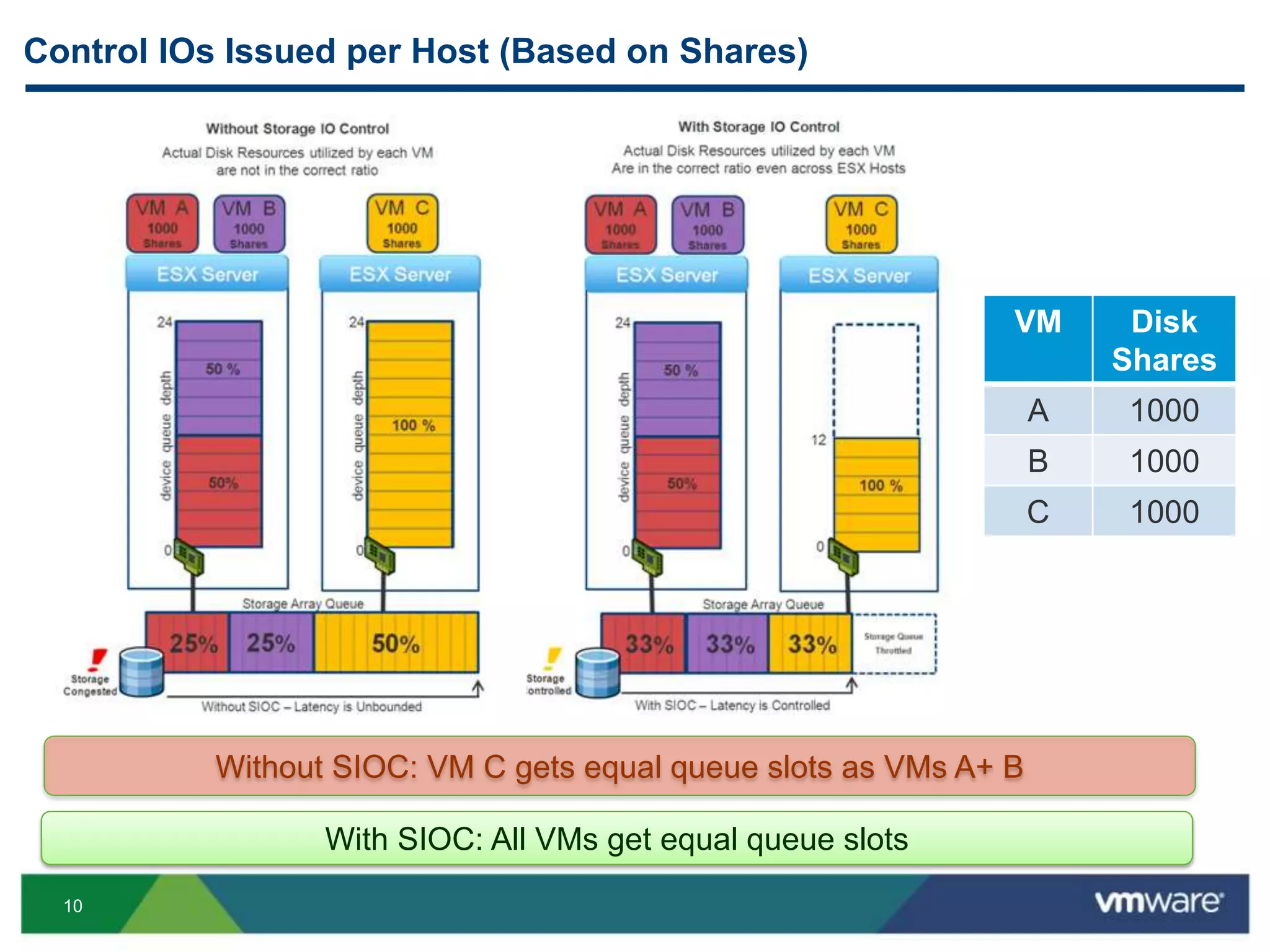

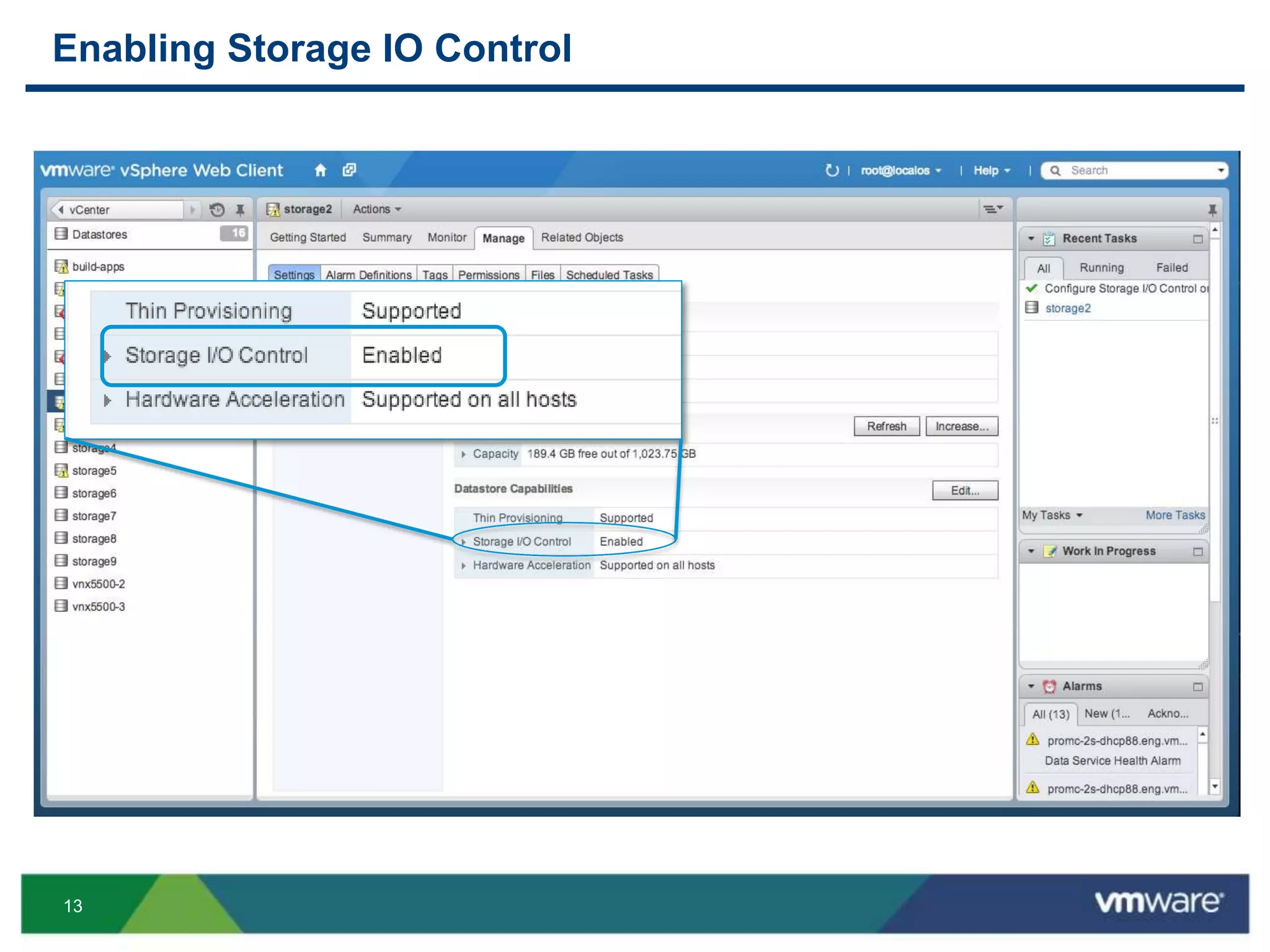

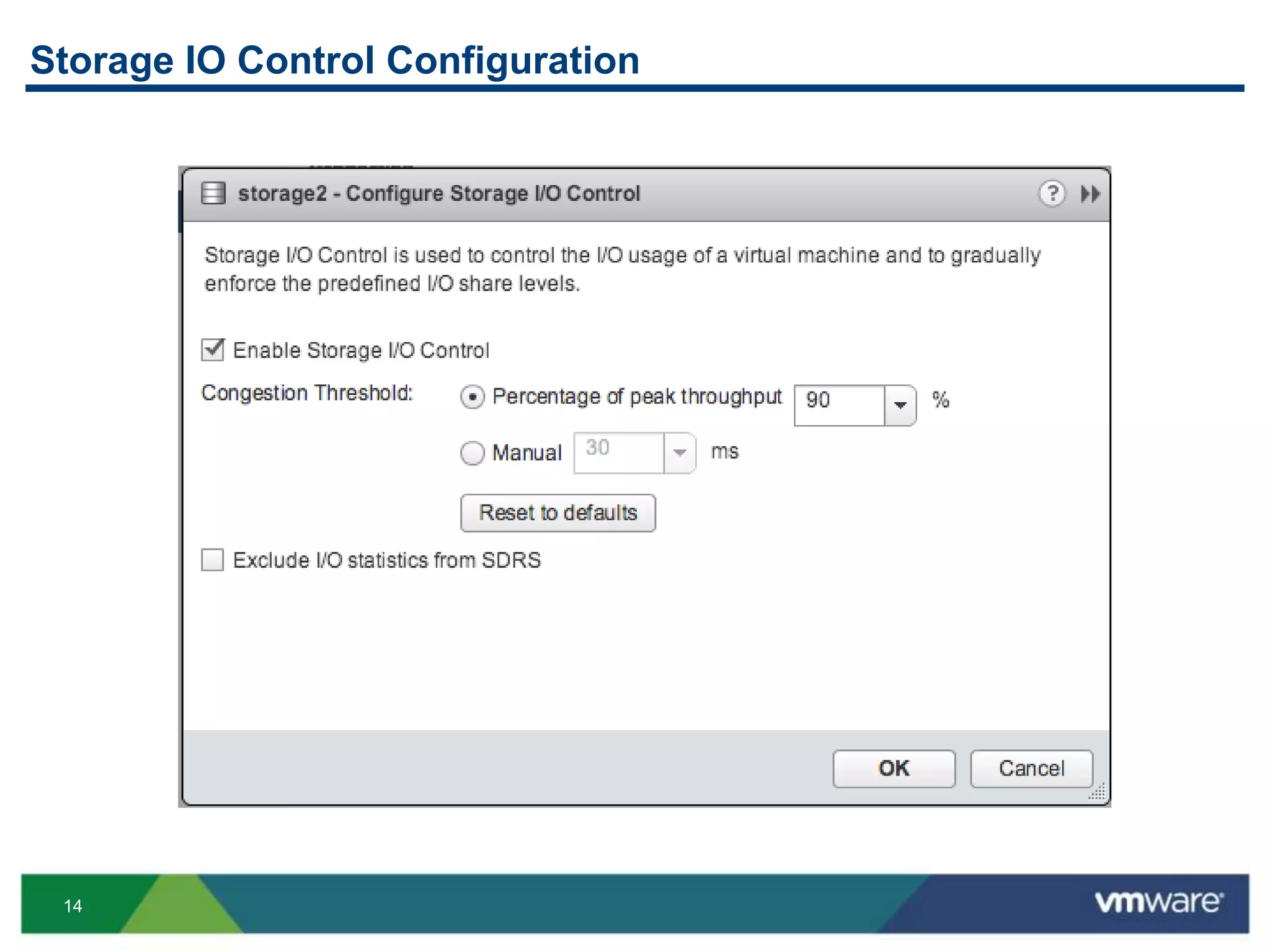

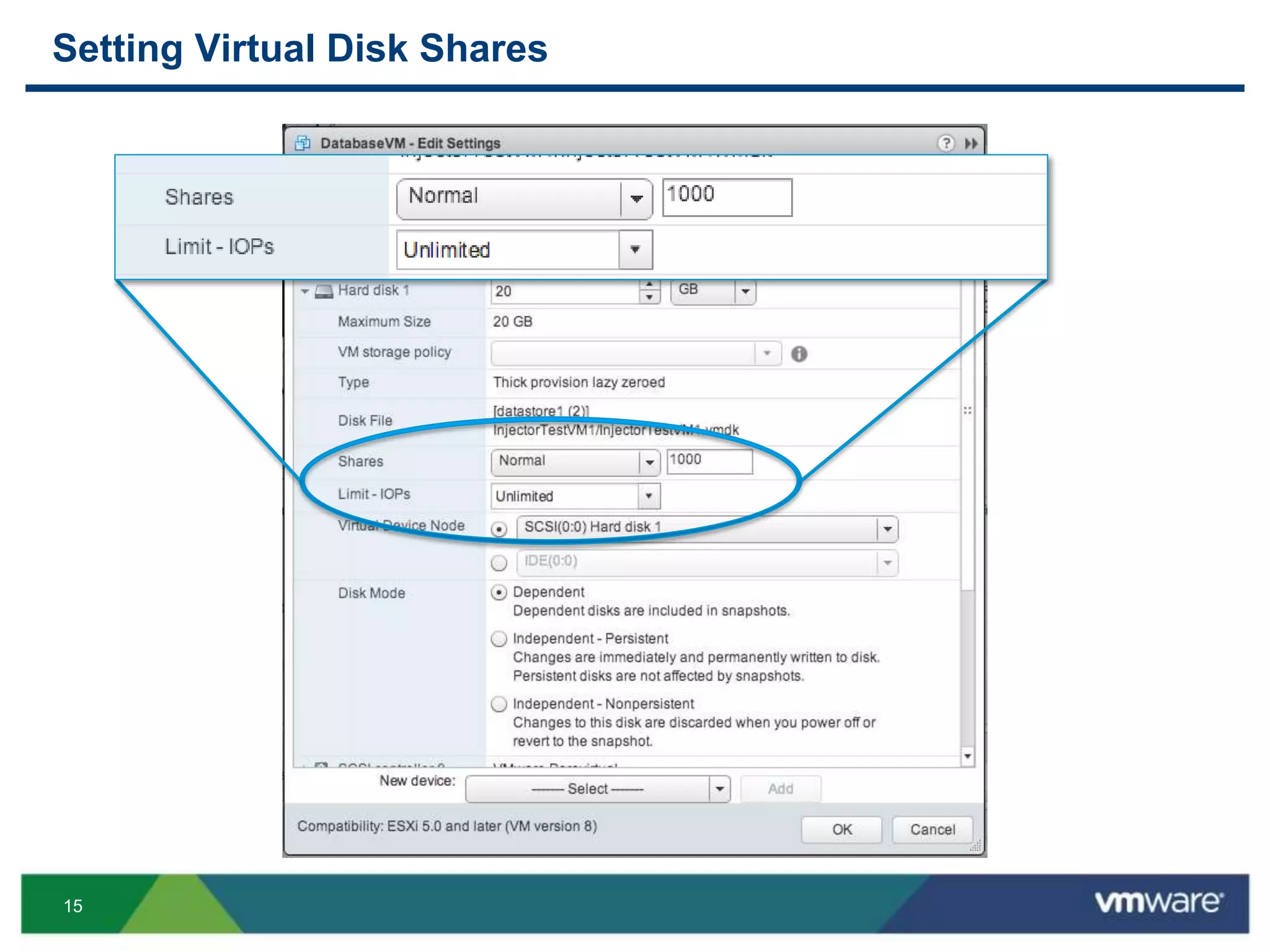

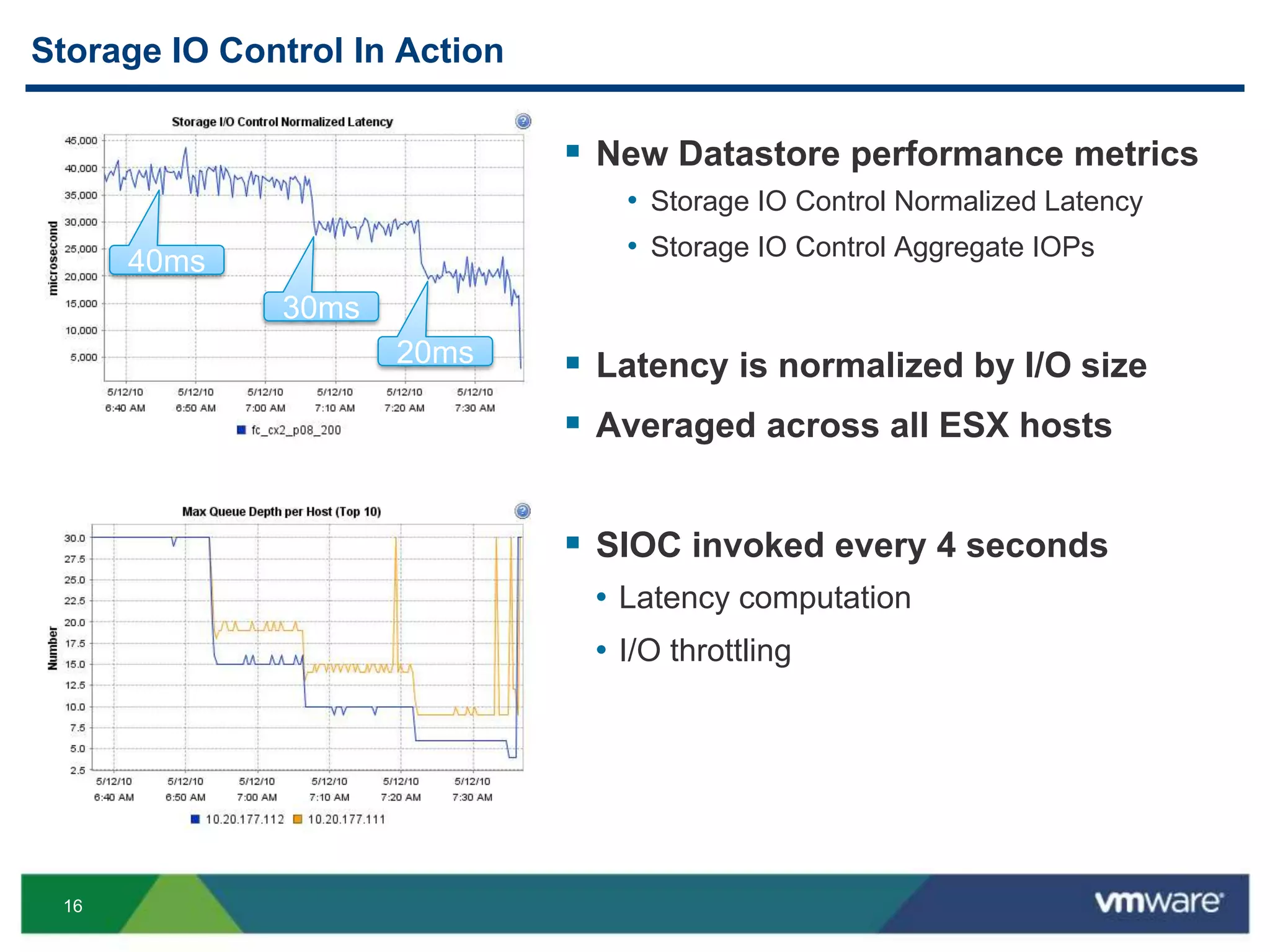

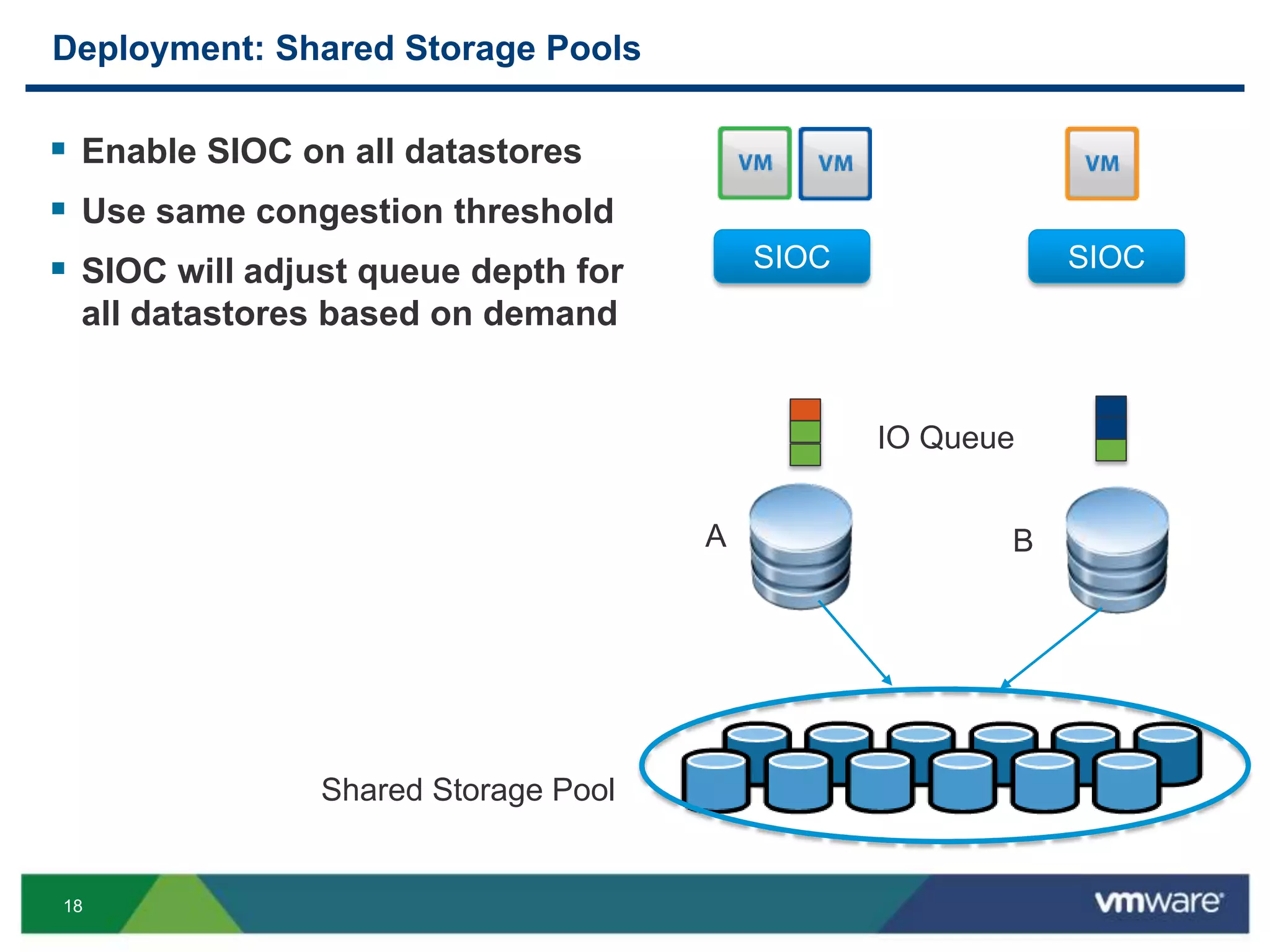

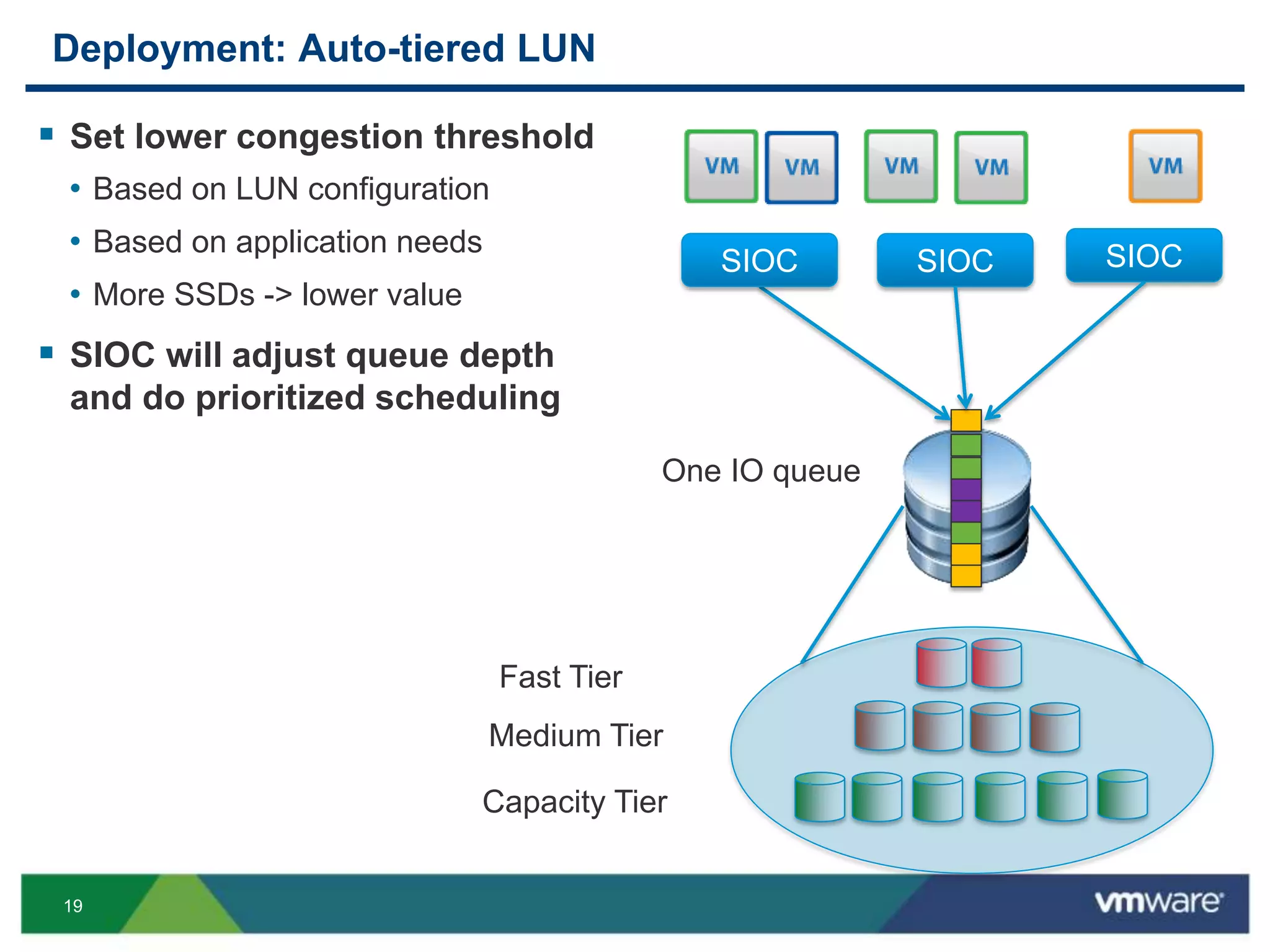

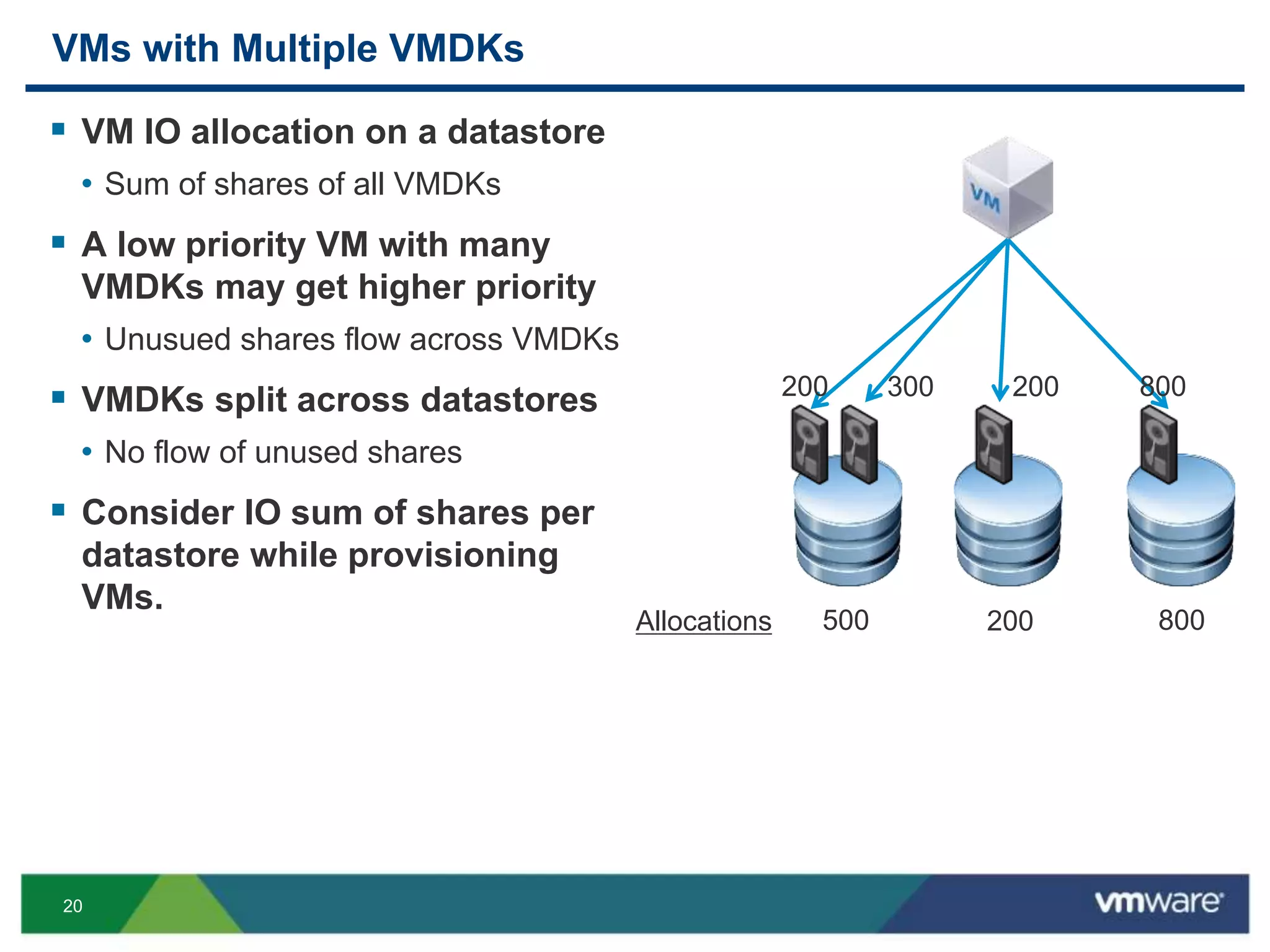

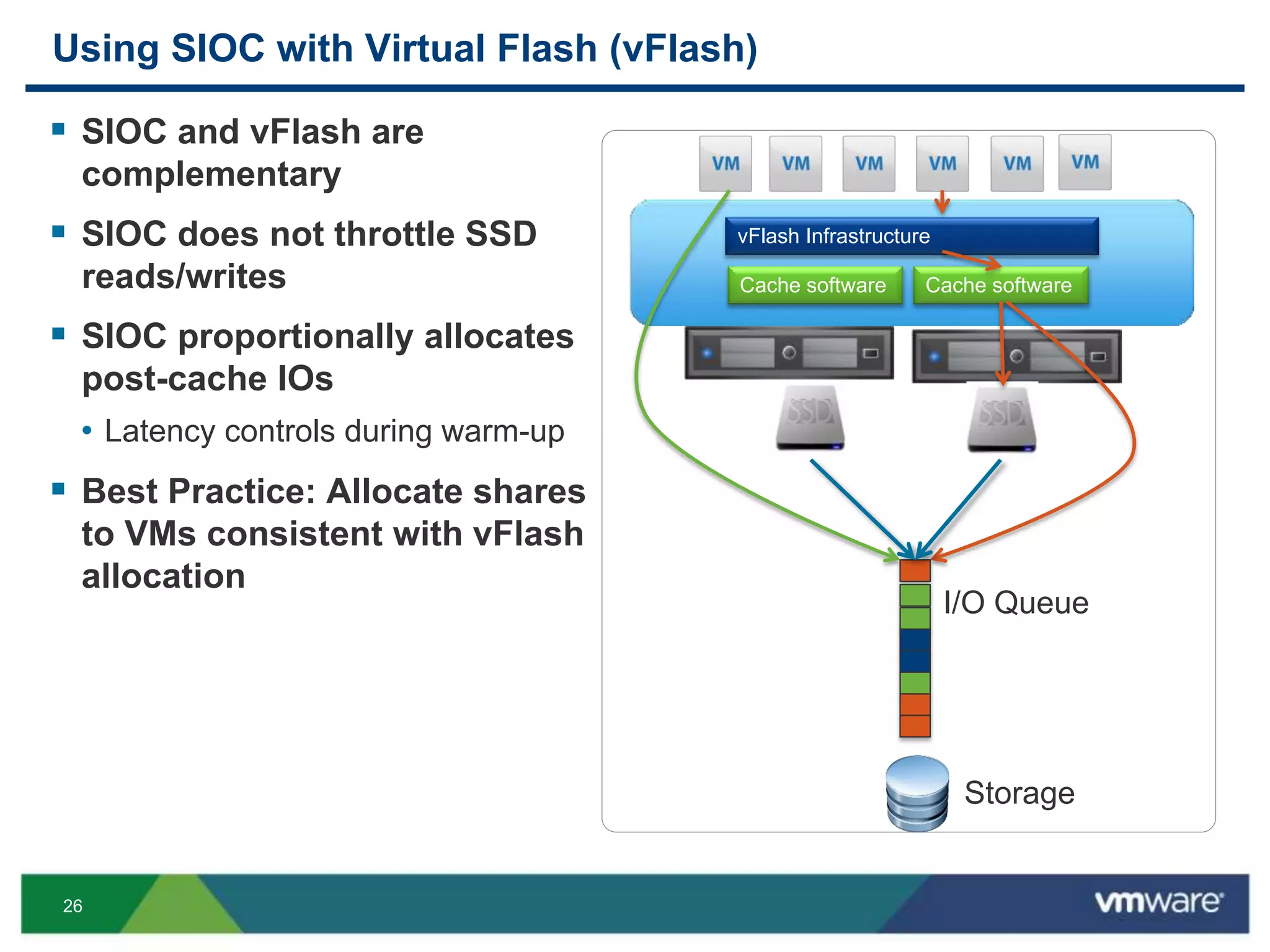

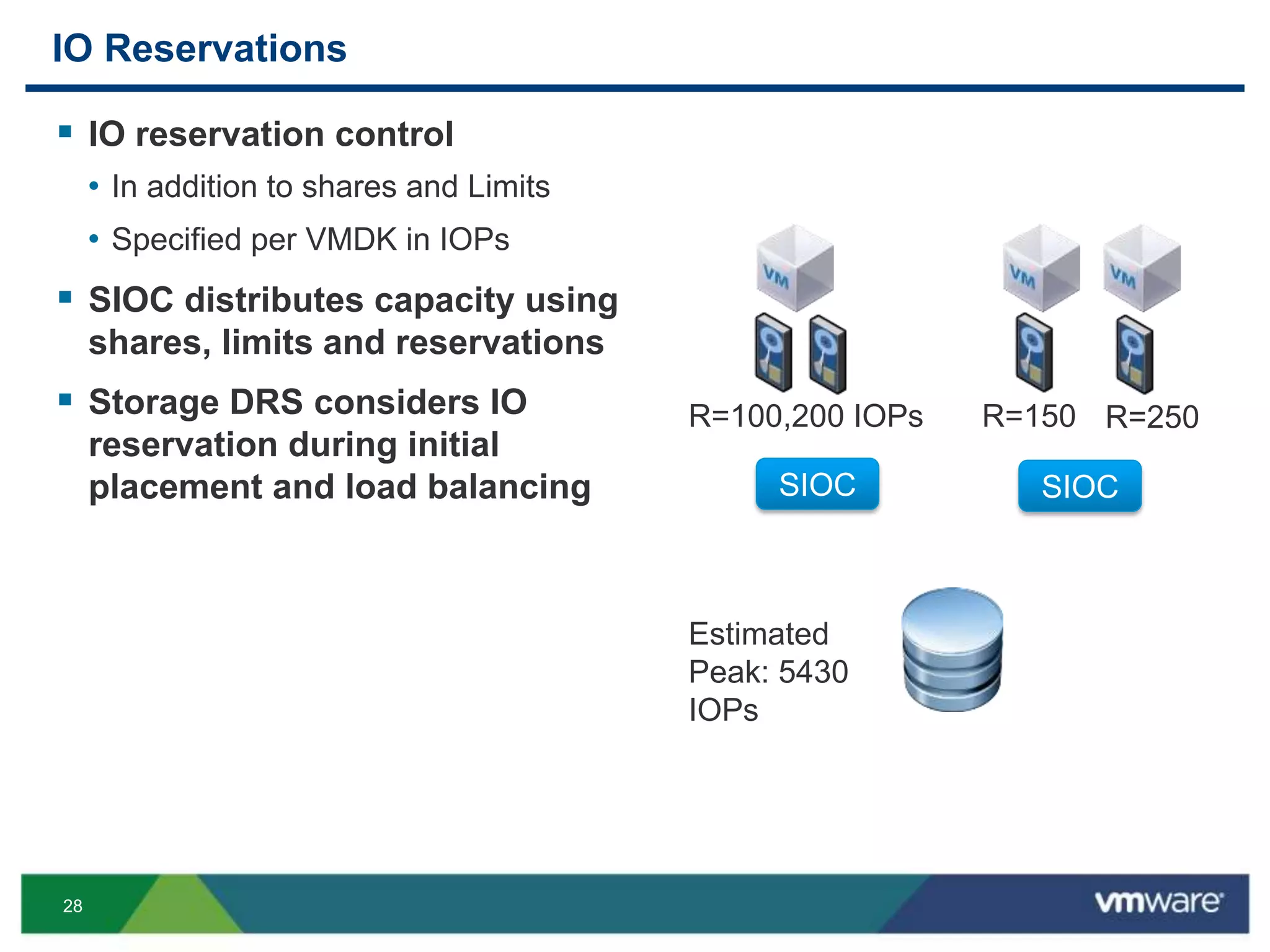

This document gives an overview of VMware Storage I/O Control (SIOC), covering its concepts, configuration, and best practices across different storage architectures. It emphasizes SIOC's role in isolating performance among virtual machines by automatically detecting I/O congestion, and it walks through the configuration steps for getting the most out of the feature. It also outlines the roadmap and the improvements in VMware vSphere 5.1 and 5.5 that extend storage management capabilities.