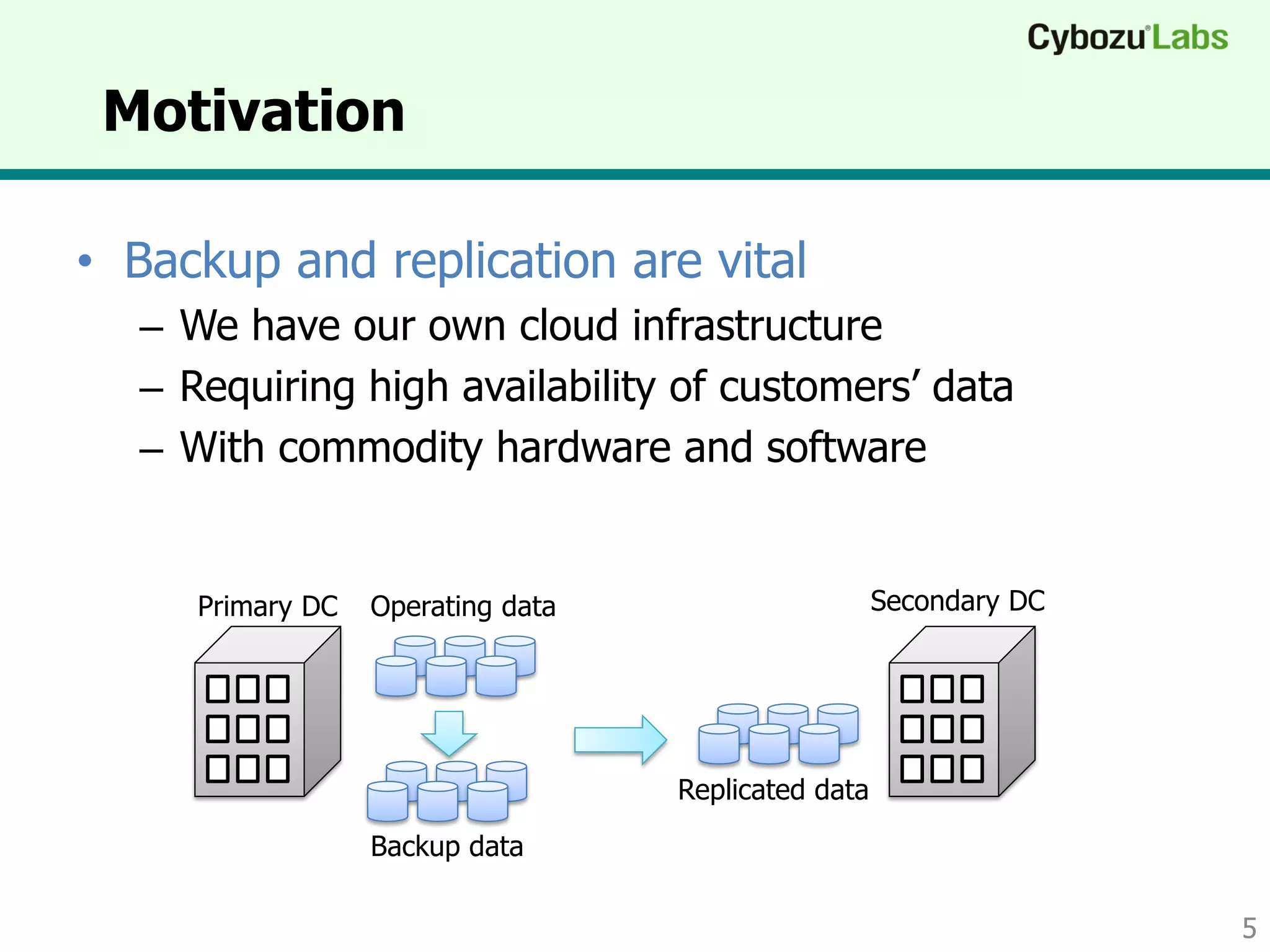

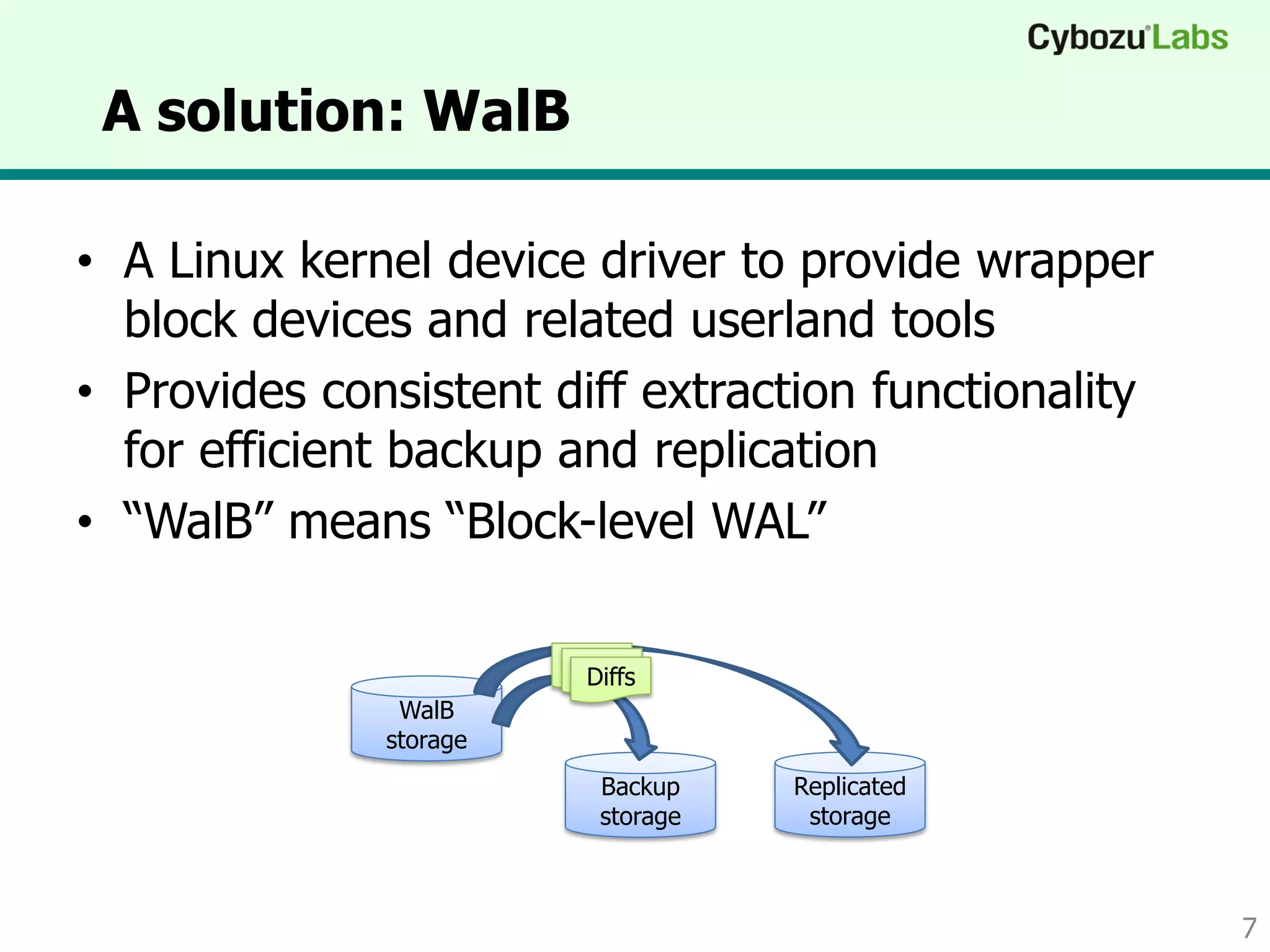

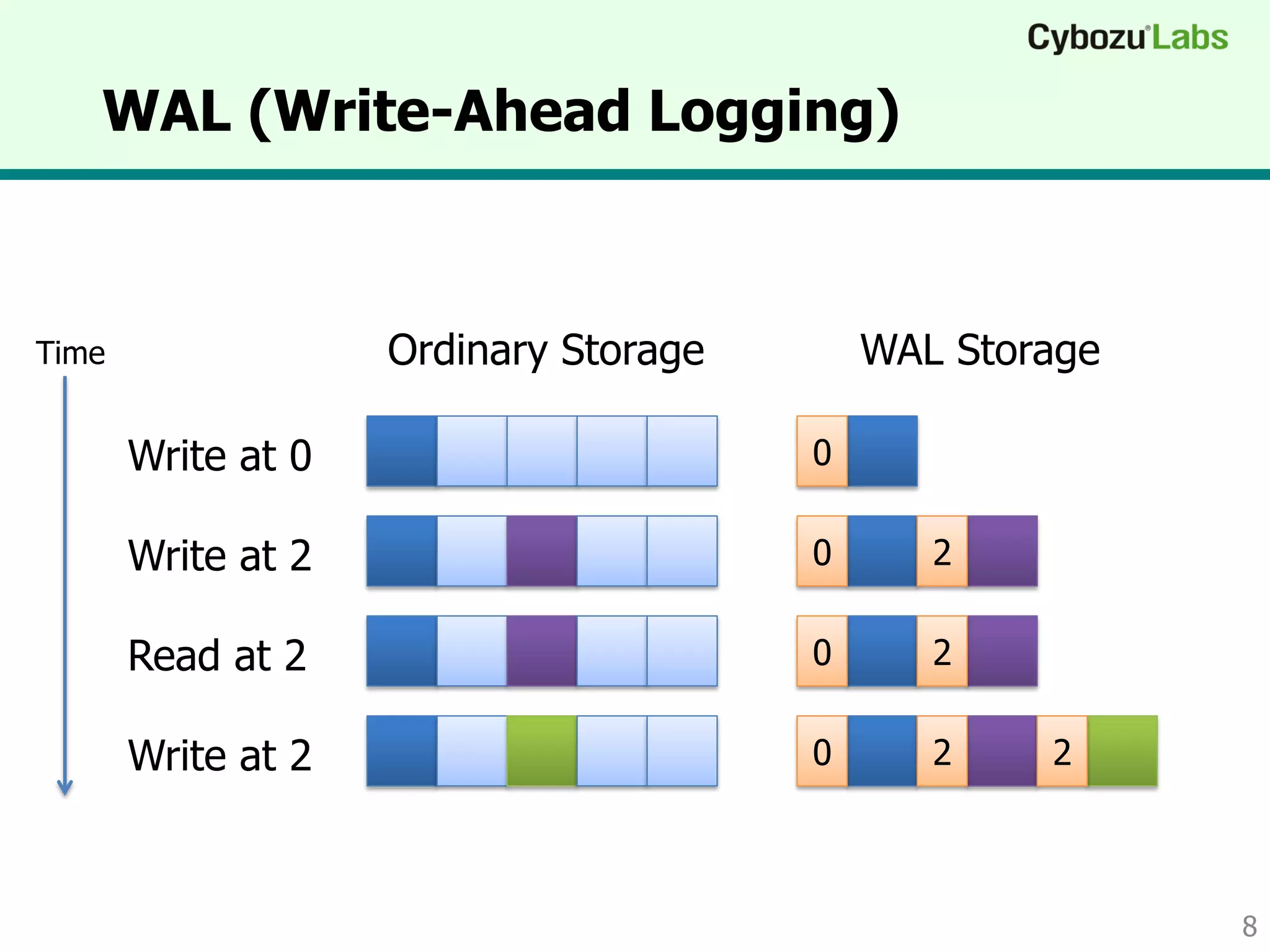

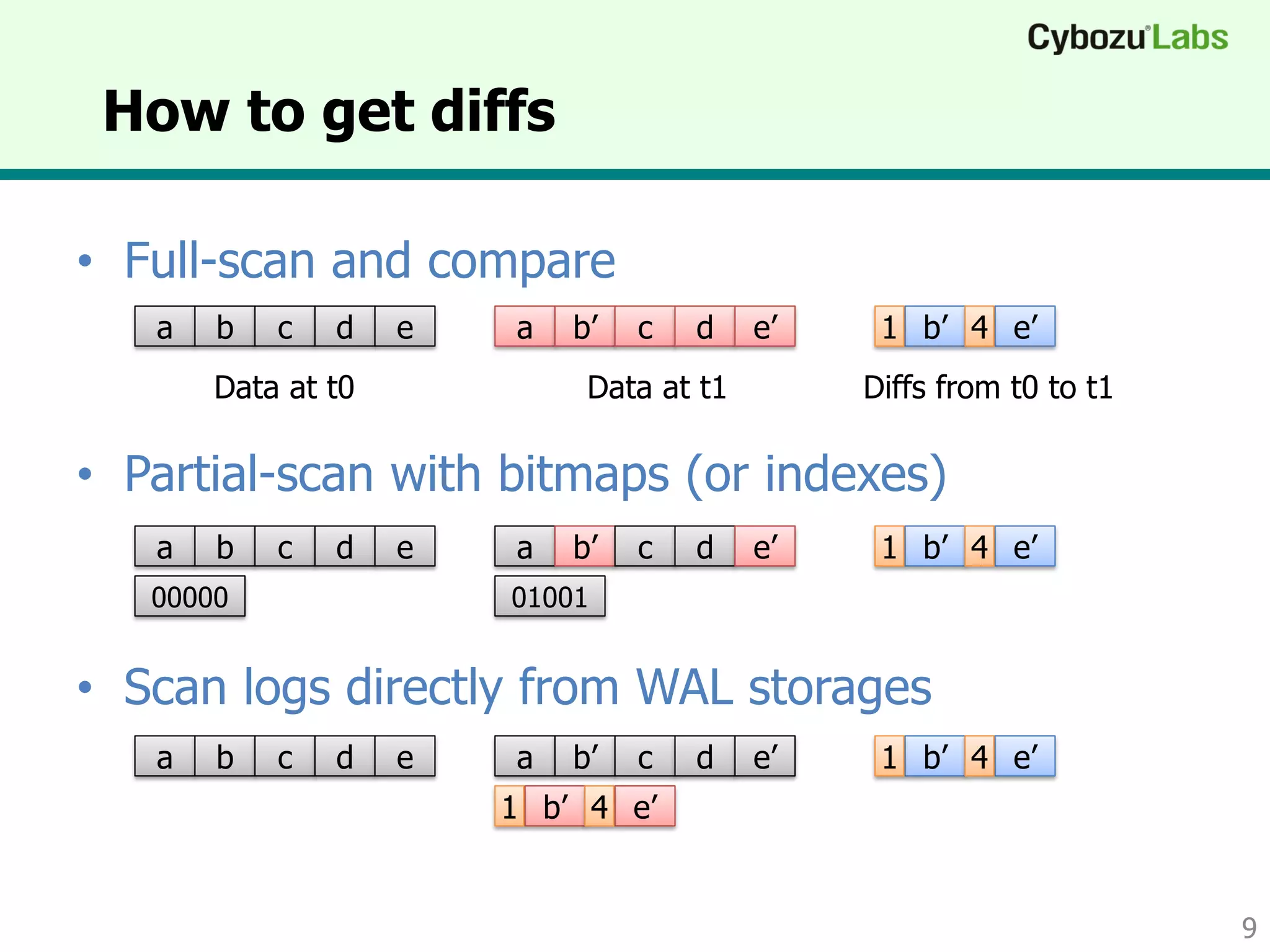

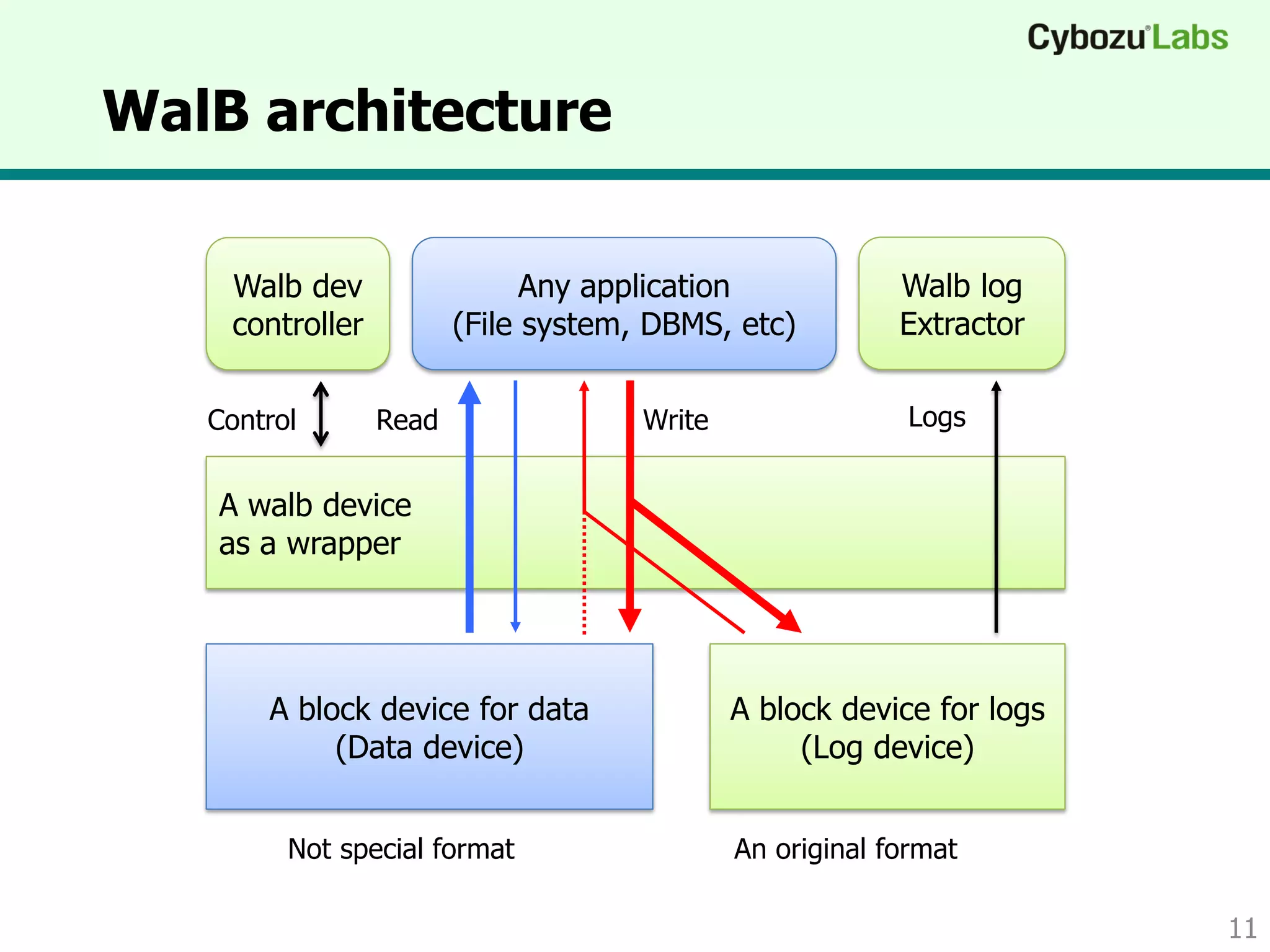

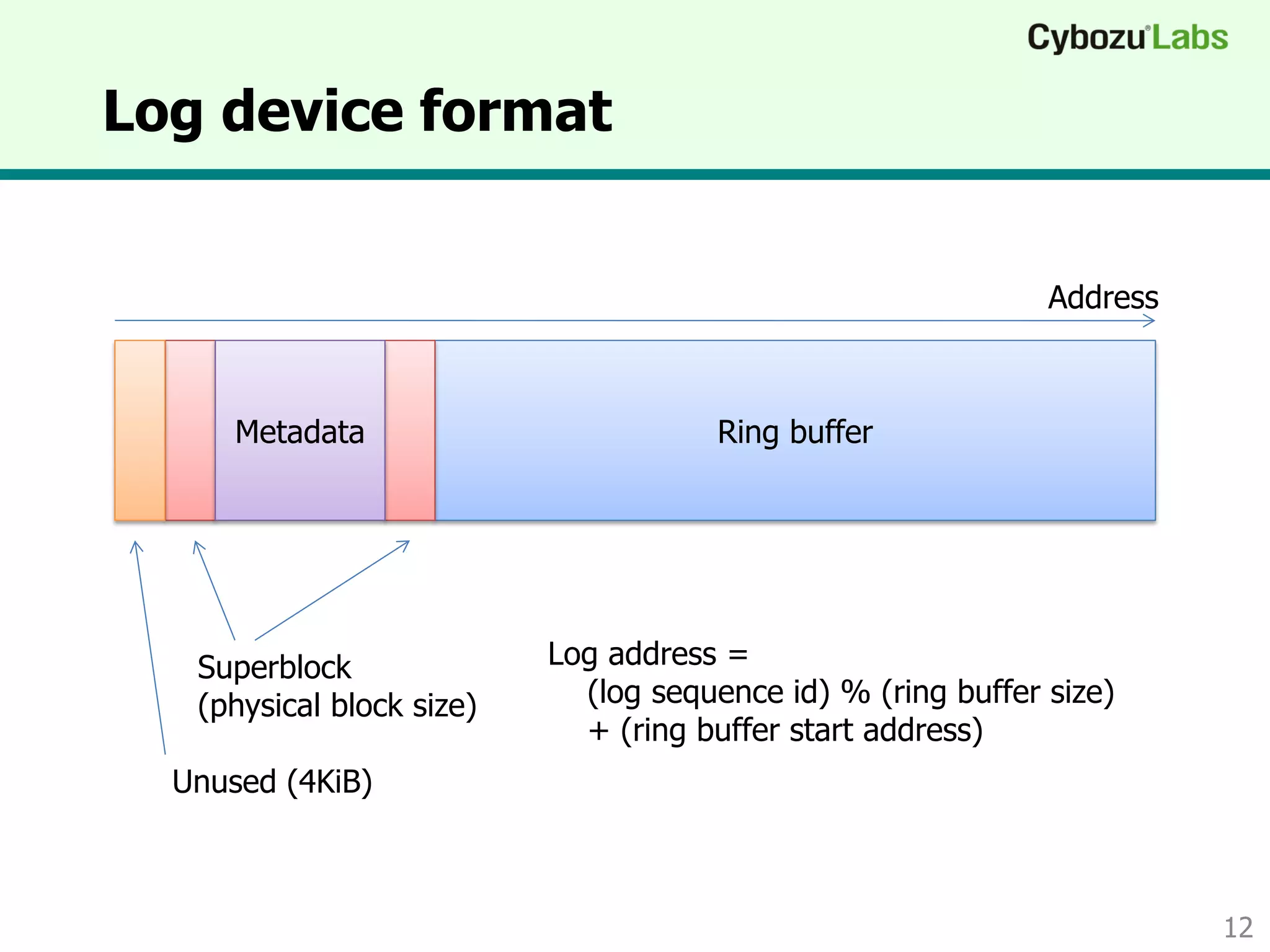

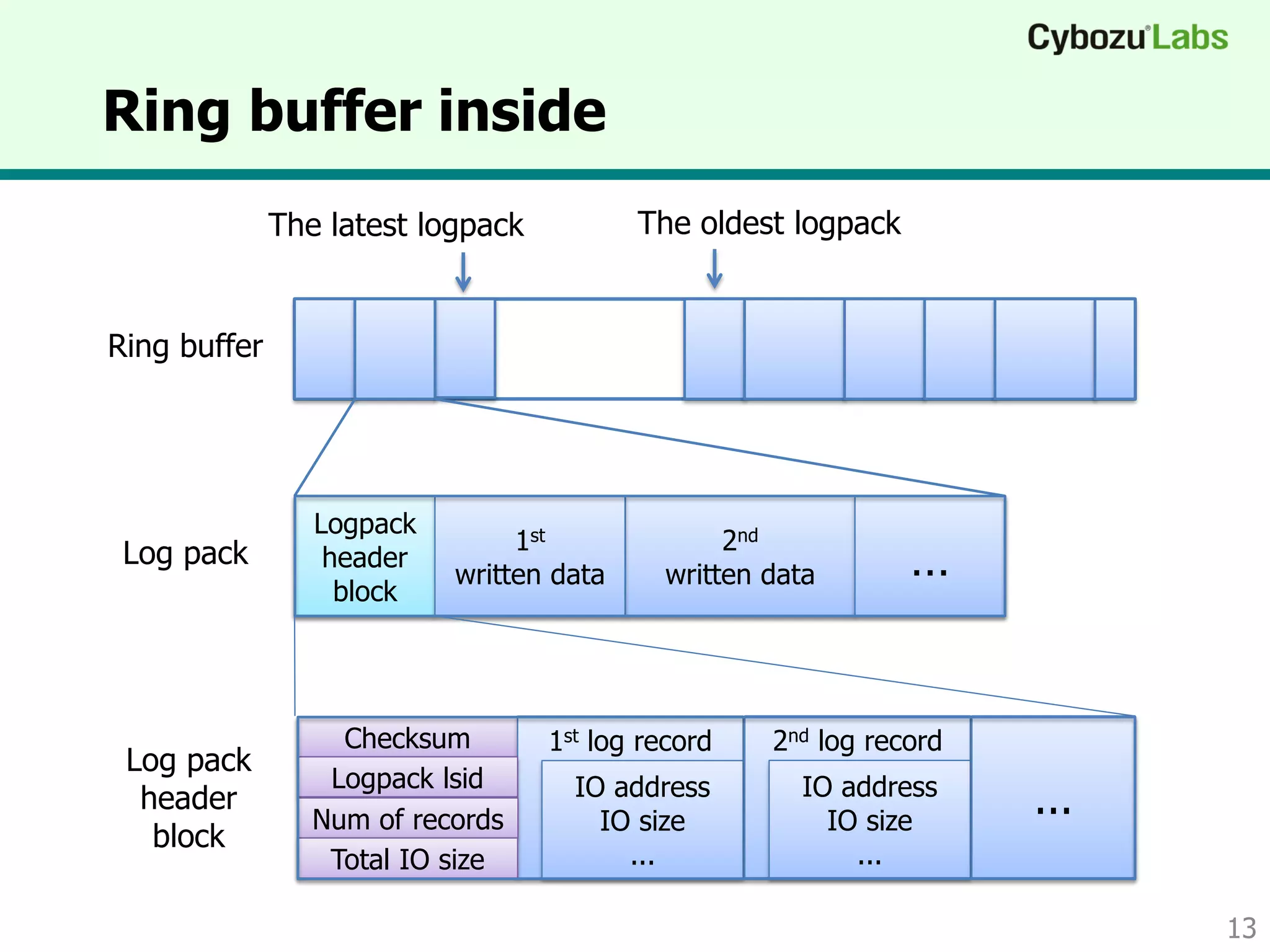

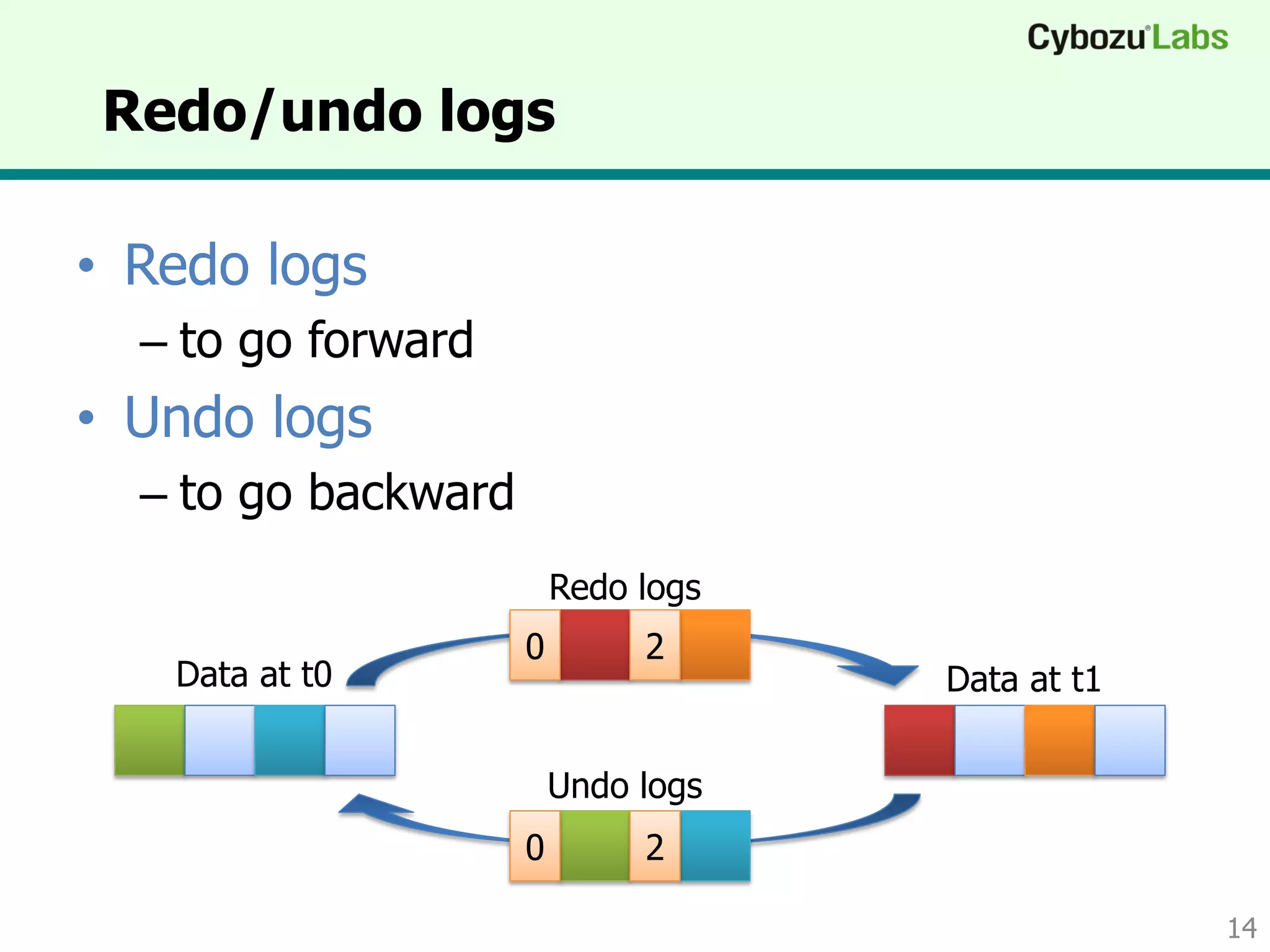

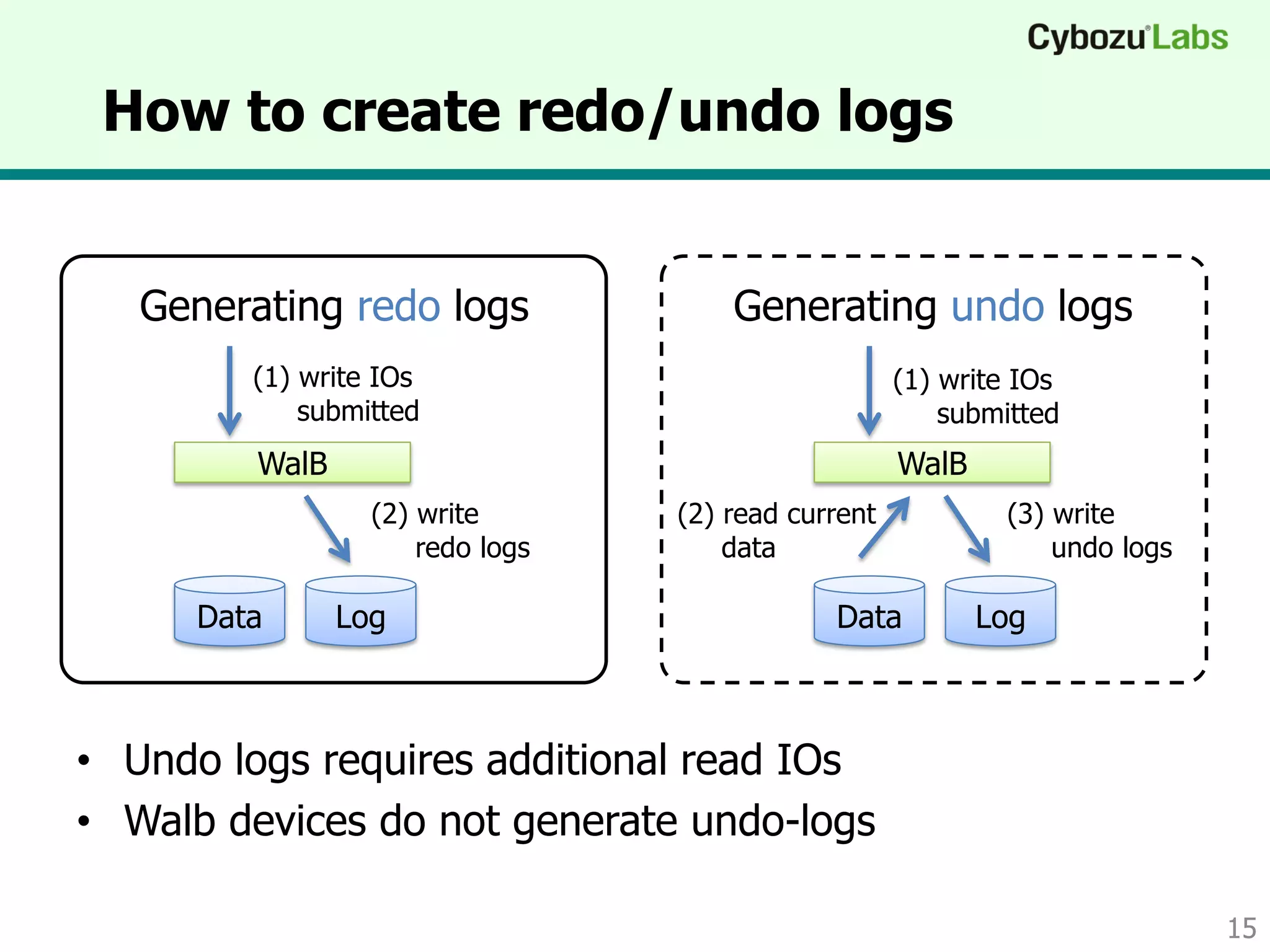

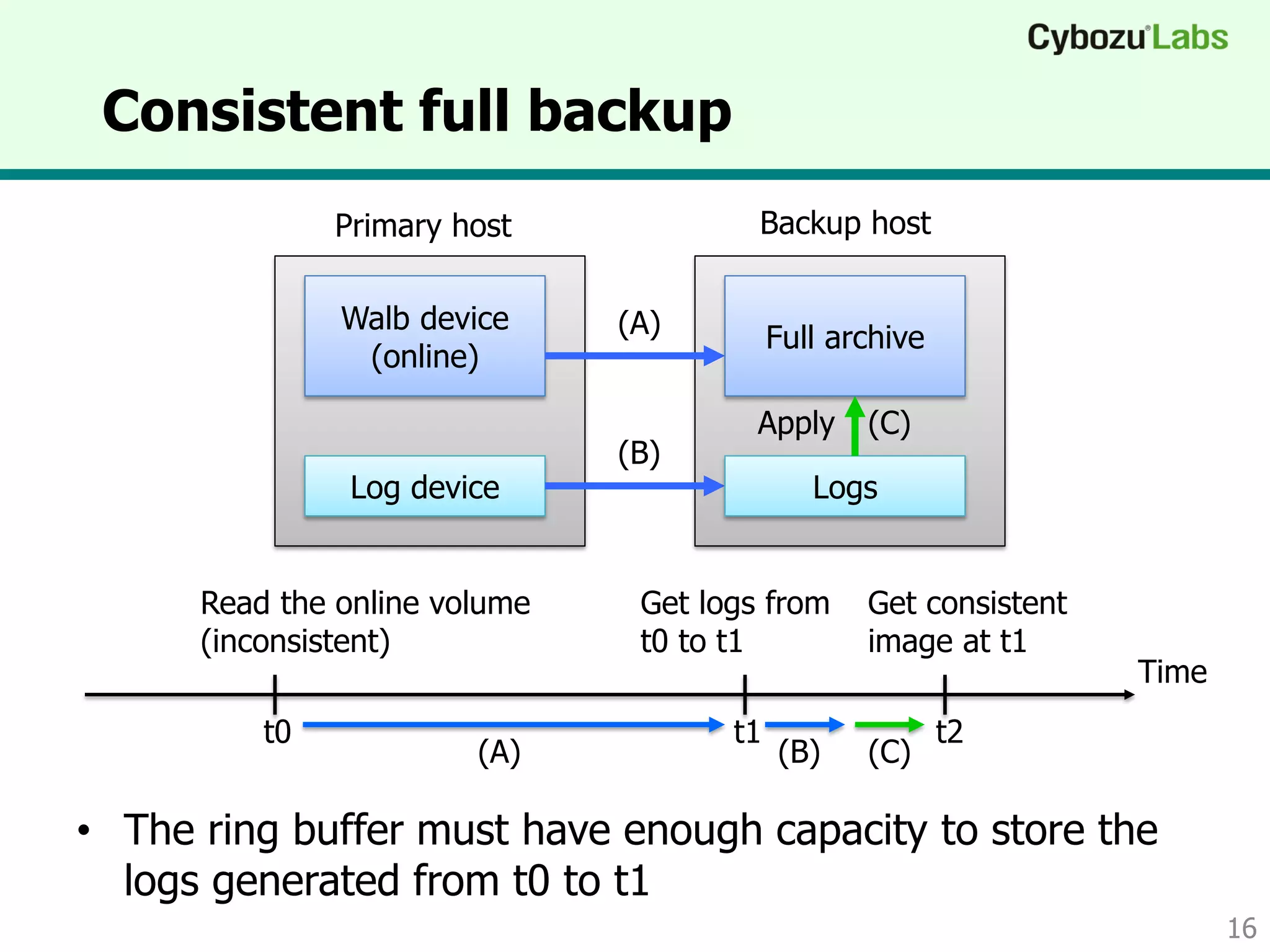

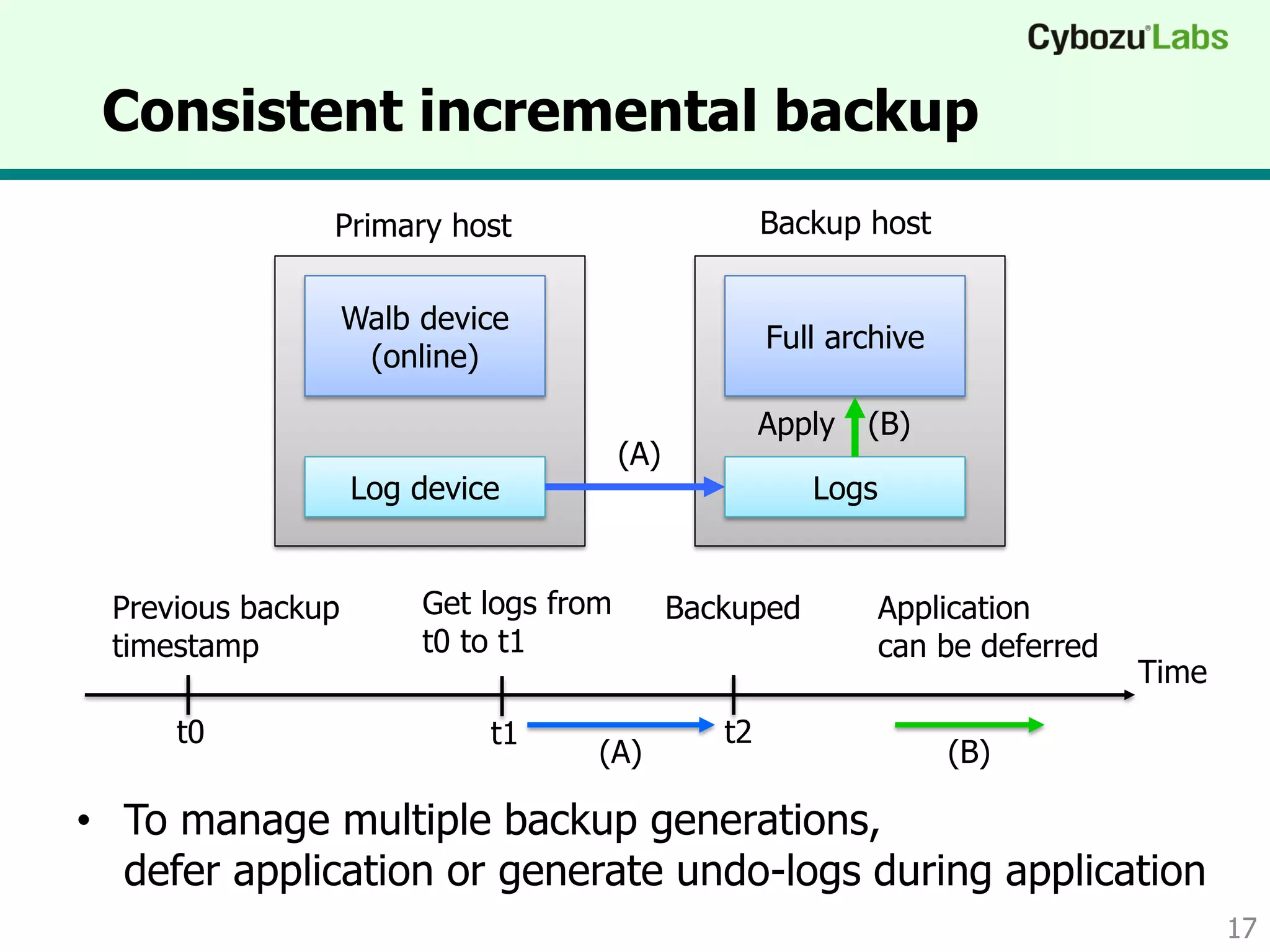

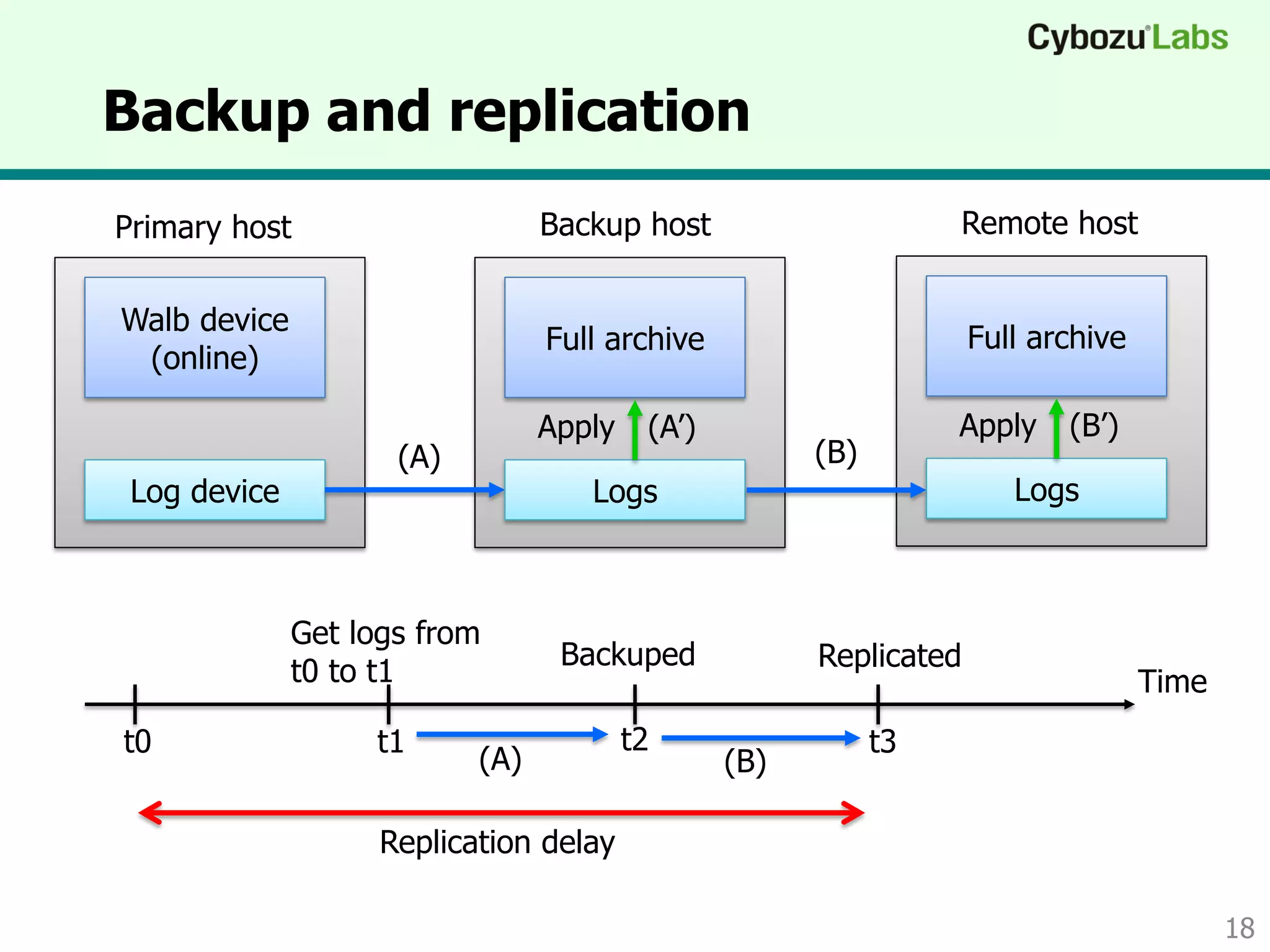

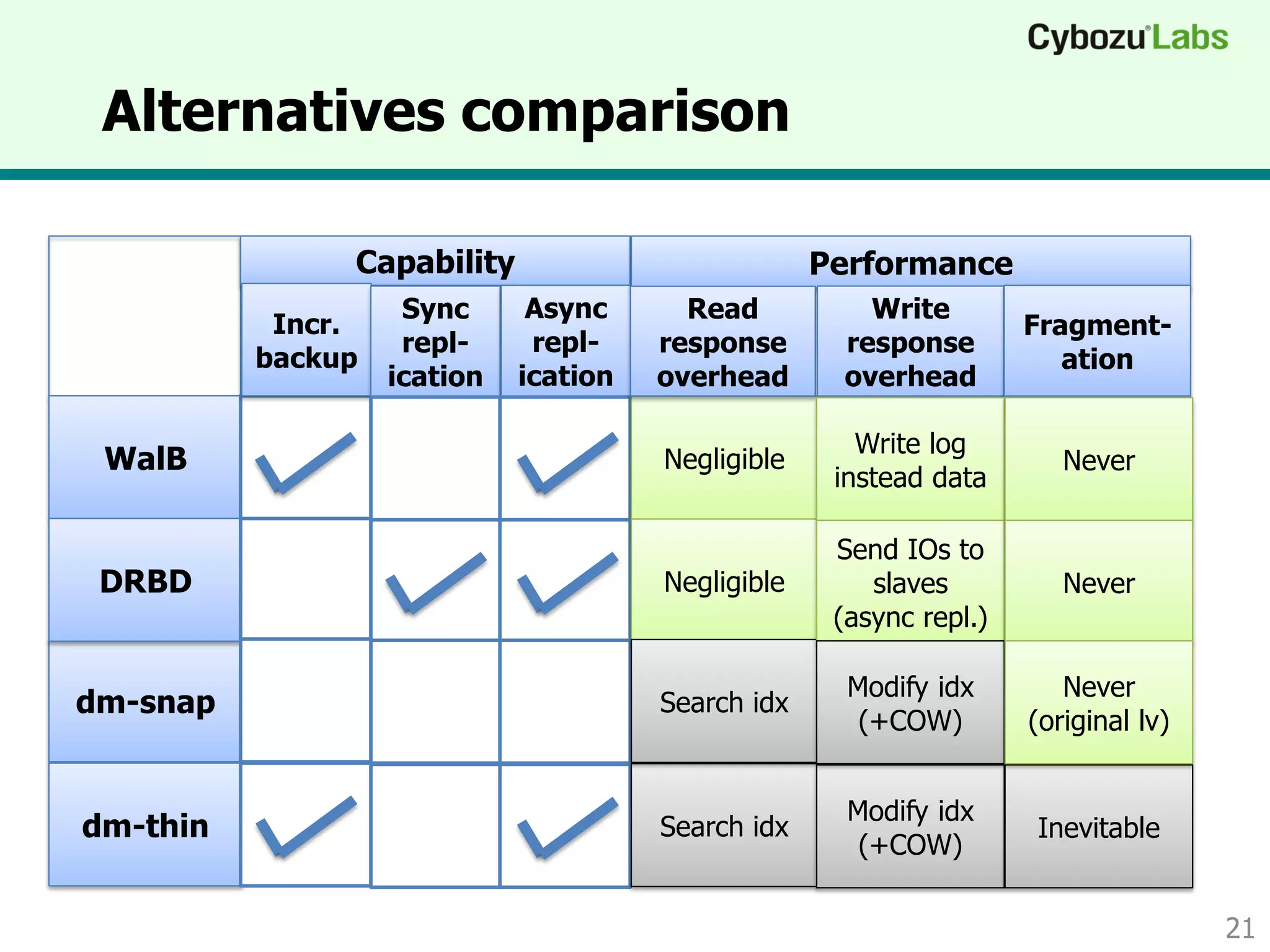

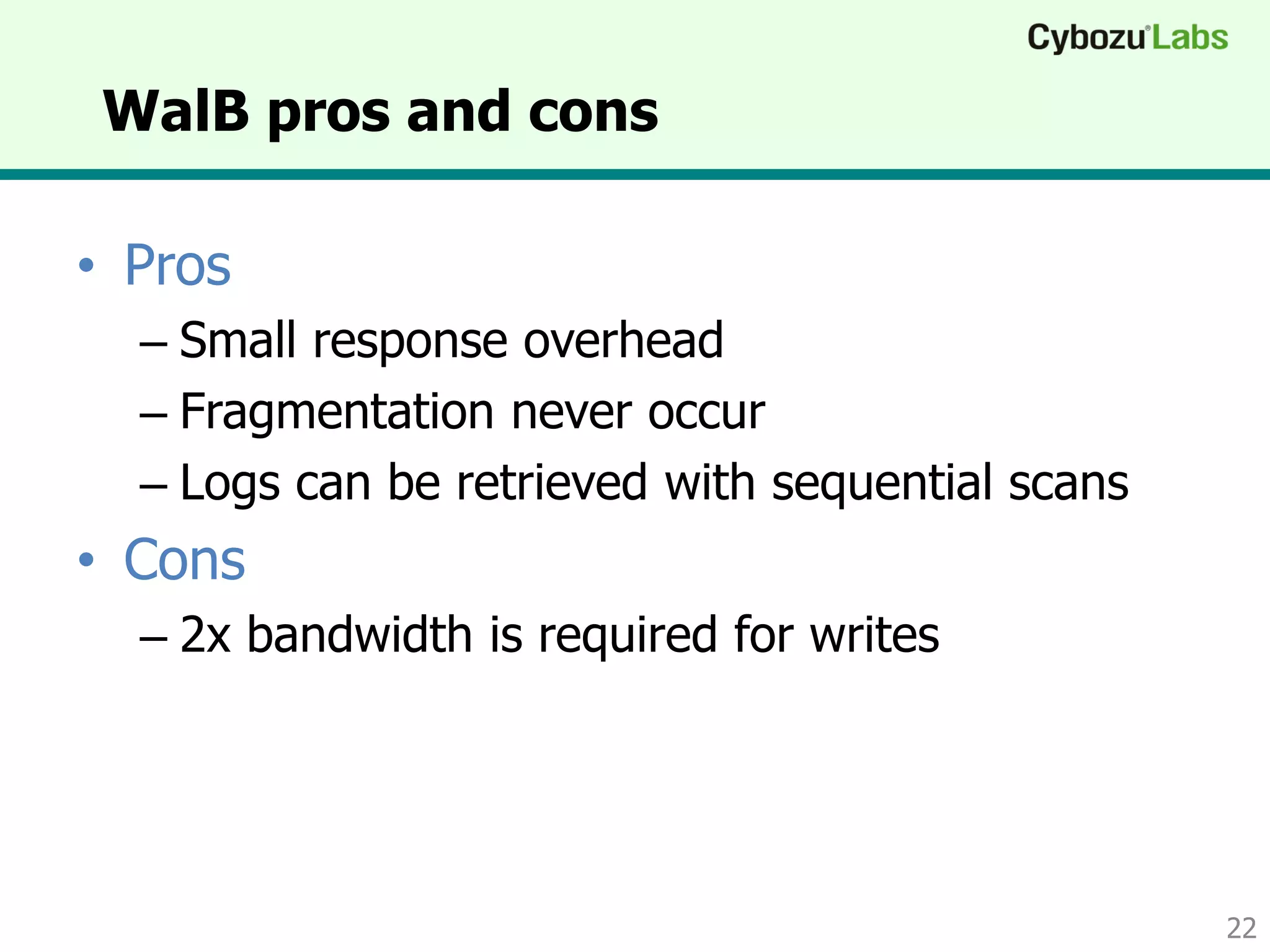

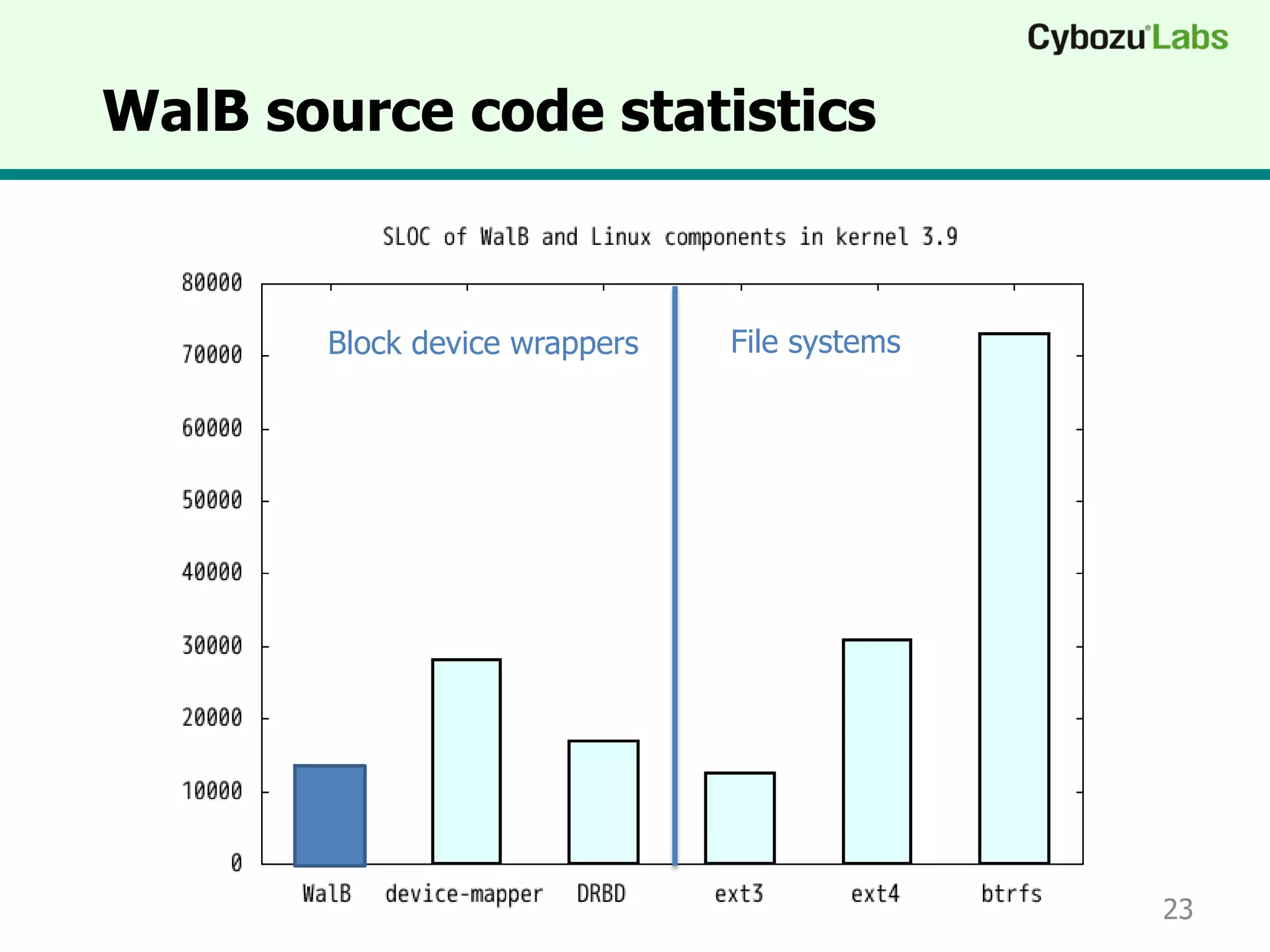

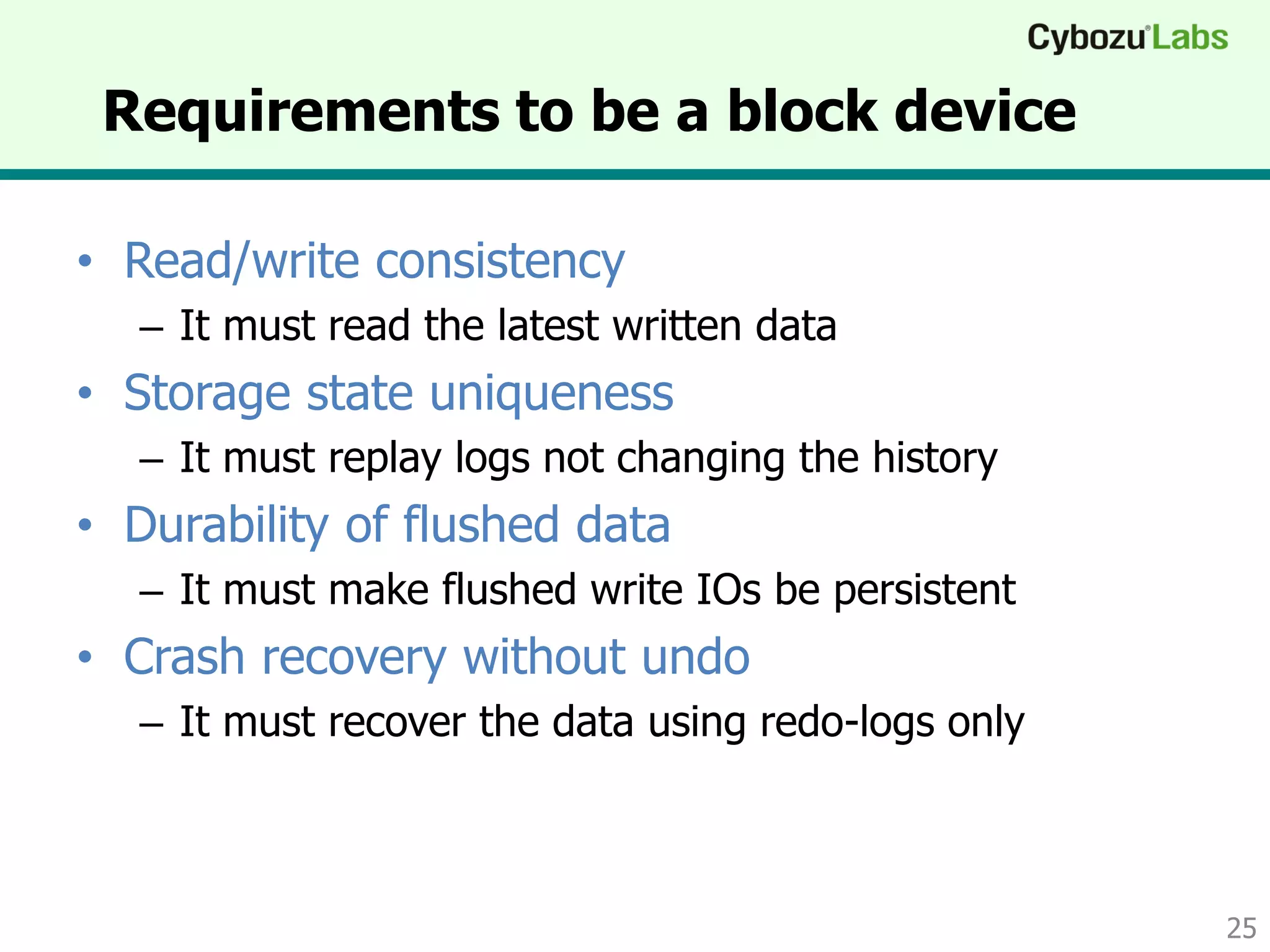

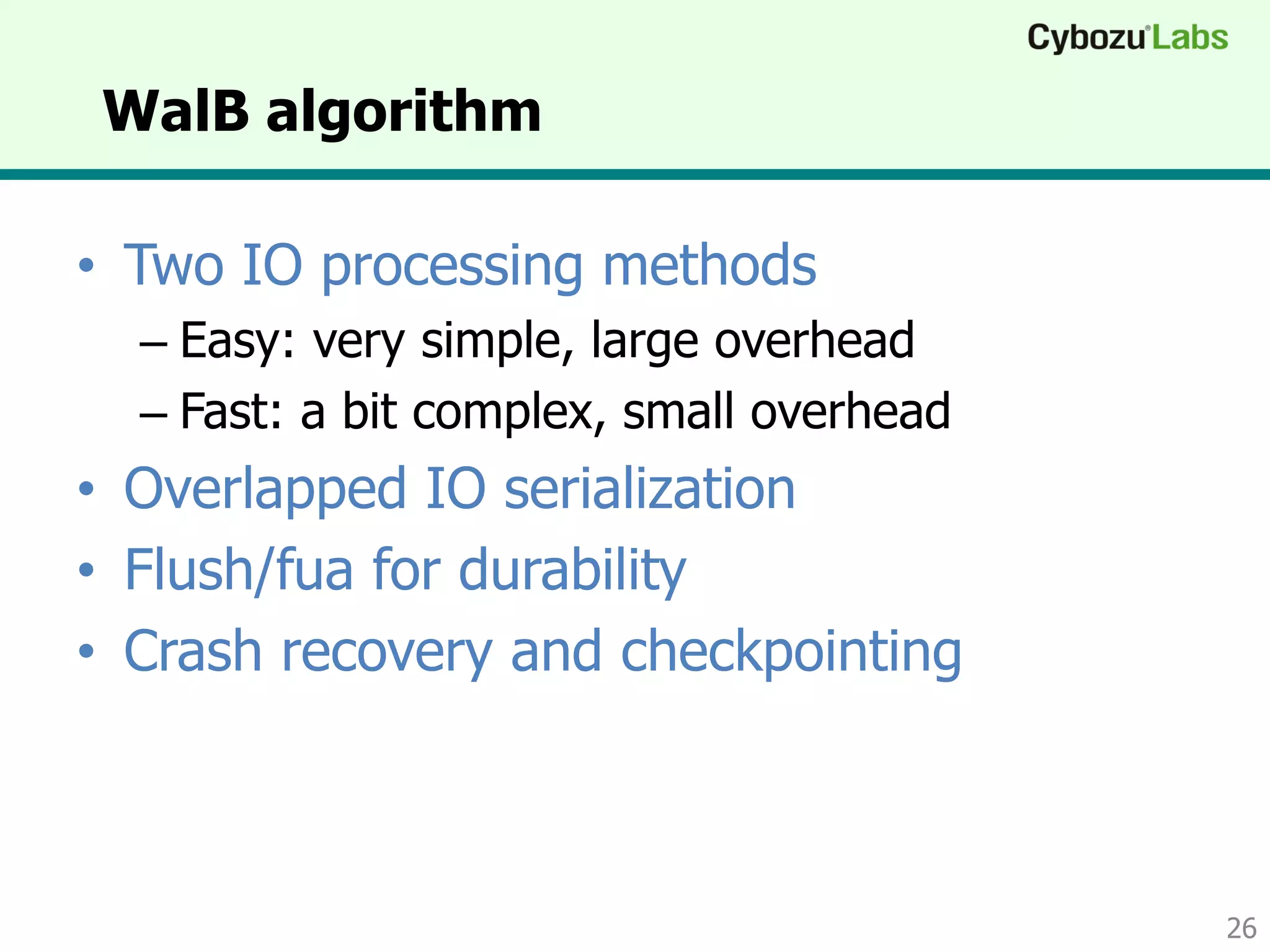

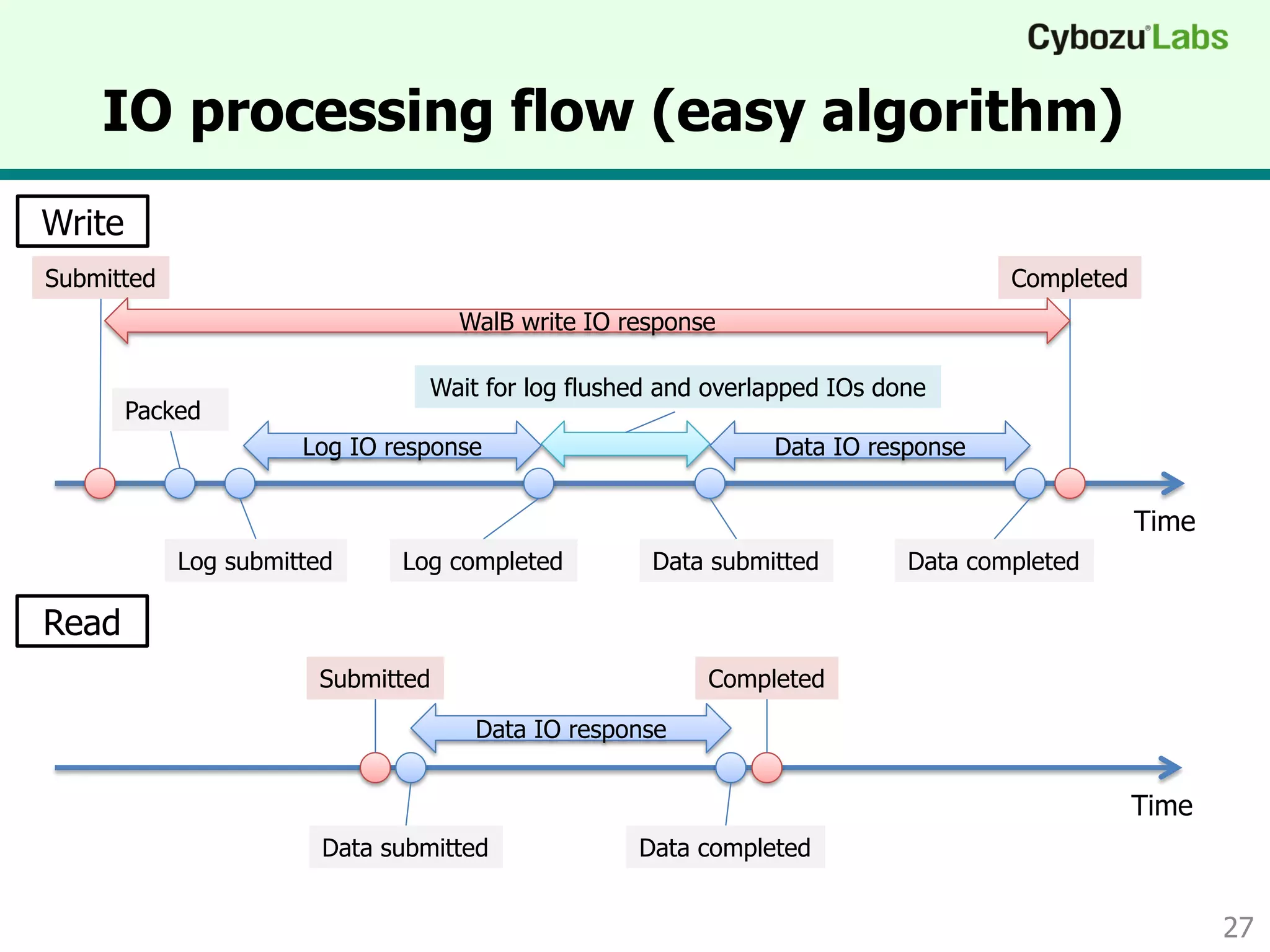

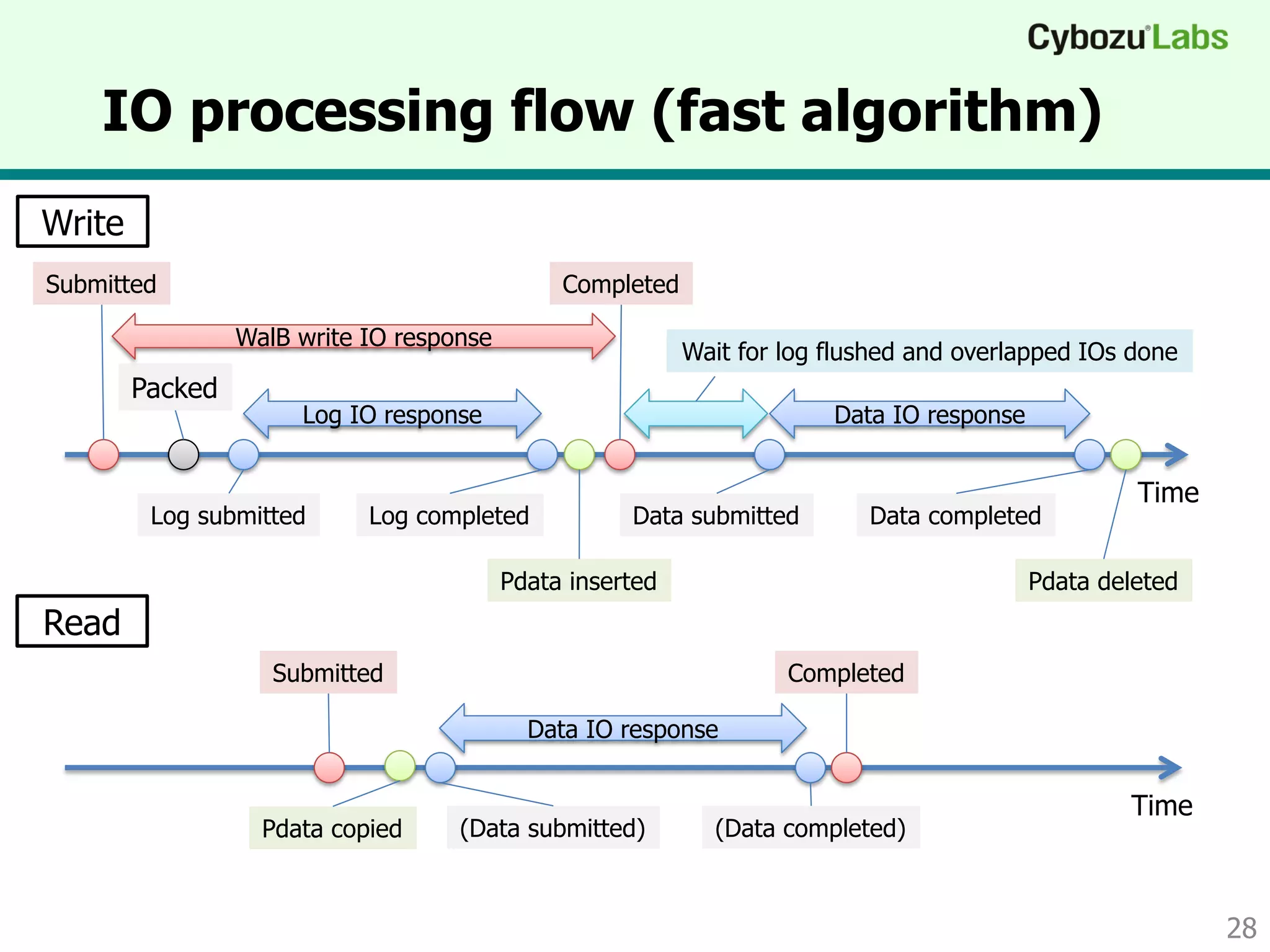

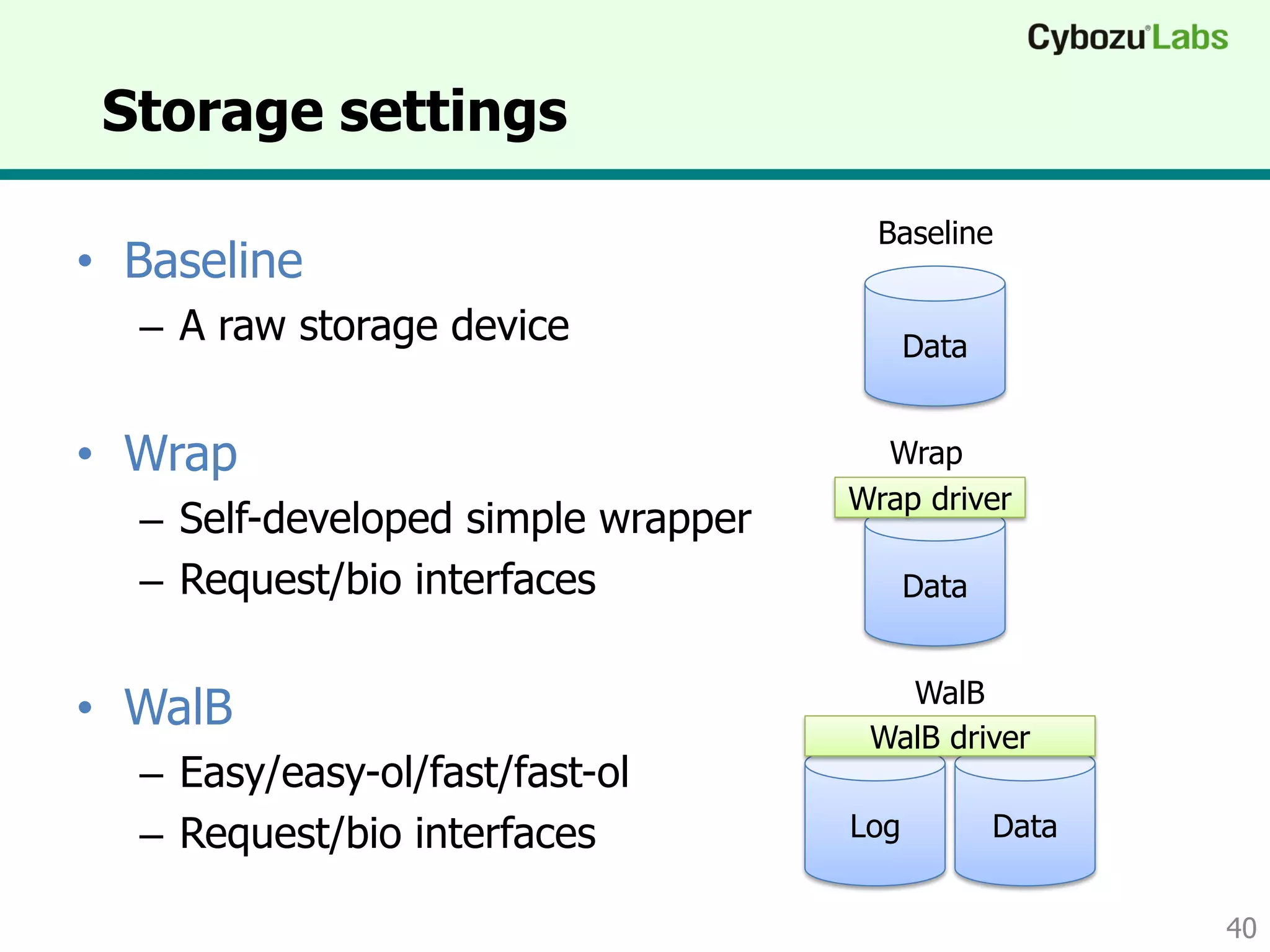

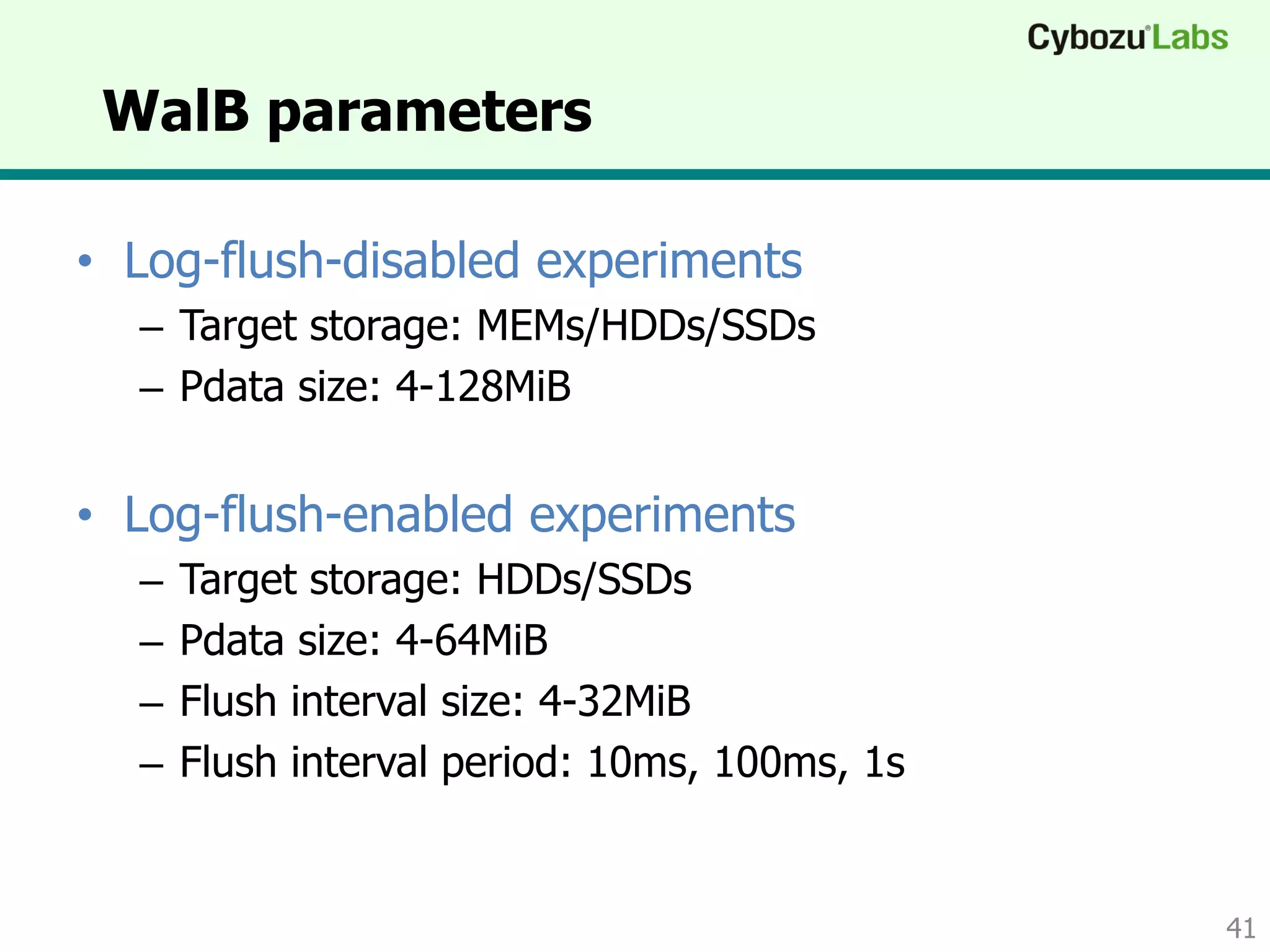

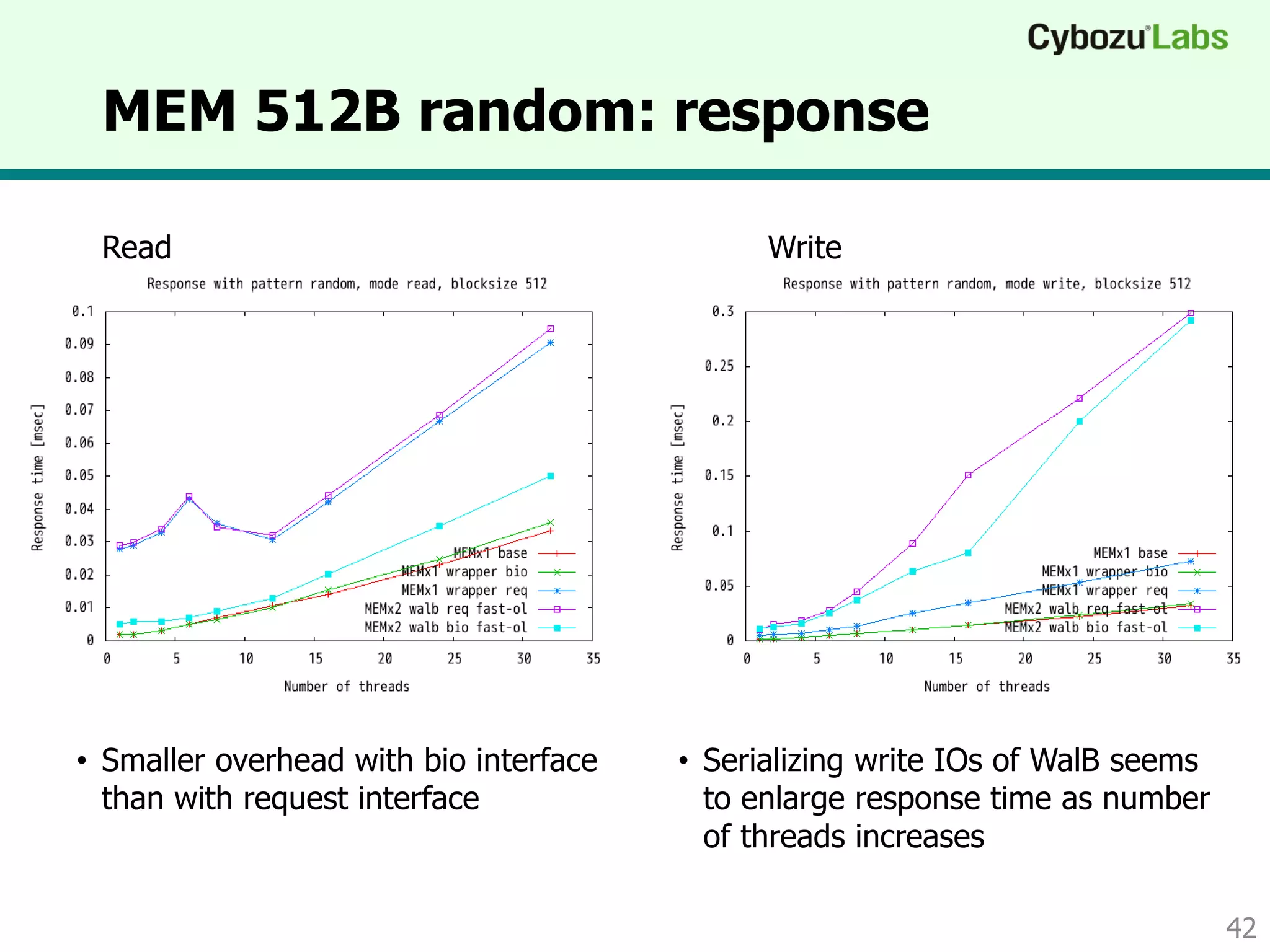

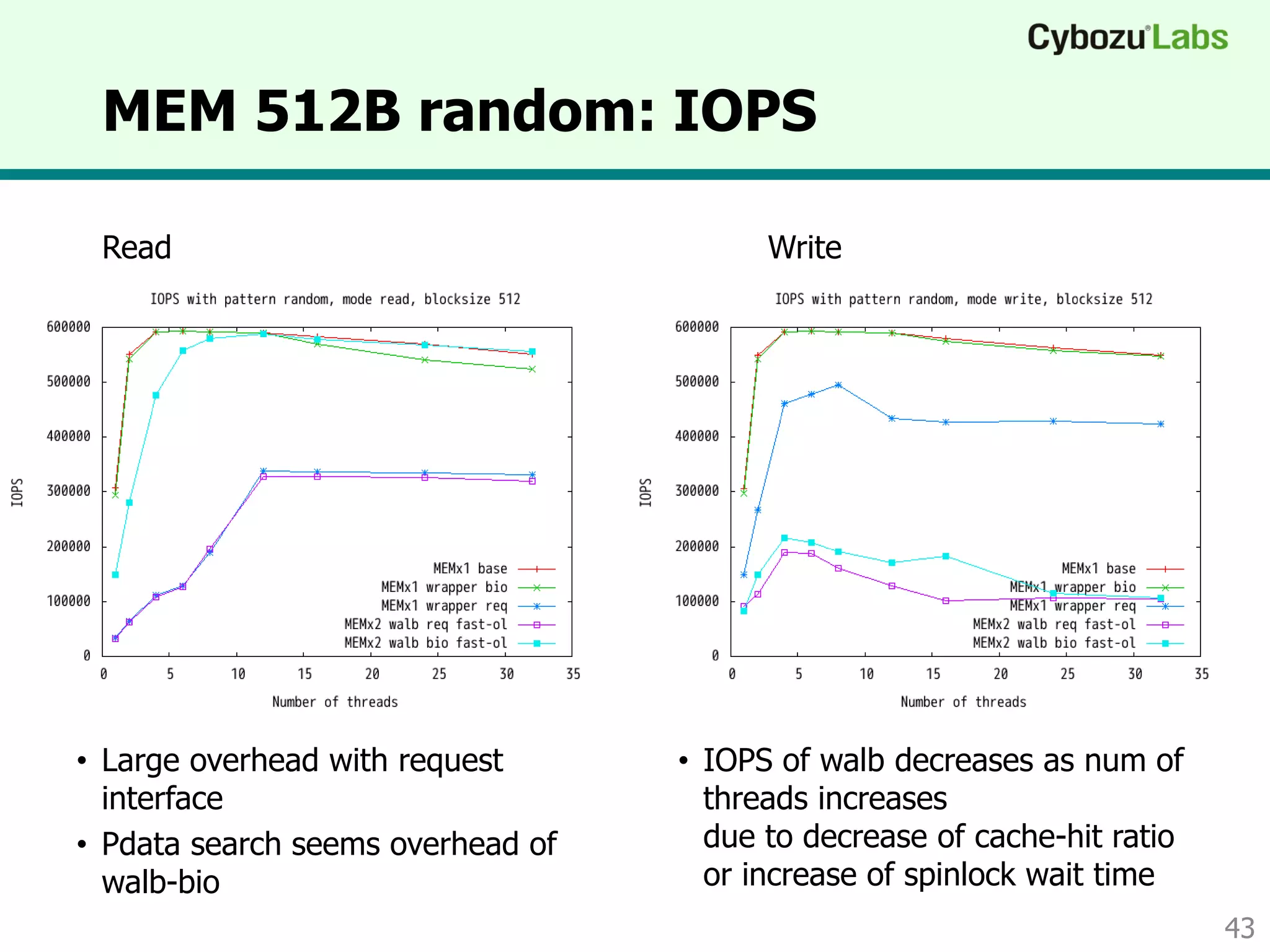

This document describes WalB, a Linux kernel device driver that provides efficient backup and replication of storage using block-level write-ahead logging (WAL). According to the document, its performance overhead is negligible and it avoids problems such as fragmentation. WalB wraps a block device and writes redo logs to a separate log device; diffs are then extracted from those logs for backup and replication. The document covers WalB's architecture, algorithm, performance evaluation, and future work.
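The wrap, log, and extract flow summarized above can be illustrated with a small user-space toy model. This is only a sketch under stated assumptions, not WalB's kernel implementation or on-disk format: the names `WalDevice`, `LogRecord`, `extract_diff`, `apply_diff`, and the 4096-byte `BLOCK_SIZE` are all hypothetical, and the devices are modeled as in-memory Python structures.

```python
from dataclasses import dataclass, field

BLOCK_SIZE = 4096  # hypothetical block size for this toy model


@dataclass
class LogRecord:
    lsid: int    # log sequence id, increasing with every write (assumed name)
    block: int   # block address on the data device
    data: bytes  # full payload of the written block


@dataclass
class WalDevice:
    """Toy wrapper: each write is appended to the log, then applied to the data device."""
    data_dev: dict = field(default_factory=dict)  # block address -> block contents
    log_dev: list = field(default_factory=list)   # append-only redo log

    def write(self, block: int, data: bytes) -> int:
        if len(data) != BLOCK_SIZE:
            raise ValueError("data must be exactly one block")
        lsid = len(self.log_dev)  # in this model the lsid equals the log index
        self.log_dev.append(LogRecord(lsid, block, data))  # redo log first
        self.data_dev[block] = data                        # then the data device
        return lsid

    def read(self, block: int) -> bytes:
        # Unwritten blocks read back as zeros in this model.
        return self.data_dev.get(block, bytes(BLOCK_SIZE))

    def extract_diff(self, from_lsid: int, to_lsid: int) -> dict:
        """Collapse log records in [from_lsid, to_lsid) into block -> latest contents."""
        diff = {}
        for rec in self.log_dev[from_lsid:to_lsid]:
            diff[rec.block] = rec.data  # later writes to a block override earlier ones
        return diff


def apply_diff(replica: dict, diff: dict) -> None:
    """Bring a replica (block -> contents) up to date by applying an extracted diff."""
    replica.update(diff)
```

In this model, a backup or replication target that has already applied everything before some lsid only needs the diff from that lsid onward. Because repeated writes to the same block collapse into a single entry, the diff can be smaller than the raw log range it was extracted from.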