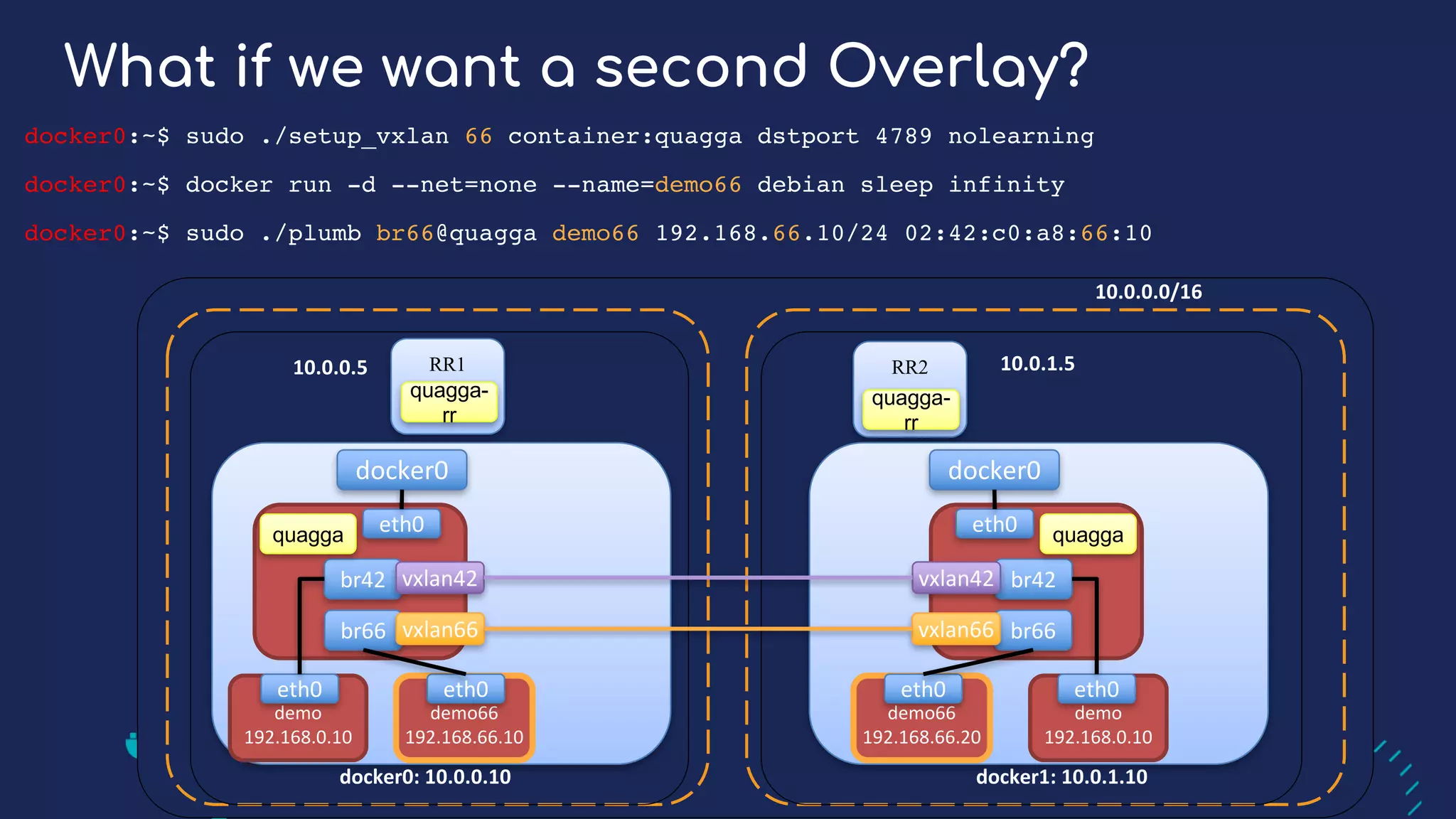

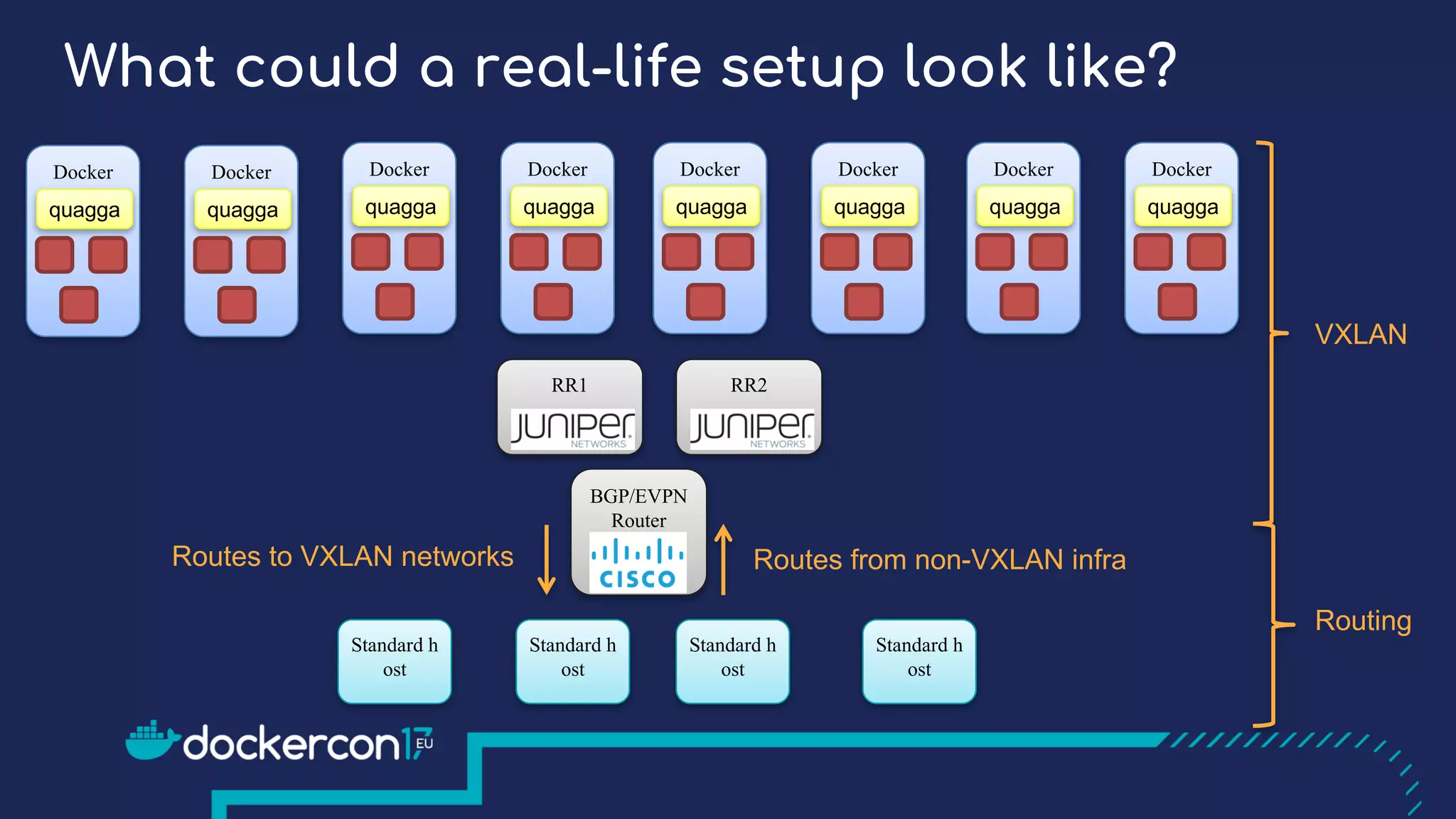

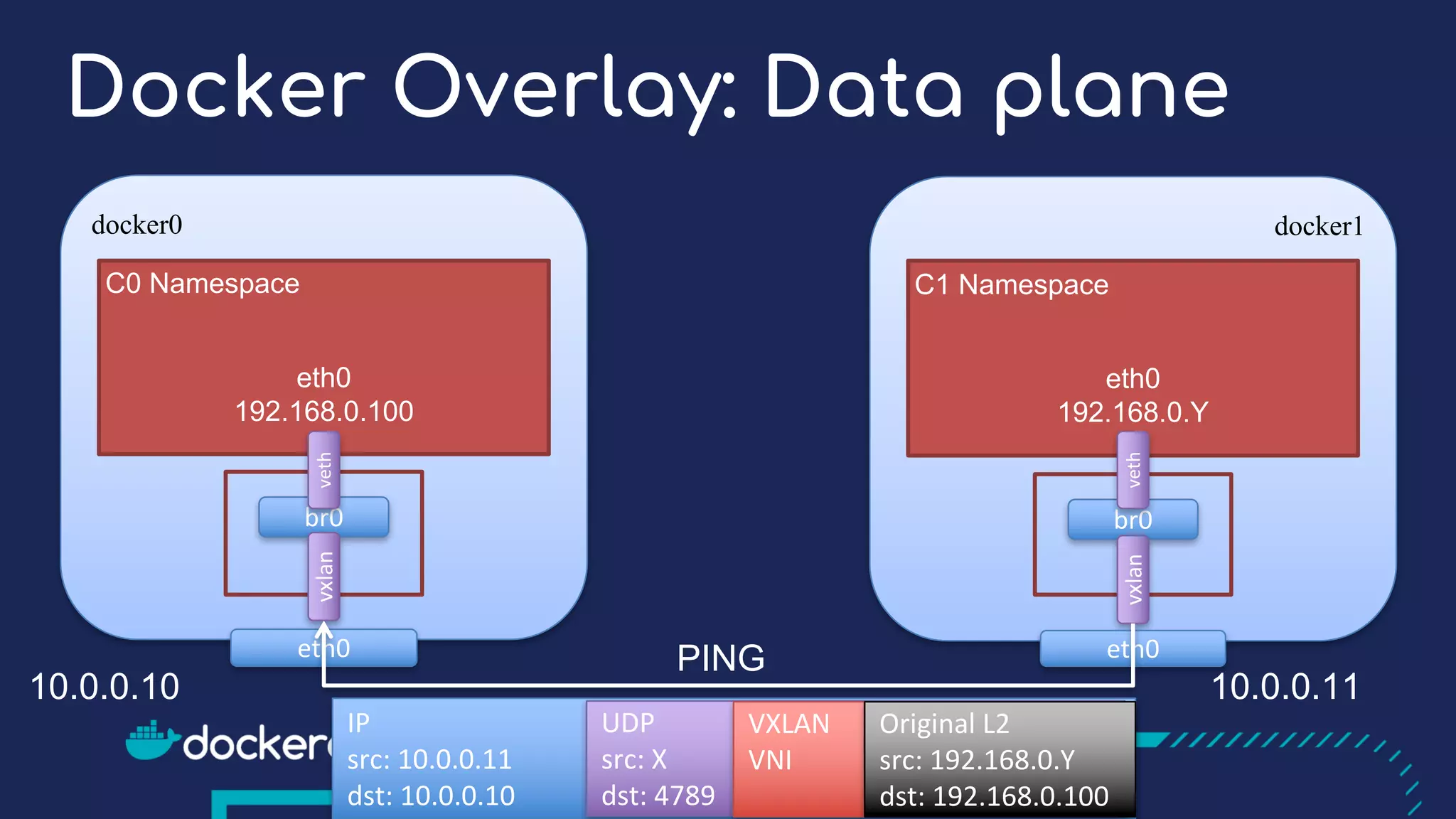

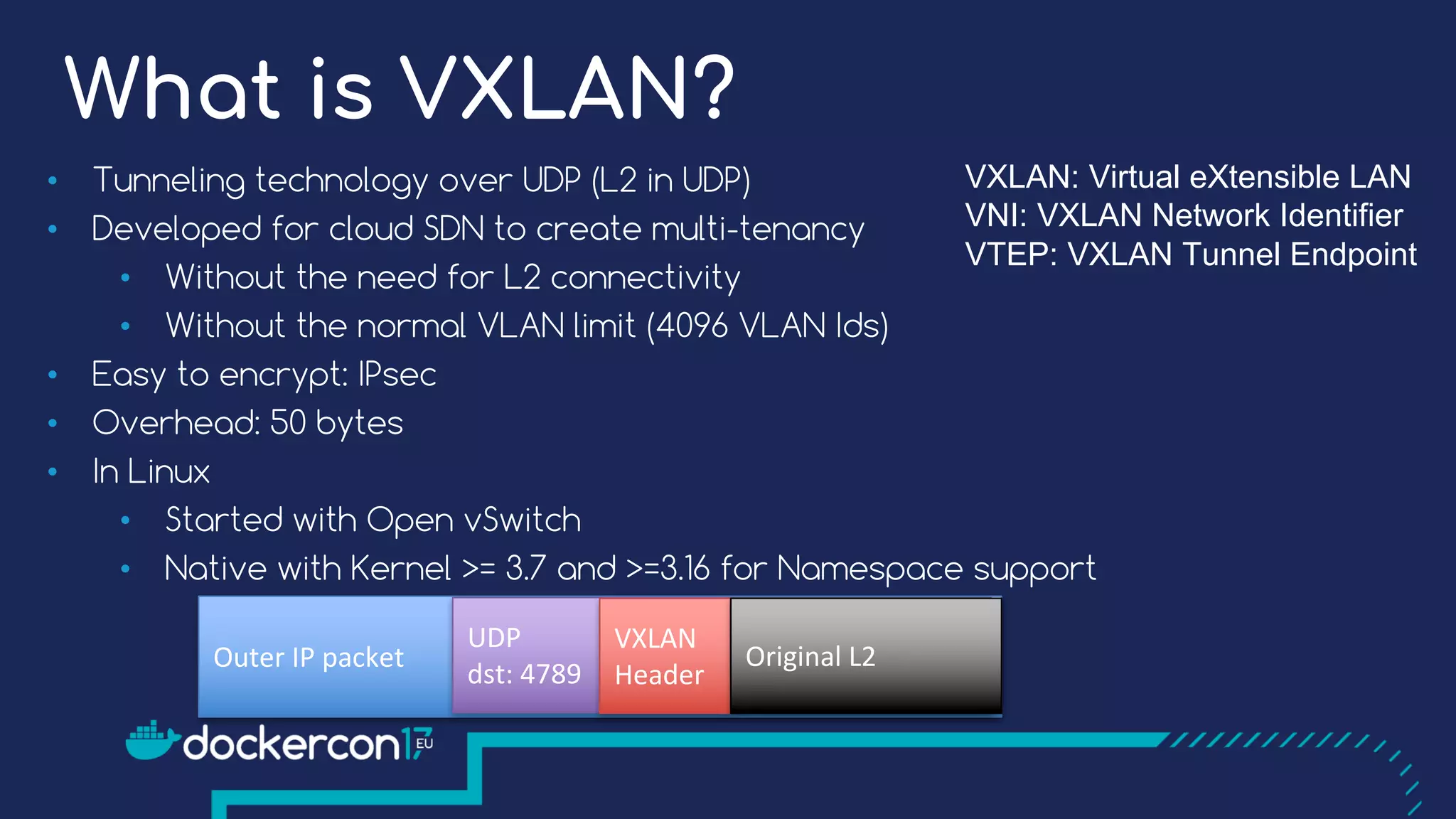

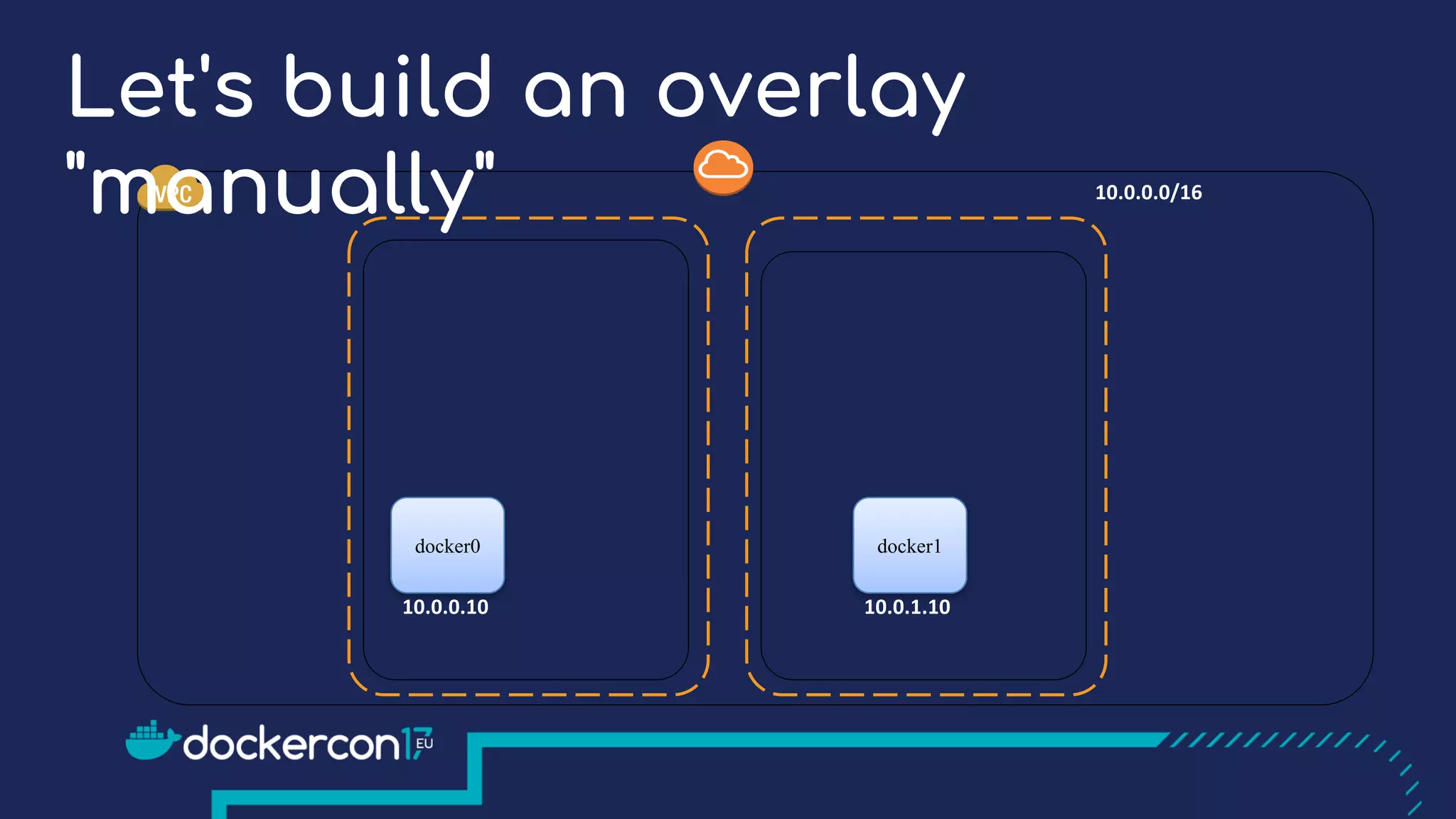

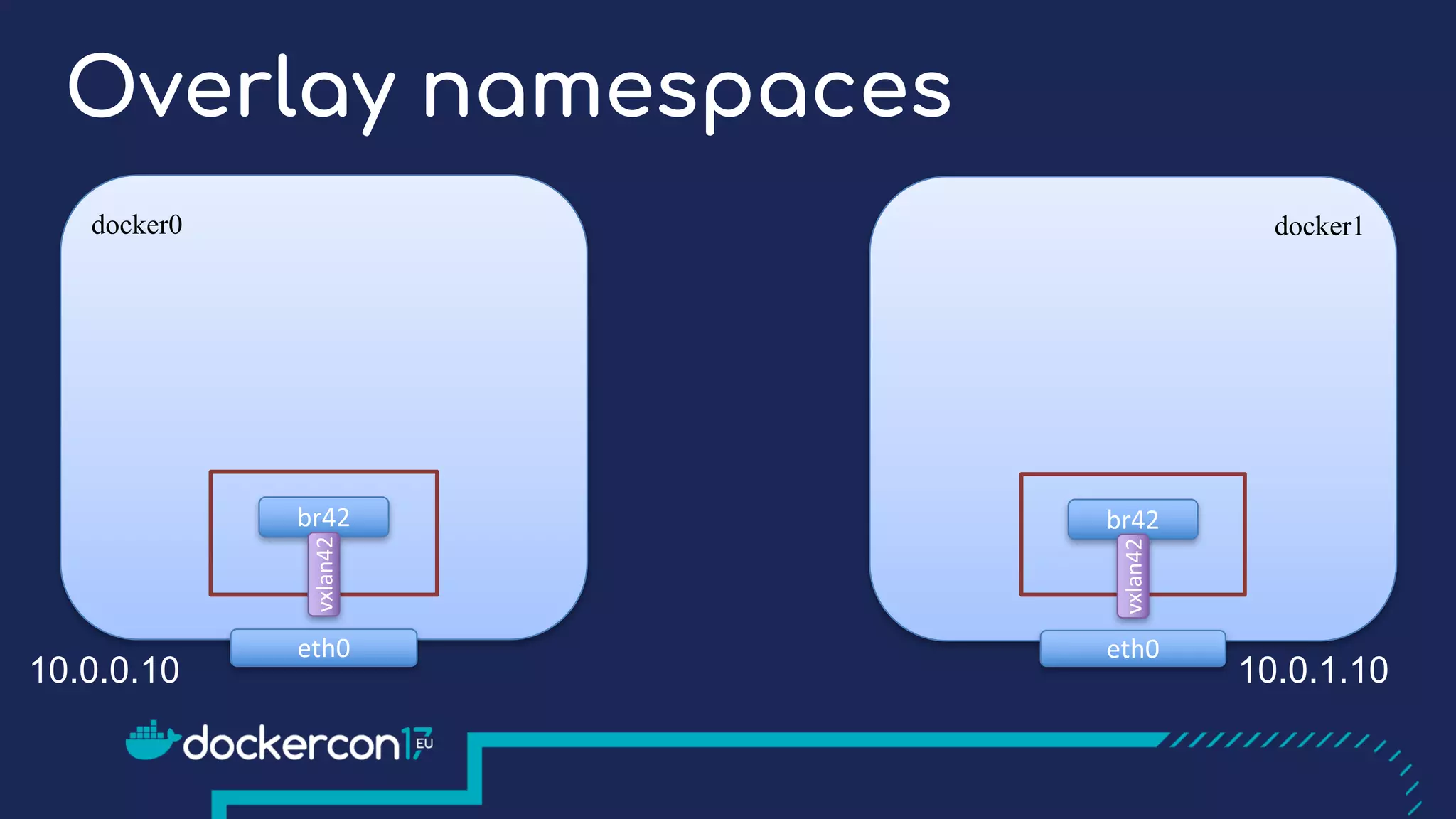

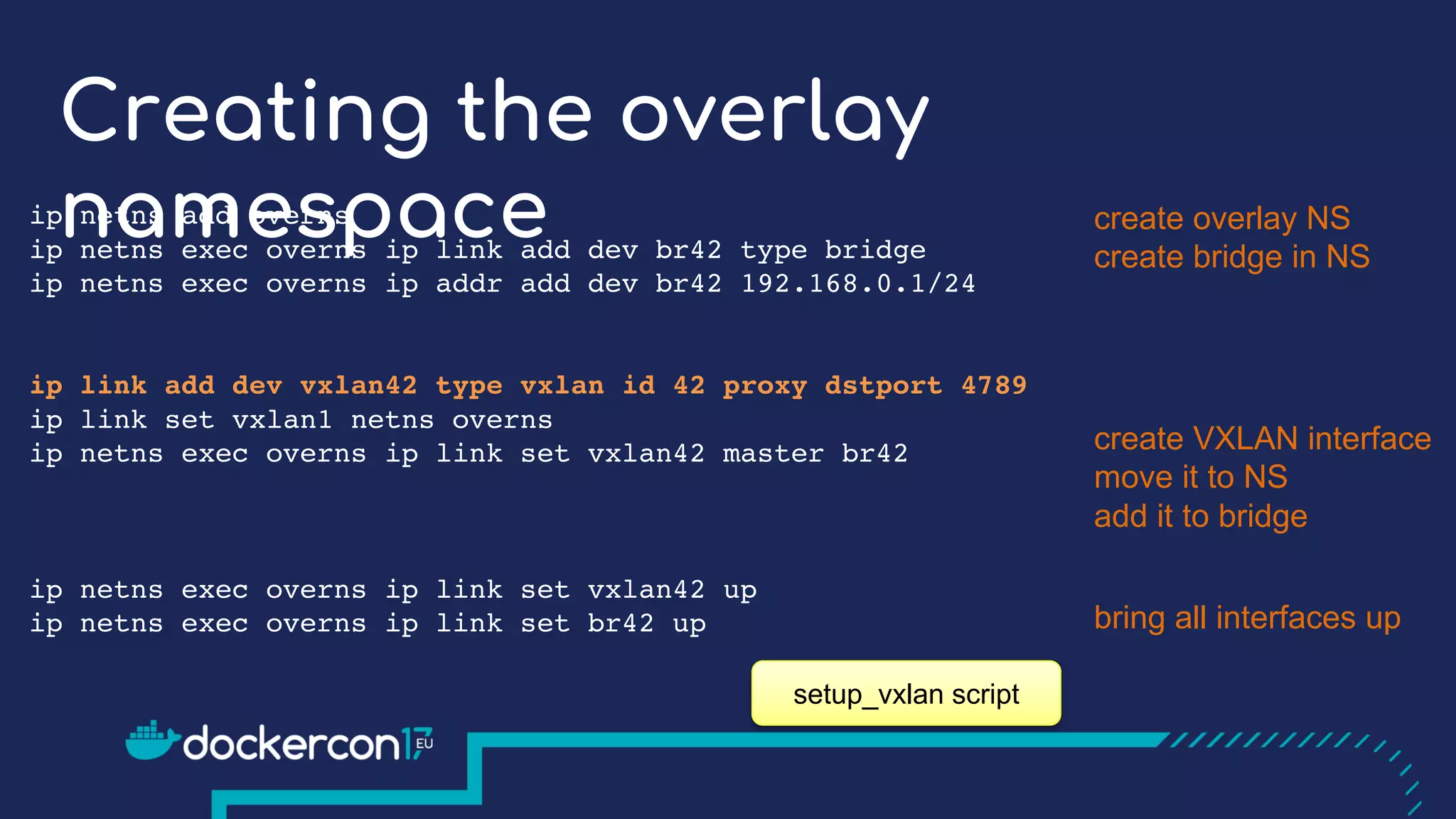

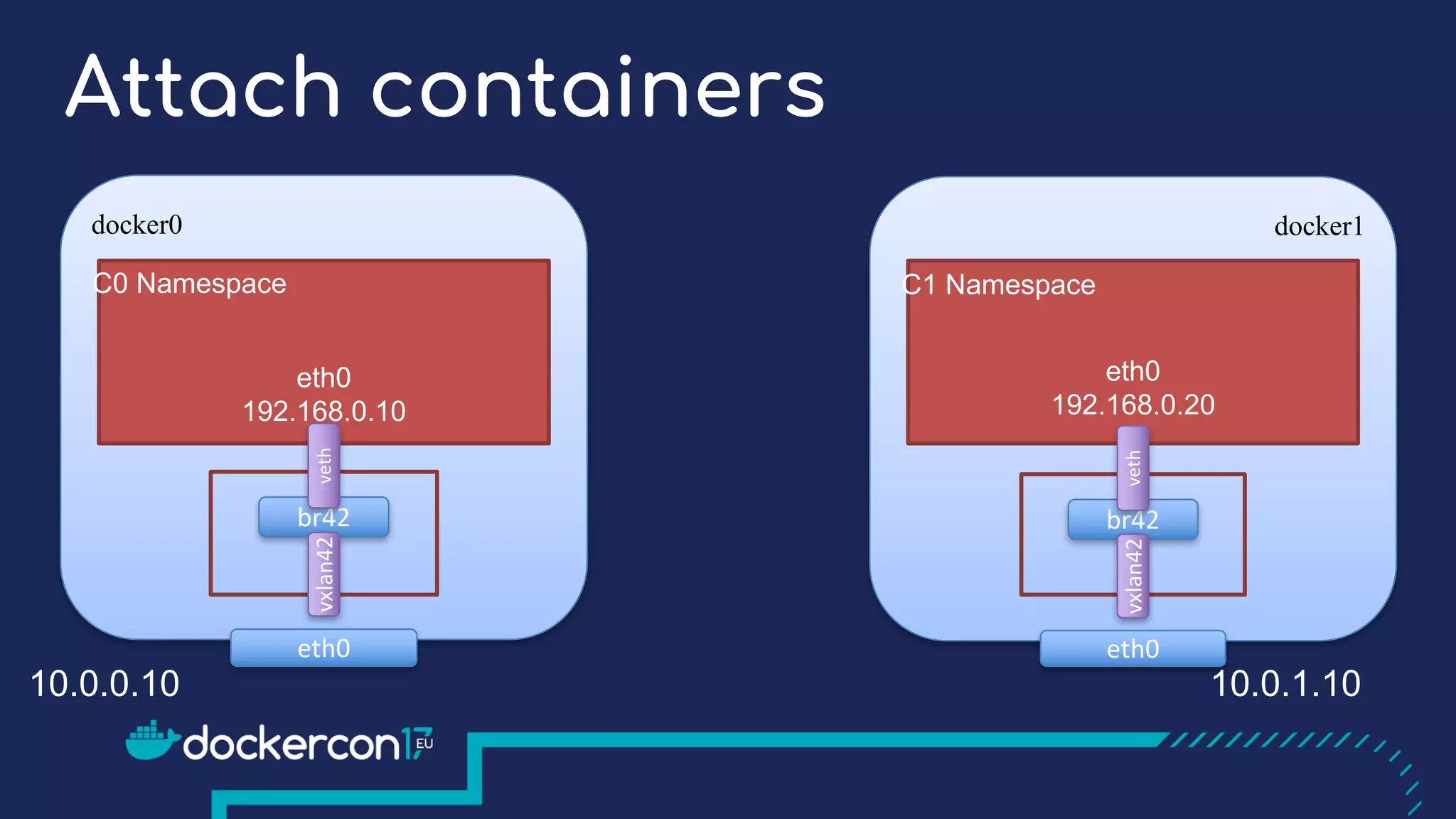

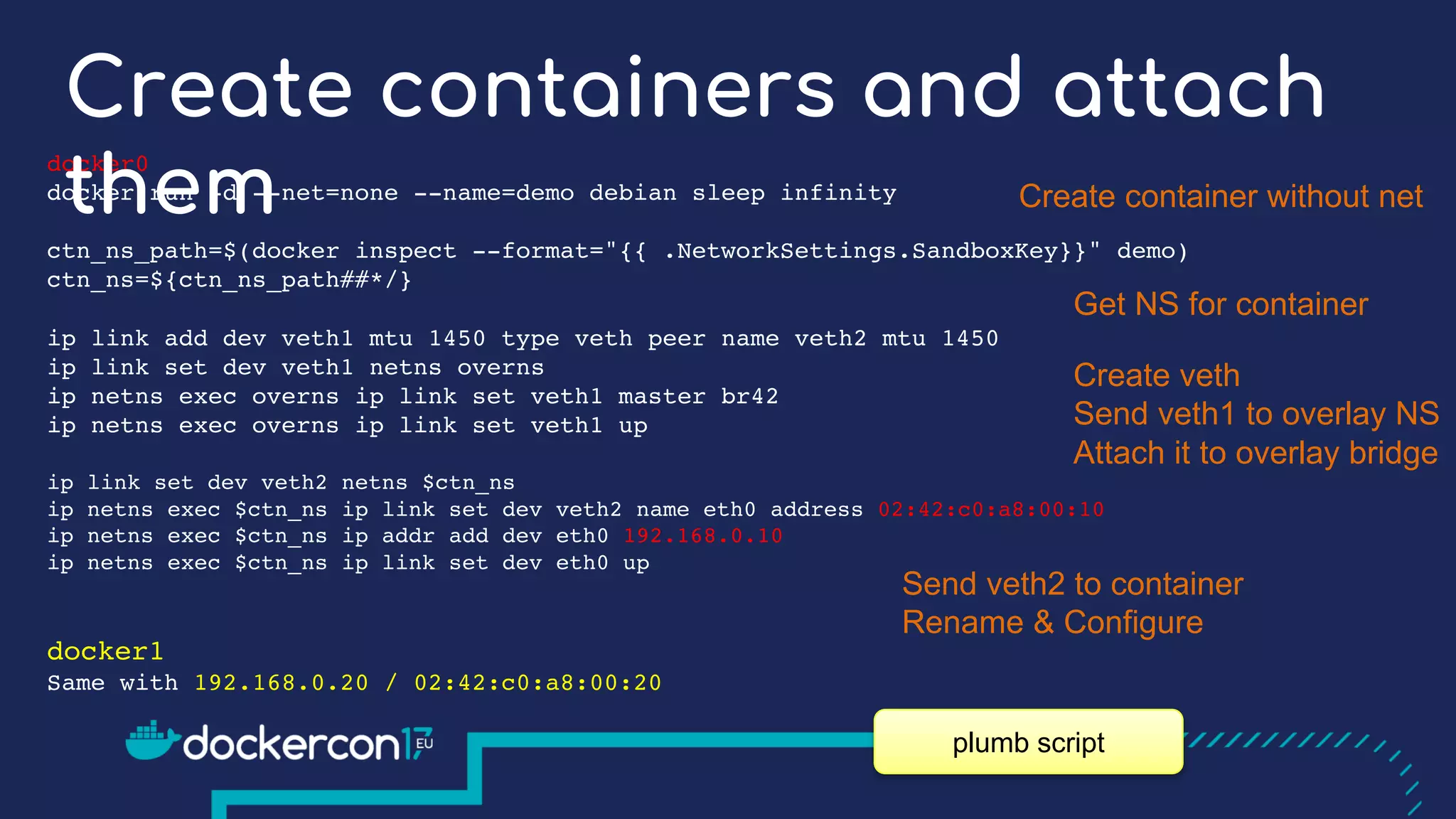

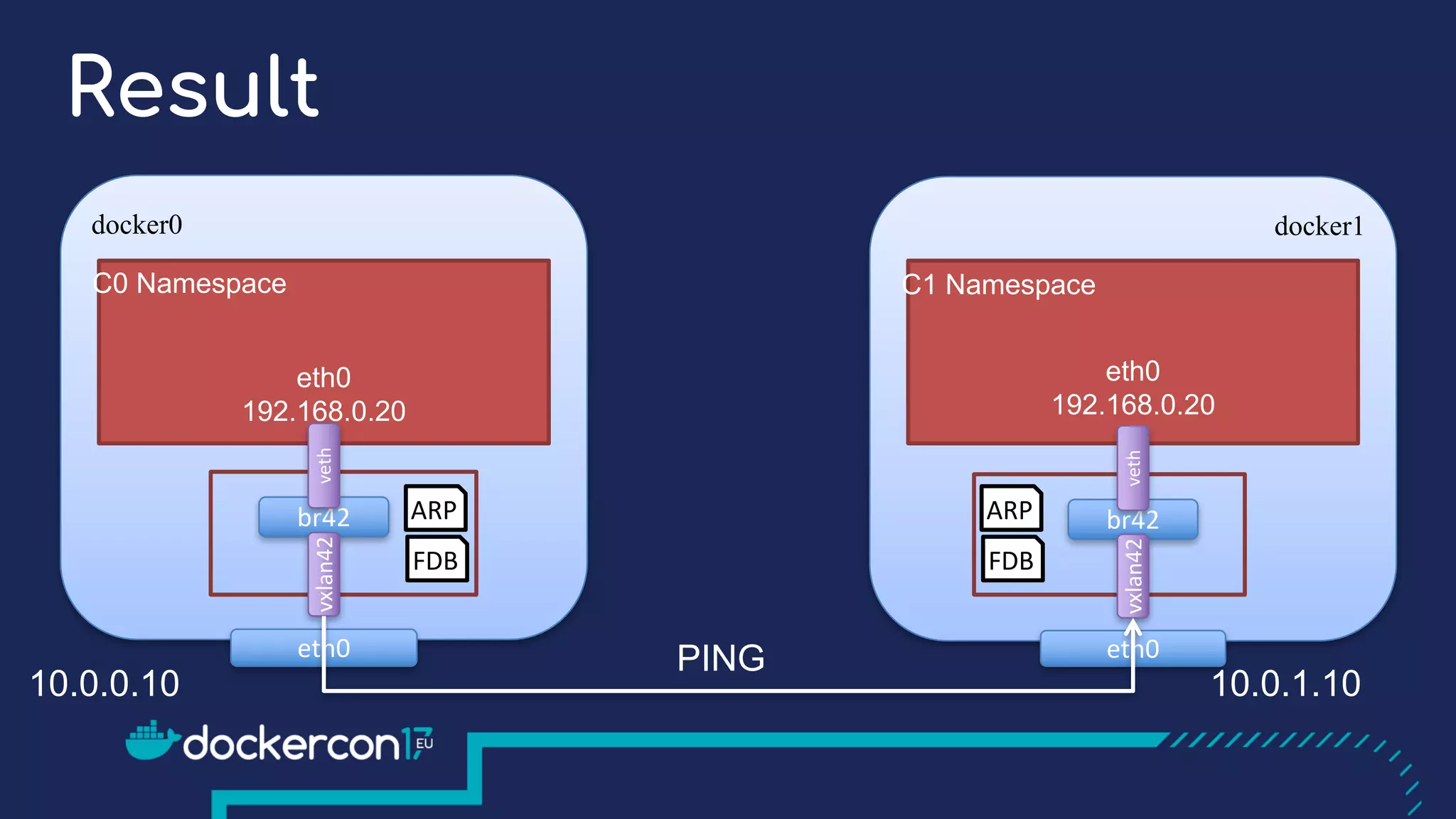

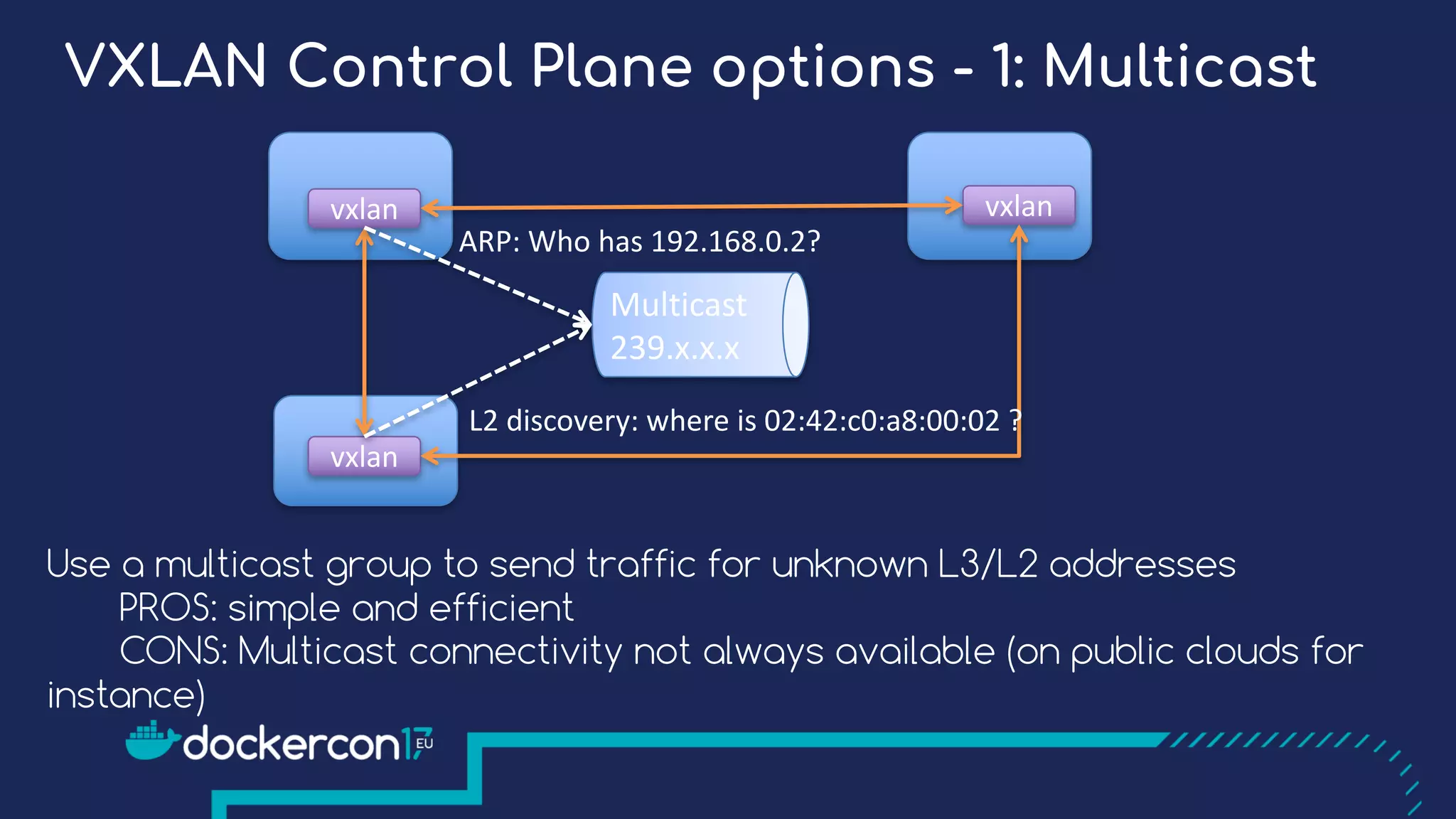

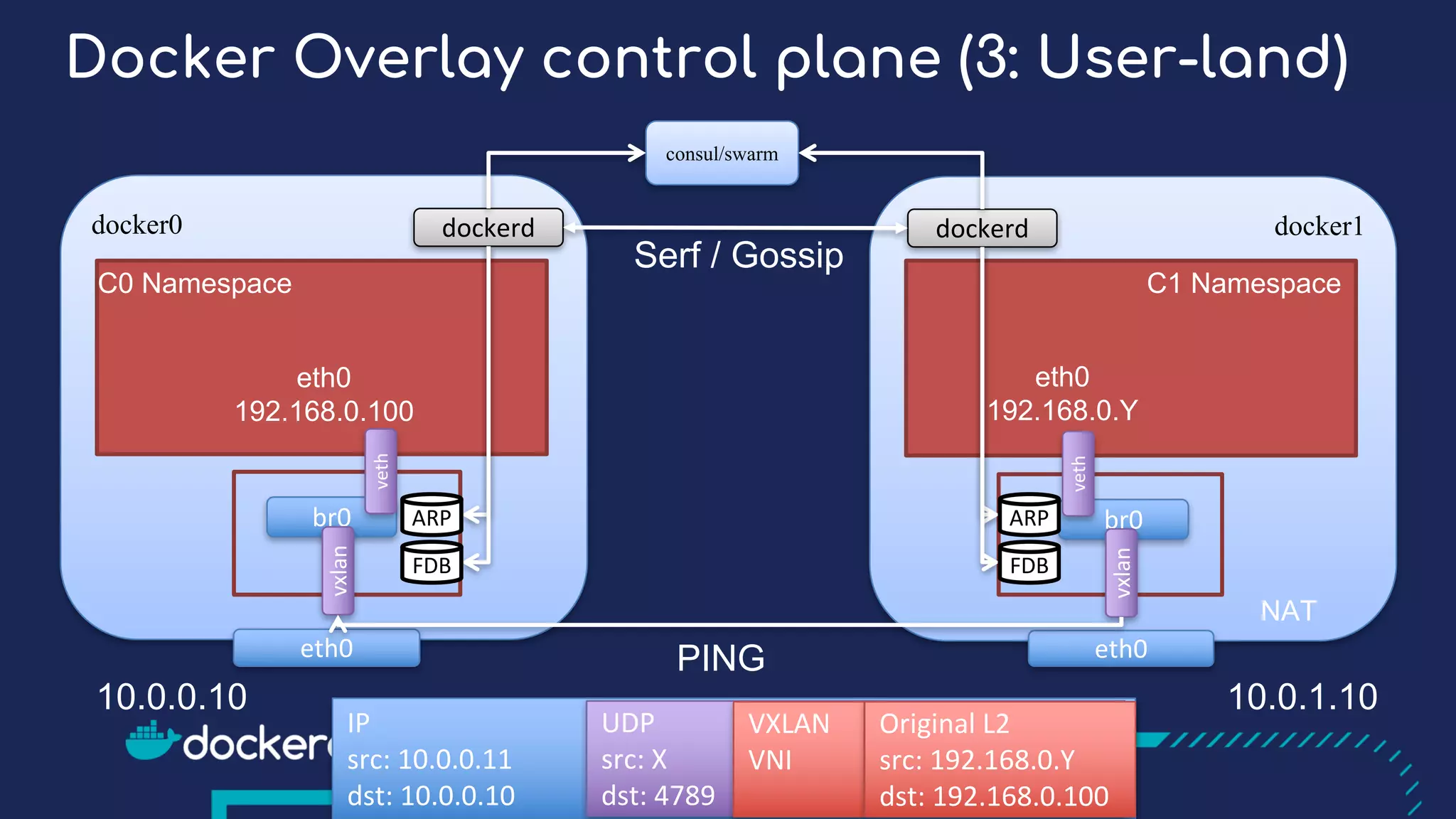

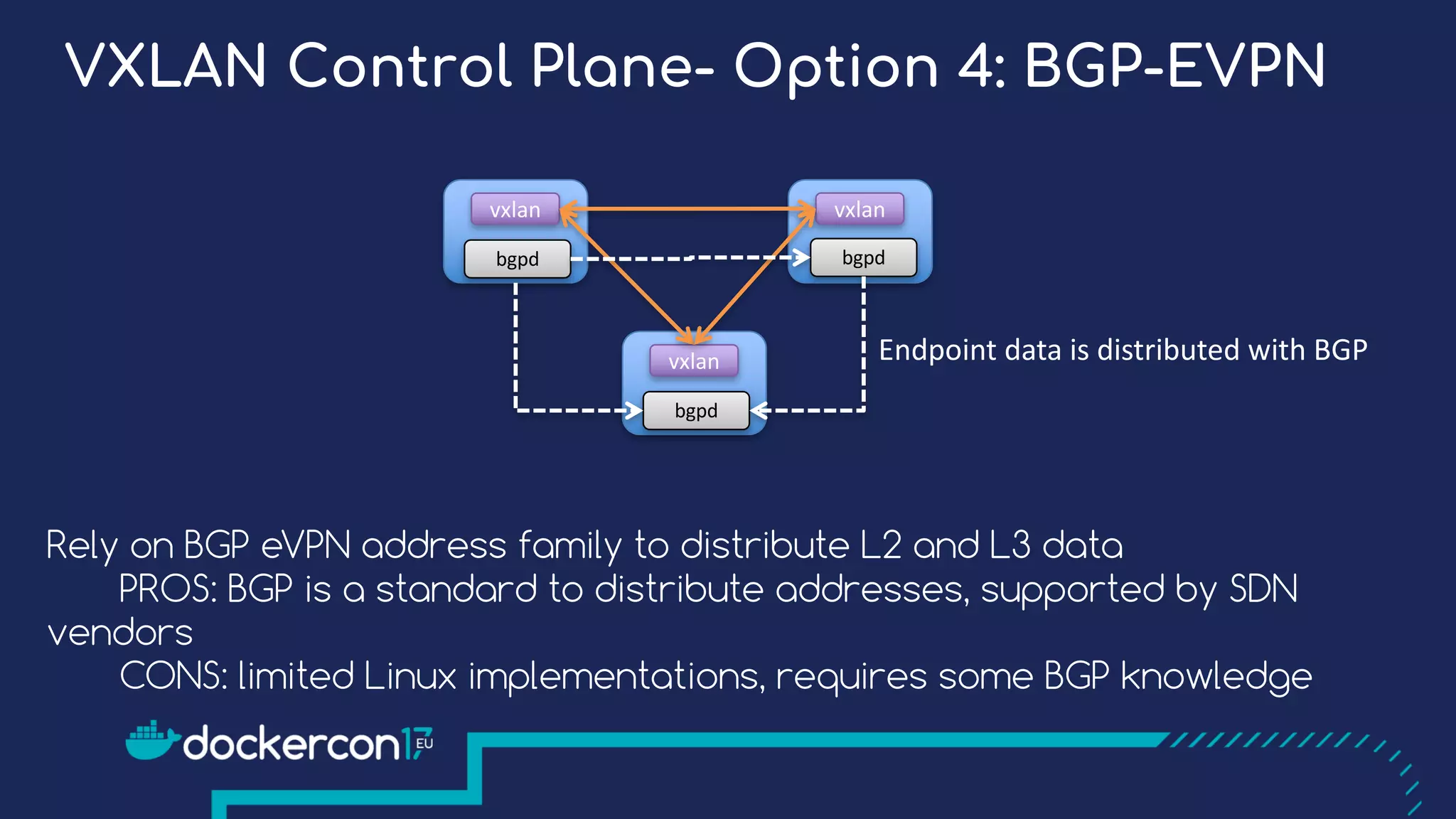

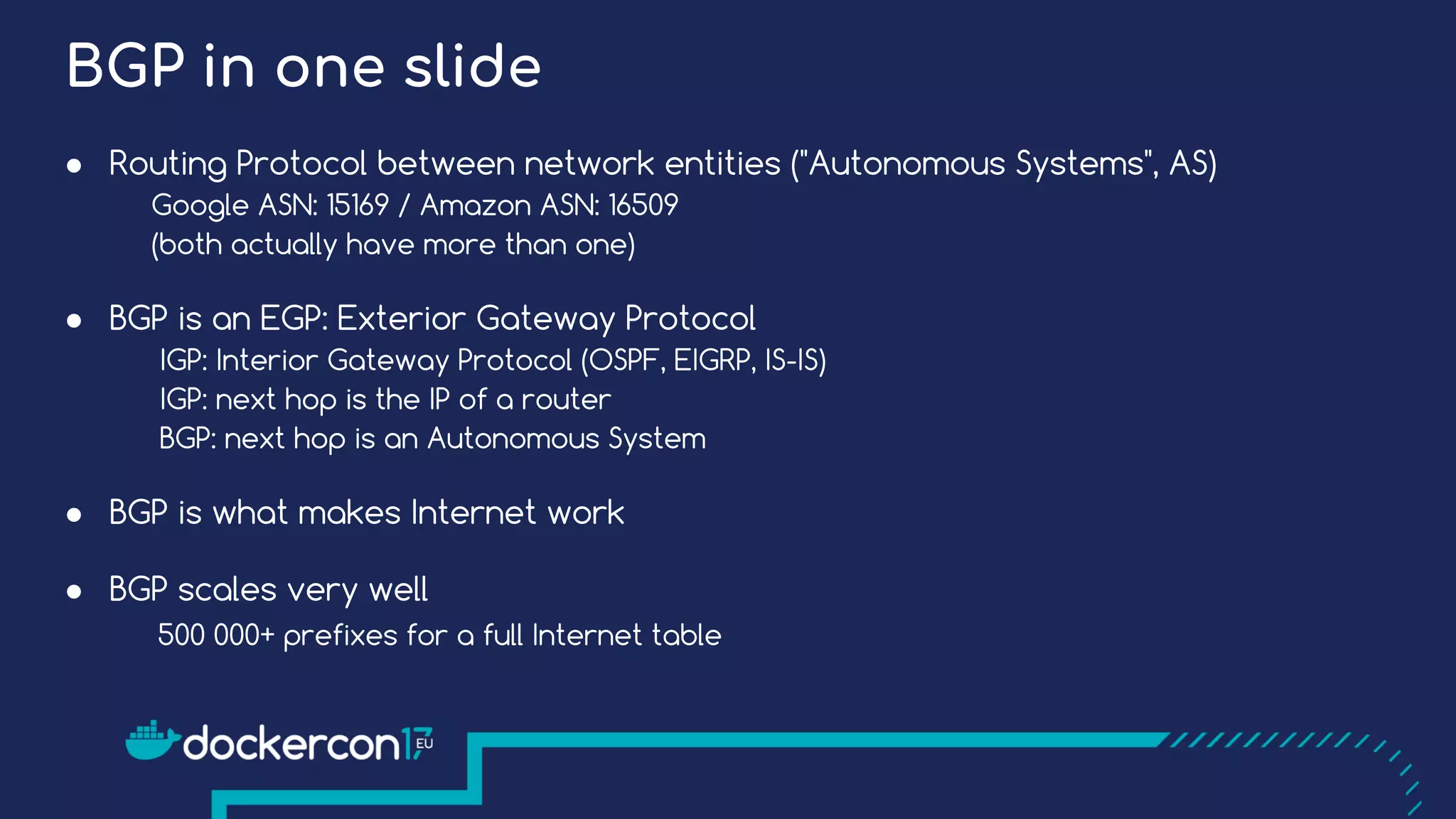

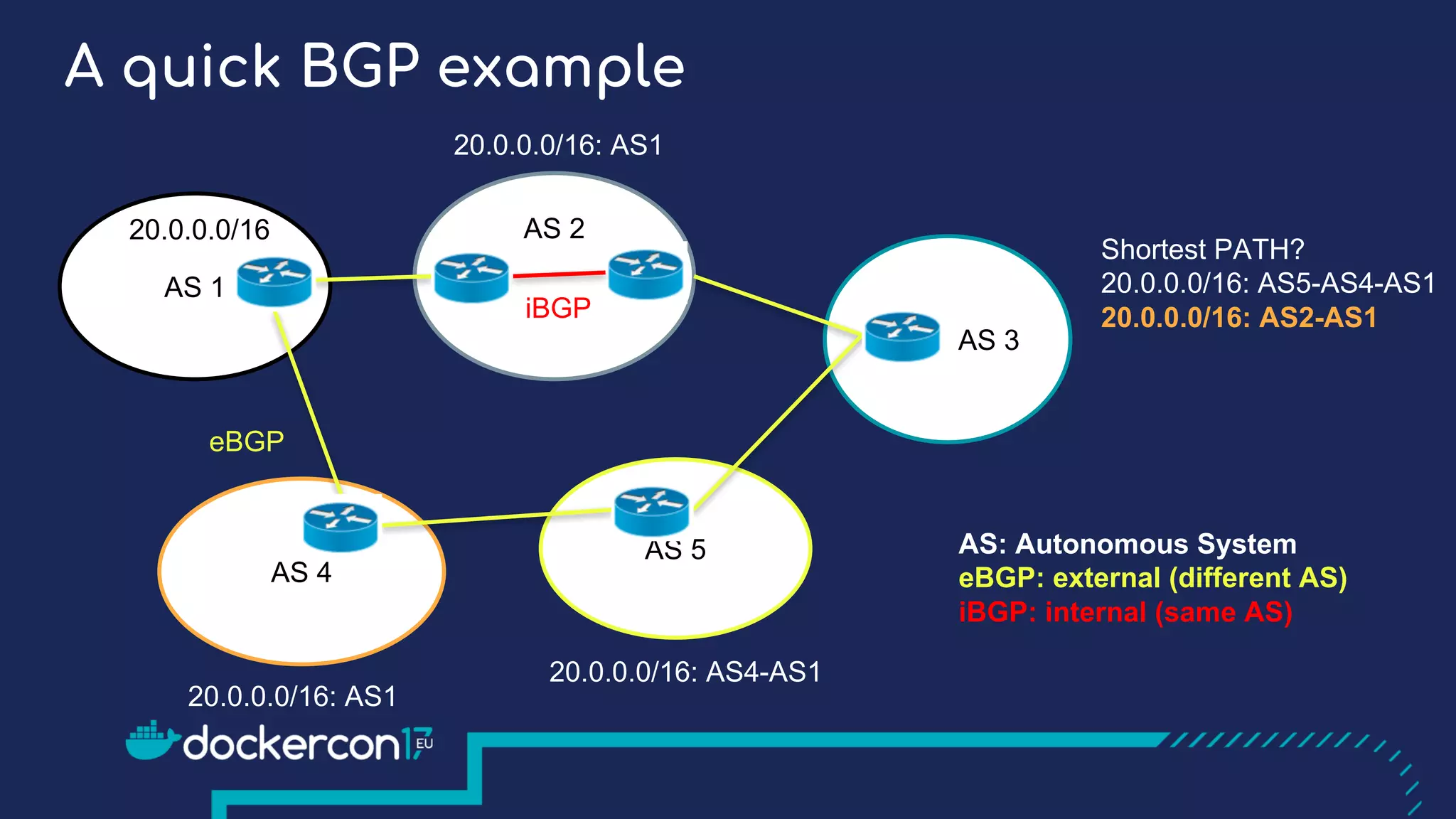

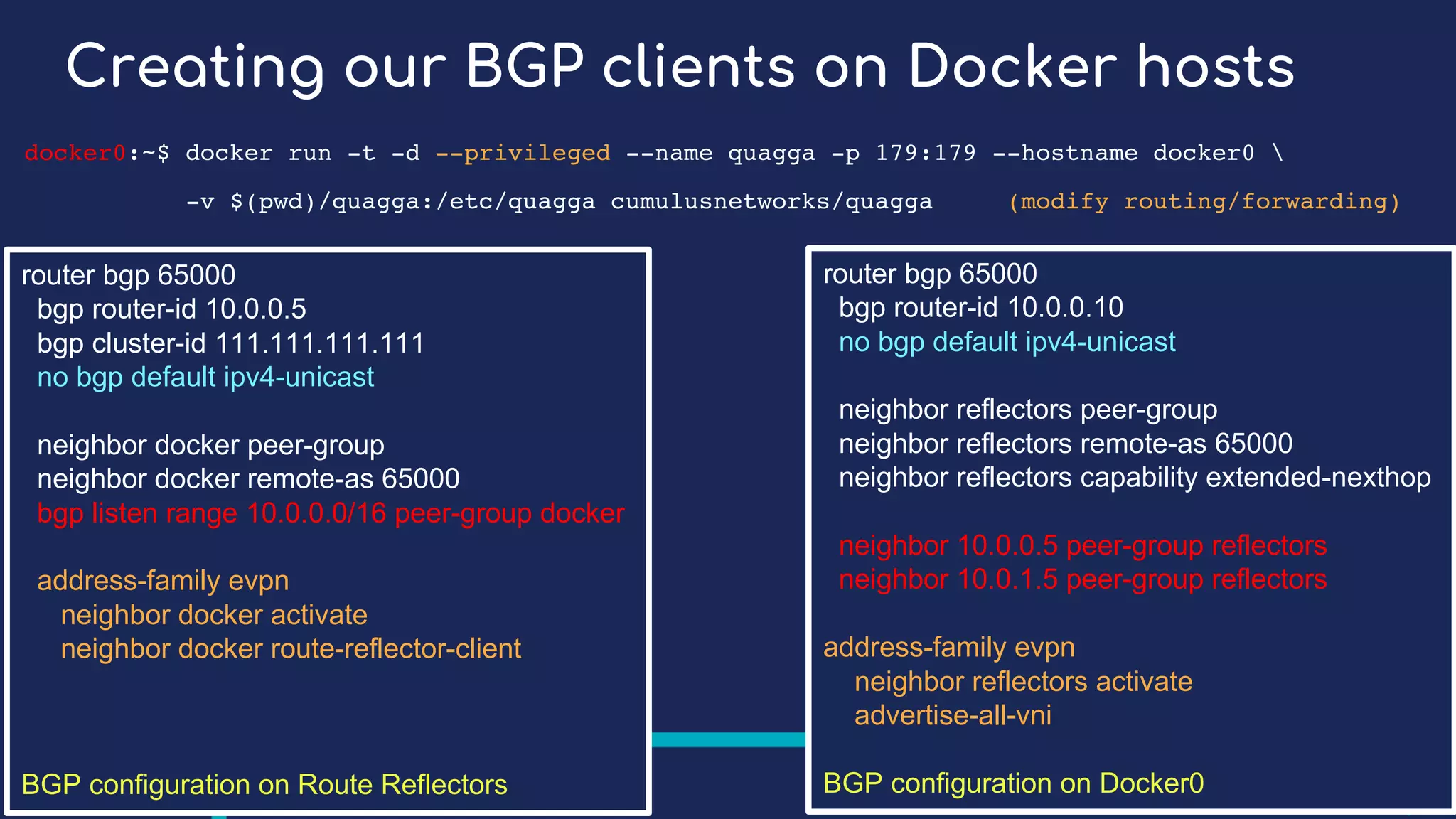

The document presents a detailed exploration of Docker overlay networks, focusing on the use of VXLAN as a tunneling technology for creating multi-tenant environments without the limitations of traditional VLANs. It discusses various VXLAN control plane options, including multicast, point-to-point configurations, and leveraging BGP for dynamic address distribution. Practical examples and scripts demonstrate setting up overlay namespaces, bridges, and containers, emphasizing the configuration of networking components within Docker environments.

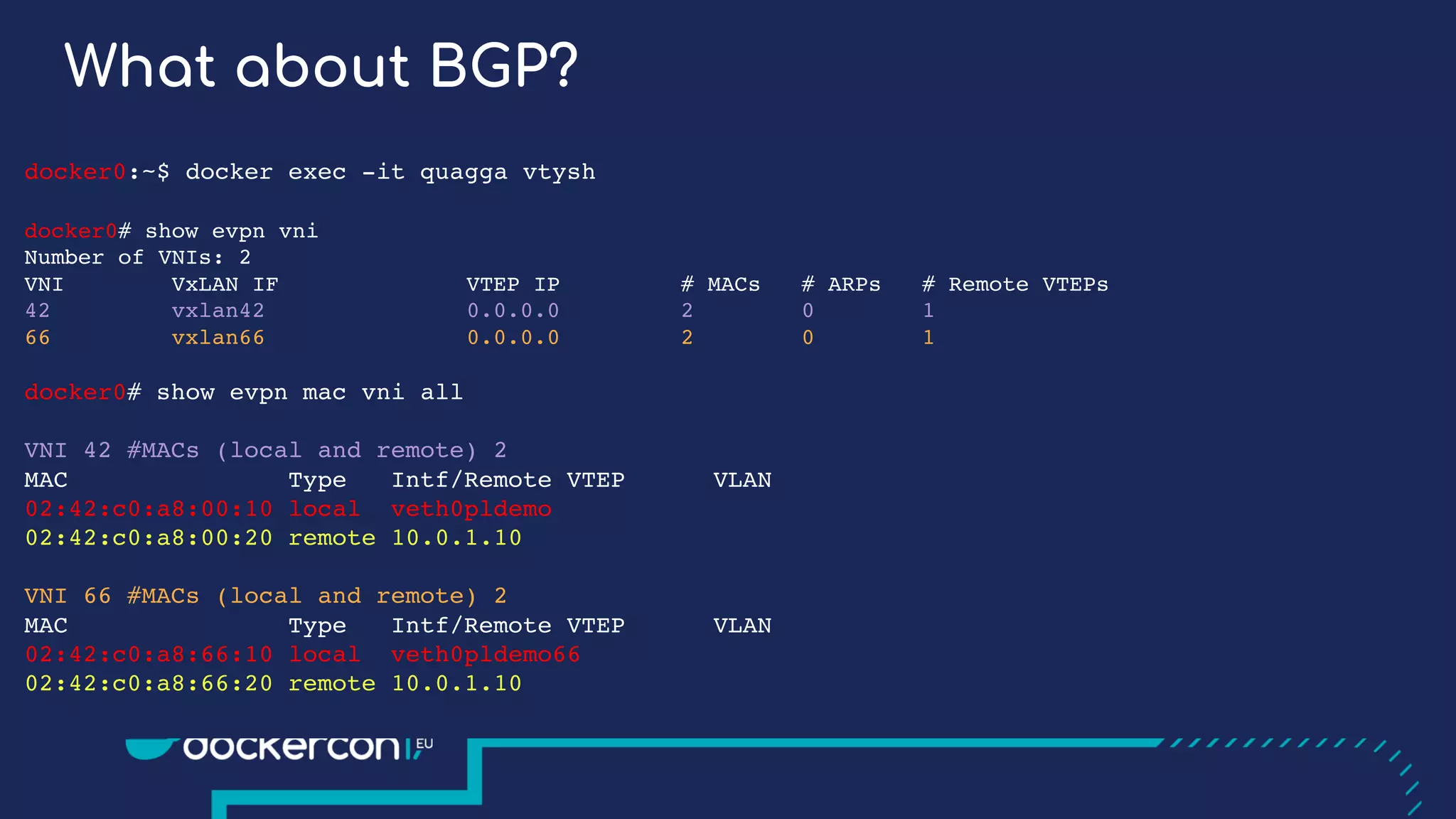

![Let's look at the BGP data

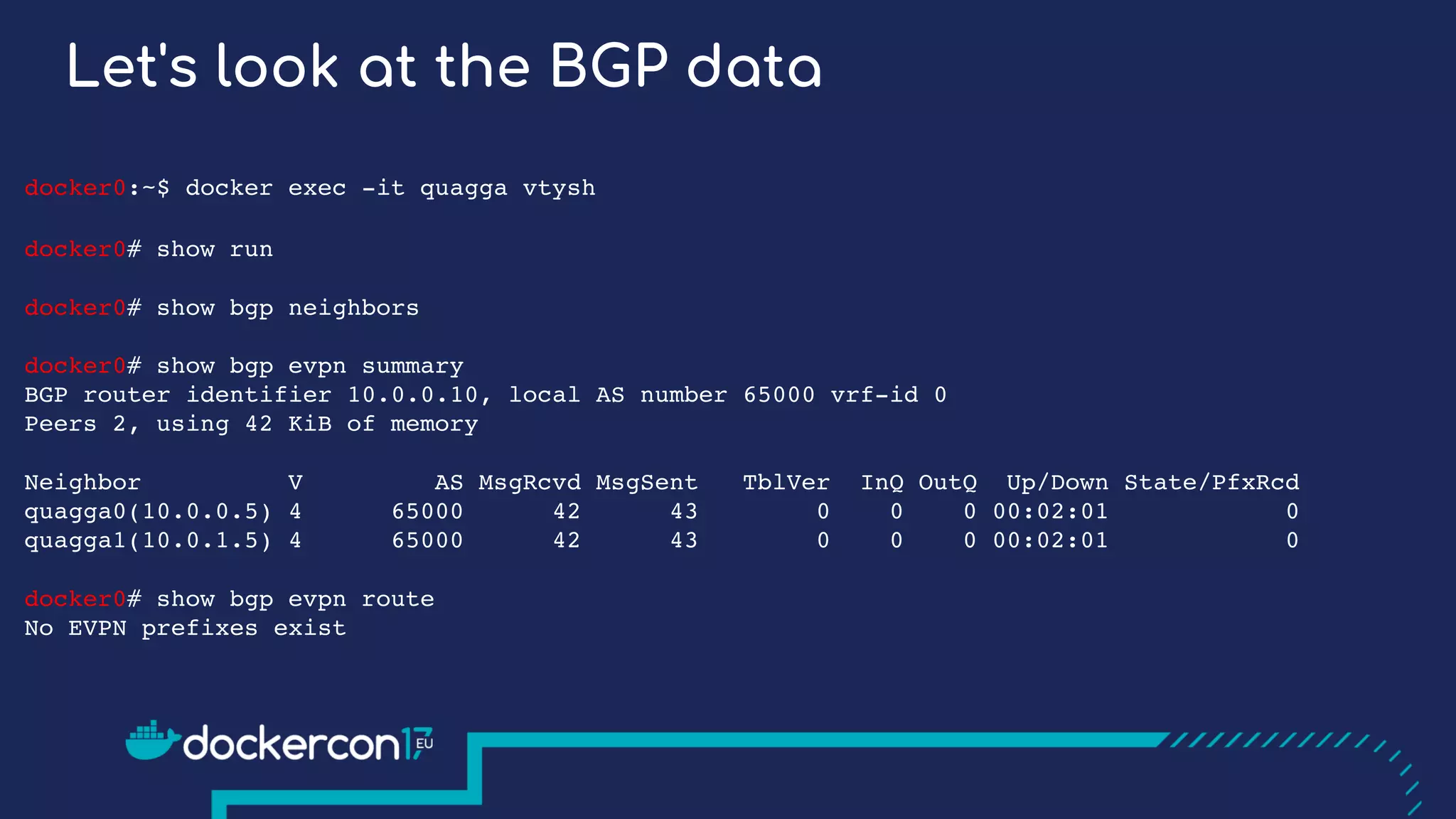

docker0:~$ docker exec -it quagga vtysh

docker0# show bgp evpn route

BGP table version is 0, local router ID is 10.0.0.10

EVPN type-2 prefix: [2]:[ESI]:[EthTag]:[MAClen]:[MAC]

EVPN type-3 prefix: [3]:[EthTag]:[IPlen]:[OrigIP]

Network Next Hop Metric LocPrf Weight Path

Route Distinguisher: 10.0.0.10:1

*> [3]:[0]:[32]:[10.0.0.10]

10.0.0.10 32768 i

Route Distinguisher: 10.0.1.10:1

*>i[3]:[0]:[32]:[10.0.1.10]

10.0.1.10 0 100 0 i

docker0# show evpn mac vni all](https://image.slidesharecdn.com/deeperdiveindockeroverlaynetworks-171020102717/75/Deeper-Dive-in-Docker-Overlay-Networks-31-2048.jpg)

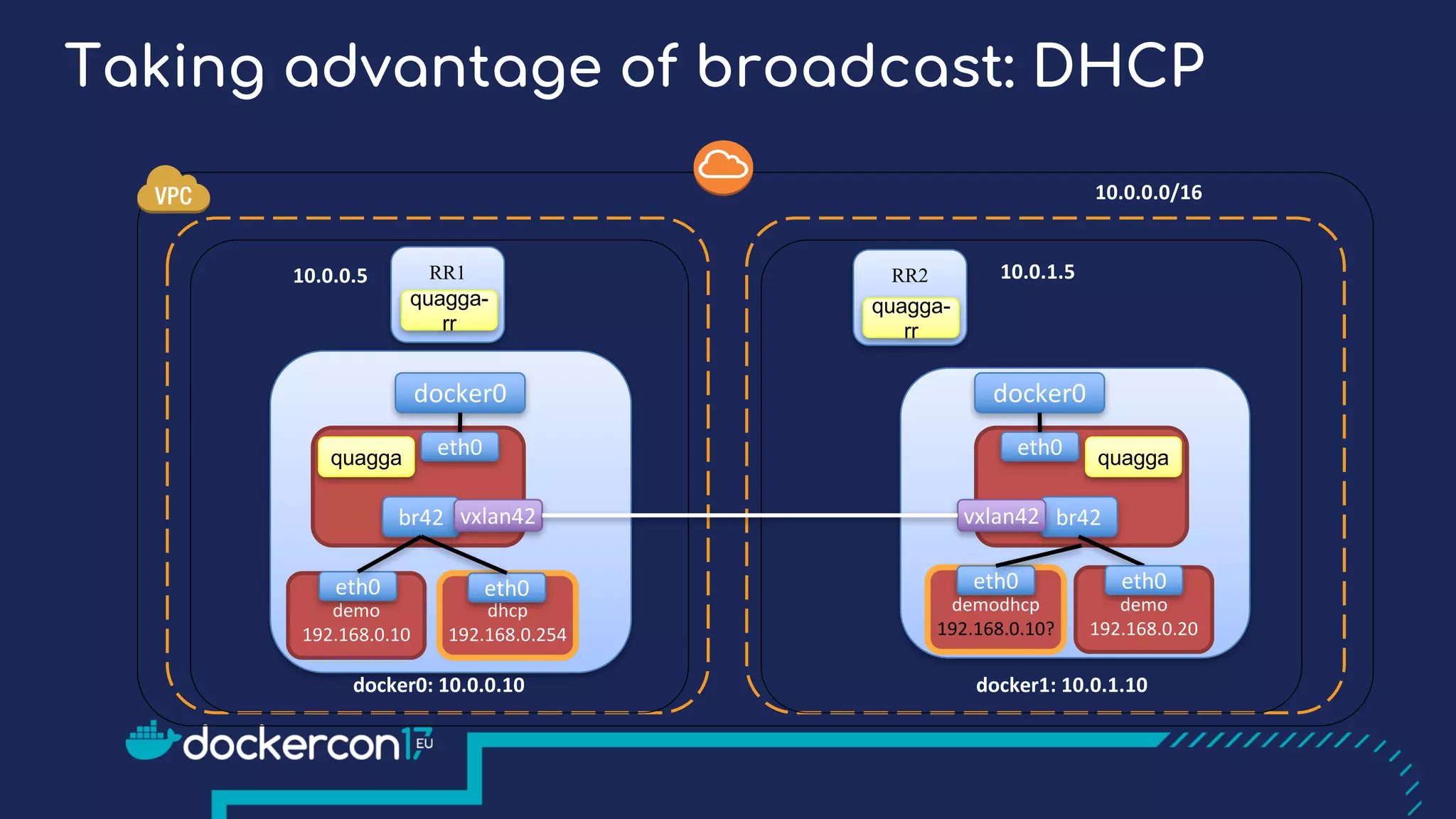

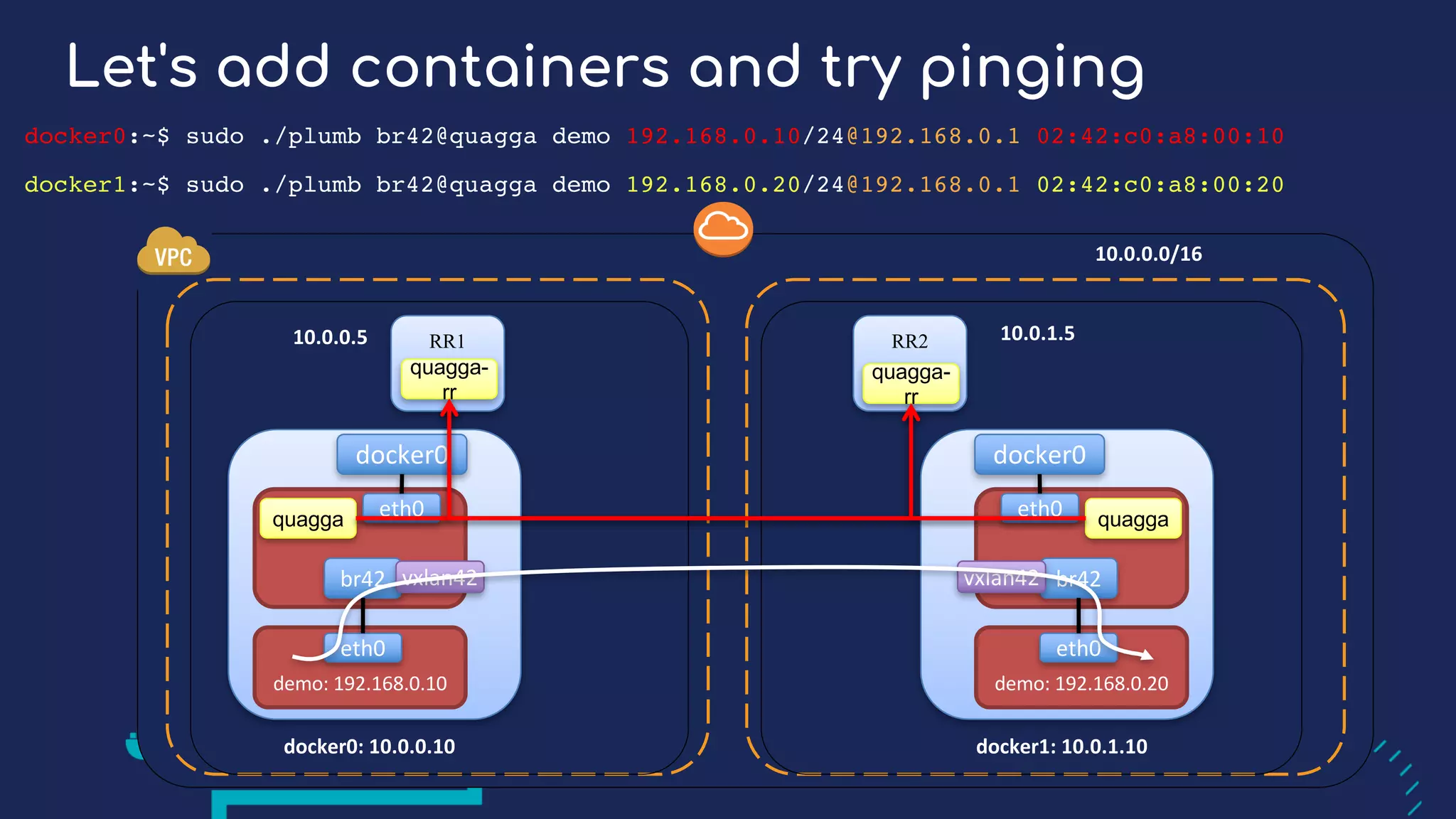

![What about BGP?](https://image.slidesharecdn.com/deeperdiveindockeroverlaynetworks-171020102717/75/Deeper-Dive-in-Docker-Overlay-Networks-33-2048.jpg)

    docker0:~$ docker exec -it quagga vtysh
    docker0# show bgp evpn route
    BGP table version is 0, local router ID is 10.0.0.10
    Status codes: s suppressed, d damped, h history, * valid, > best, i - internal
    Origin codes: i - IGP, e - EGP, ? - incomplete
    EVPN type-2 prefix: [2]:[ESI]:[EthTag]:[MAClen]:[MAC]
    EVPN type-3 prefix: [3]:[EthTag]:[IPlen]:[OrigIP]
    Route Distinguisher: 10.0.1.10:1
    *>i[2]:[0]:[0]:[48]:[02:42:c0:a8:00:20]
                        10.0.1.10                0    100      0 i
    * i[3]:[0]:[32]:[10.0.1.10]
                        10.0.1.10                0    100      0 i
    docker0# show evpn mac vni all
    VNI 42 #MACs (local and remote) 2
    MAC               Type   Intf/Remote VTEP      VLAN
    02:42:c0:a8:00:10 local  veth0pldemo
    02:42:c0:a8:00:20 remote 10.0.1.10
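
The route keys in the `show bgp evpn route` output above follow the two formats printed in its legend: `[2]:[ESI]:[EthTag]:[MAClen]:[MAC]` for type-2 (MAC) routes and `[3]:[EthTag]:[IPlen]:[OrigIP]` for type-3 (VTEP) routes. As a rough illustration of how to read them, here is a minimal Python sketch that splits such a key into named fields. The function name `parse_evpn_prefix` and the dictionary keys are hypothetical, chosen for this example only. They are not part of Quagga or Docker.

```python
import re

# Field names mirror the legend printed by `show bgp evpn route`.
# The lowercase key names themselves are an assumption made for this sketch.
TYPE2_FIELDS = ("esi", "eth_tag", "mac_len", "mac")
TYPE3_FIELDS = ("eth_tag", "ip_len", "orig_ip")


def parse_evpn_prefix(prefix):
    """Split an EVPN route key such as '[3]:[0]:[32]:[10.0.1.10]' into named fields."""
    # MAC addresses contain ':' themselves, so extract the bracketed fields
    # instead of splitting the string on ':'.
    fields = re.findall(r"\[([^\]]*)\]", prefix)
    if not fields:
        raise ValueError("no bracketed fields in %r" % prefix)

    route_type, values = fields[0], fields[1:]
    if route_type == "2":
        names = TYPE2_FIELDS
    elif route_type == "3":
        names = TYPE3_FIELDS
    else:
        raise ValueError("unsupported EVPN route type %r" % route_type)

    if len(values) != len(names):
        raise ValueError("expected %d fields, got %d" % (len(names), len(values)))

    parsed = {"route_type": int(route_type)}
    parsed.update(zip(names, values))
    return parsed


if __name__ == "__main__":
    # The two routes learned from the remote host in the slide.
    print(parse_evpn_prefix("[2]:[0]:[0]:[48]:[02:42:c0:a8:00:20]"))
    print(parse_evpn_prefix("[3]:[0]:[32]:[10.0.1.10]"))
```

Read this way, the type-2 route carries the MAC `02:42:c0:a8:00:20`, the same address that `show evpn mac vni all` lists as remote behind VTEP 10.0.1.10. The type-3 route only announces the VTEP address 10.0.1.10 itself.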