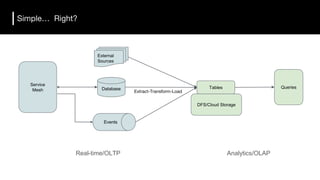

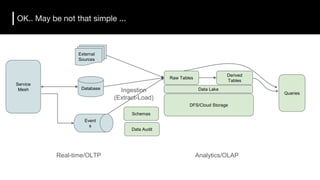

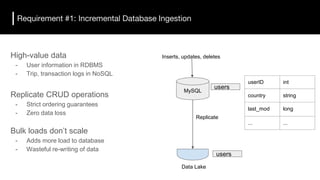

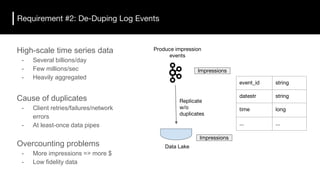

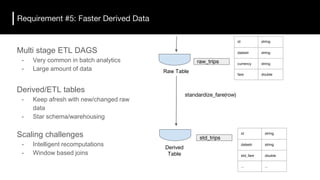

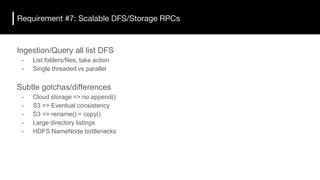

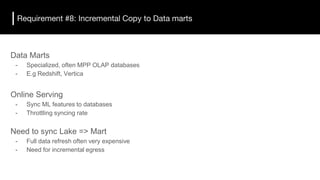

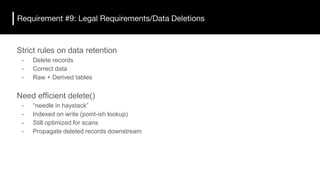

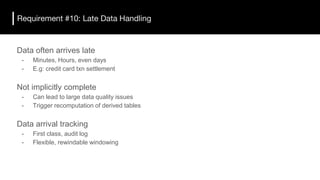

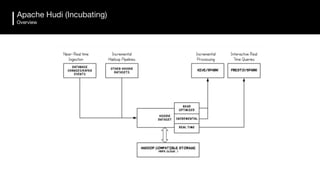

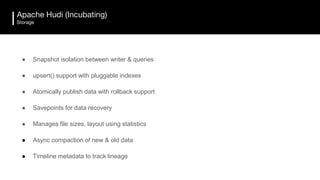

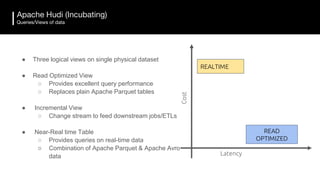

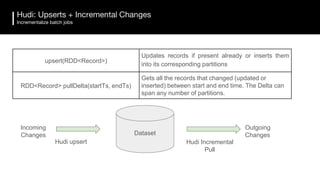

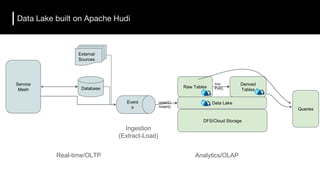

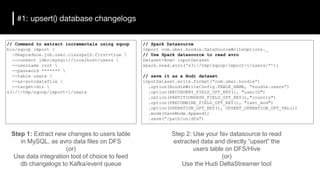

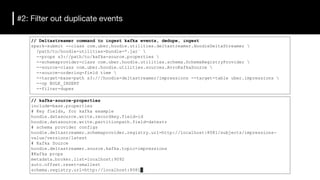

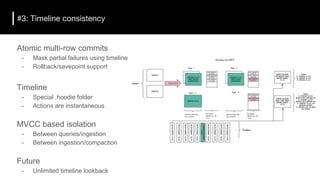

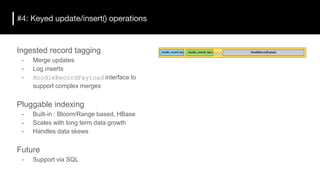

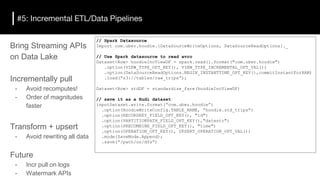

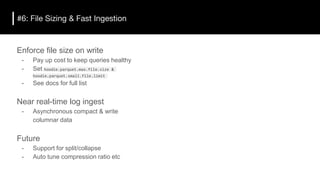

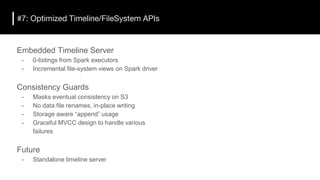

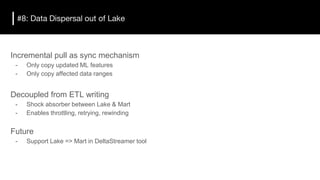

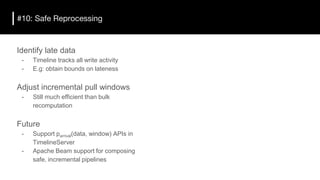

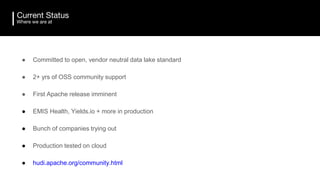

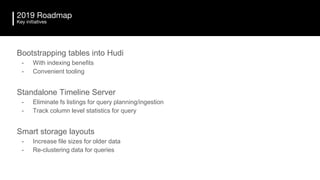

The document discusses the challenges and requirements for building efficient data lakes using Apache Hudi, focusing on issues like incremental data ingestion, deduplication, transactional consistency, file management, and legal requirements for data retention. It provides insights into the architecture, functionality, and benefits of Hudi, including support for snapshot isolation, upserts, and optimized querying. Additionally, it outlines the roadmap for Apache Hudi's development and its current status in open-source community support.