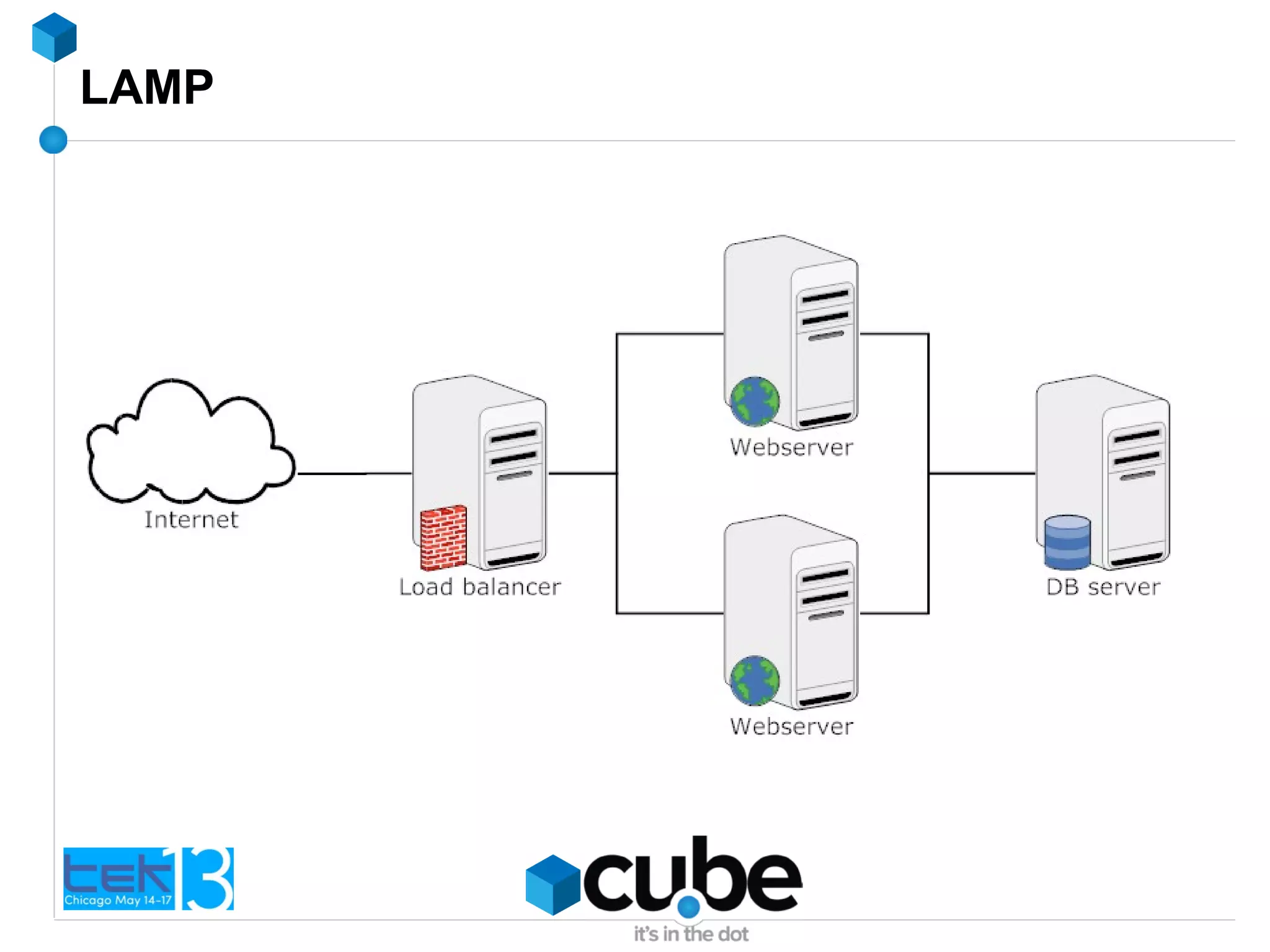

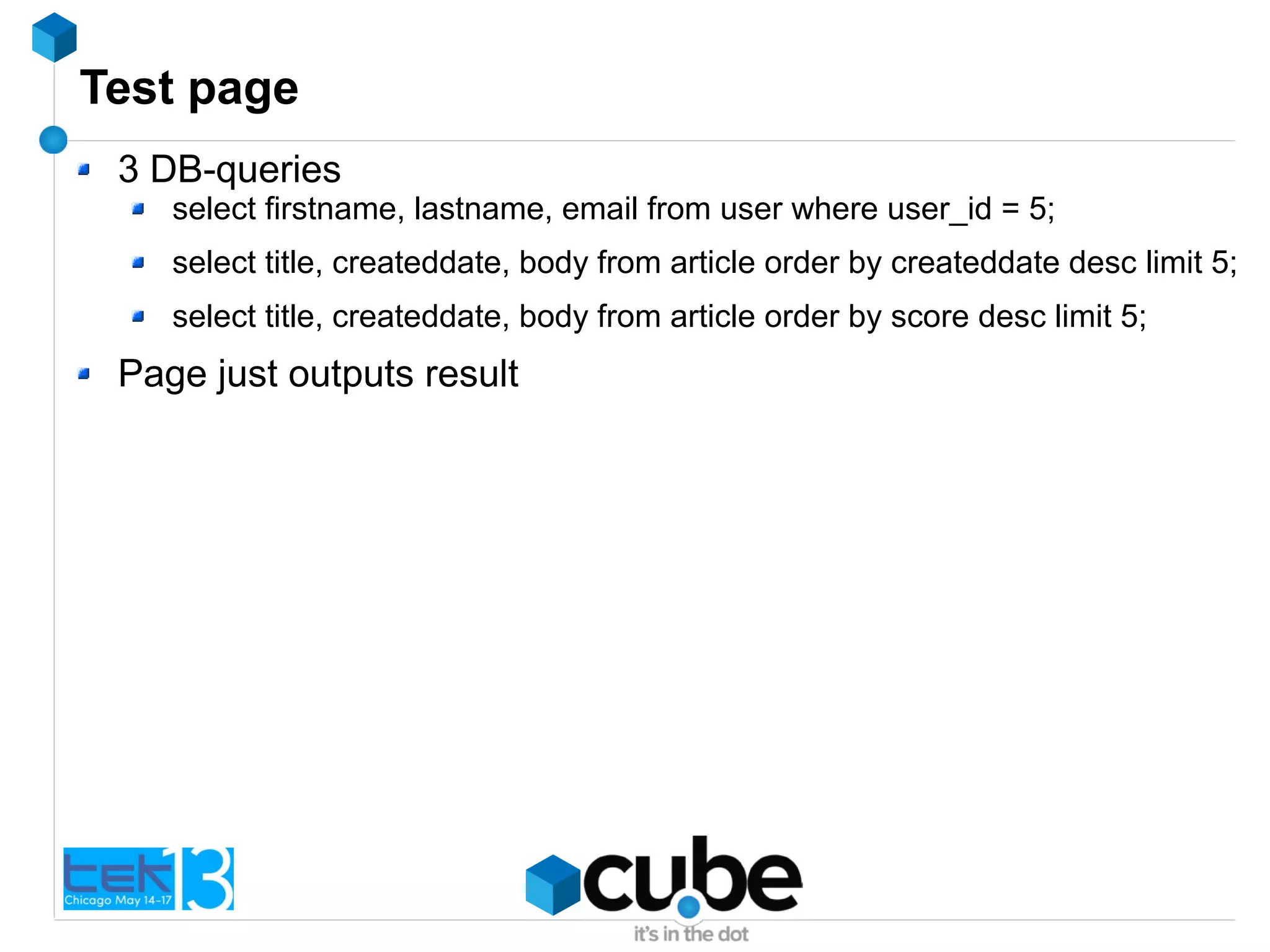

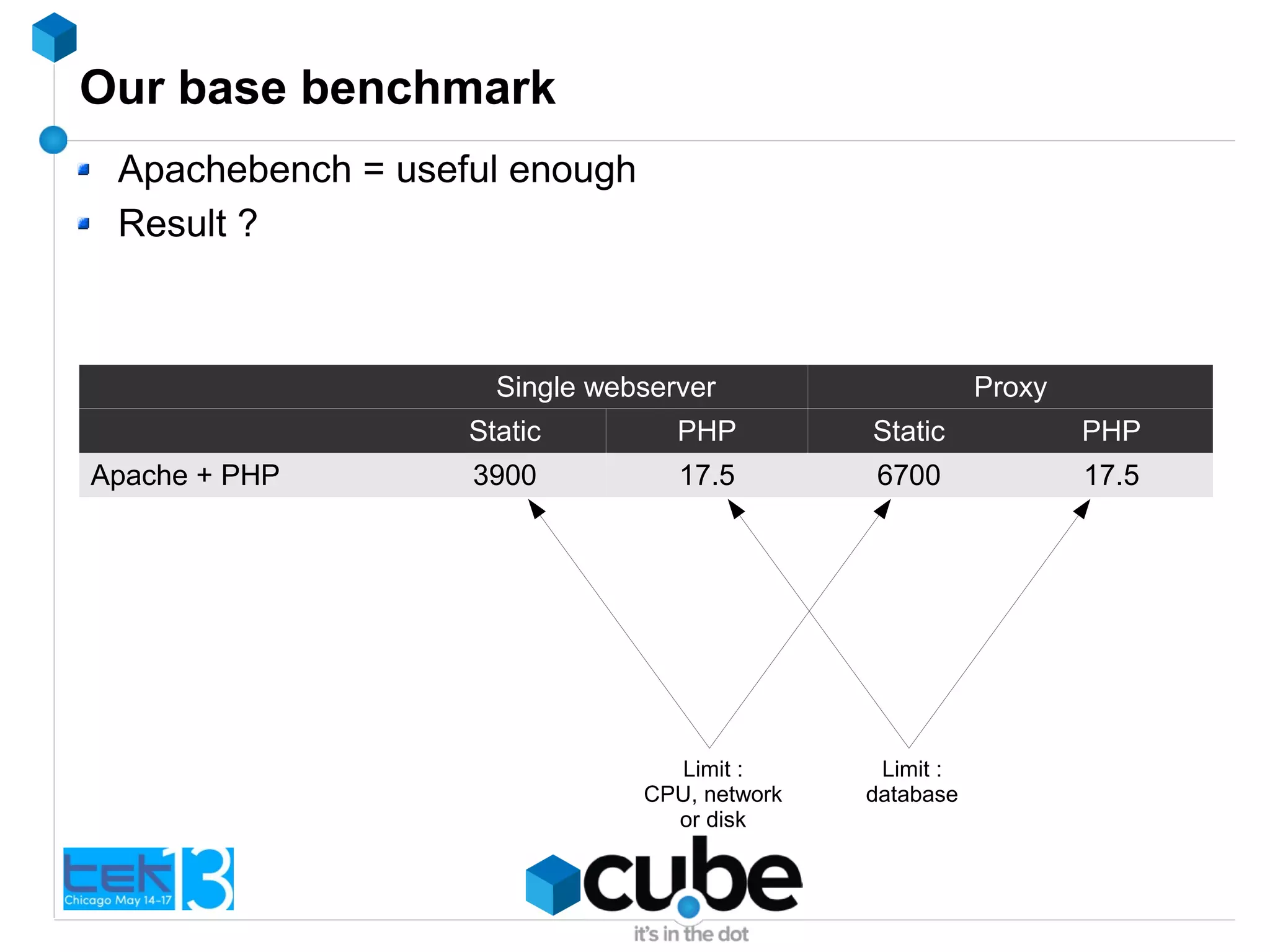

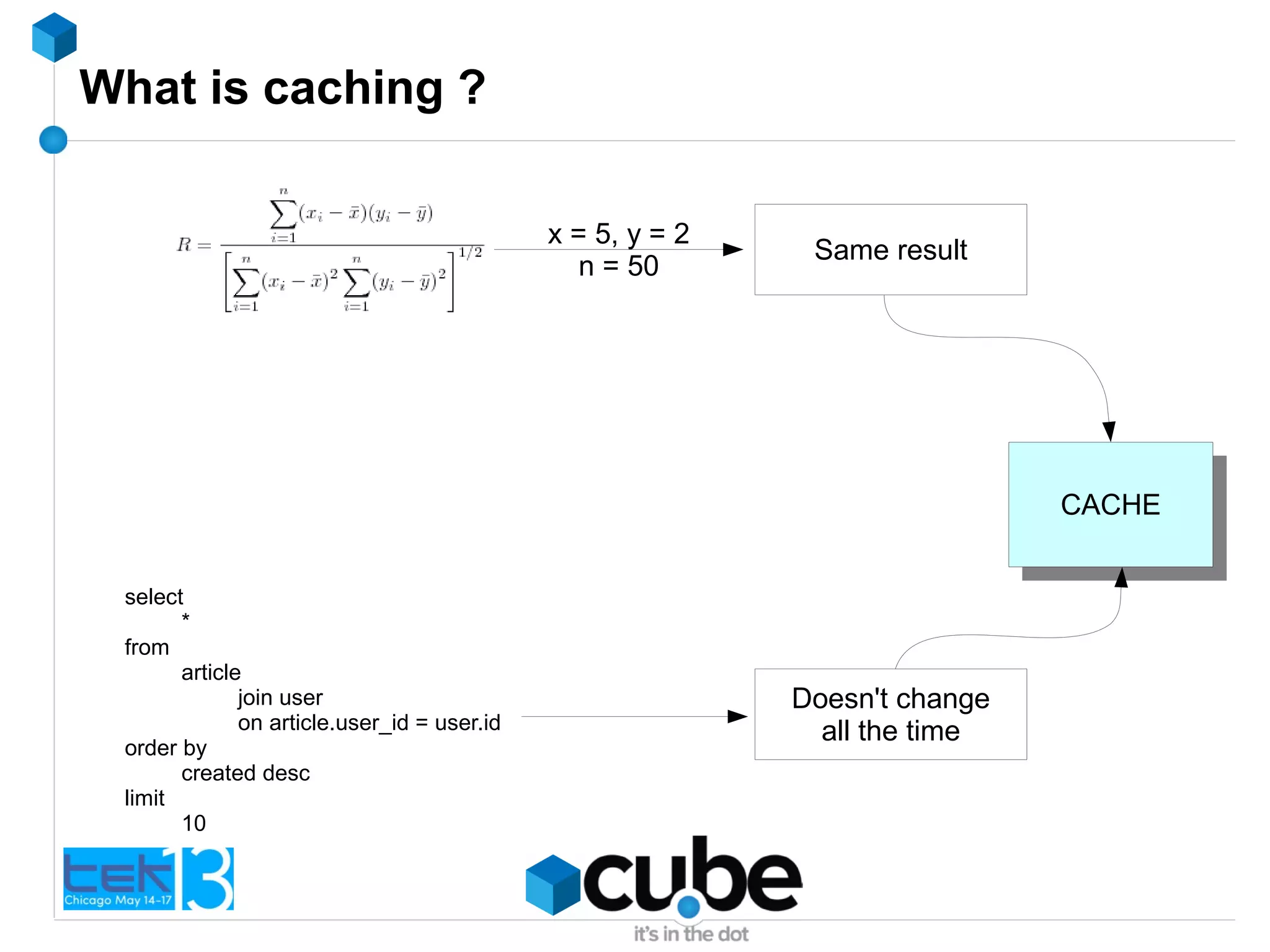

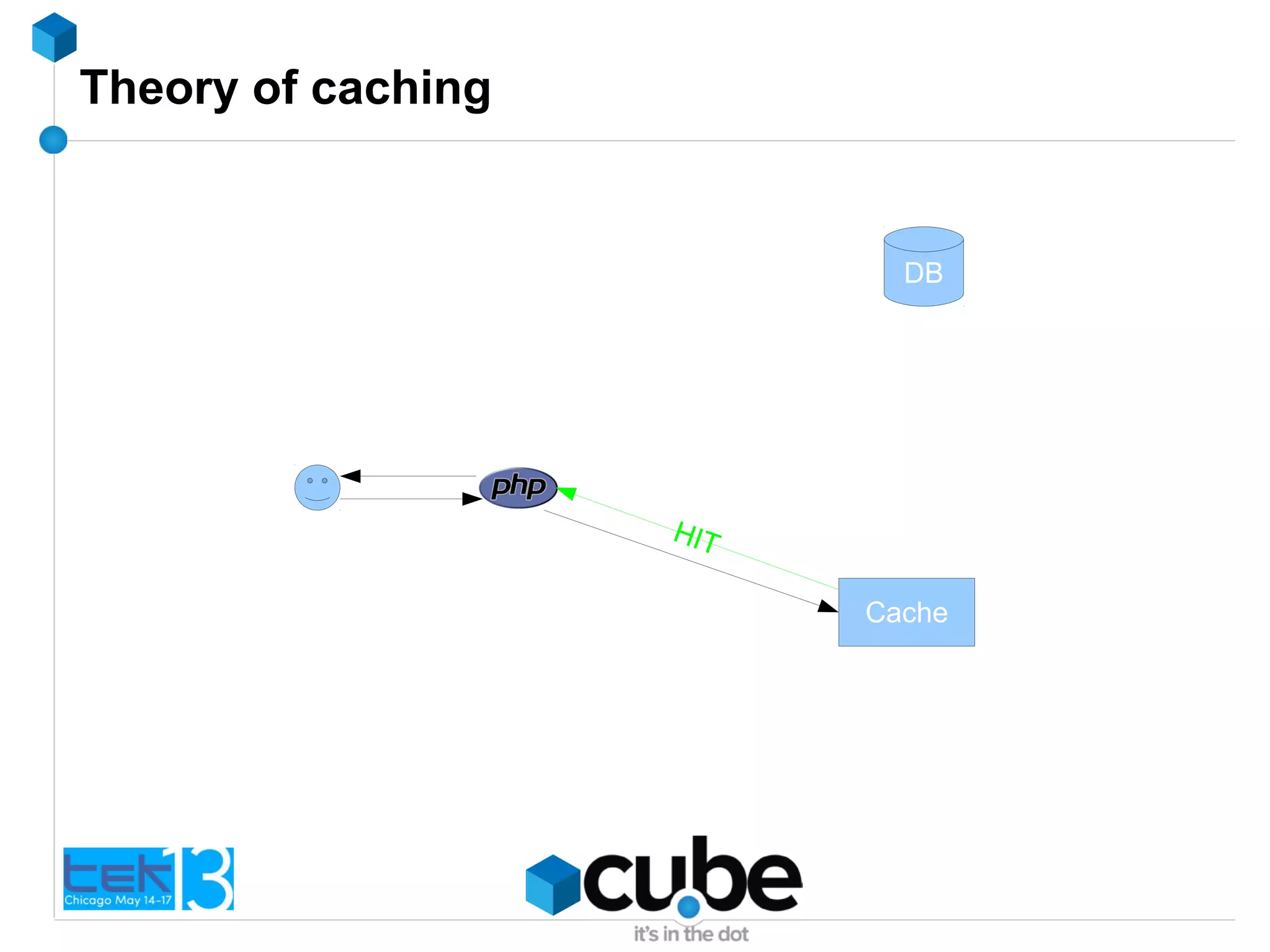

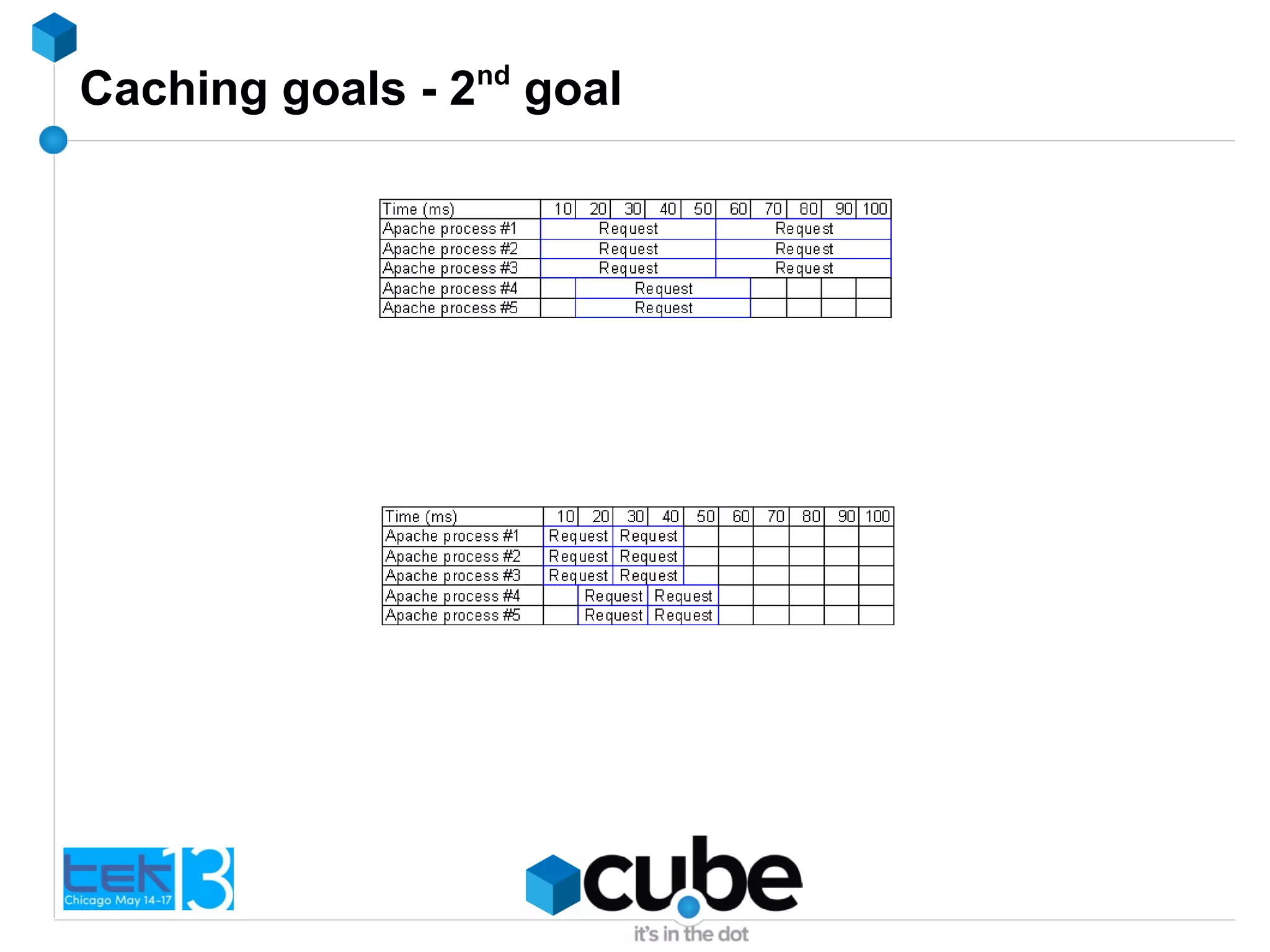

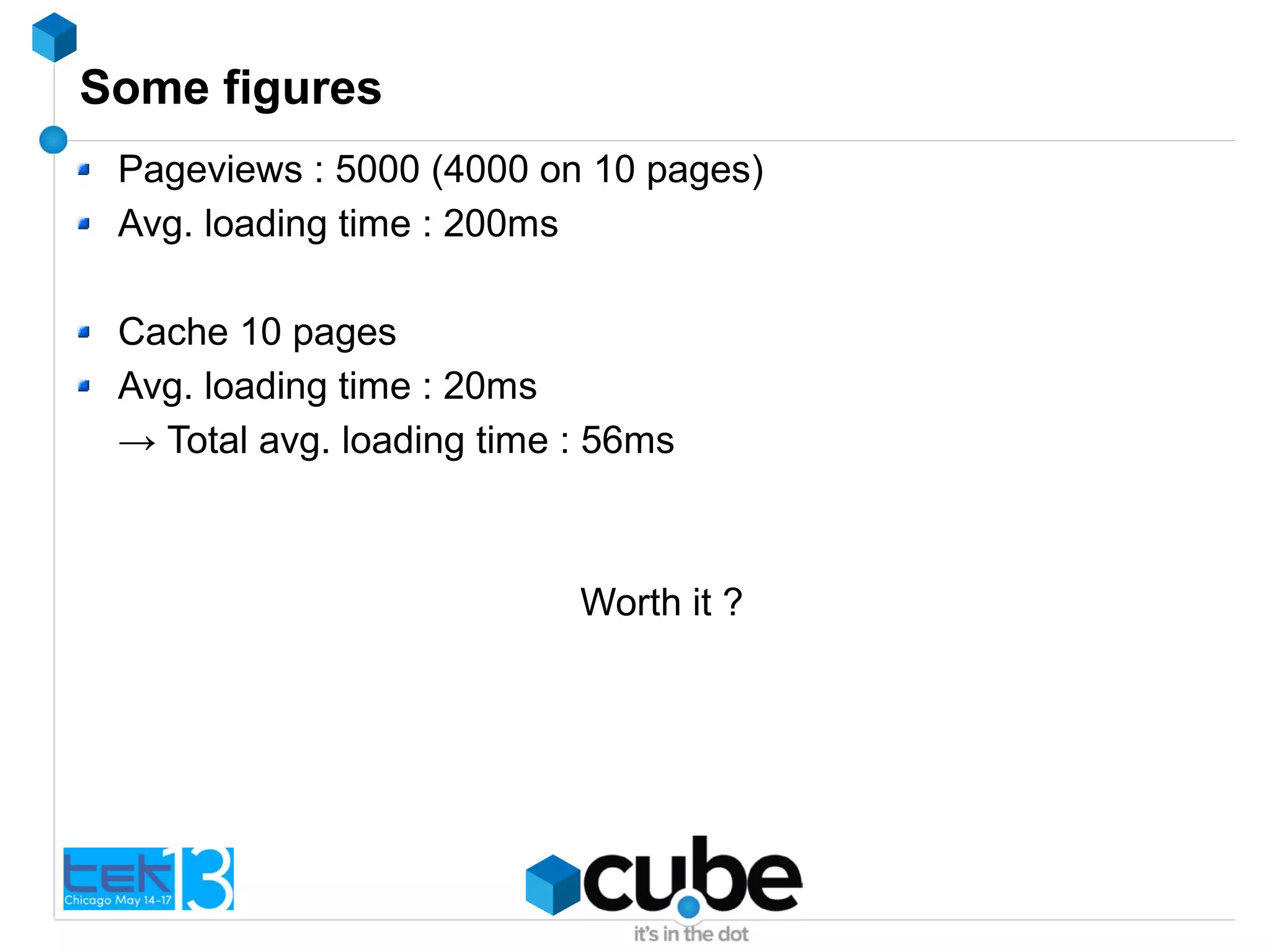

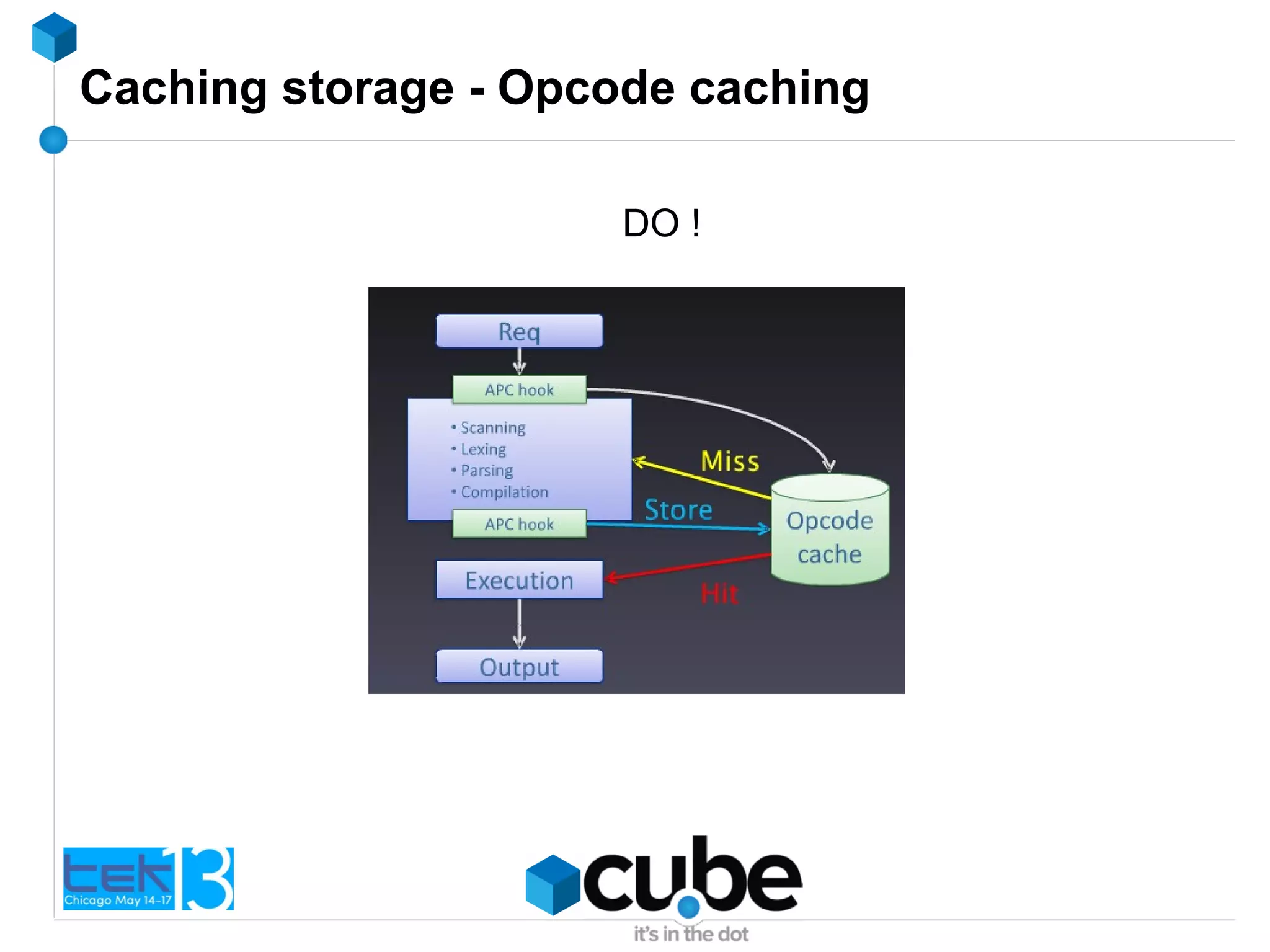

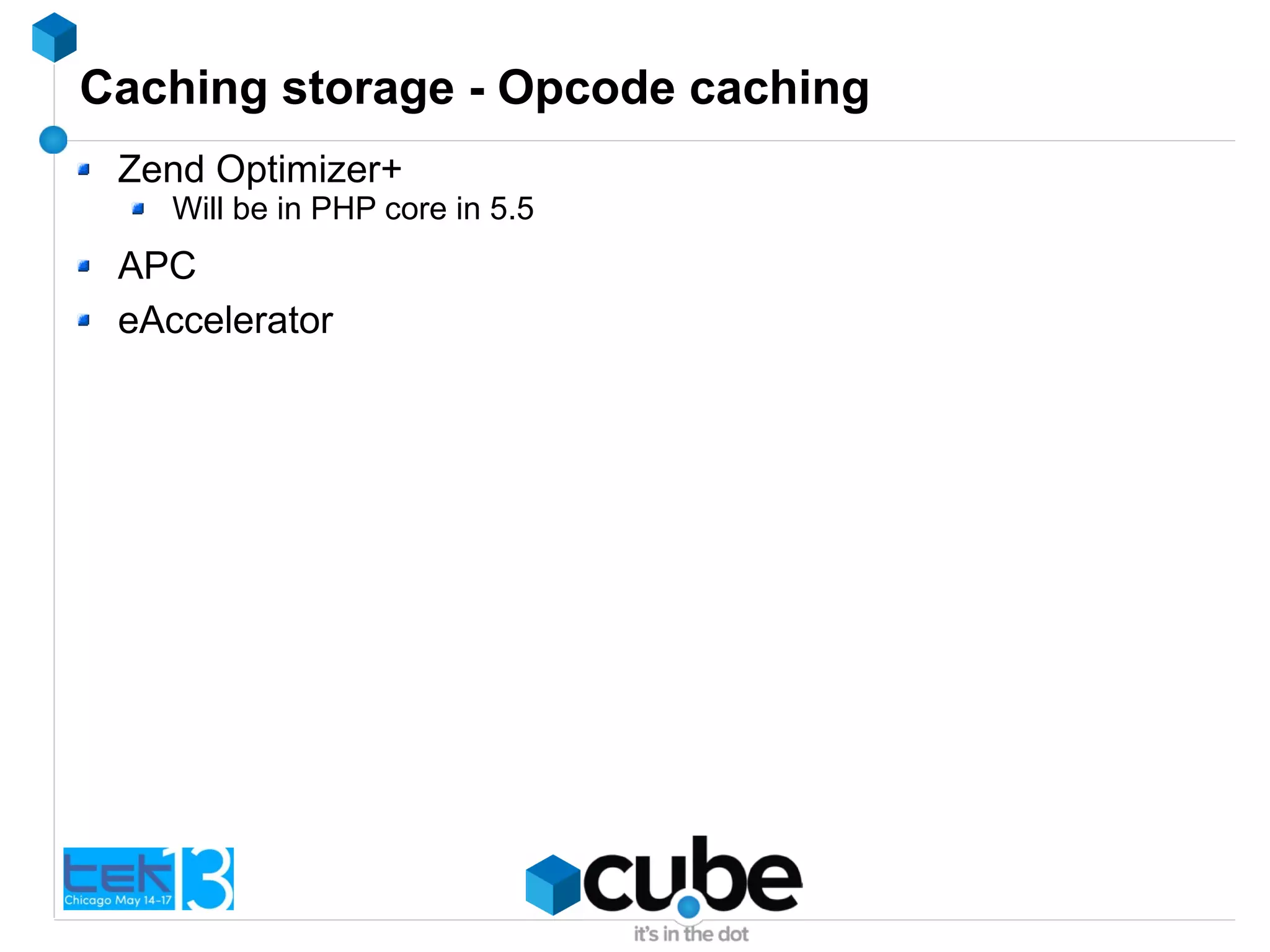

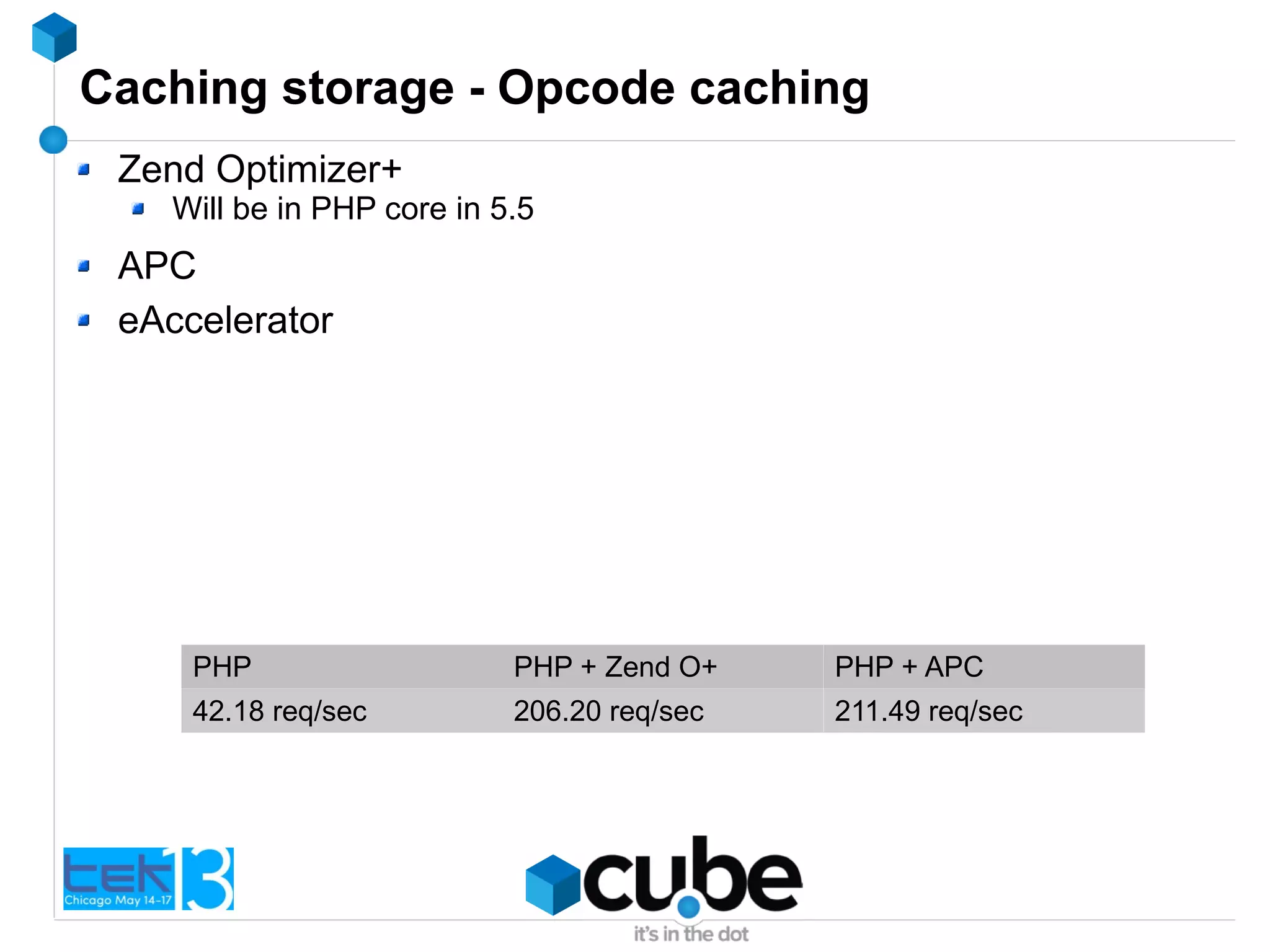

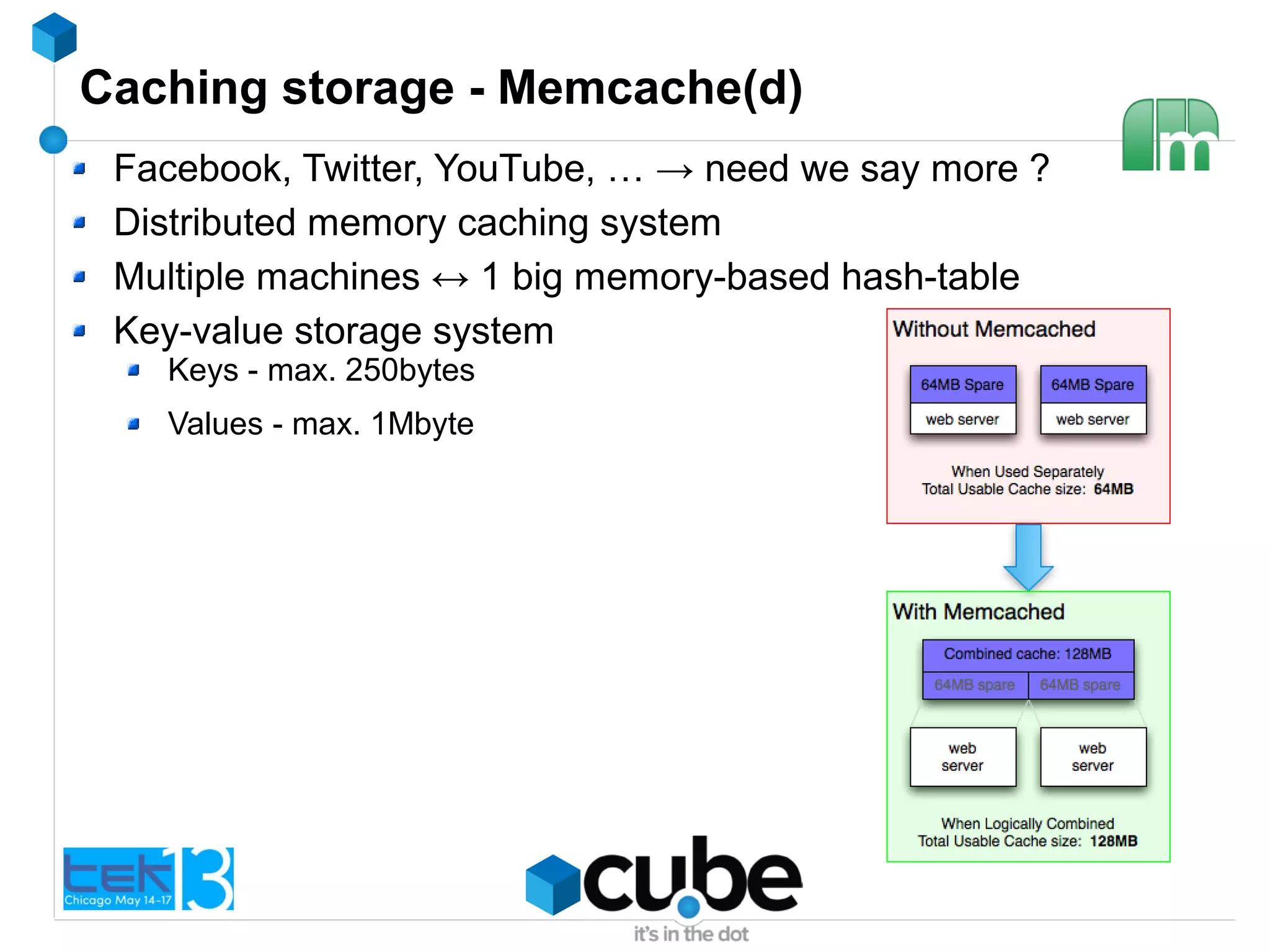

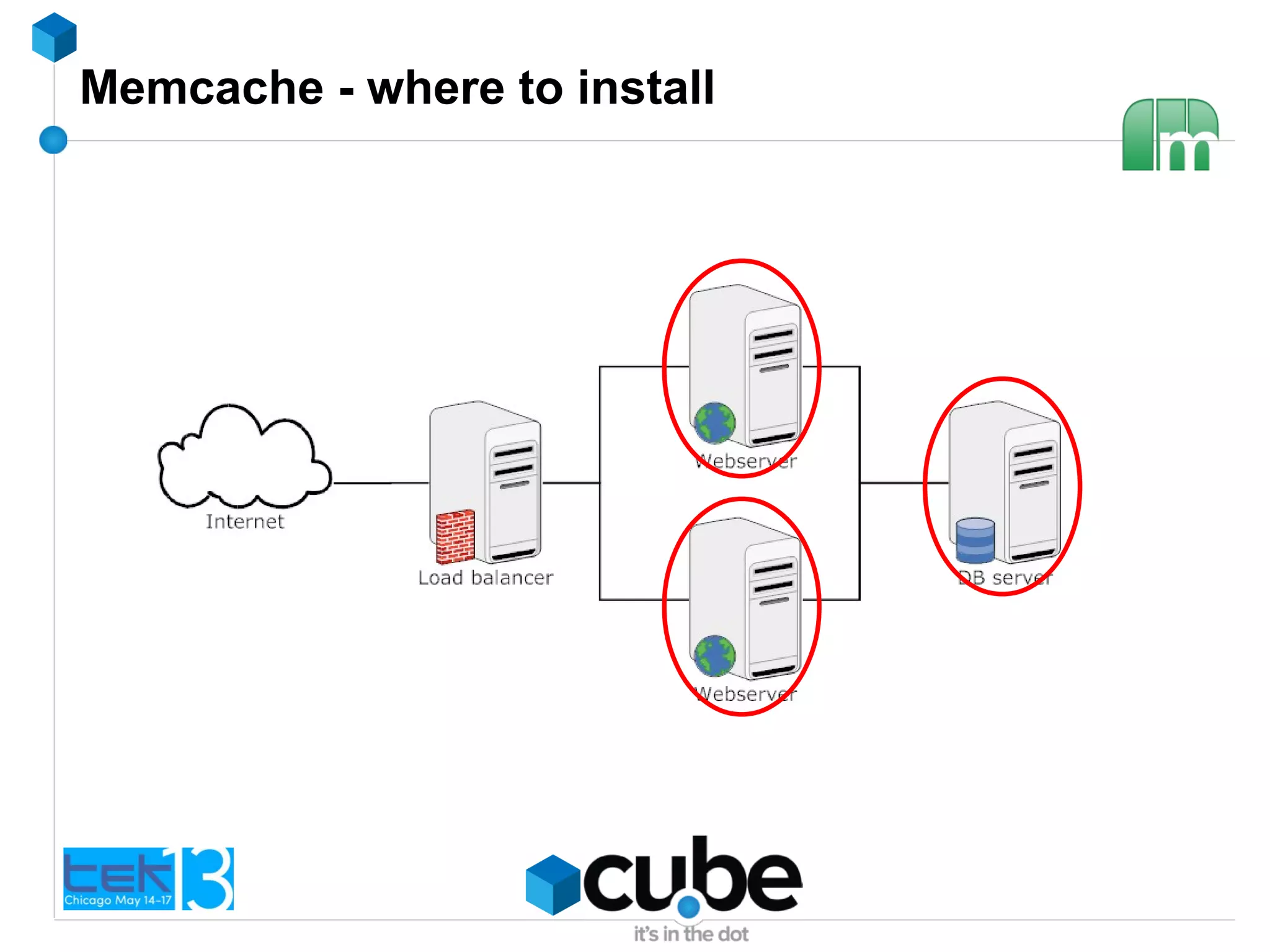

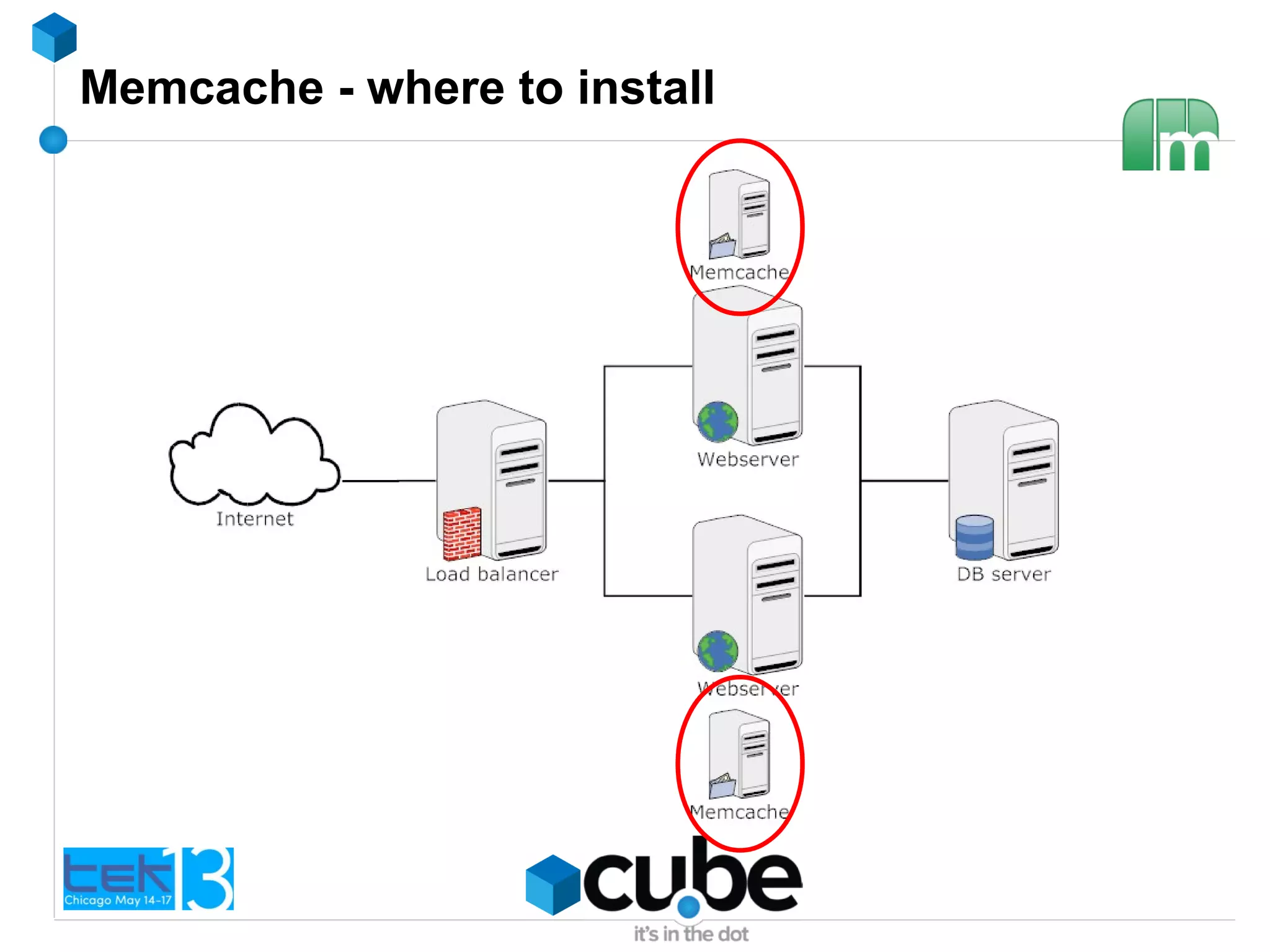

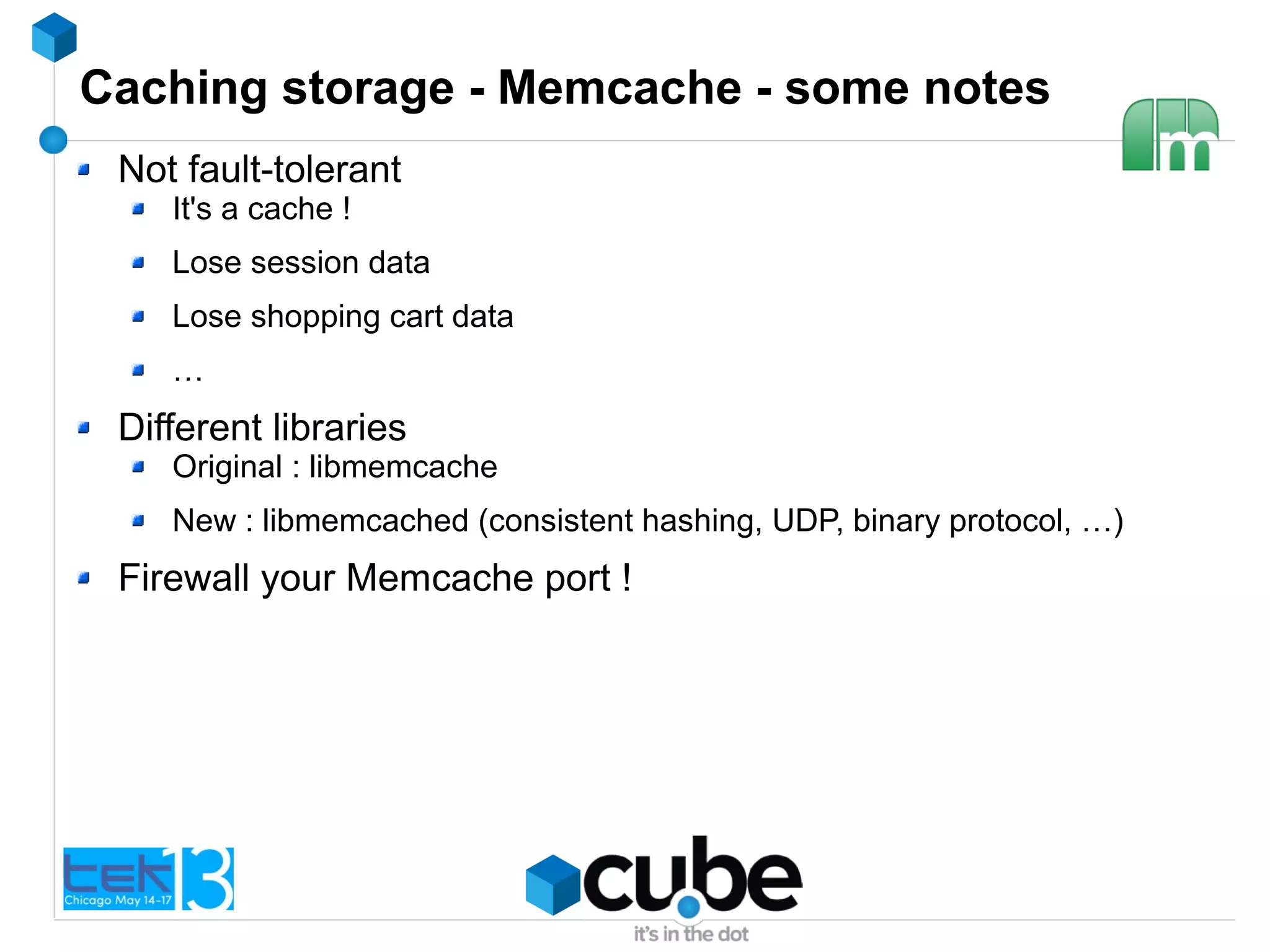

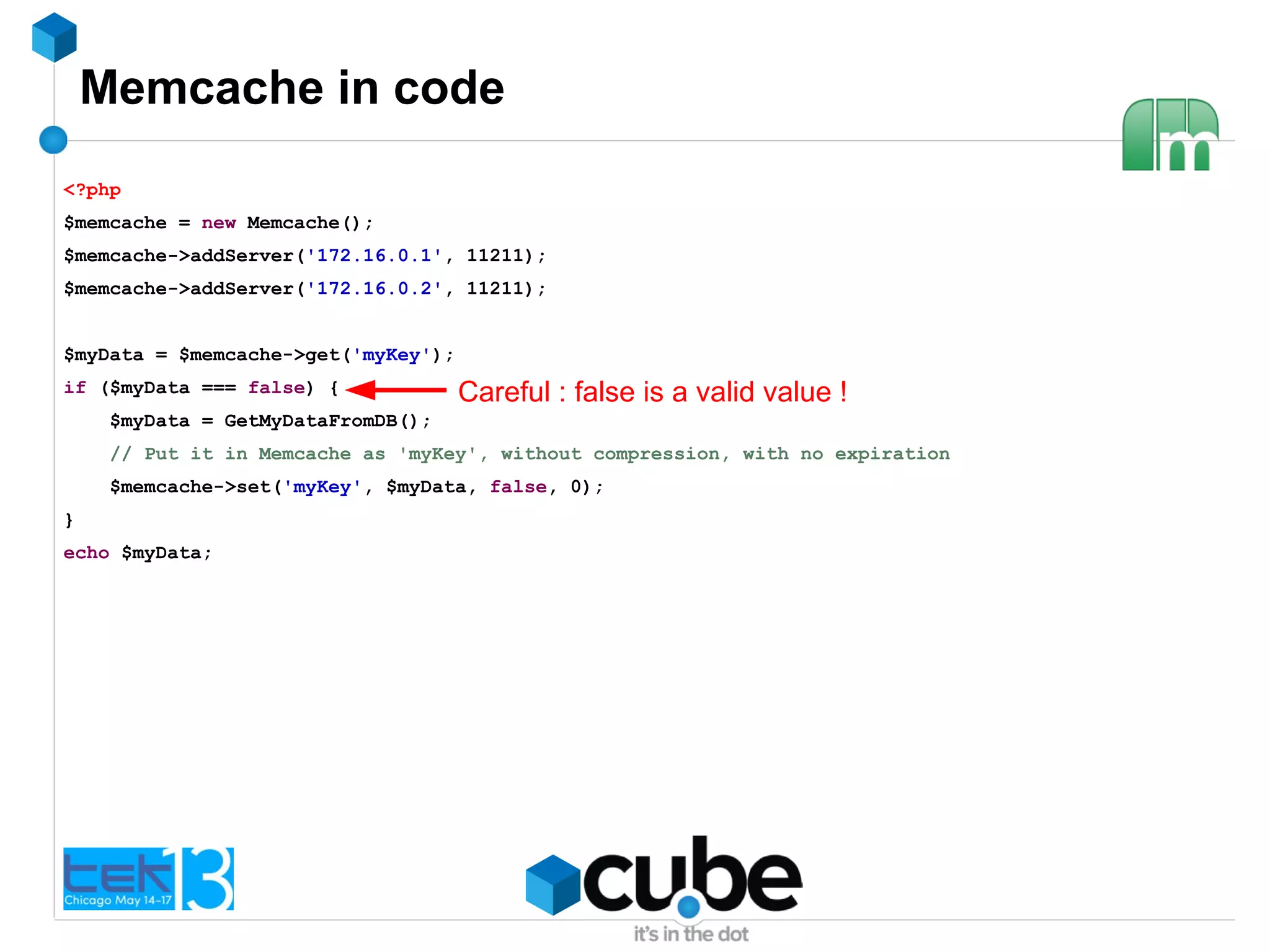

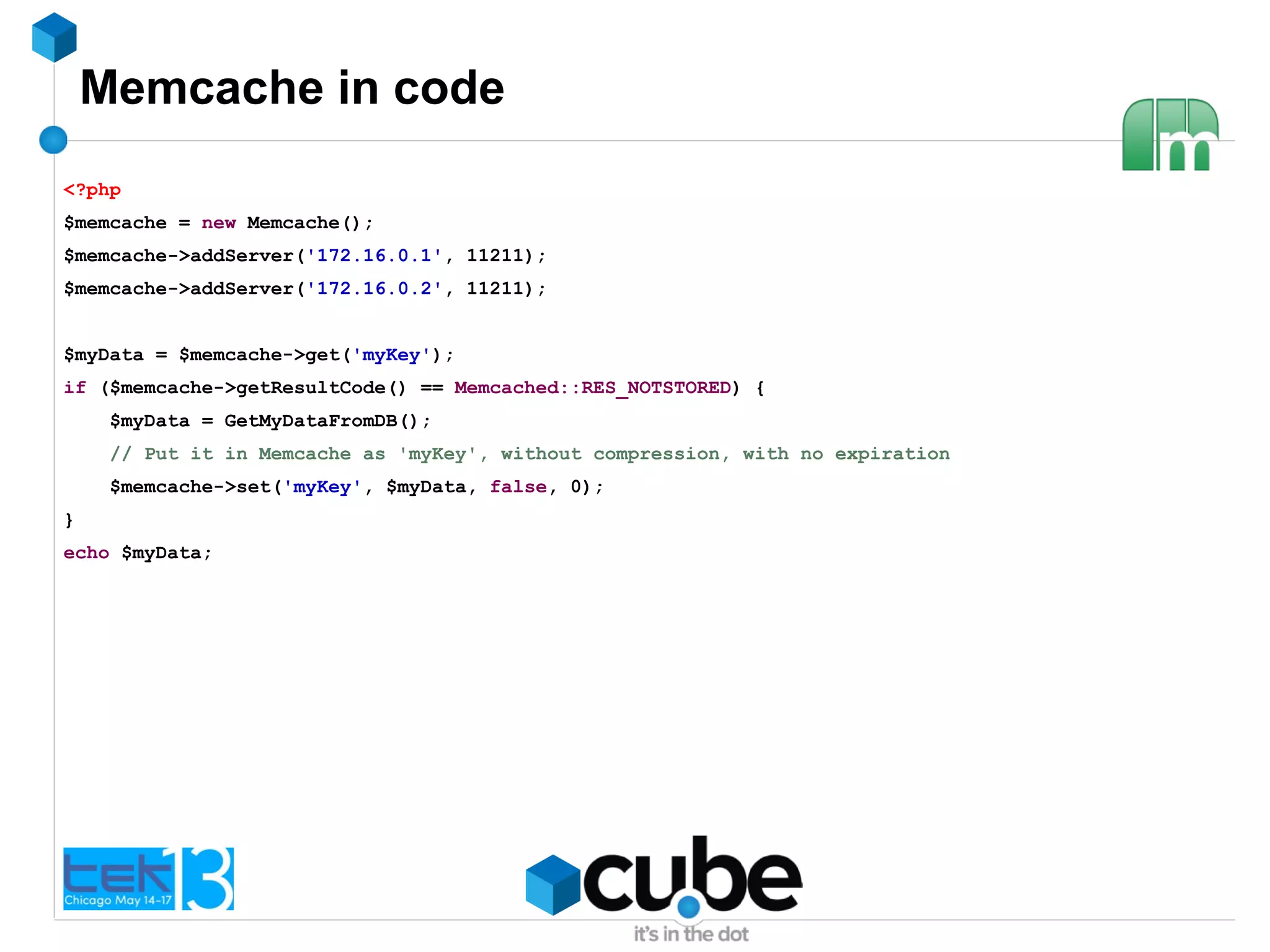

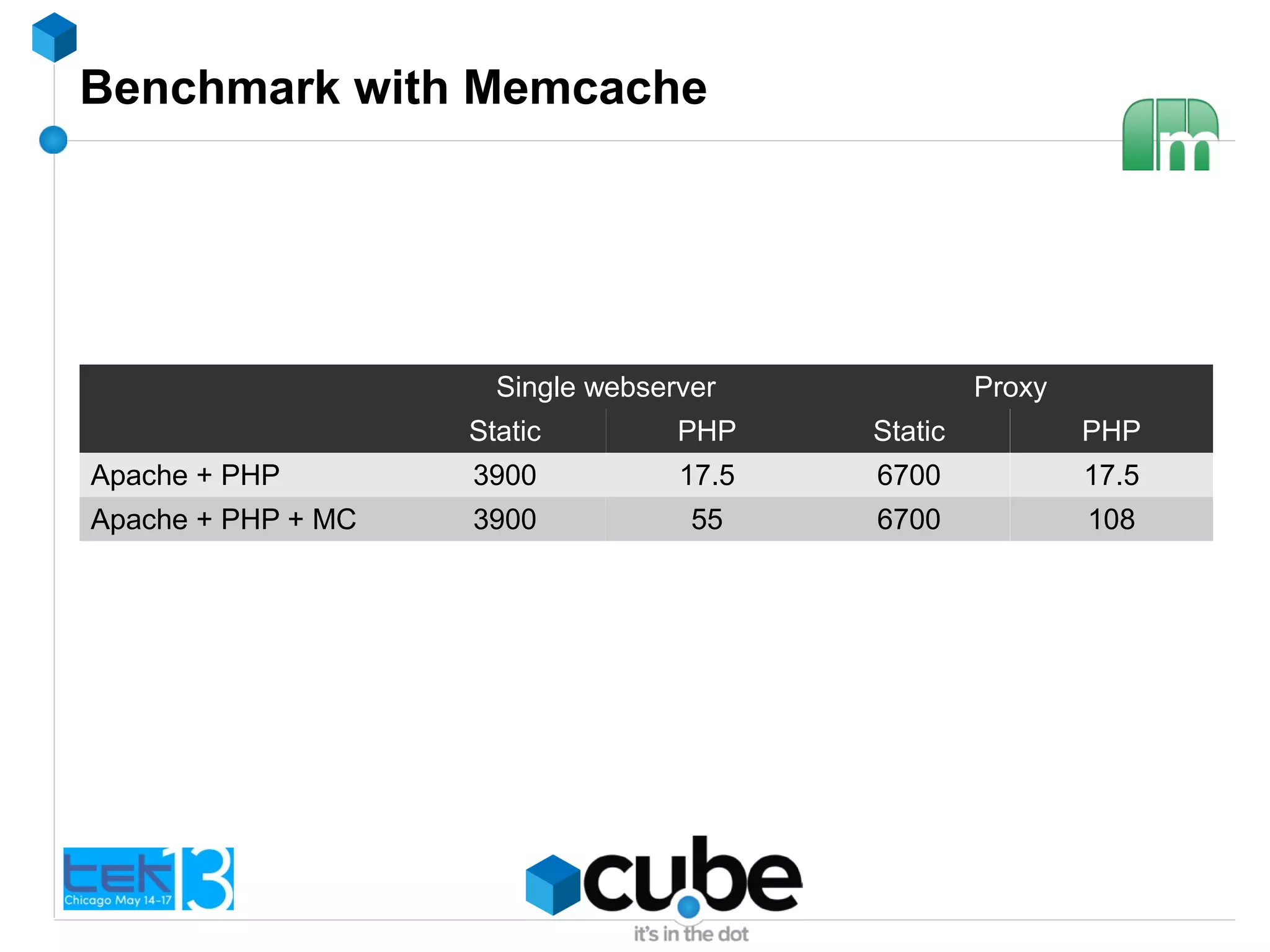

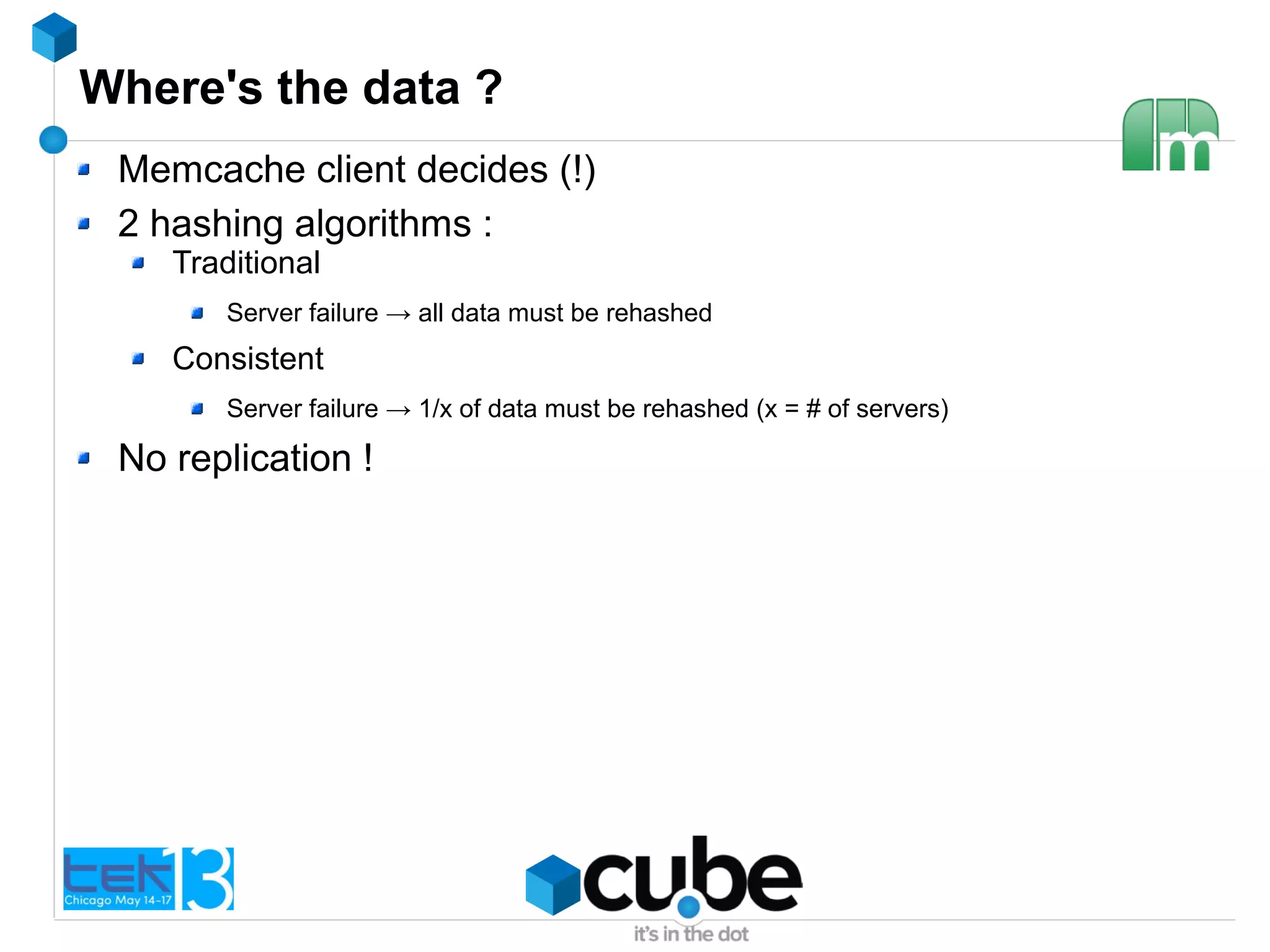

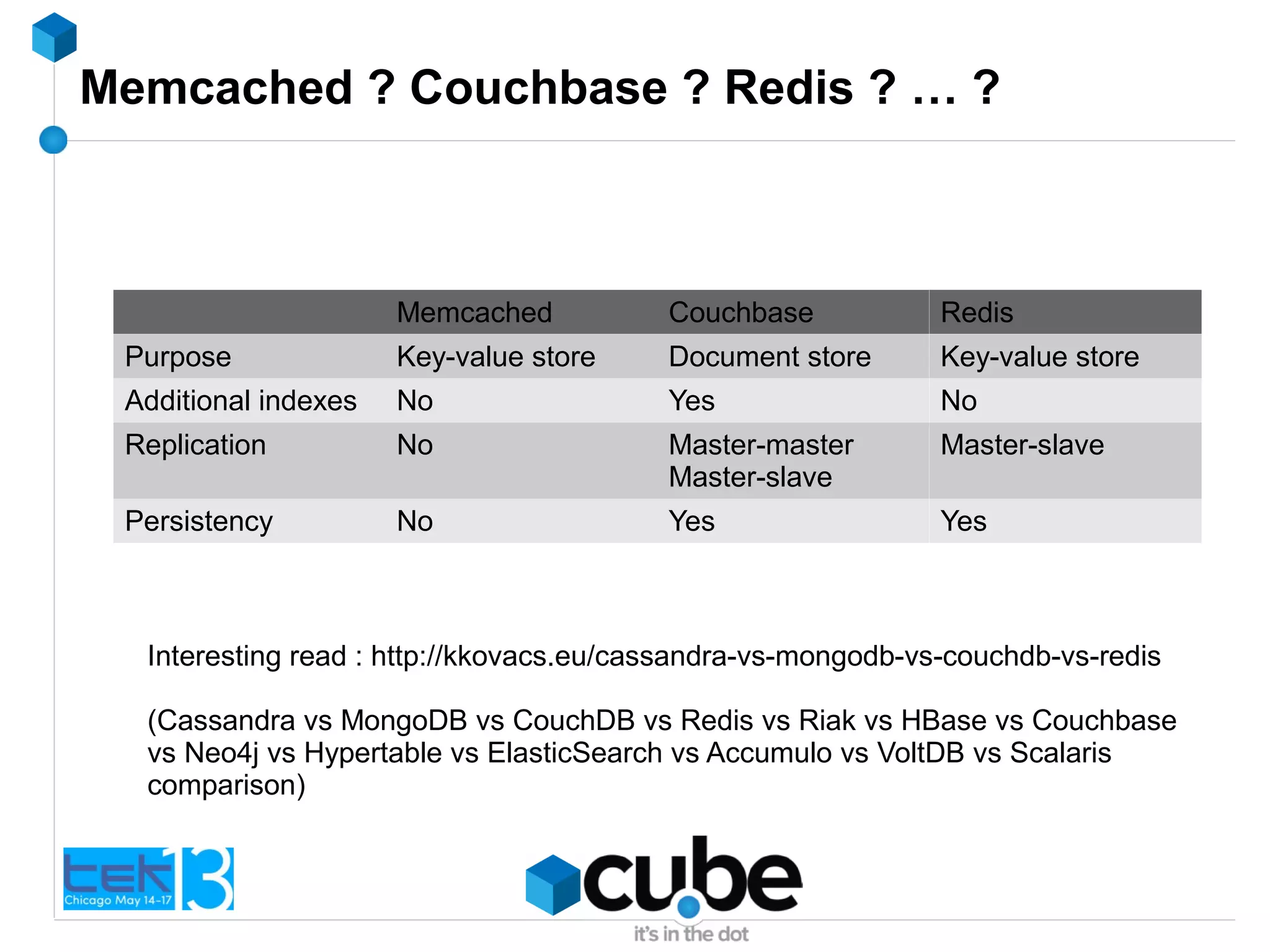

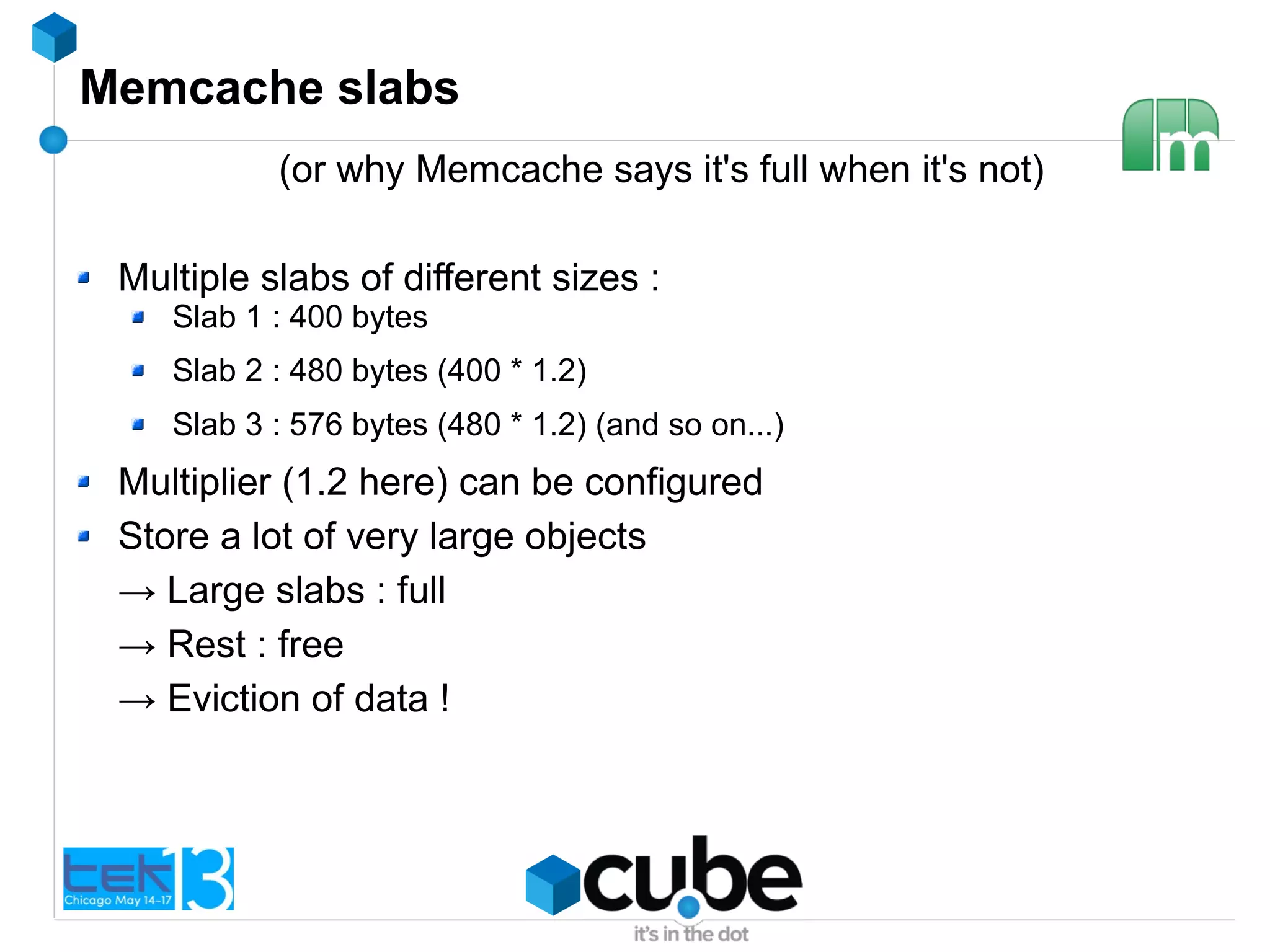

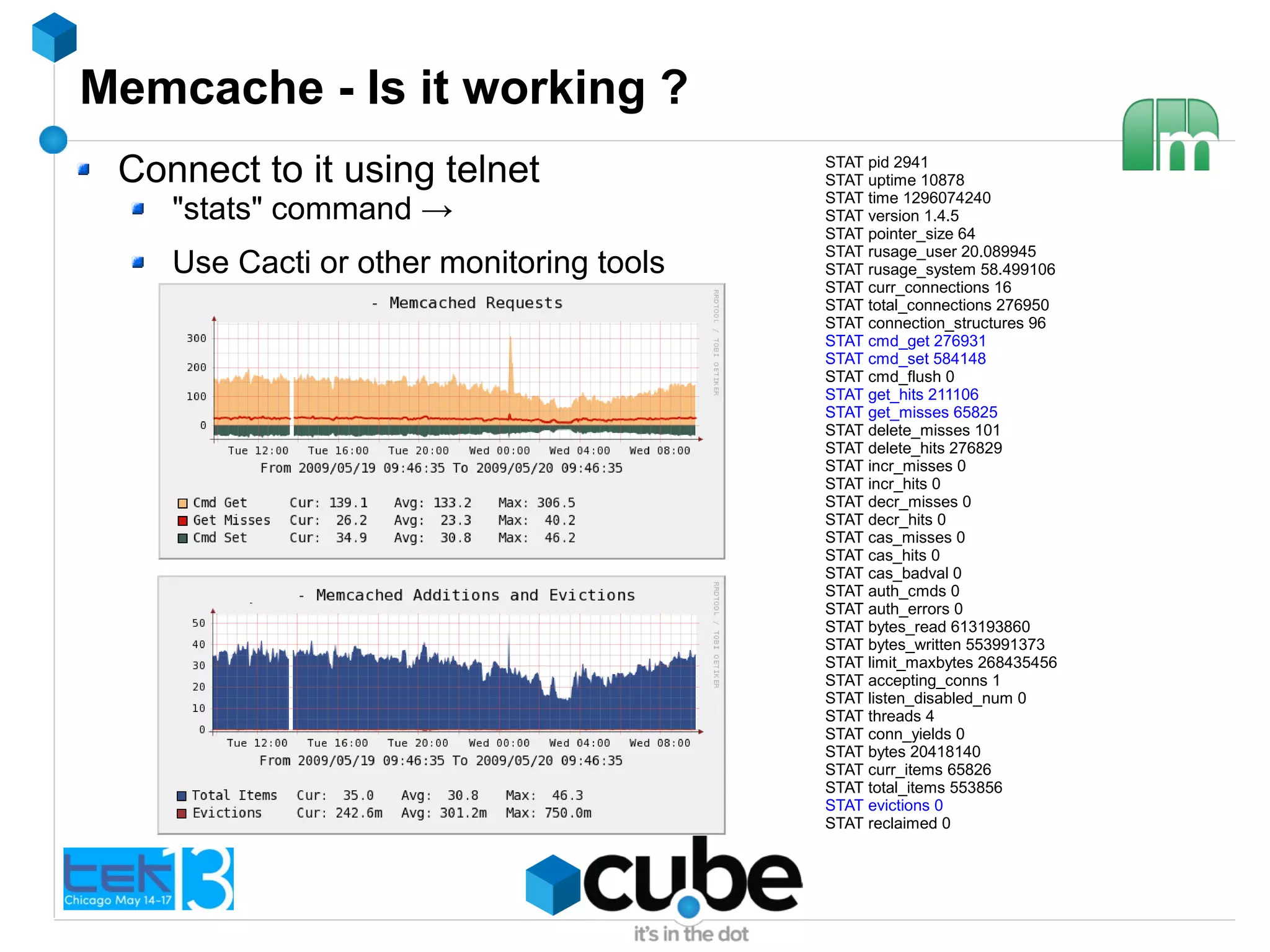

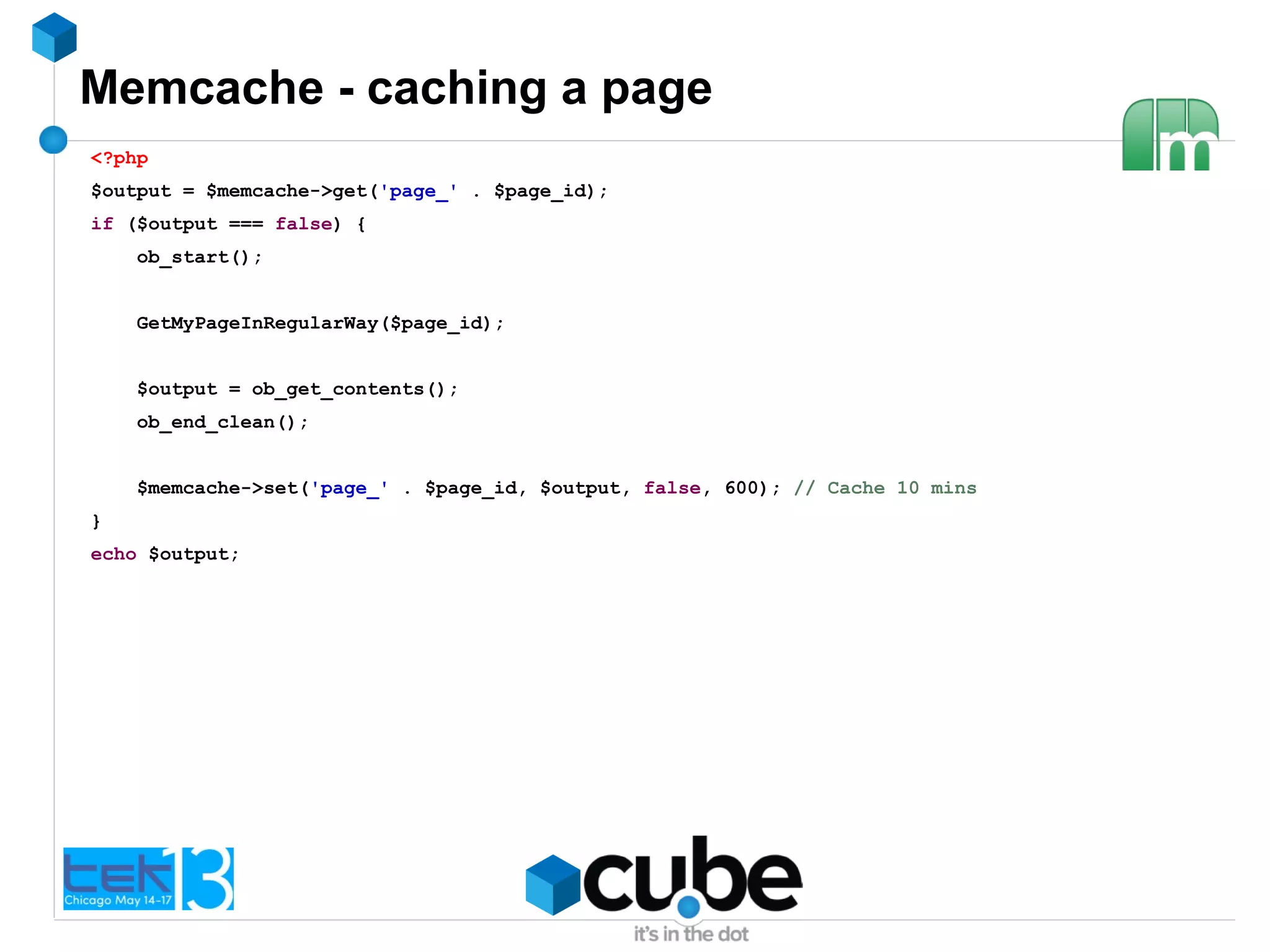

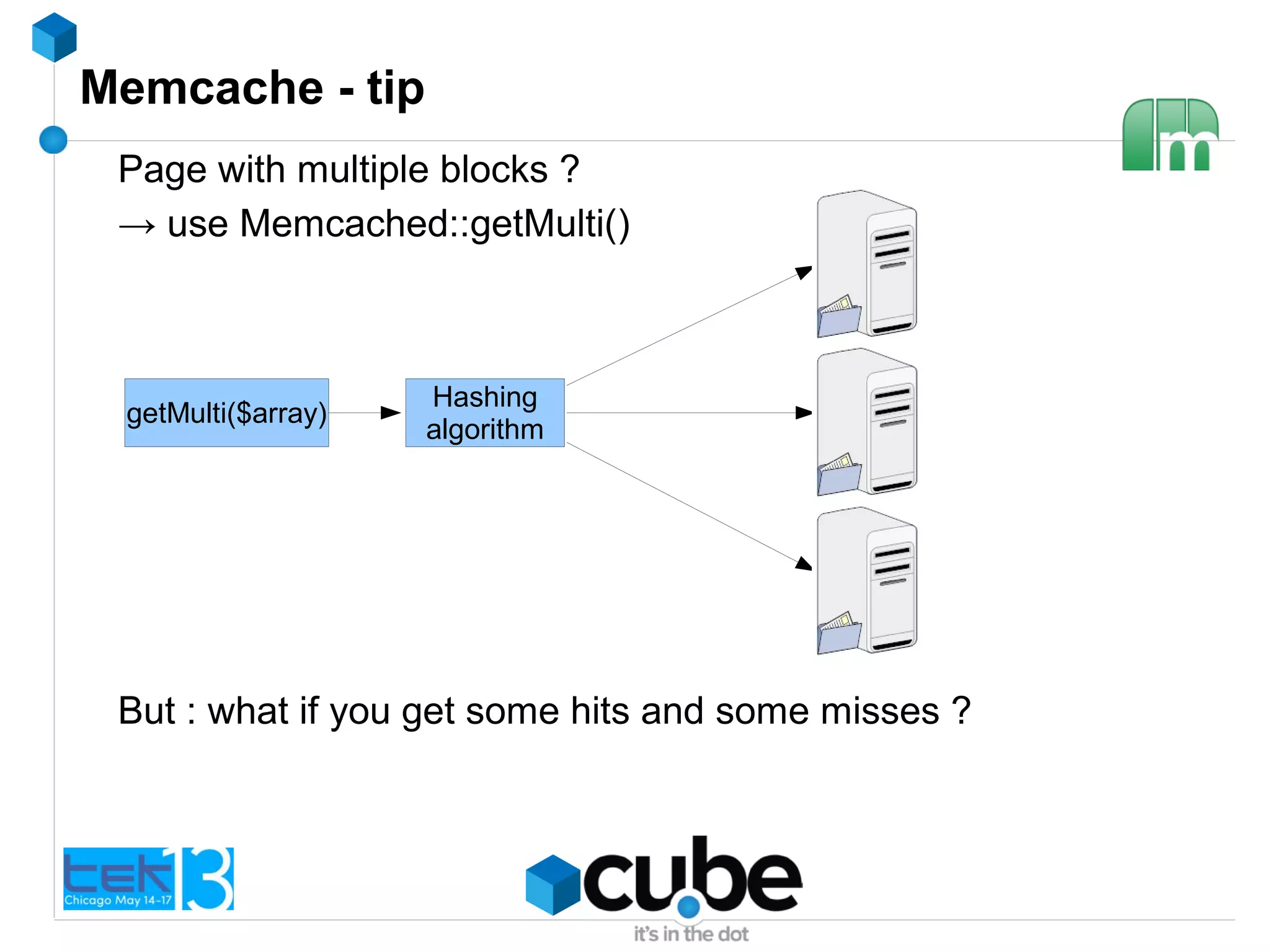

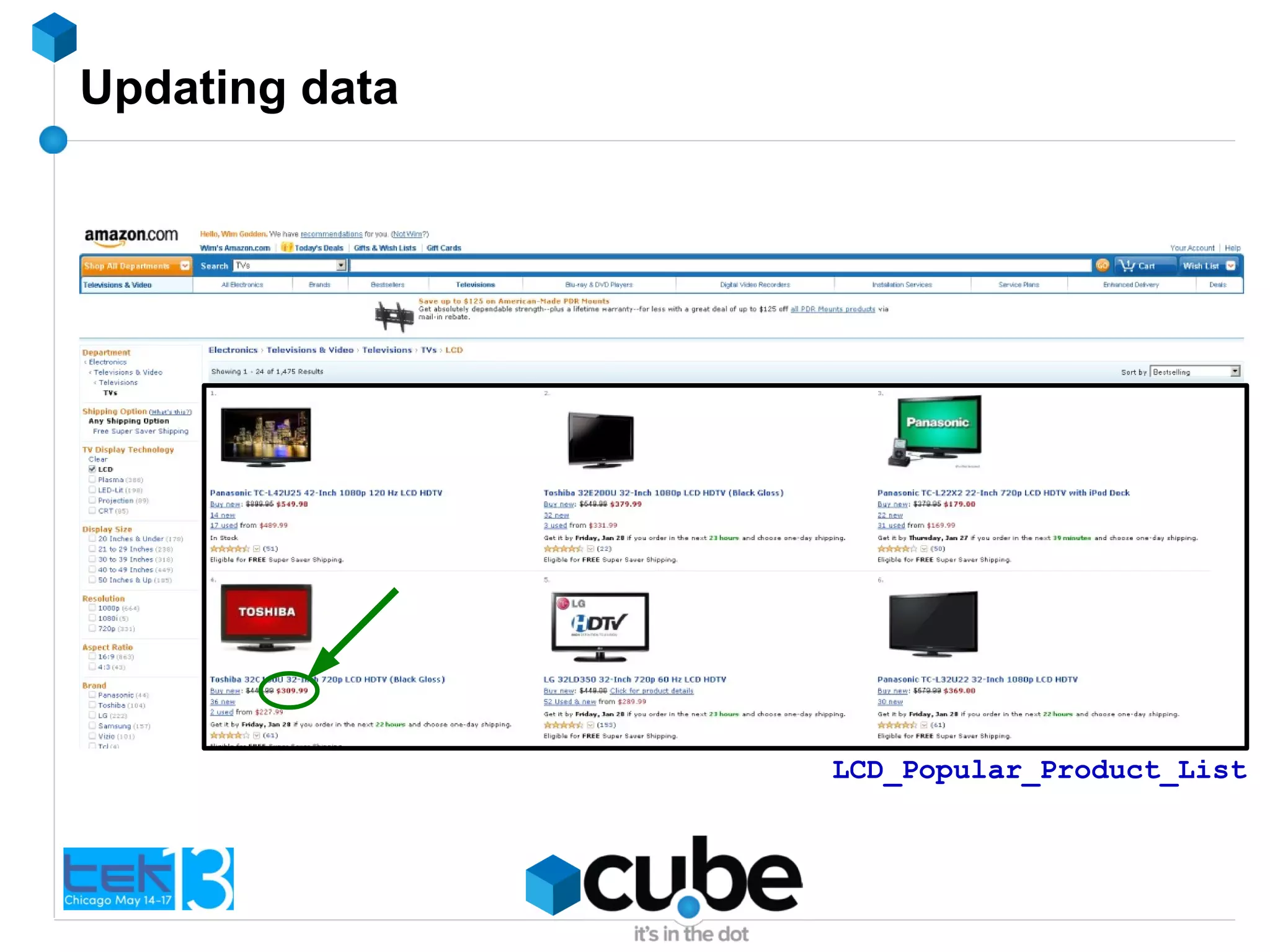

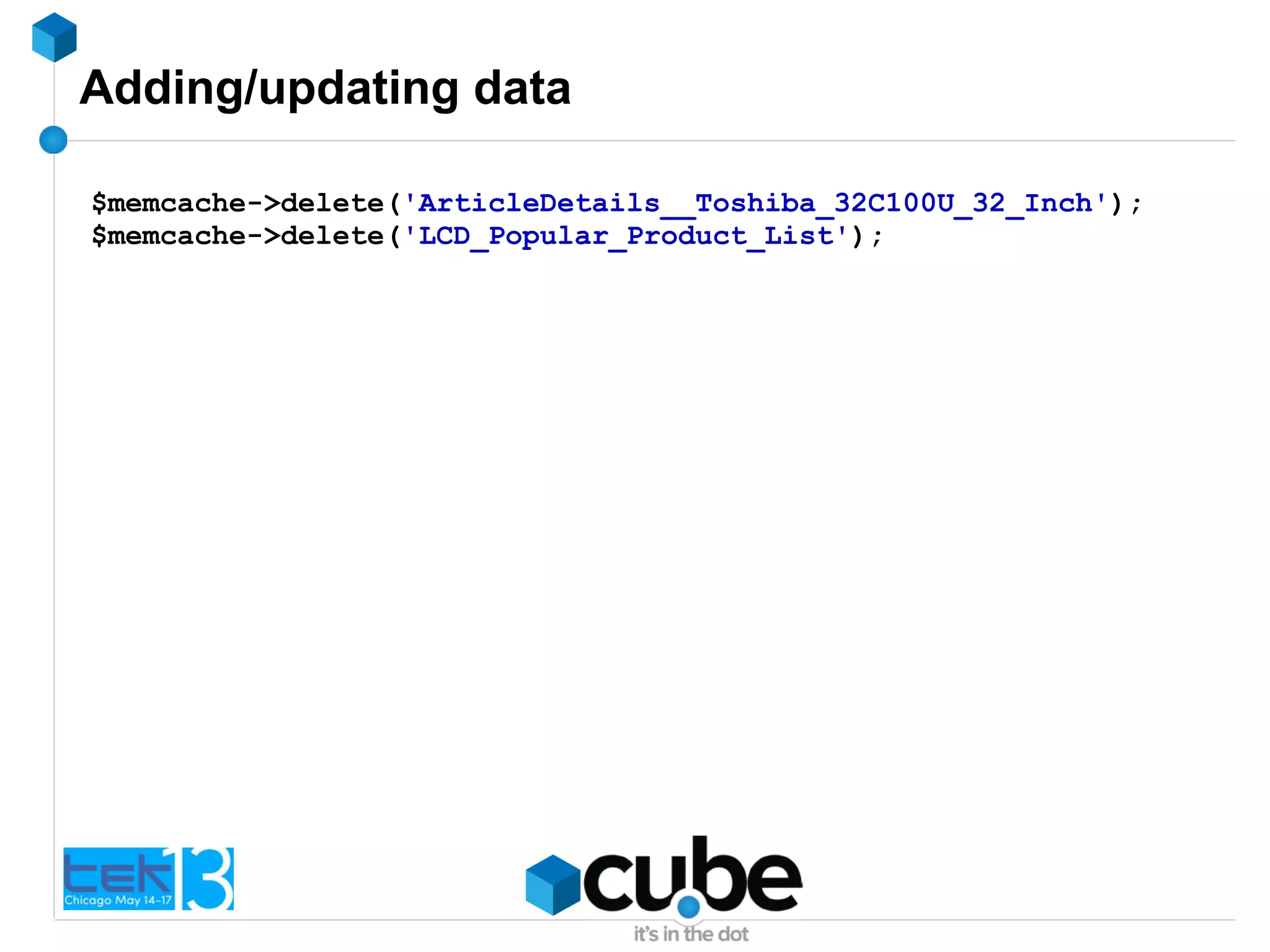

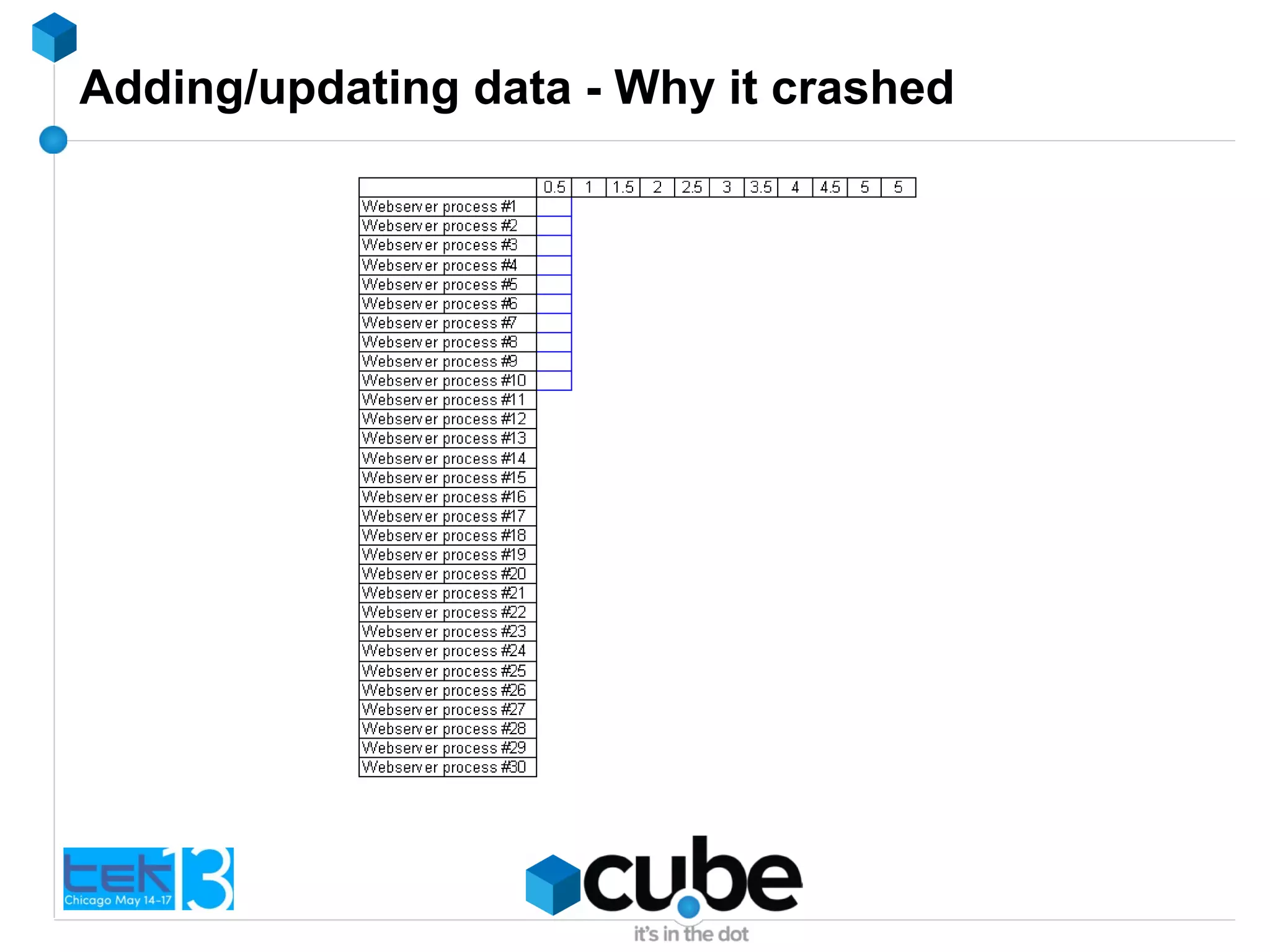

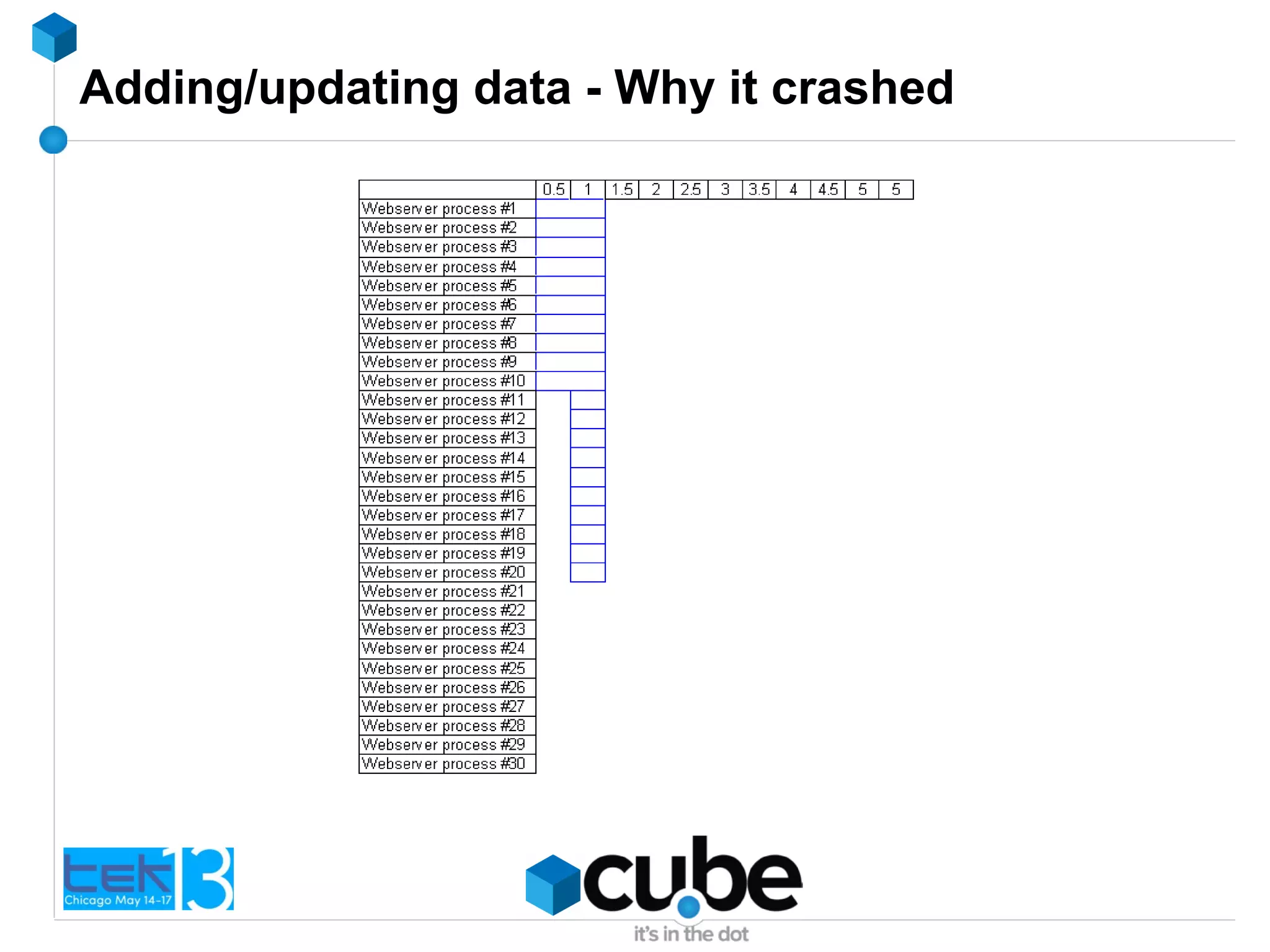

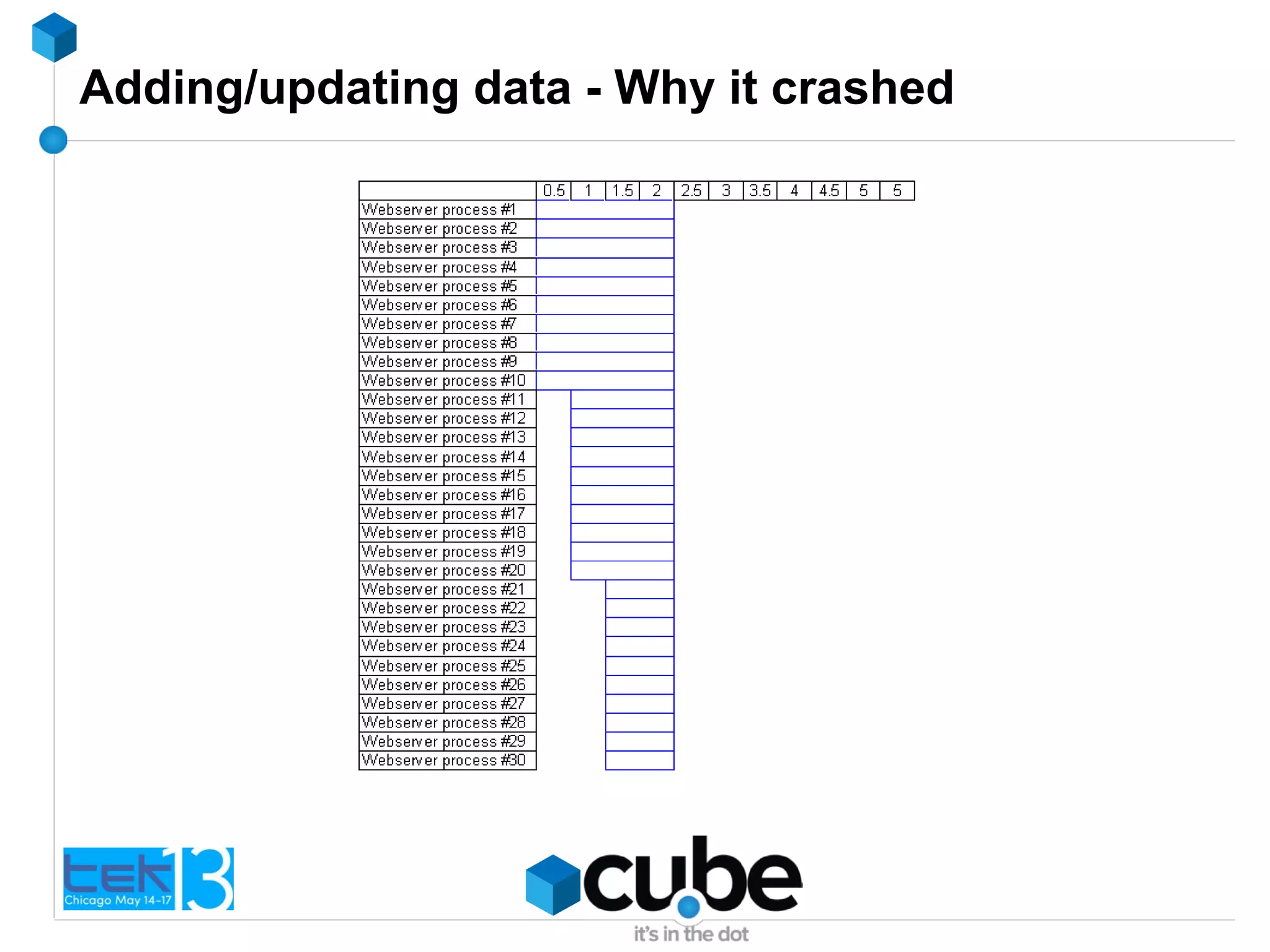

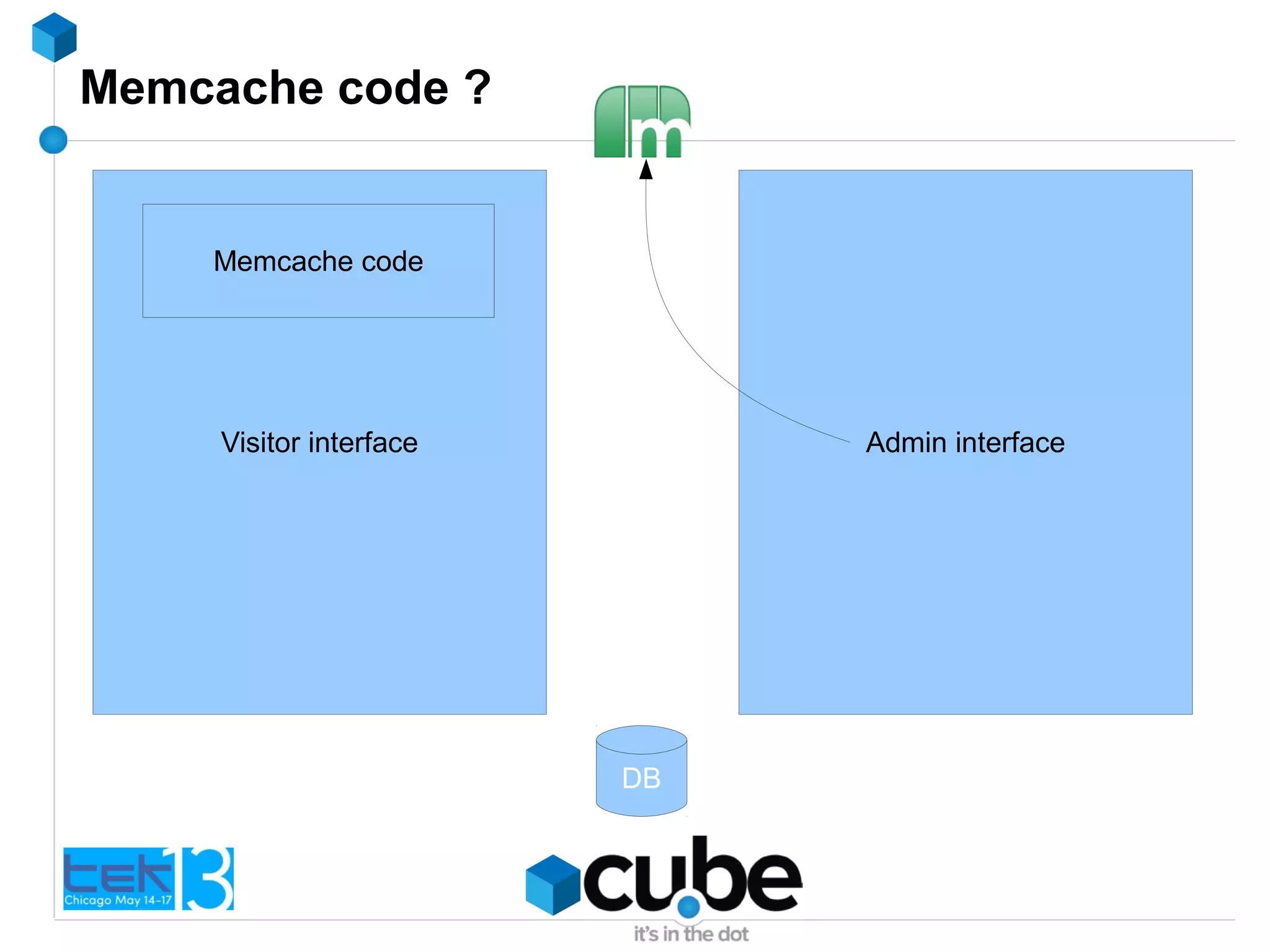

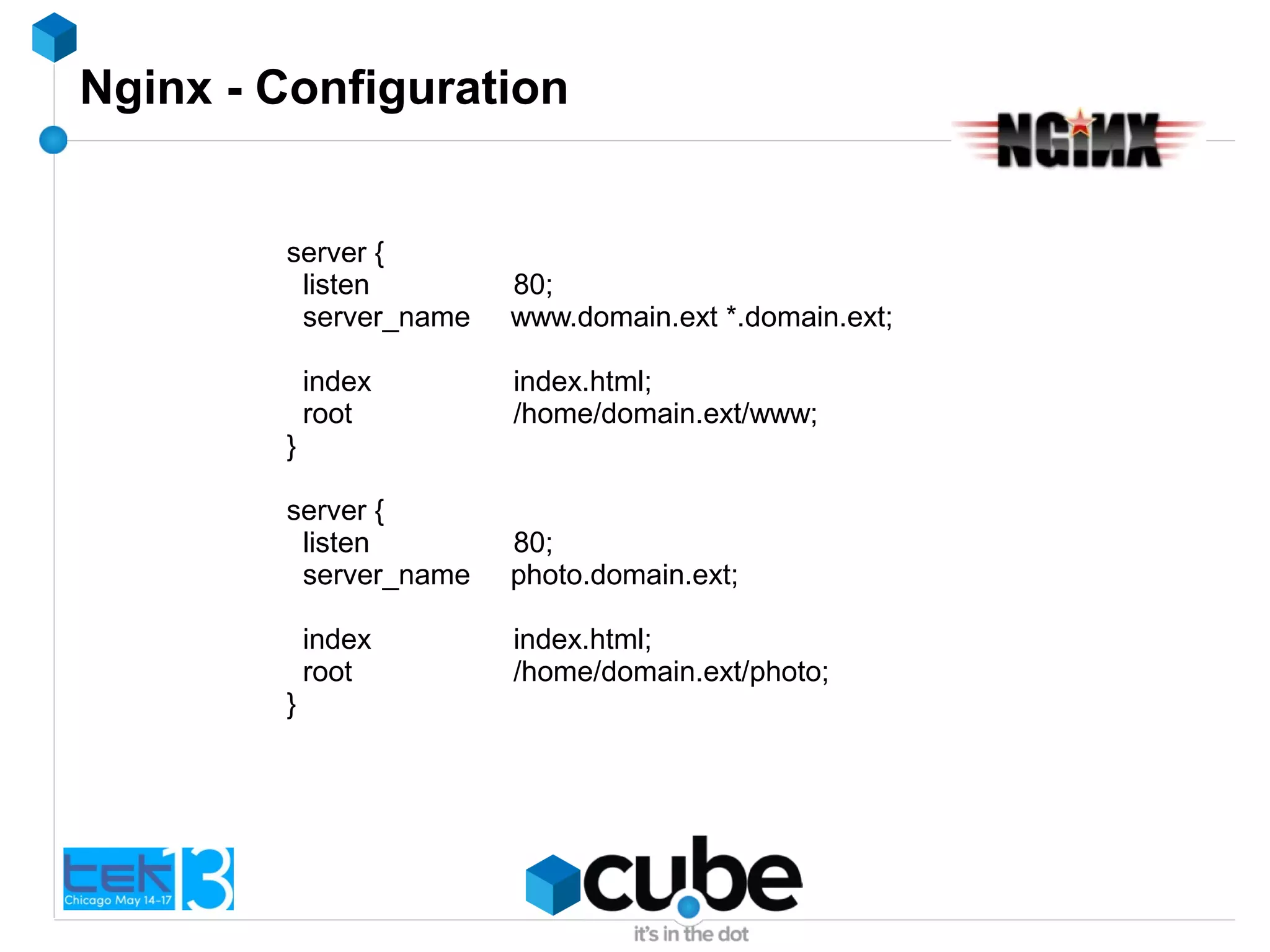

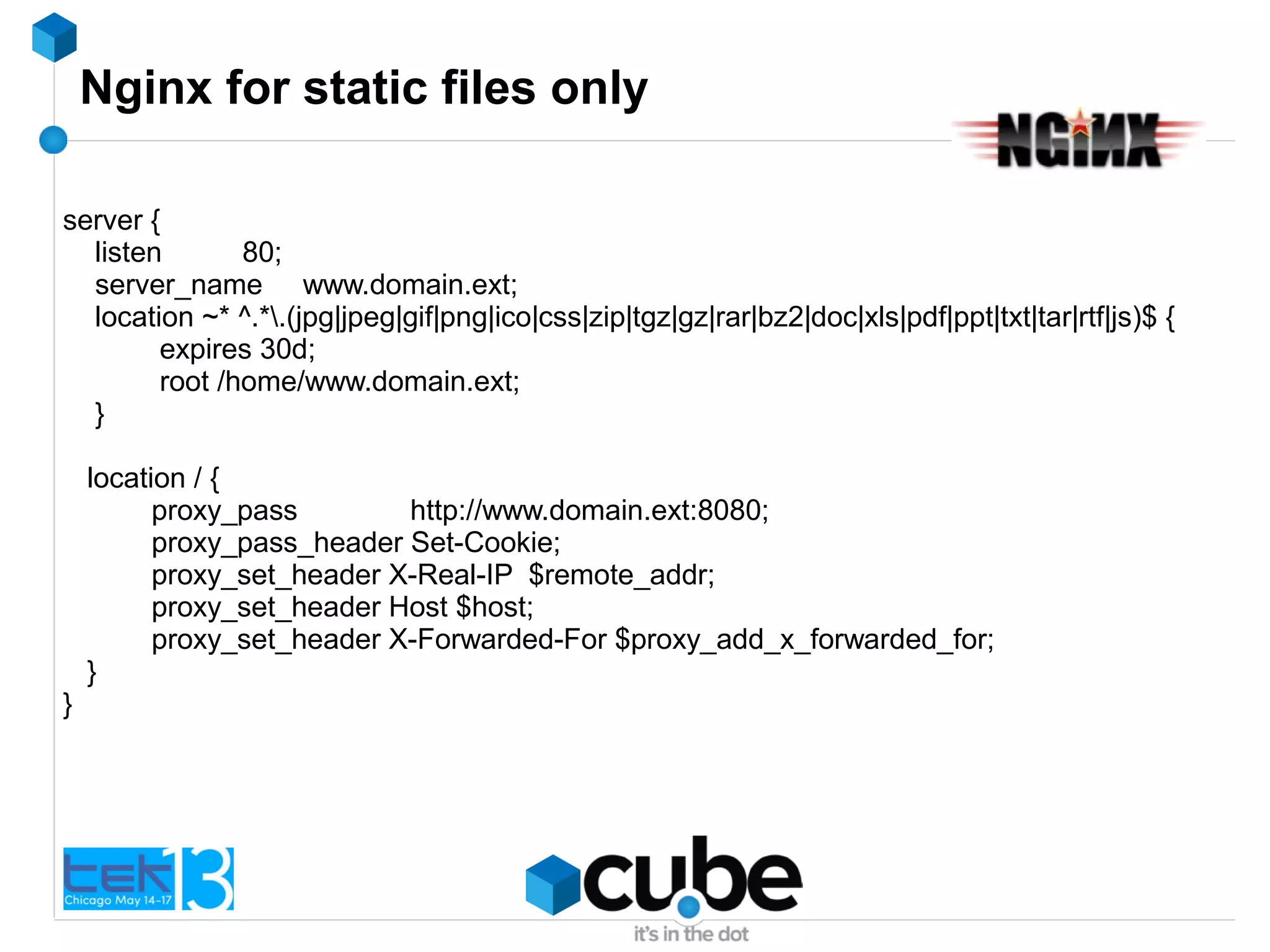

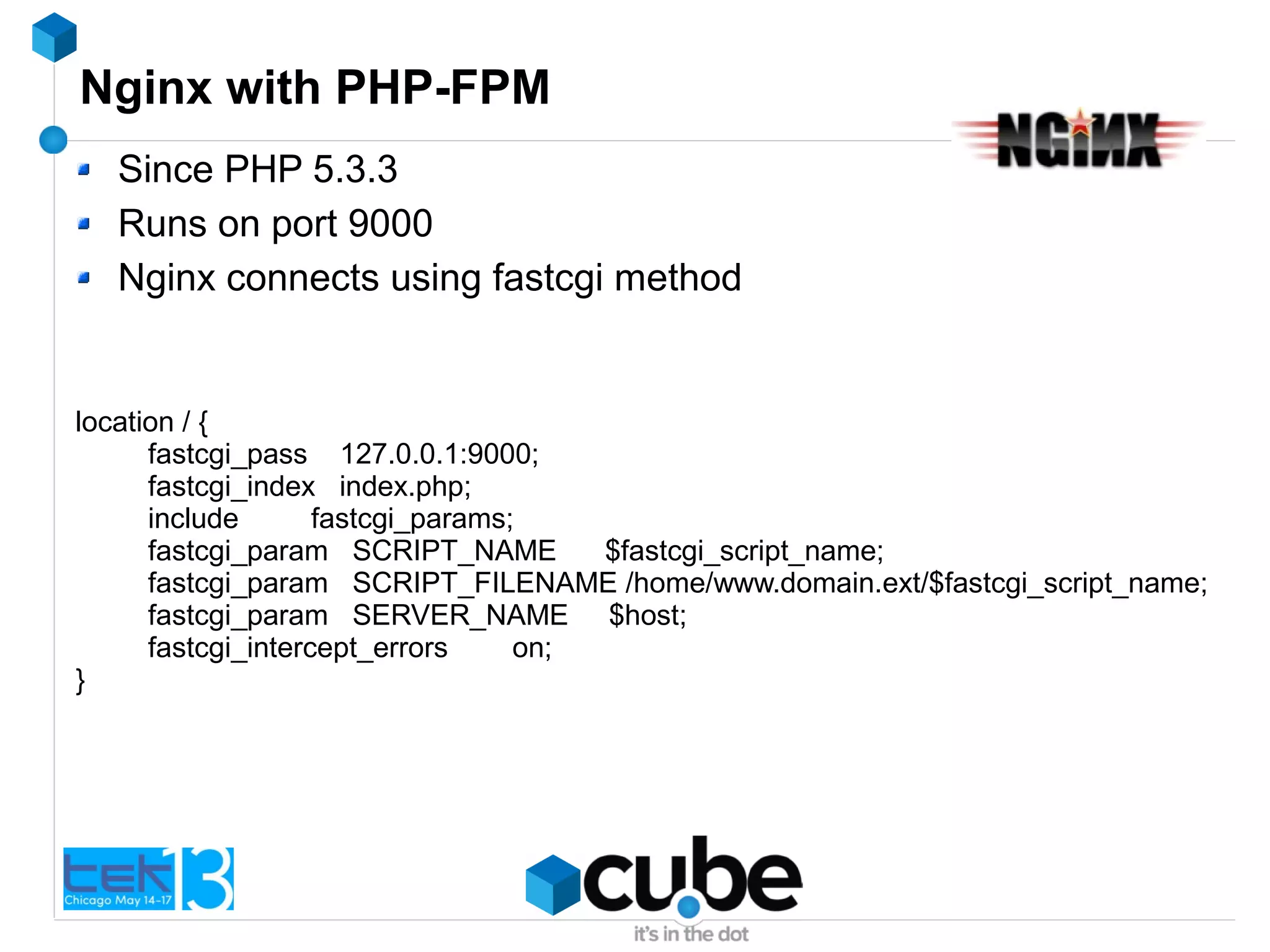

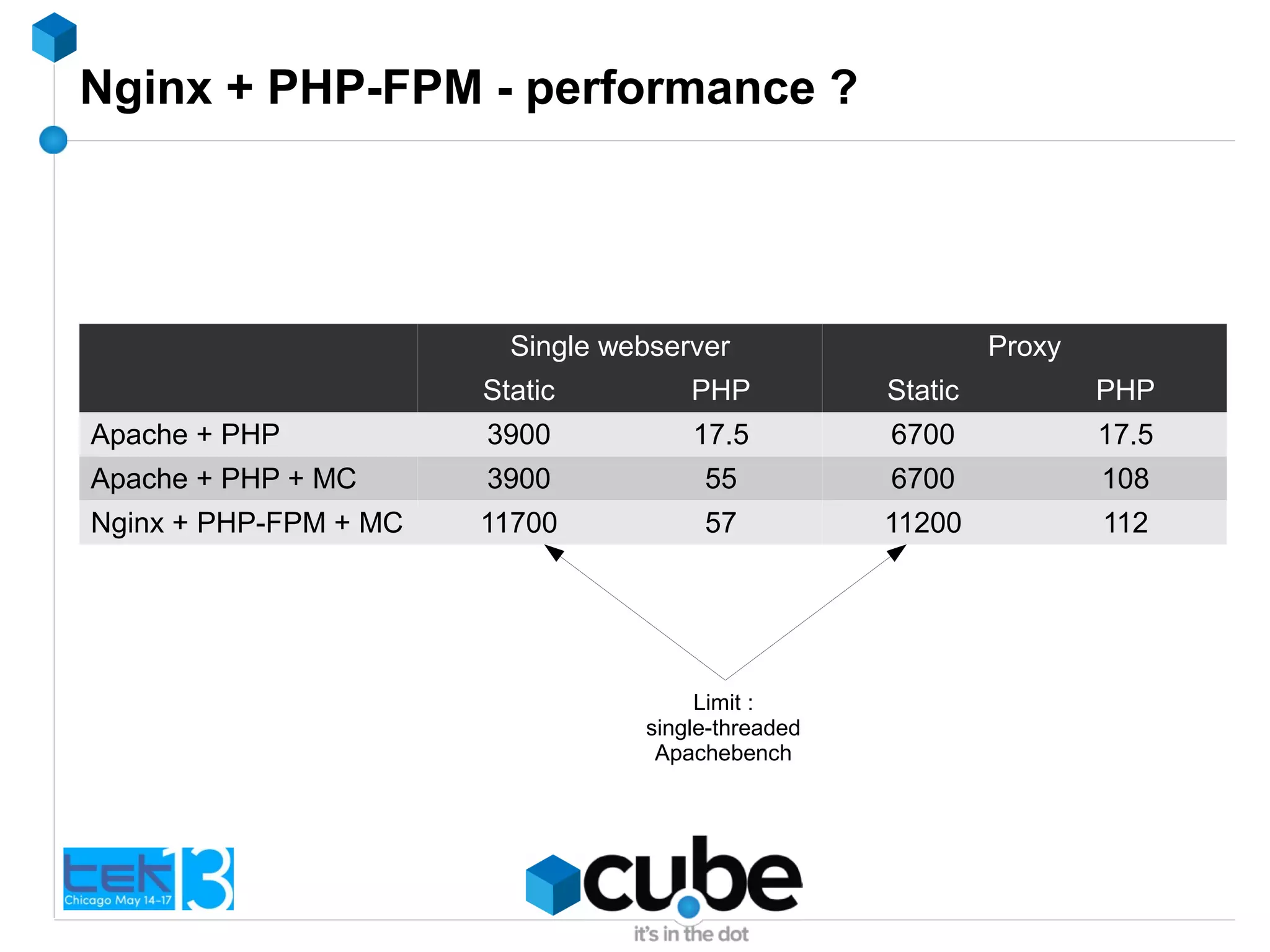

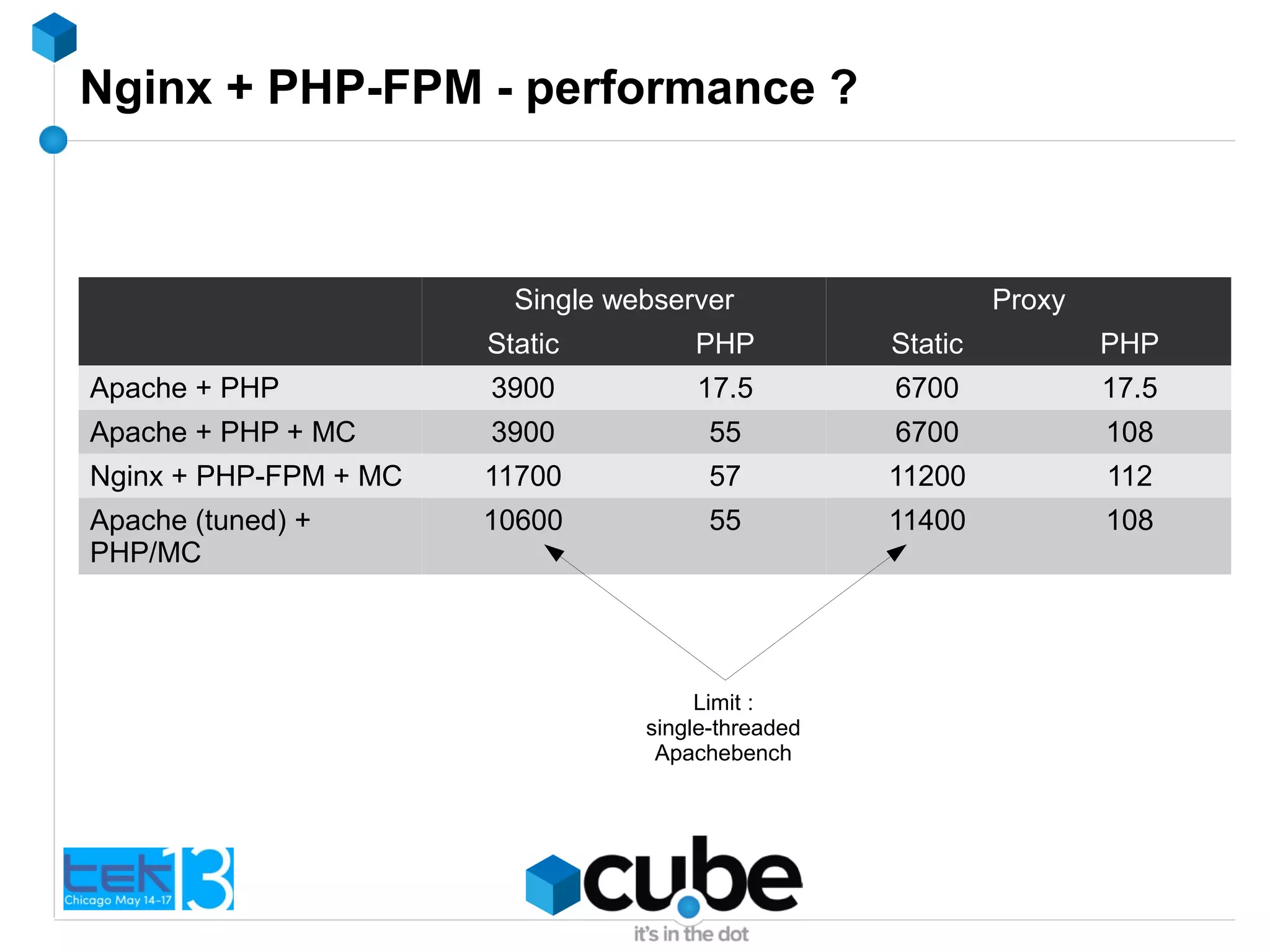

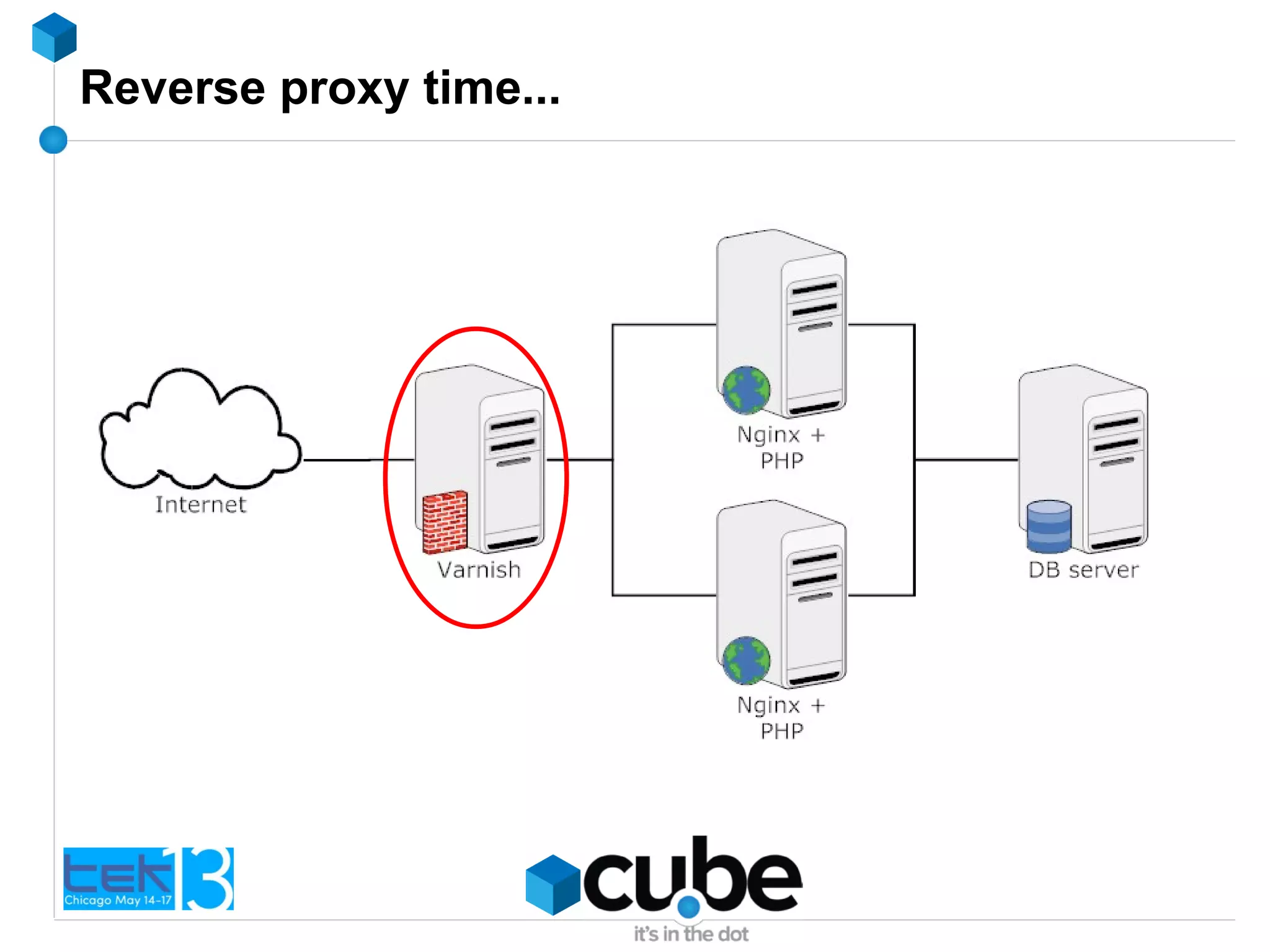

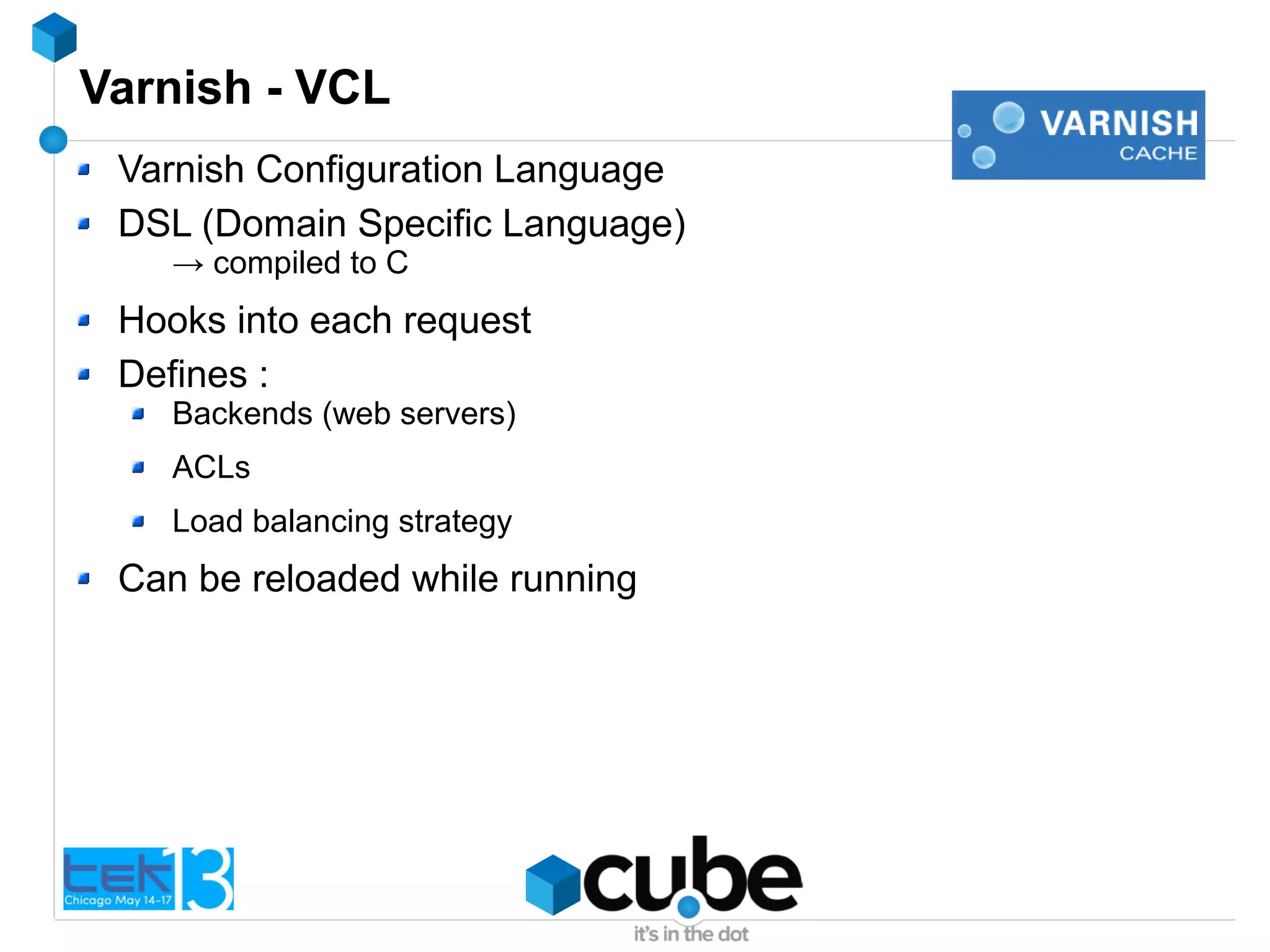

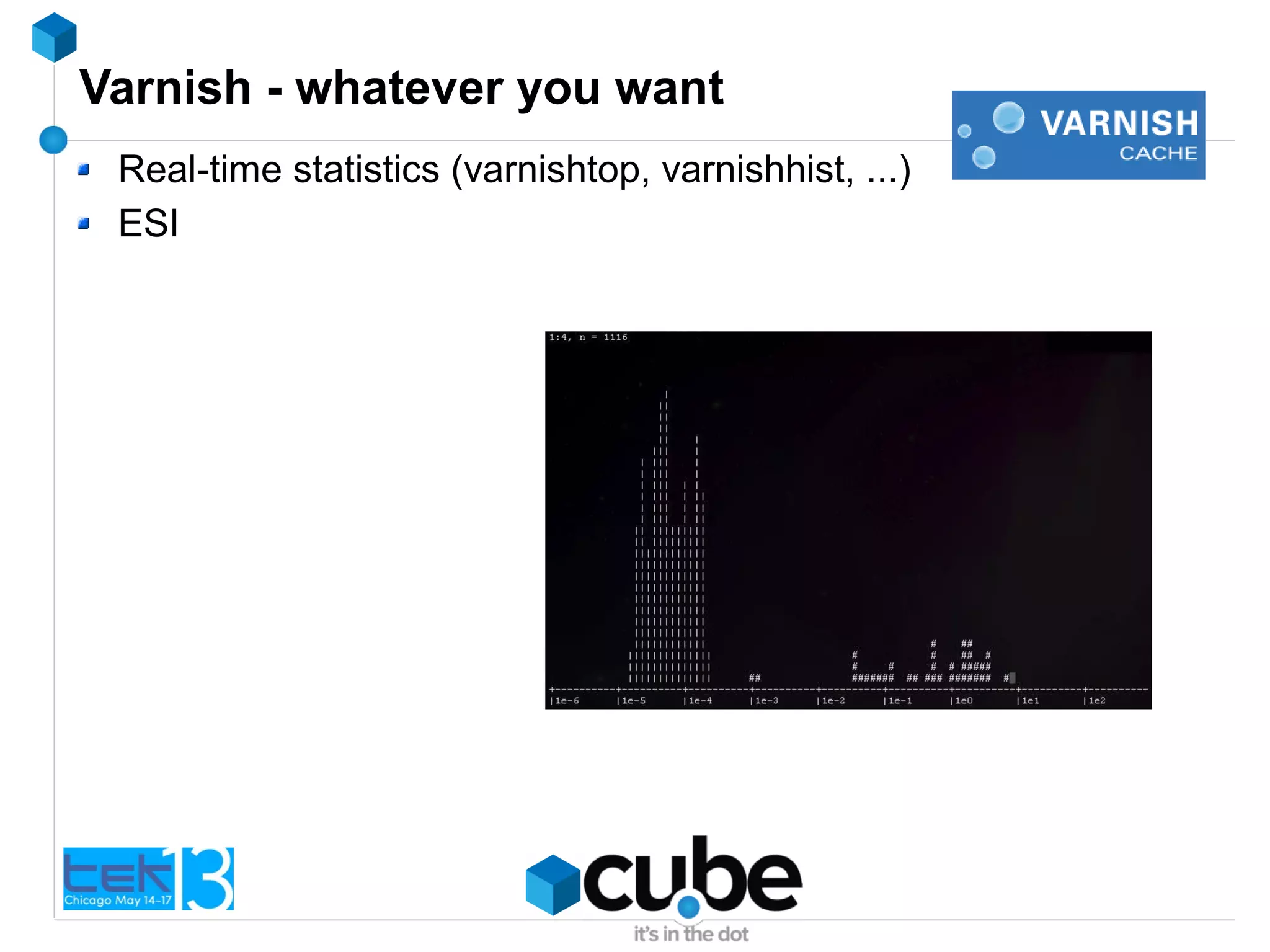

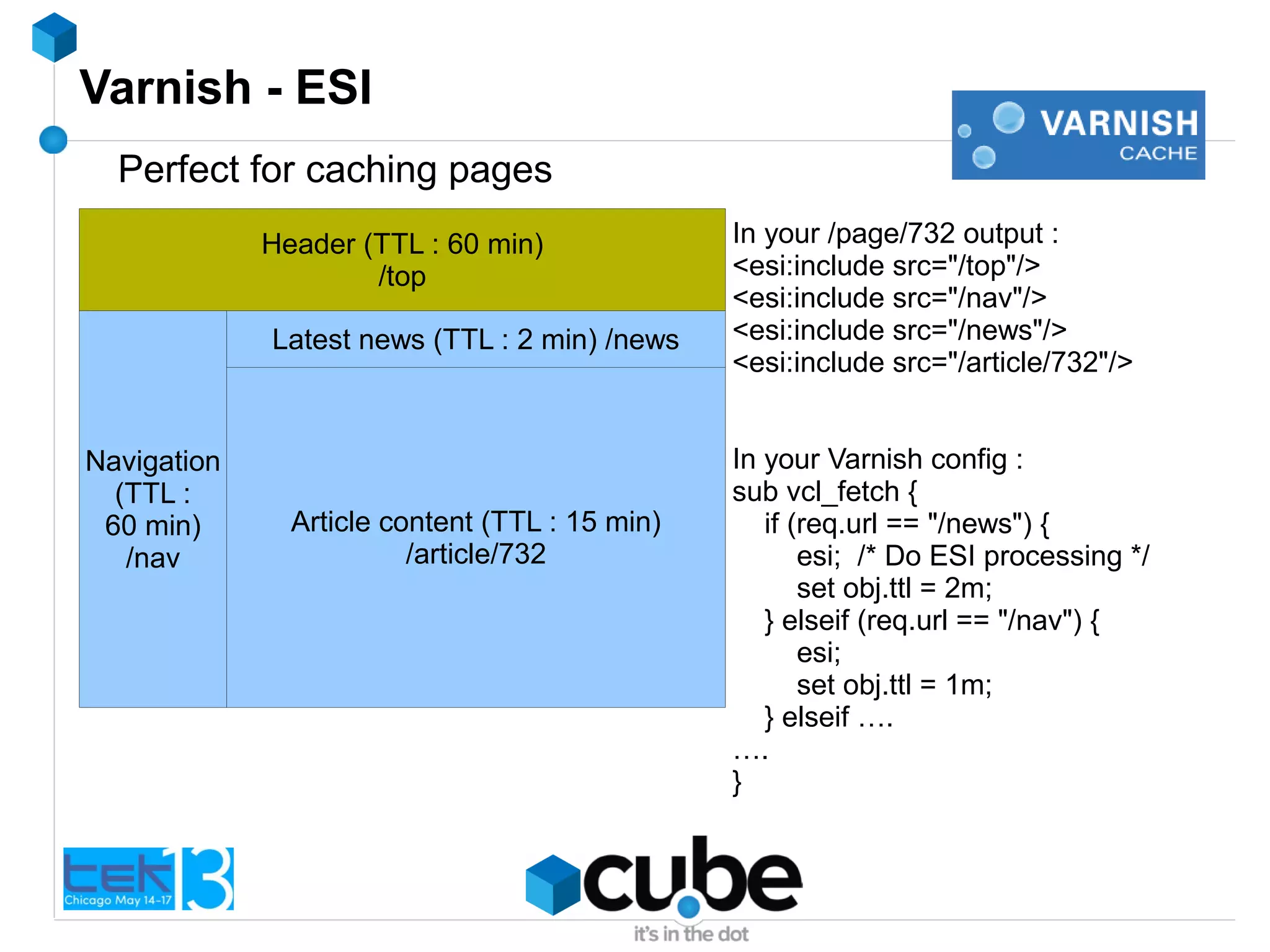

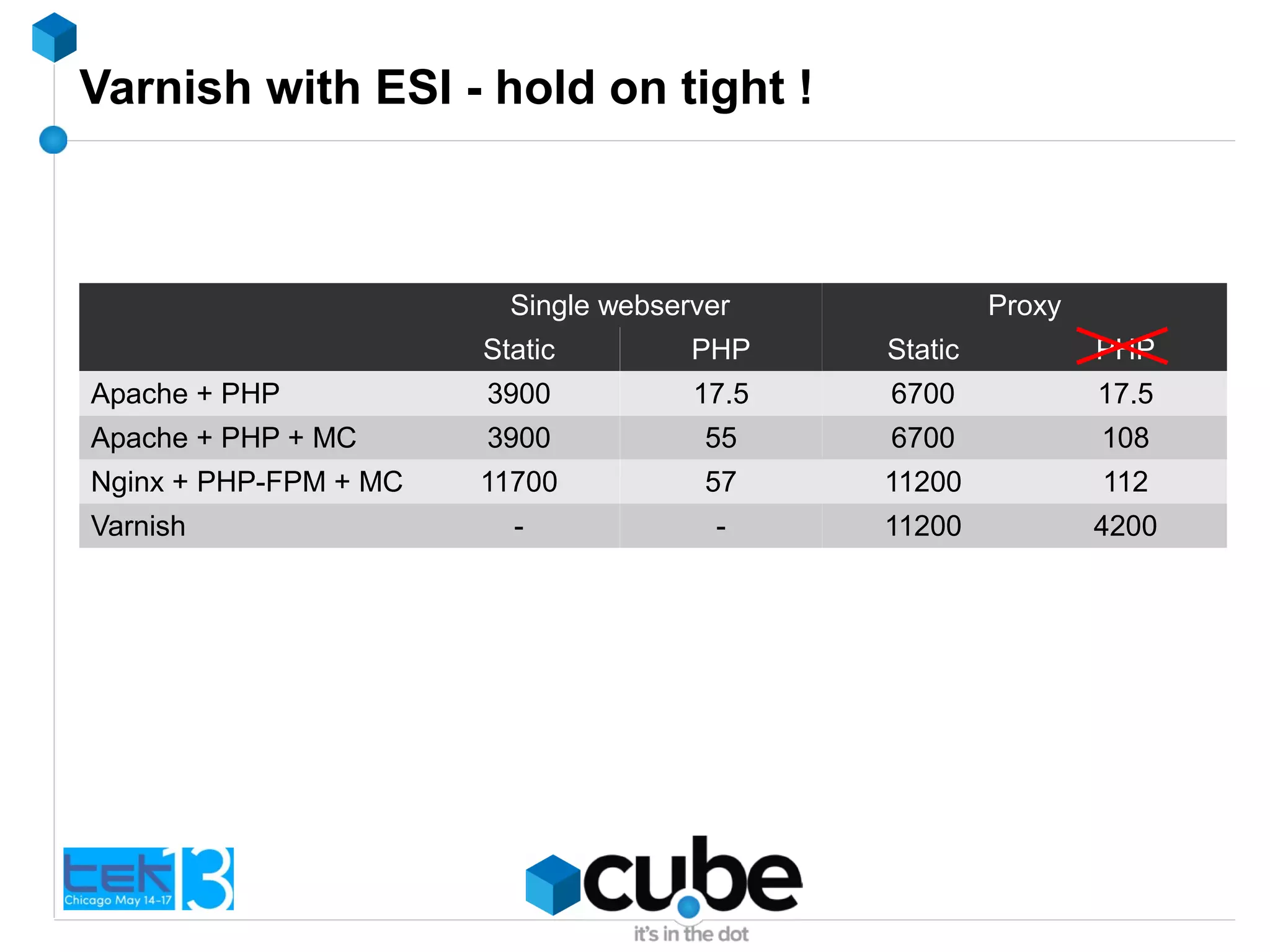

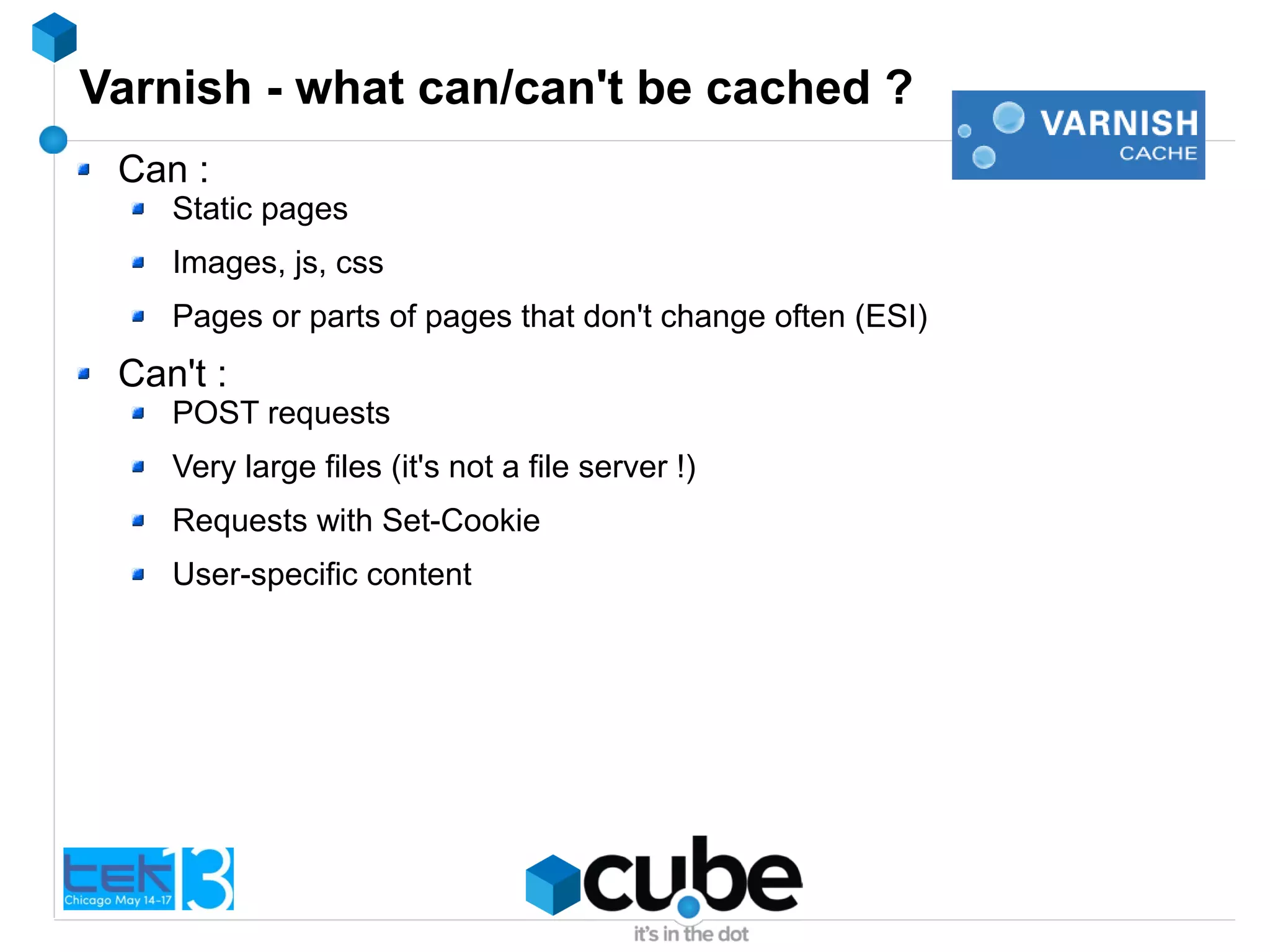

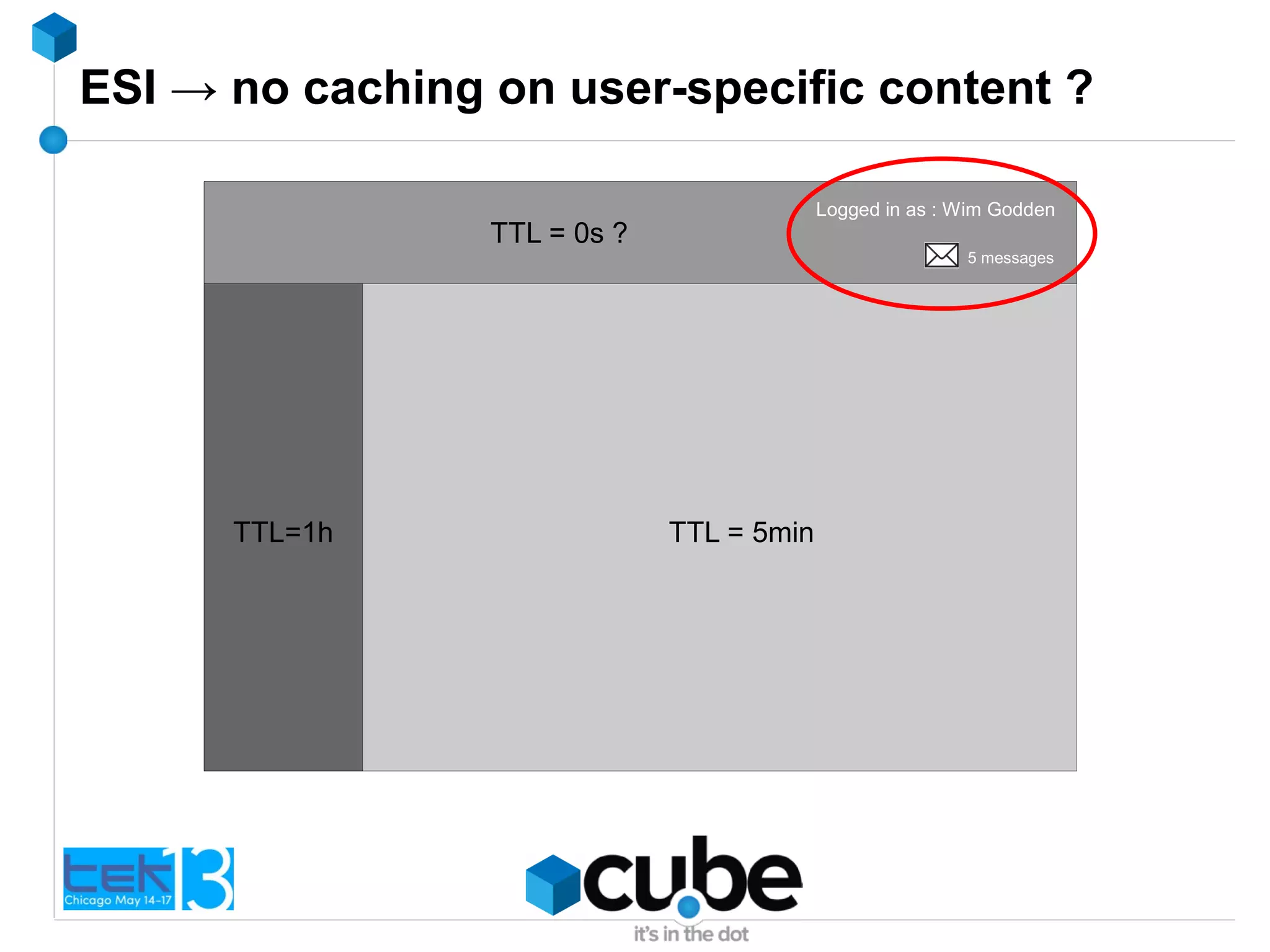

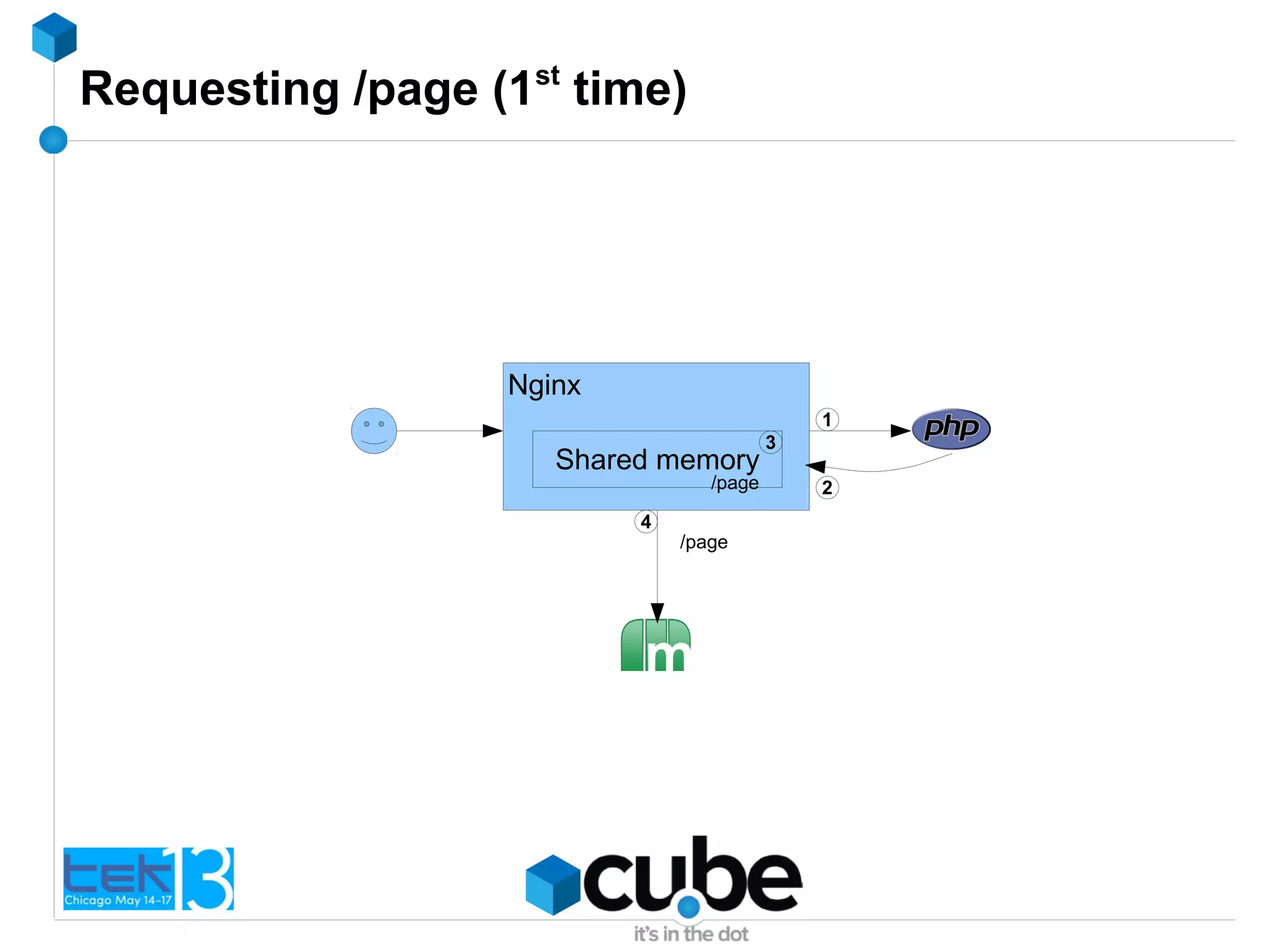

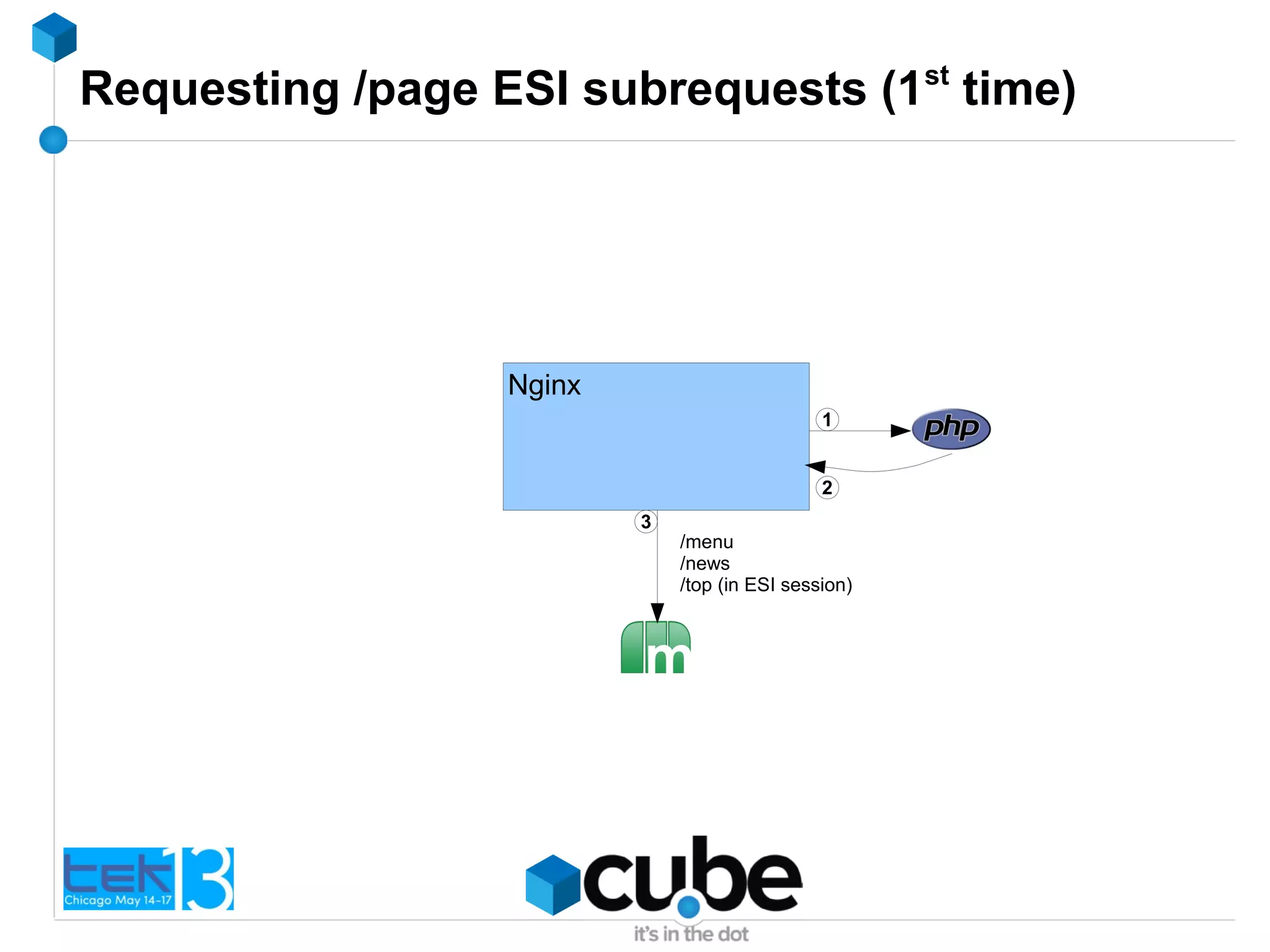

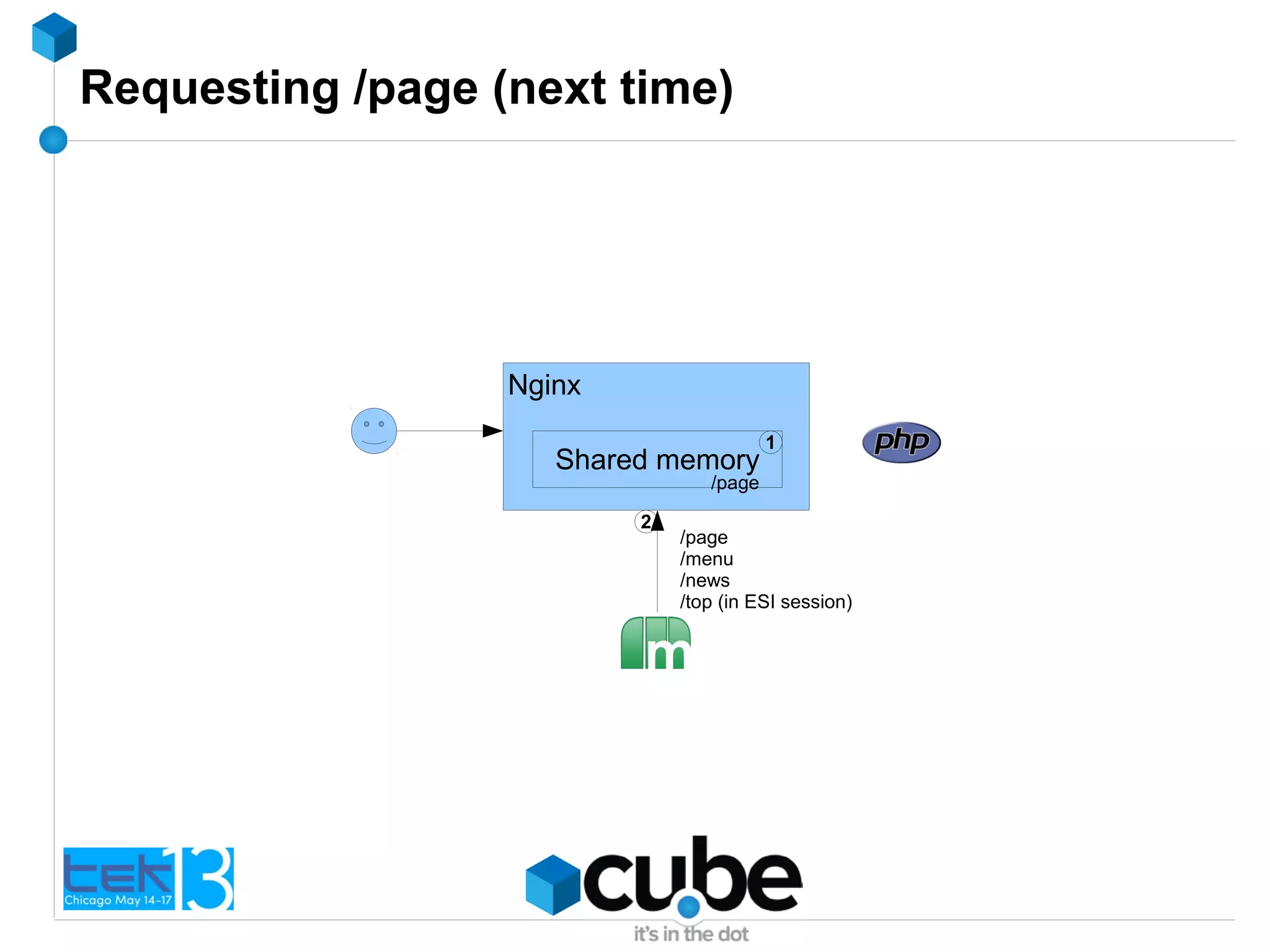

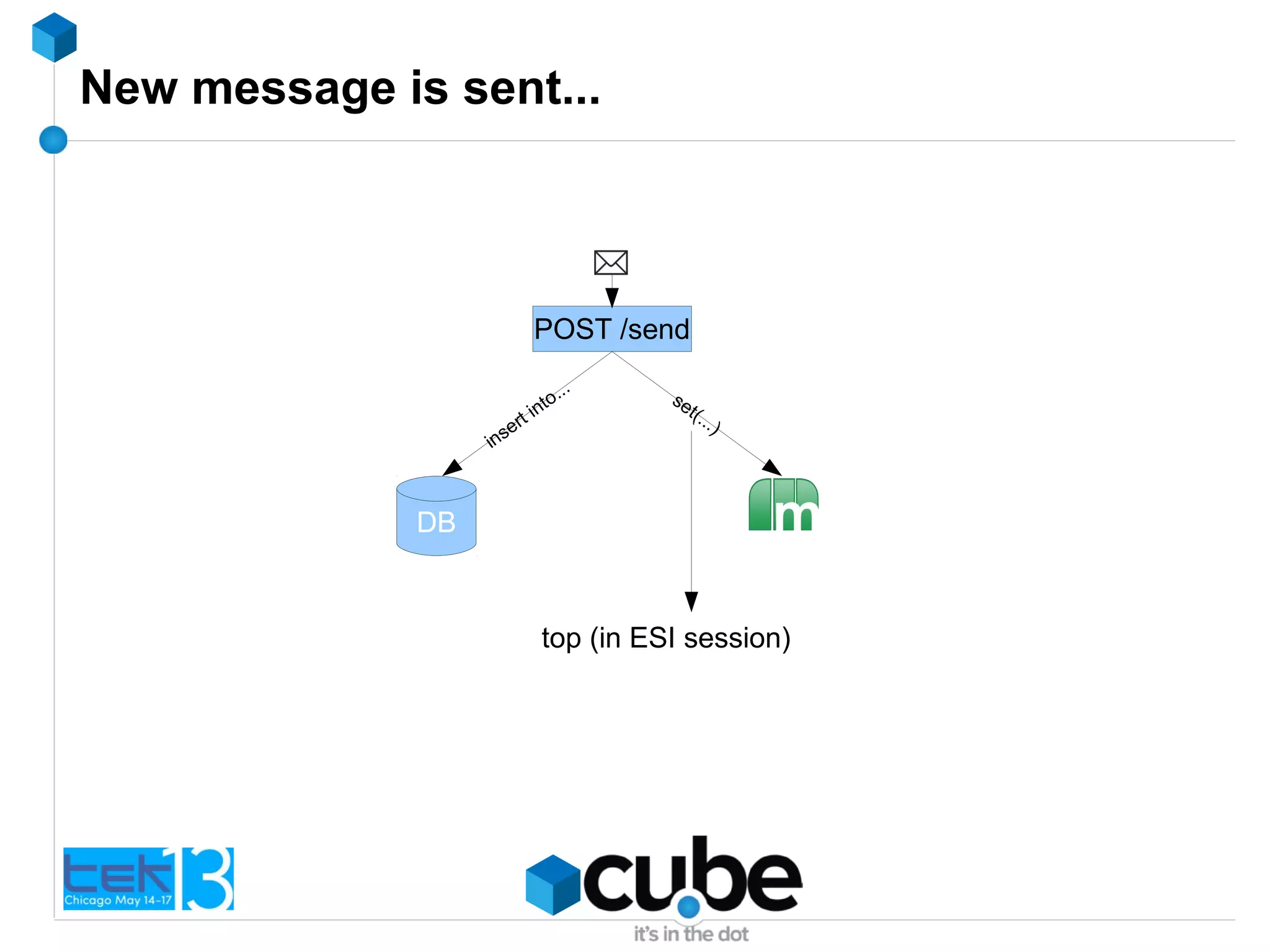

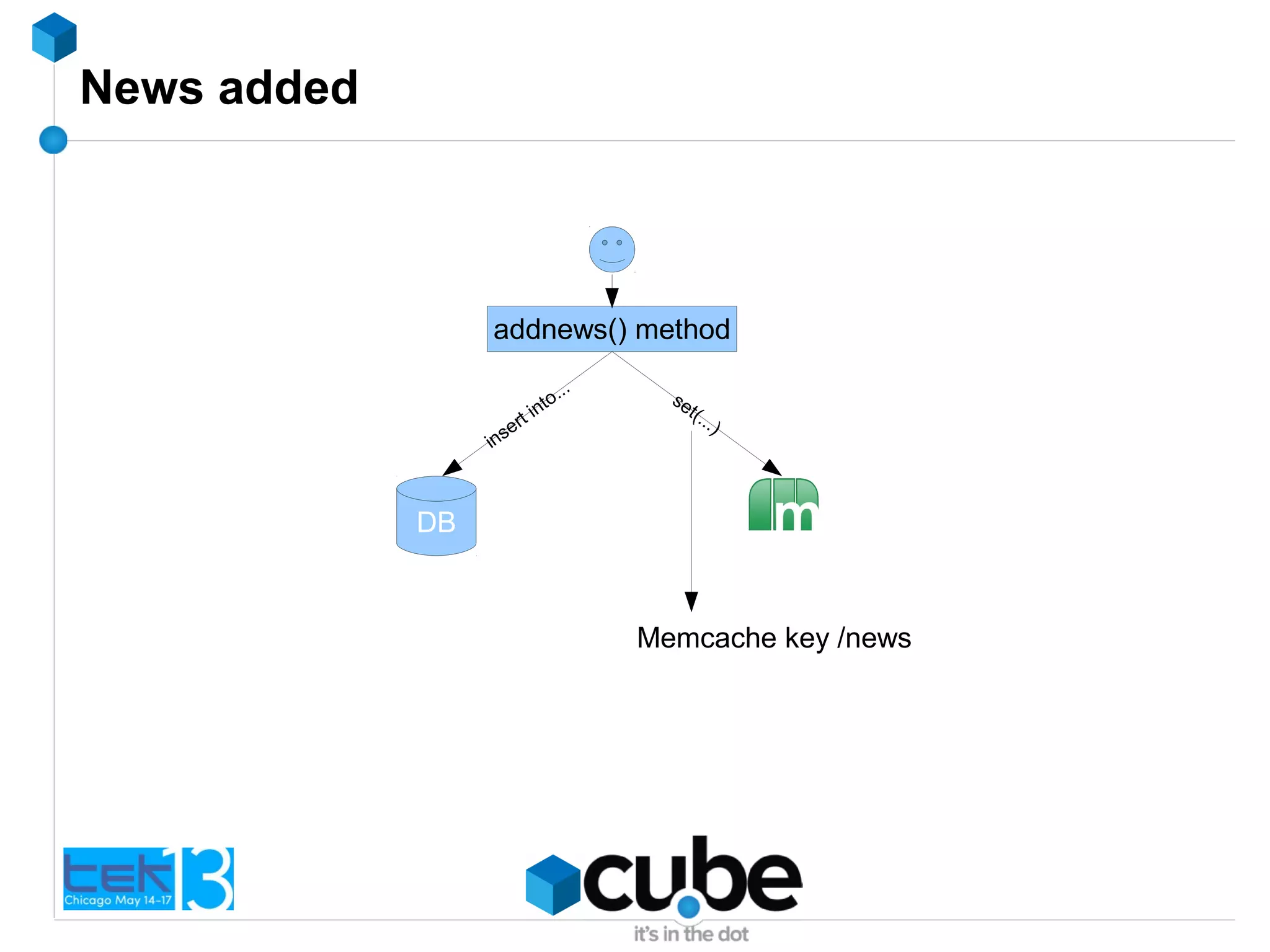

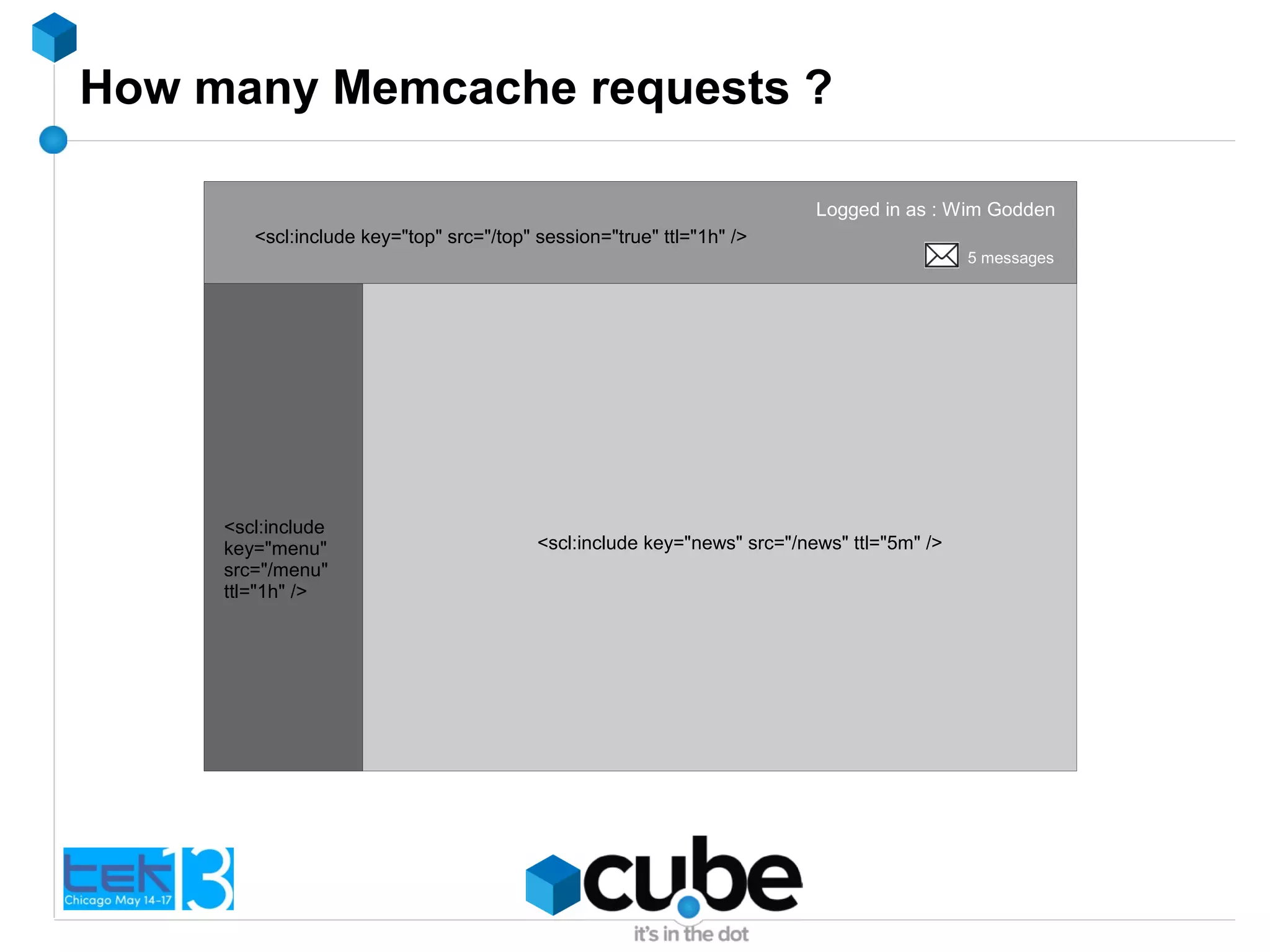

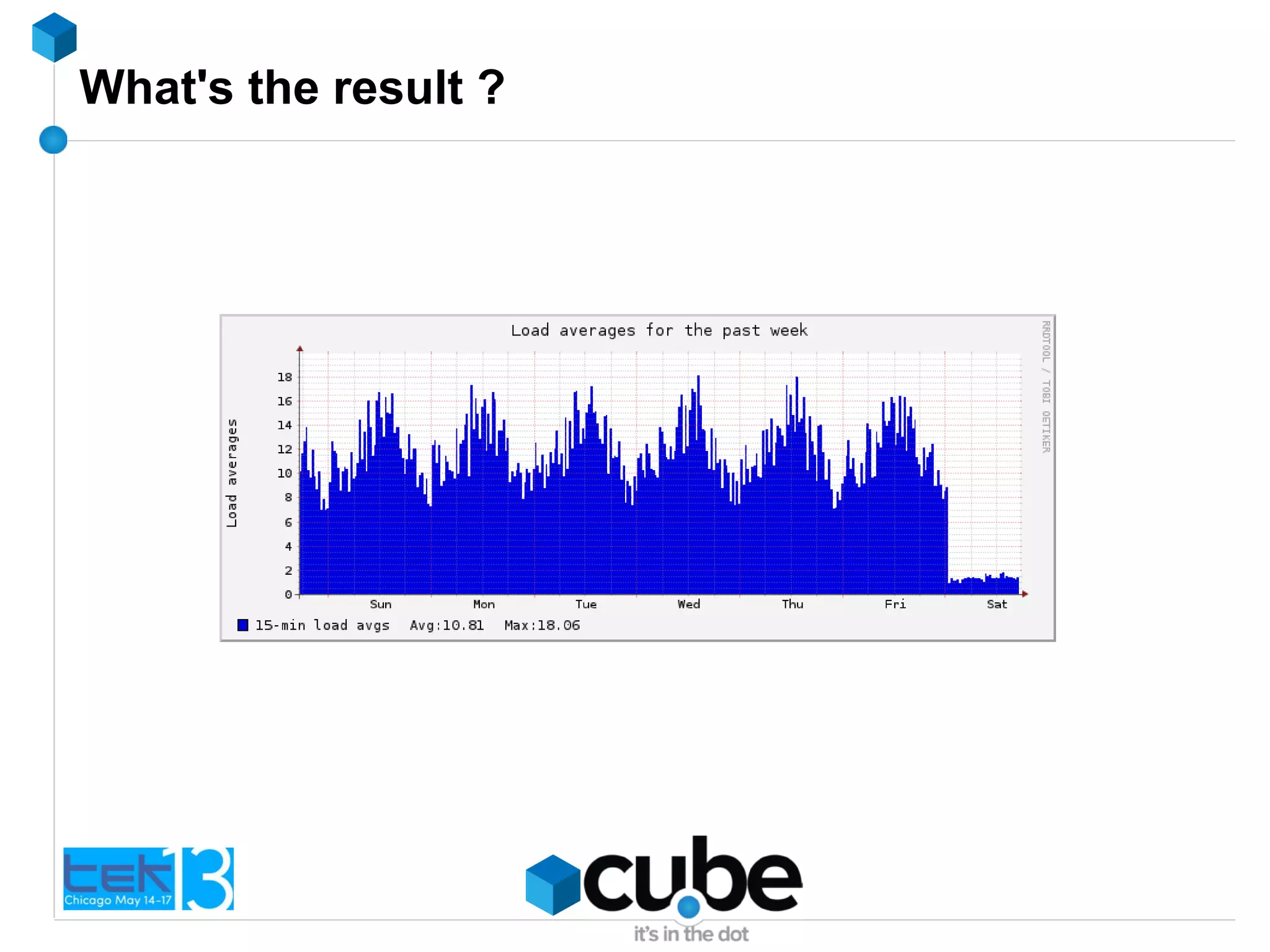

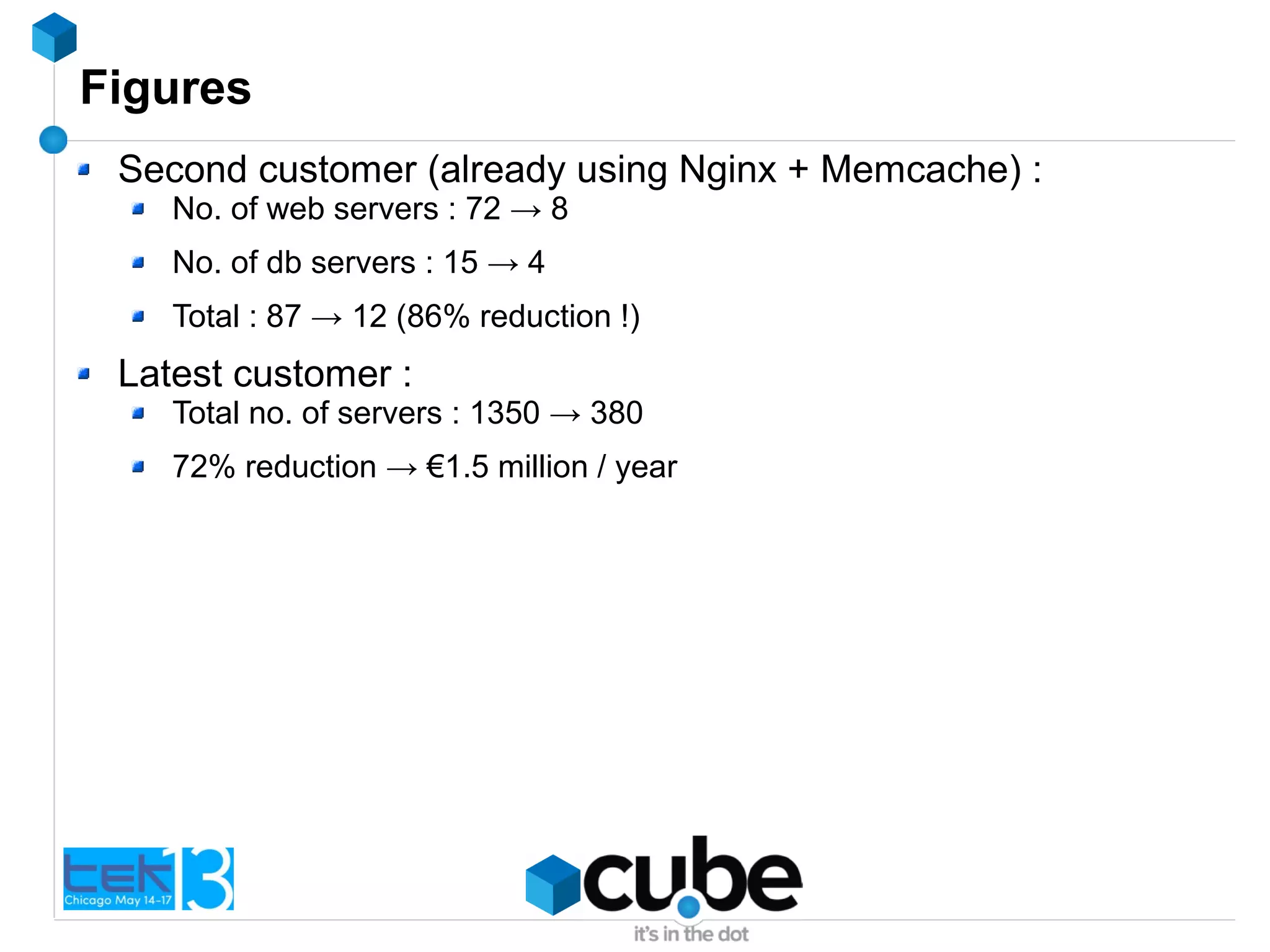

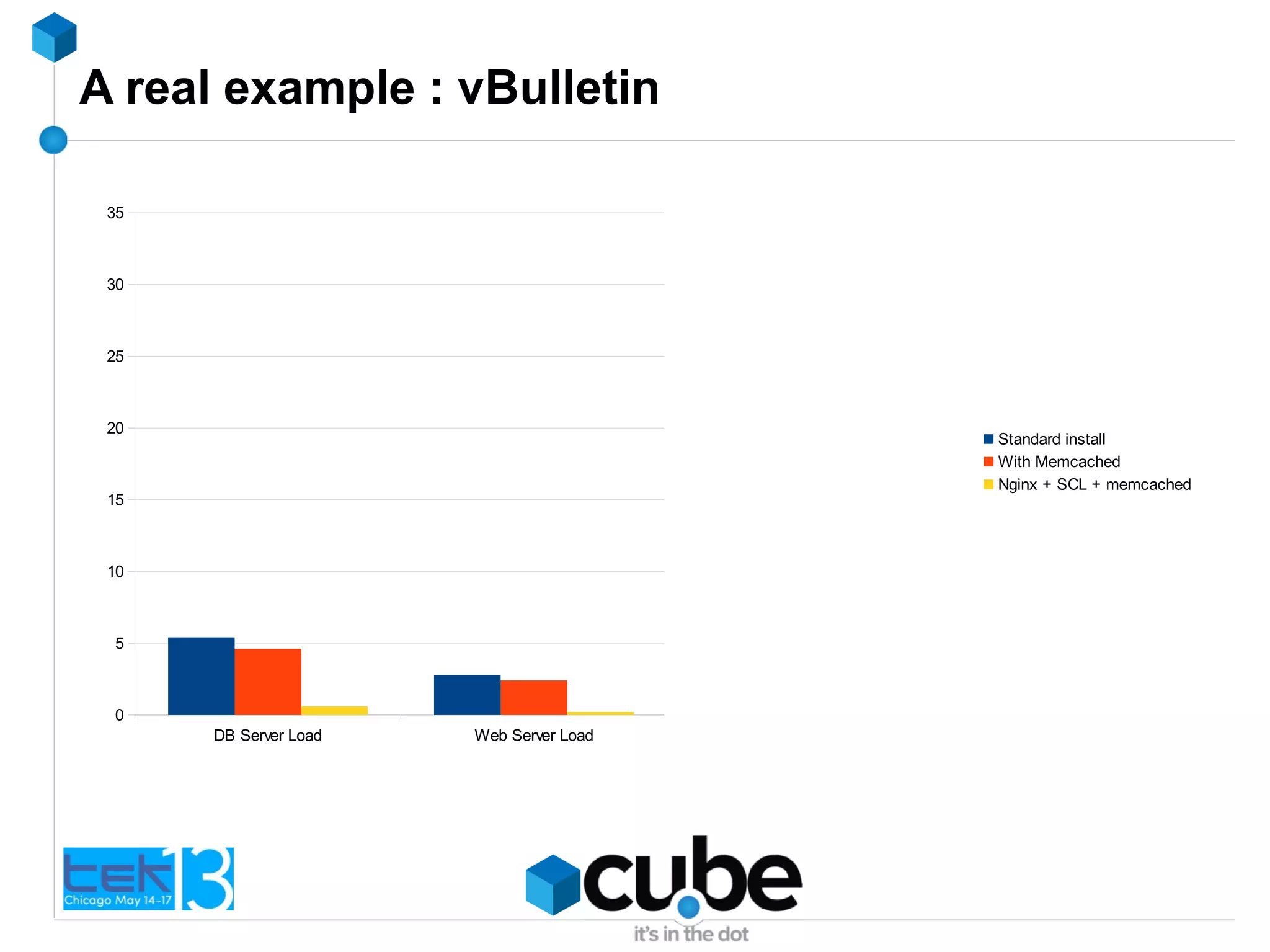

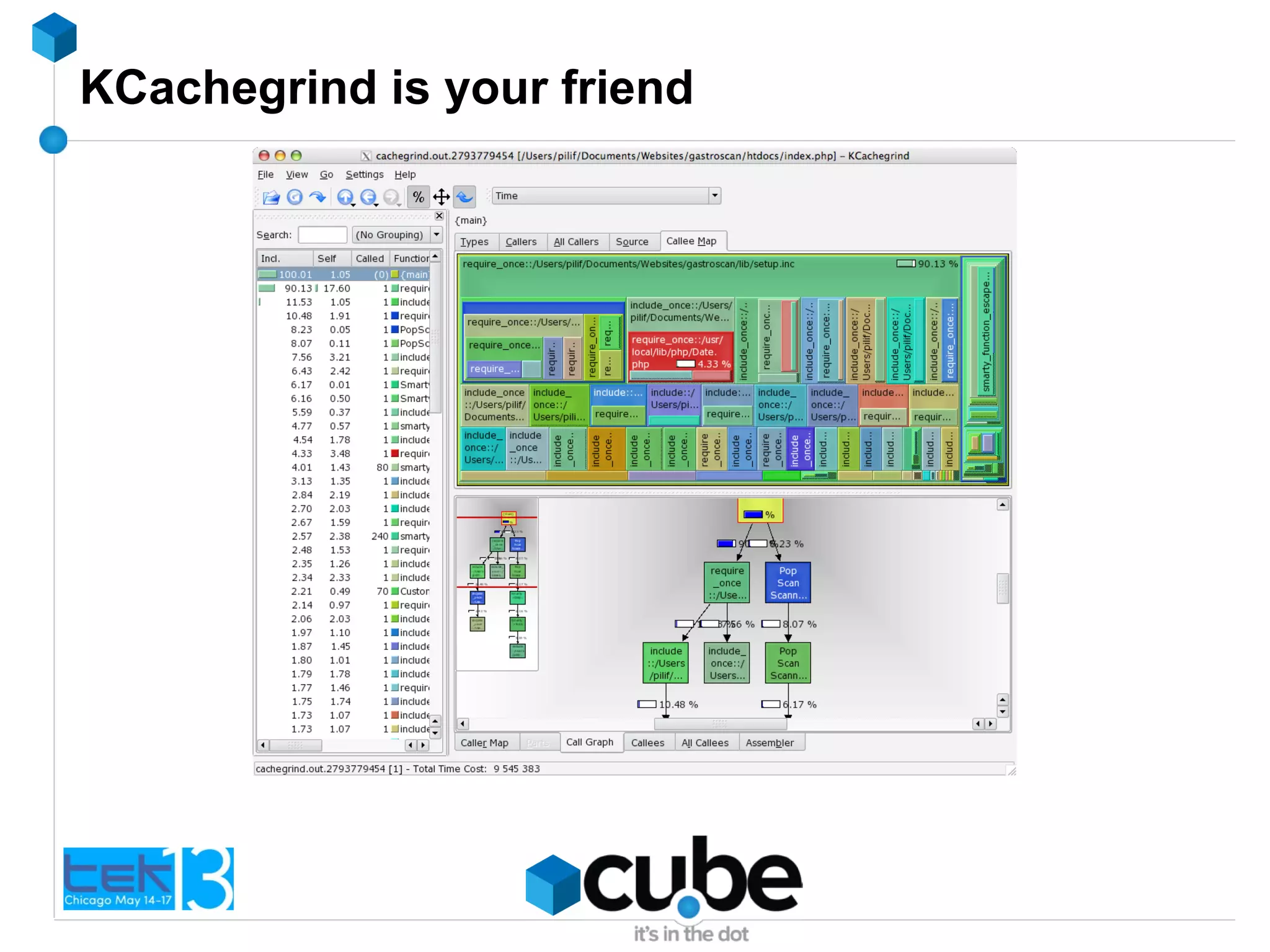

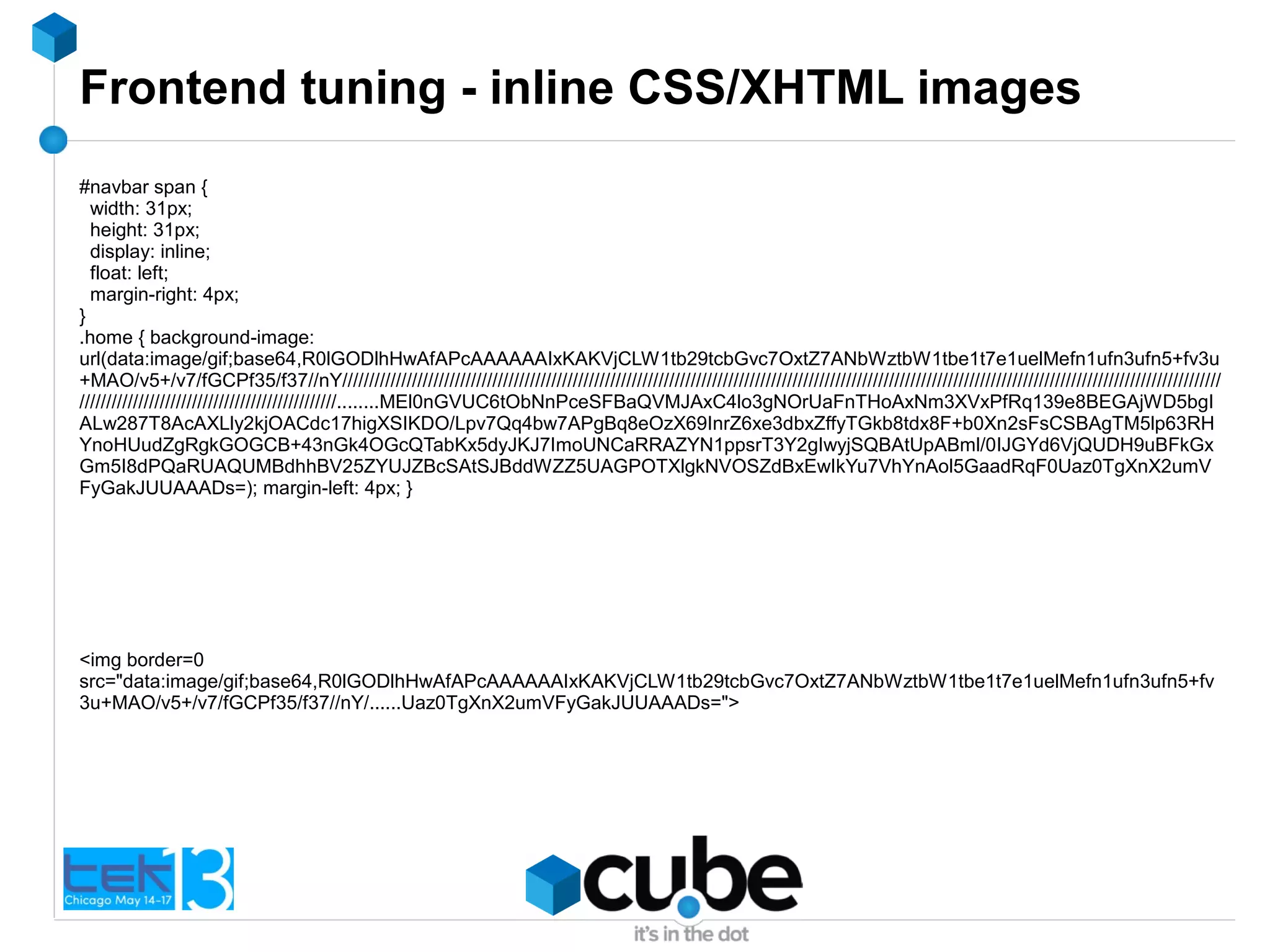

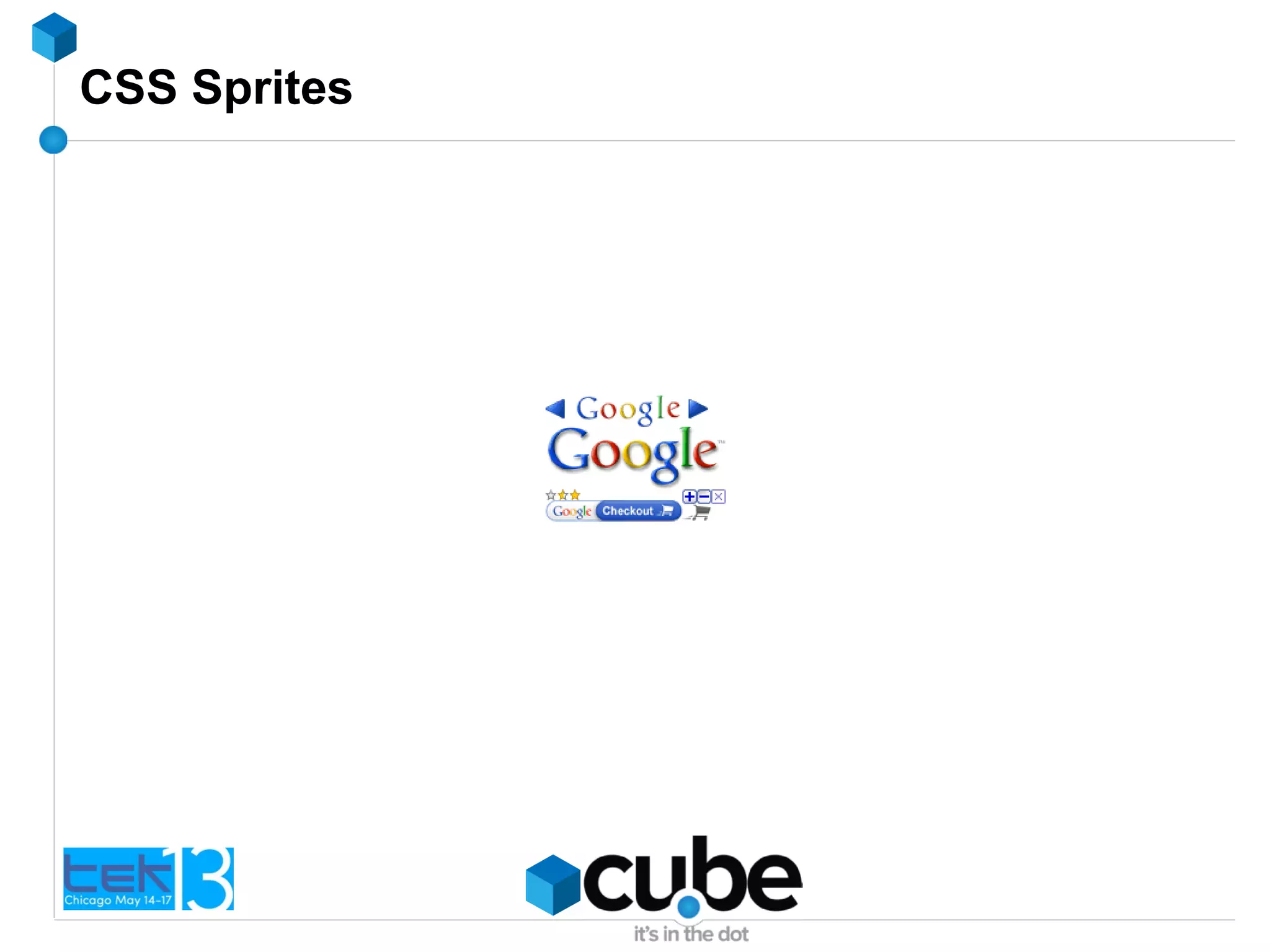

This document discusses caching and tuning techniques for improving the scalability of web applications. It opens with an introduction and background on caching, then covers what can be cached: entire pages, parts of pages, SQL queries, and the results of complex PHP operations. It reviews storage options for cached data, with notes on each: the MySQL query cache, memory tables, opcode caching with APC, disk, memory disk, and Memcache. The document provides code examples for using Memcache and discusses caching strategies such as updating cached data, cache stampedes, and cache warming scripts. It also covers performance benchmarks and moving to Nginx with PHP-FPM. The overall goal of the techniques discussed is to increase reliability, performance and scalability of a
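
The strategies named in the summary (cache-aside reads, stampede protection, and cache warming) can be sketched as follows. This is a minimal illustration, not the code from the slides: it is written in Python rather than PHP, and `TTLCache`, `get_or_compute`, and `warm` are hypothetical names. The in-process `TTLCache` only stands in for a Memcache-style store; the one behaviour it borrows from memcached is an atomic `add` that fails when the key already exists, which is used here as a short-lived lock.

```python
import threading
import time


class TTLCache:
    """In-process stand-in for a Memcache-style store (hypothetical helper)."""

    def __init__(self):
        self._data = {}
        self._lock = threading.Lock()

    def get(self, key):
        with self._lock:
            entry = self._data.get(key)
            if entry is None:
                return None
            value, expires_at = entry
            if time.monotonic() >= expires_at:
                del self._data[key]  # expired entries count as misses
                return None
            return value

    def set(self, key, value, ttl):
        with self._lock:
            self._data[key] = (value, time.monotonic() + ttl)

    def add(self, key, value, ttl):
        """Store only if the key is absent or expired; return True on success."""
        with self._lock:
            entry = self._data.get(key)
            if entry is not None and time.monotonic() < entry[1]:
                return False
            self._data[key] = (value, time.monotonic() + ttl)
            return True

    def delete(self, key):
        with self._lock:
            self._data.pop(key, None)


def get_or_compute(cache, key, compute, ttl=60.0, lock_ttl=5.0, wait=0.01):
    """Cache-aside read in which only one caller recomputes a missing entry."""
    while True:
        value = cache.get(key)
        if value is not None:
            return value
        # add() is atomic, so one caller at a time wins the right to recompute.
        if cache.add("lock:" + key, 1, lock_ttl):
            try:
                # Re-check: another caller may have filled the entry meanwhile.
                value = cache.get(key)
                if value is None:
                    value = compute()
                    cache.set(key, value, ttl)
                return value
            finally:
                cache.delete("lock:" + key)
        time.sleep(wait)  # someone else is recomputing; retry shortly


def warm(cache, loaders, ttl=60.0):
    """Cache warming: fill known-hot keys before traffic arrives."""
    for key, loader in loaders.items():
        cache.set(key, loader(), ttl)
```

A caller would wrap an expensive query or page render as `get_or_compute(cache, "home", render_home)`, where `render_home` is a placeholder for the slow operation. Two simplifications are worth noting: a computed value of `None` is indistinguishable from a miss and so is never cached, and the lock's TTL (`lock_ttl`) must exceed the time the computation takes, otherwise a second caller may start recomputing in parallel.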