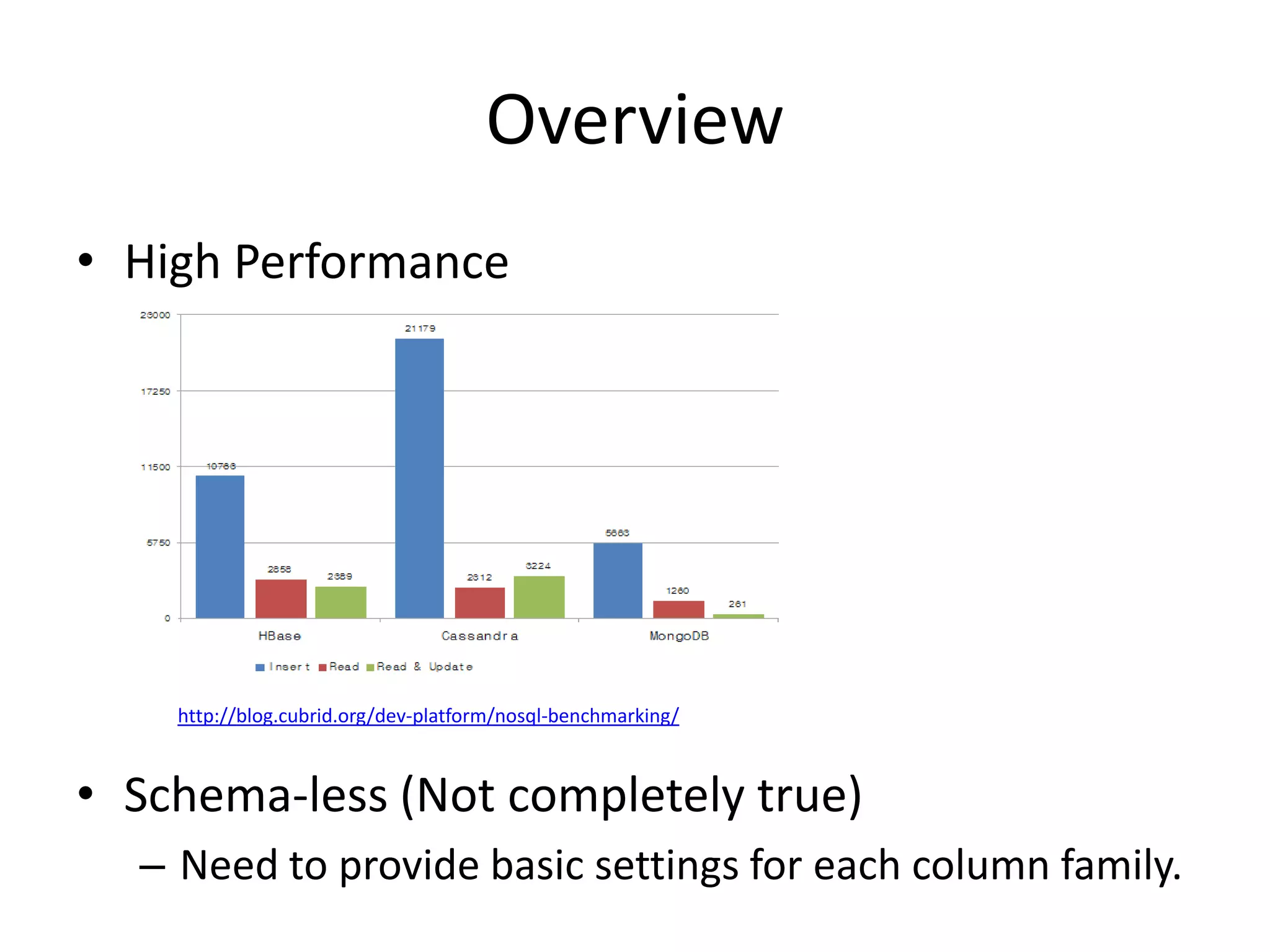

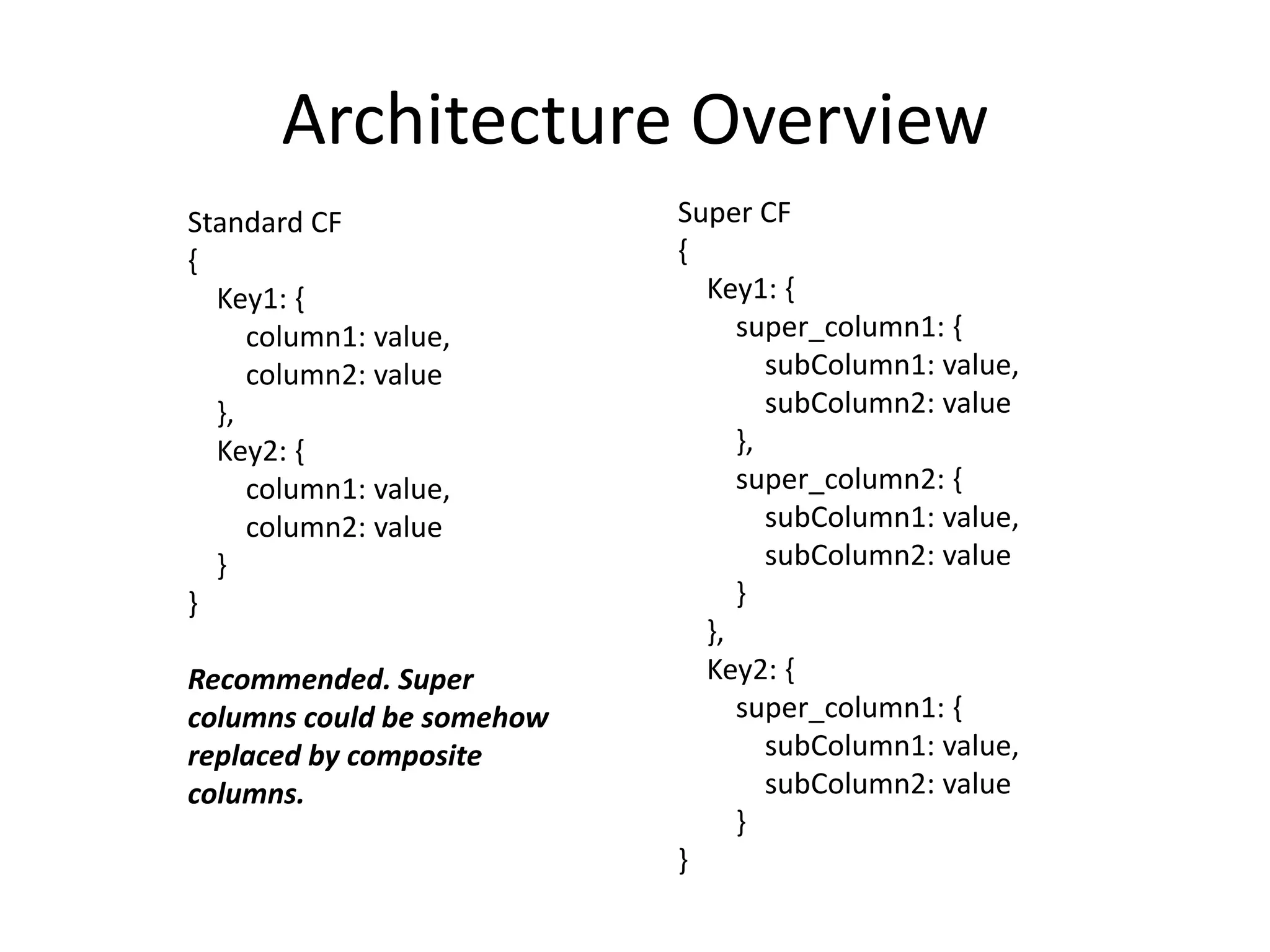

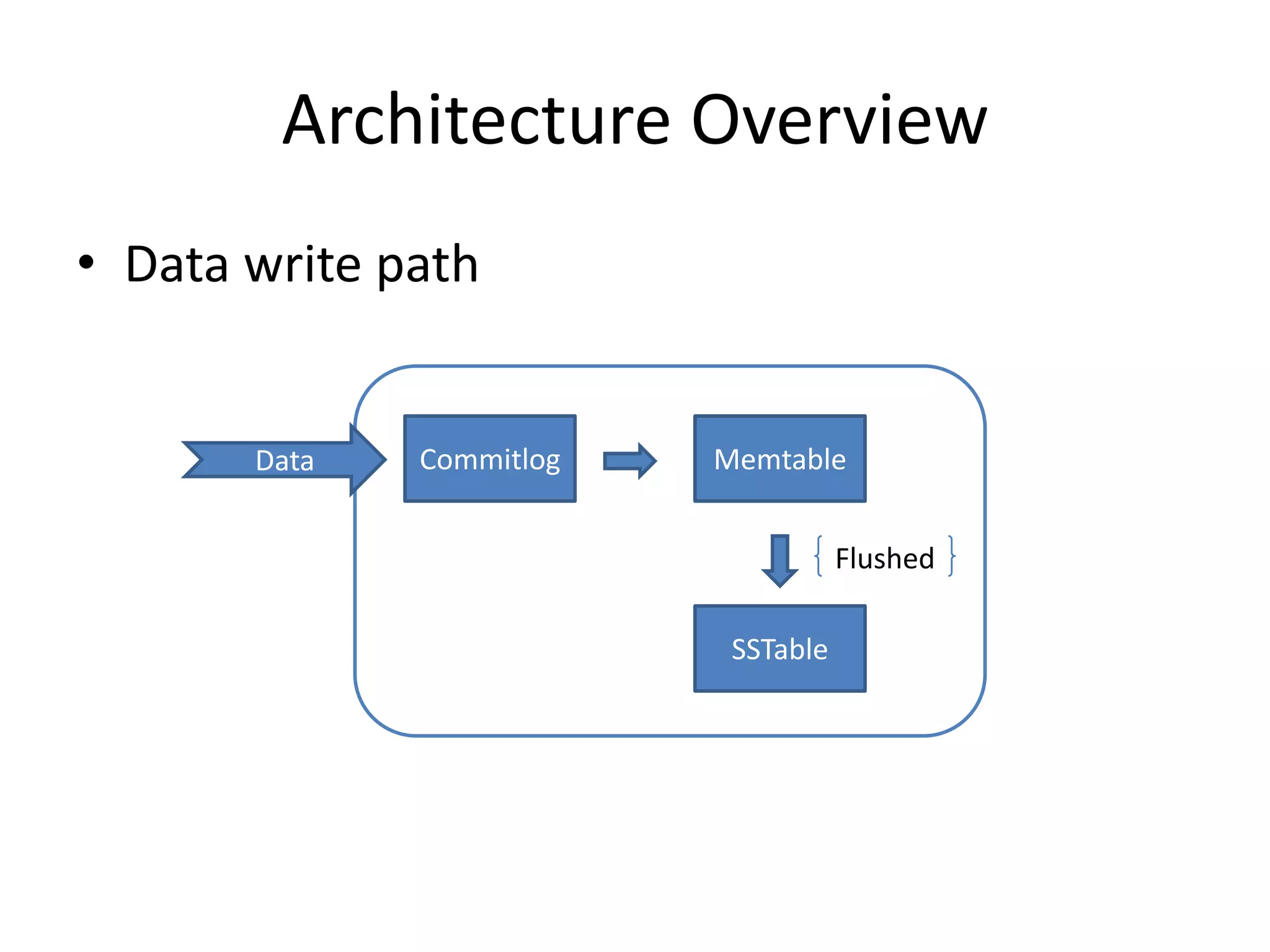

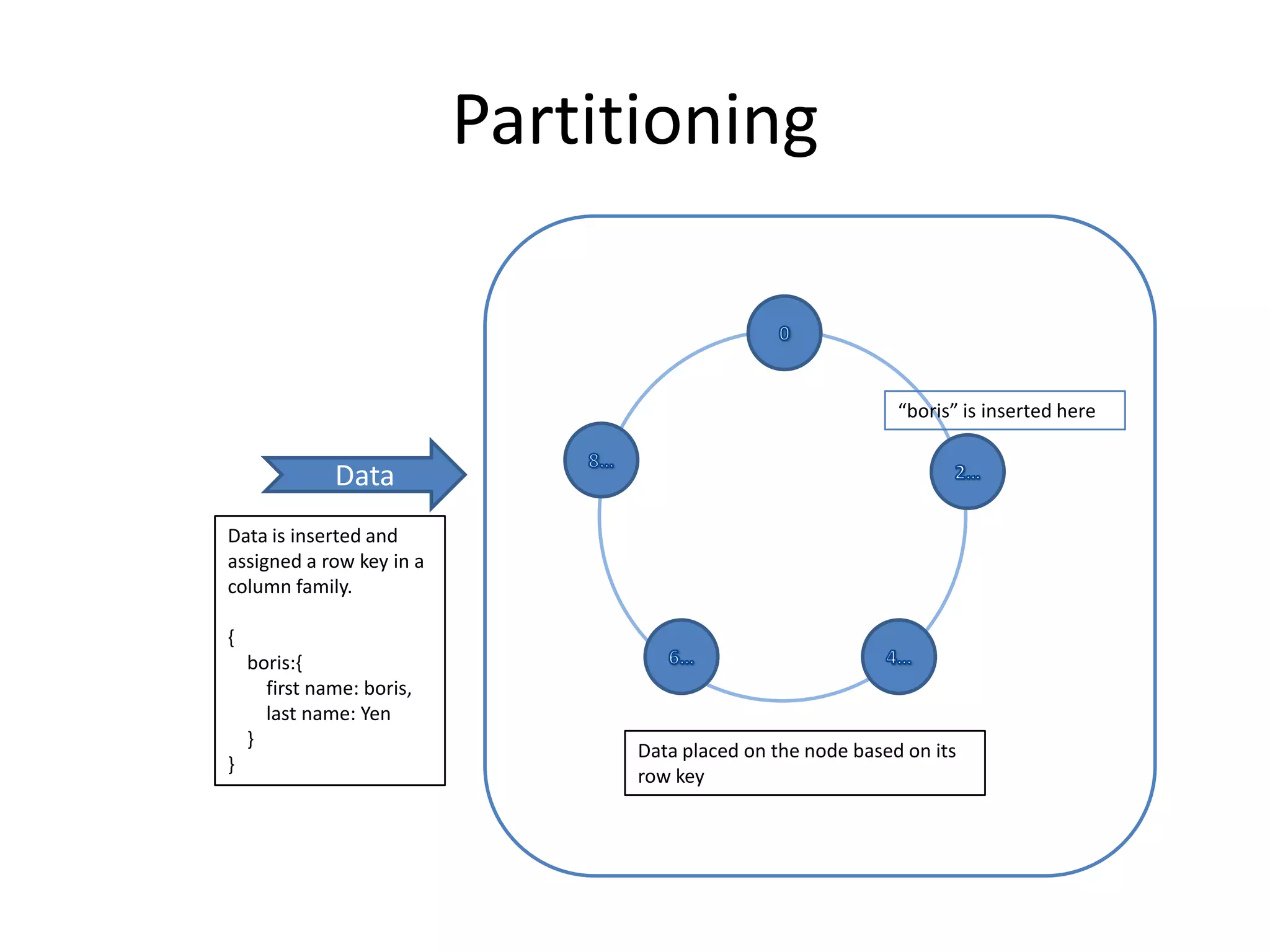

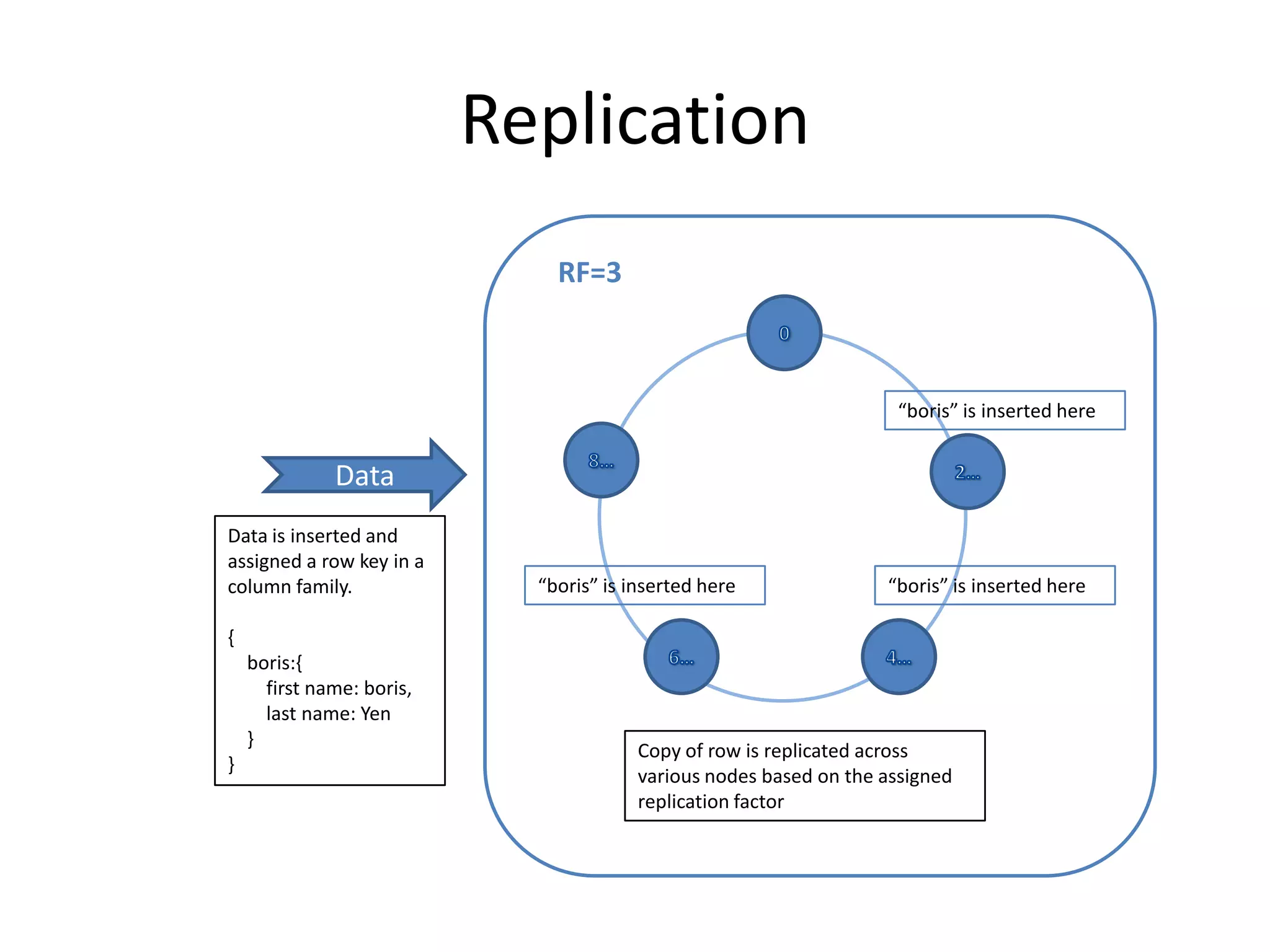

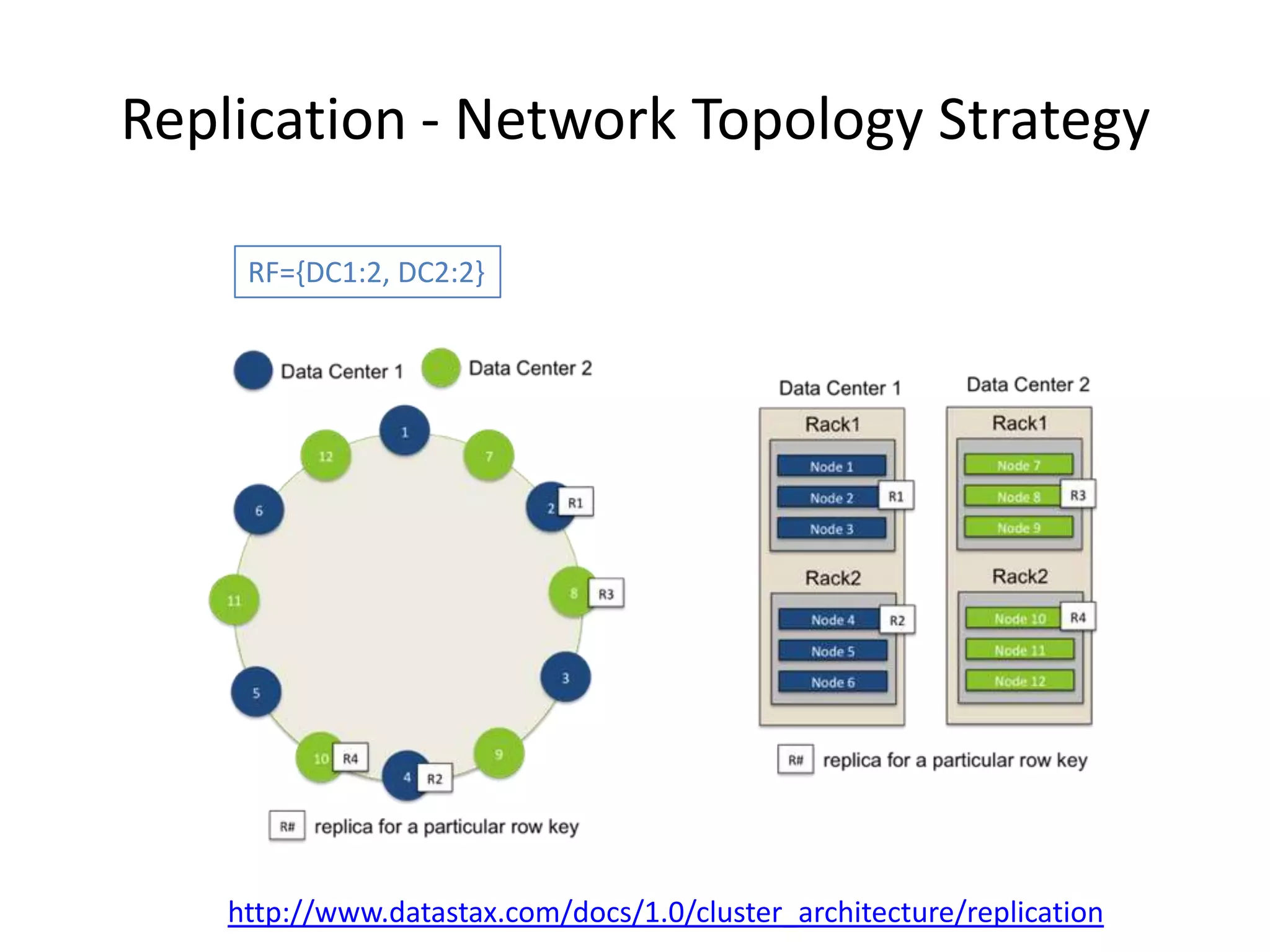

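Cassandra is a highly scalable, distributed, fault-tolerant NoSQL database. It partitions data across nodes by consistent hashing of each row's partition key (called the row key in older Cassandra terminology), and it stores copies of every partition on multiple nodes, with the number of copies set by the keyspace's replication factor. Reads and writes have tunable consistency levels: each request specifies how many replicas must respond before it succeeds, trading latency and availability against consistency. Nodes use a gossip protocol to discover one another and exchange cluster state, and every write is appended to a commit log before it is applied to the in-memory memtable, so that writes can be recovered by replaying the log if a node crashes.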
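To make partitioning and replication concrete, here is a minimal Python sketch of a token ring. It is a simplification under stated assumptions: each node owns a single token (real clusters usually use virtual nodes), MD5 stands in for the Murmur3 hash used by Cassandra's default `Murmur3Partitioner`, and replicas are placed by walking the ring clockwise, roughly as `SimpleStrategy` does. `TokenRing`, `token_for`, and `replicas` are hypothetical names, not Cassandra APIs.

```python
import bisect
import hashlib


def token_for(partition_key: str) -> int:
    """Map a partition key to an unsigned 64-bit token.

    Stand-in hash (assumption): Cassandra's default partitioner uses Murmur3;
    here we take the first 8 bytes of MD5 so the sketch needs only the stdlib.
    """
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


class TokenRing:
    """Hypothetical ring where each node owns exactly one token (no vnodes)."""

    def __init__(self, node_tokens: dict[str, int]):
        # Sort (token, node) pairs so the ring can be binary-searched.
        self.ring = sorted((tok, node) for node, tok in node_tokens.items())
        self.tokens = [tok for tok, _ in self.ring]

    def replicas(self, partition_key: str, replication_factor: int) -> list[str]:
        """Return the nodes holding this key, SimpleStrategy-style.

        The first replica is the first node whose token is >= the key's token
        (wrapping to the start of the ring); the rest are the next nodes clockwise.
        """
        if replication_factor > len(self.ring):
            raise ValueError("replication factor exceeds node count")
        start = bisect.bisect_left(self.tokens, token_for(partition_key))
        return [self.ring[(start + i) % len(self.ring)][1]
                for i in range(replication_factor)]


ring = TokenRing({"a": 0, "b": 2**62, "c": 2**63, "d": 3 * 2**62})
print(ring.replicas("user:42", 3))
```

Because each node owns one contiguous token range, adding a node only moves the keys in the range it takes over, which is the main reason consistent hashing is preferred over `hash(key) % node_count`.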
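Tunable consistency comes down to simple arithmetic. The sketch below assumes a single datacenter and covers only the levels `ONE`, `TWO`, `THREE`, `QUORUM`, and `ALL` (Cassandra has others, such as `ANY` and `LOCAL_QUORUM`). `QUORUM` waits for floor(RF/2) + 1 replicas, and when the replicas a read waits for (R) plus those a write waits for (W) exceed the replication factor, every read overlaps at least one replica that acknowledged the completed write. The function names are hypothetical.

```python
def replicas_required(level: str, replication_factor: int) -> int:
    """Replica acknowledgements a single-datacenter request waits for (sketch)."""
    counts = {
        "ONE": 1,
        "TWO": 2,
        "THREE": 3,
        "QUORUM": replication_factor // 2 + 1,  # strict majority of replicas
        "ALL": replication_factor,
    }
    n = counts[level]
    if n > replication_factor:
        raise ValueError(f"{level} needs {n} replicas but RF is {replication_factor}")
    return n


def reads_overlap_writes(write_level: str, read_level: str, rf: int) -> bool:
    """True when read and write replica sets must intersect (R + W > RF)."""
    w = replicas_required(write_level, rf)
    r = replicas_required(read_level, rf)
    return r + w > rf


print(reads_overlap_writes("QUORUM", "QUORUM", 3))
print(reads_overlap_writes("ONE", "ONE", 3))
```

With RF = 3, `QUORUM` writes plus `QUORUM` reads (2 + 2 > 3) always share a replica, while `ONE`/`ONE` (1 + 1) does not: it is faster and tolerates more unavailable replicas, but a read may return stale data.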
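The commit log's role in durability can be illustrated with a toy single-node store. In this sketch (all names hypothetical), a write is appended to a log file and fsync'ed before it is applied to an in-memory dict standing in for the memtable, and a restart rebuilds the memtable by replaying the log. It fsyncs on every write for simplicity; real Cassandra makes sync behavior configurable (the `commitlog_sync` setting in `cassandra.yaml`), flushes memtables to SSTables, and discards log segments whose data has been flushed, none of which is modeled here.

```python
import json
import os
from typing import Optional


class TinyNode:
    """Hypothetical single-node store: an append-only commit log plus a memtable."""

    def __init__(self, log_path: str):
        self.log_path = log_path
        self.memtable: dict[str, str] = {}
        self._replay()  # recover state left by a previous run
        self.log = open(log_path, "a", encoding="utf-8")

    def _replay(self) -> None:
        """Rebuild the memtable by re-applying every logged mutation in order."""
        if not os.path.exists(self.log_path):
            return
        with open(self.log_path, encoding="utf-8") as f:
            for line in f:
                try:
                    entry = json.loads(line)
                except json.JSONDecodeError:
                    break  # torn final record from a crash mid-append; stop here
                self.memtable[entry["key"]] = entry["value"]

    def write(self, key: str, value: str) -> None:
        # 1. Append the mutation to the commit log and force it to disk.
        self.log.write(json.dumps({"key": key, "value": value}) + "\n")
        self.log.flush()
        os.fsync(self.log.fileno())
        # 2. Only after the log is durable, apply the mutation in memory.
        self.memtable[key] = value

    def read(self, key: str) -> Optional[str]:
        return self.memtable.get(key)

    def close(self) -> None:
        self.log.close()
```

Reopening a `TinyNode` on the same path after it is closed (or after the process dies) recovers every fsync'ed write, even though the memtable itself lived only in memory.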