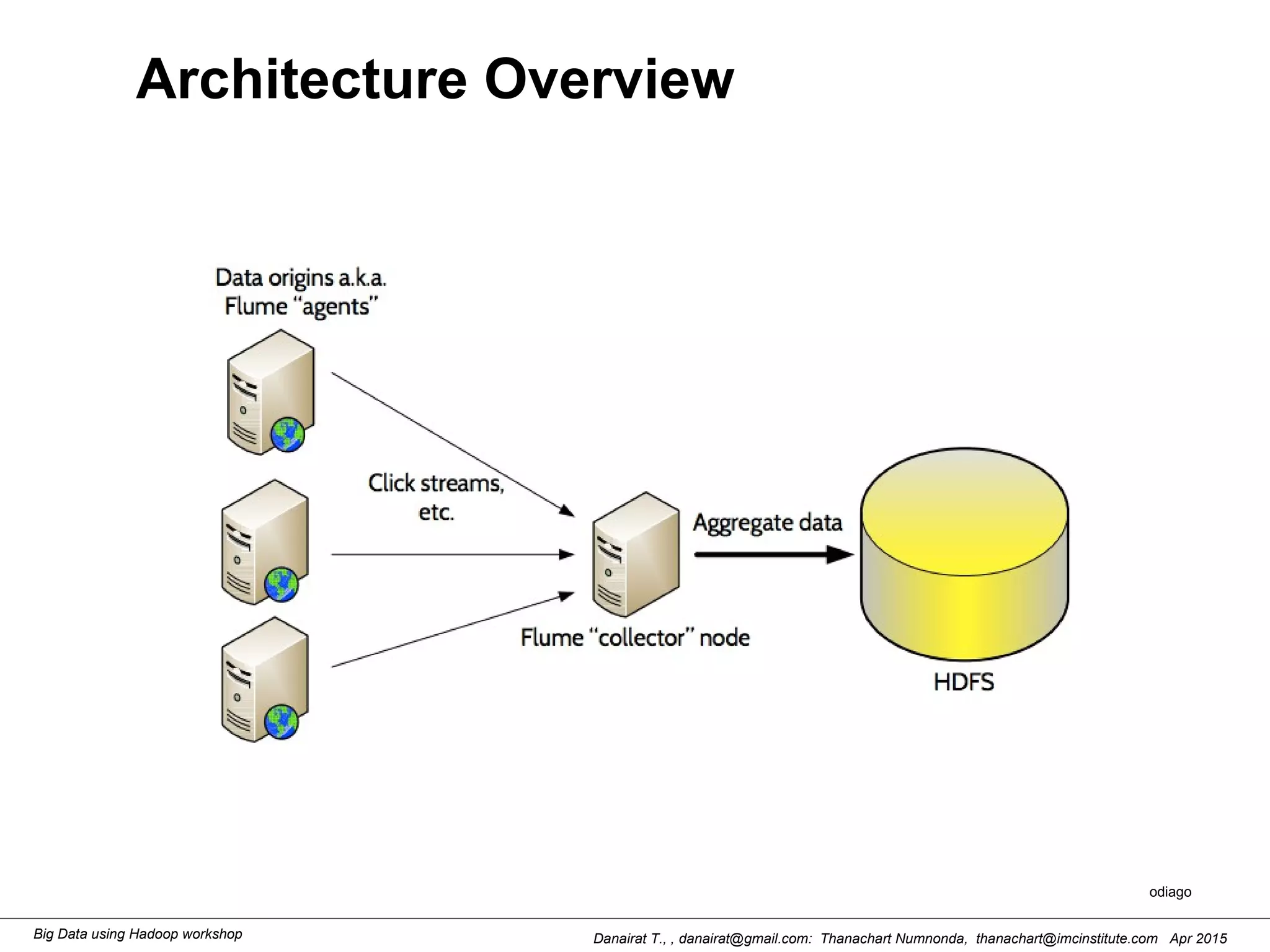

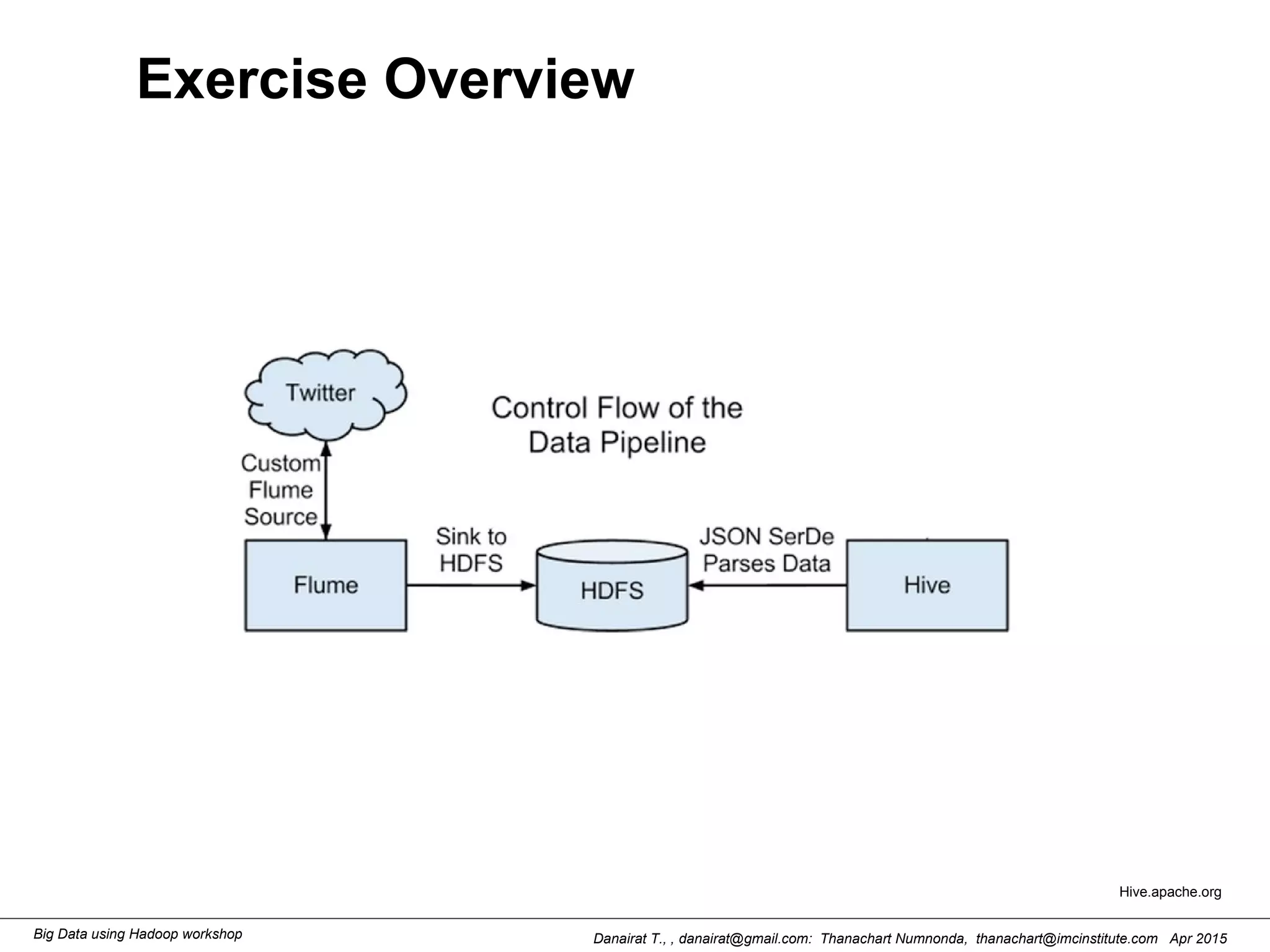

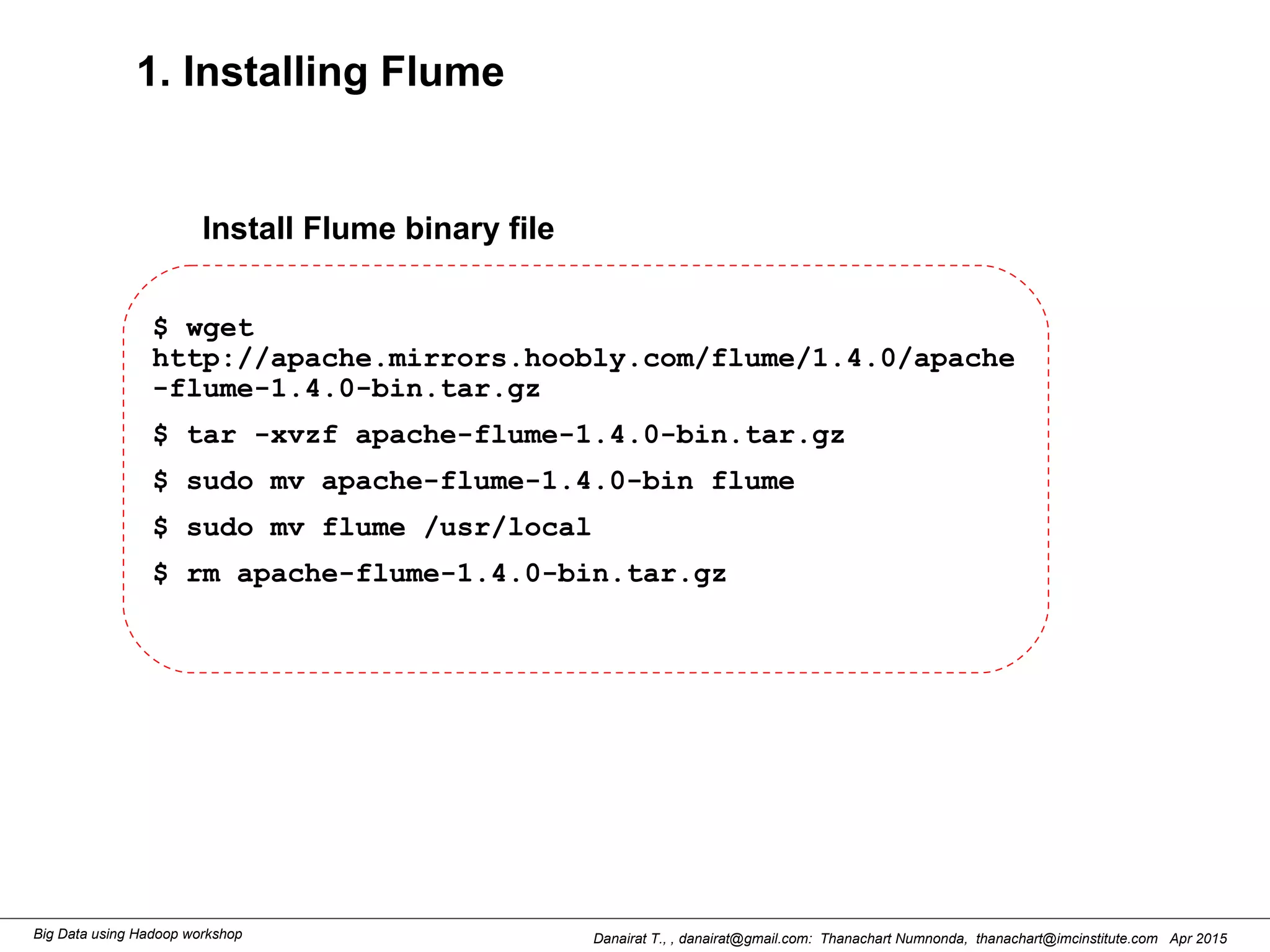

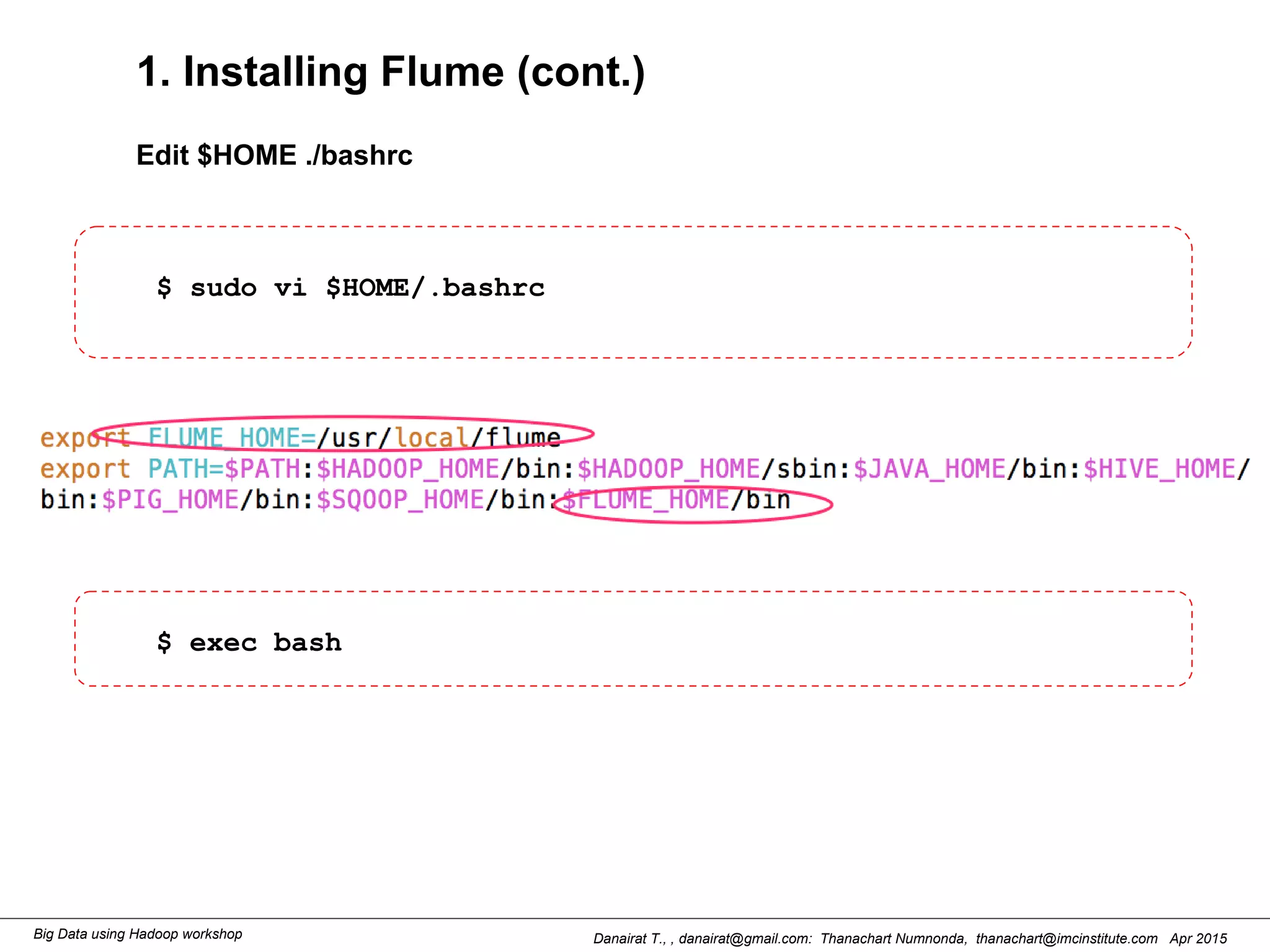

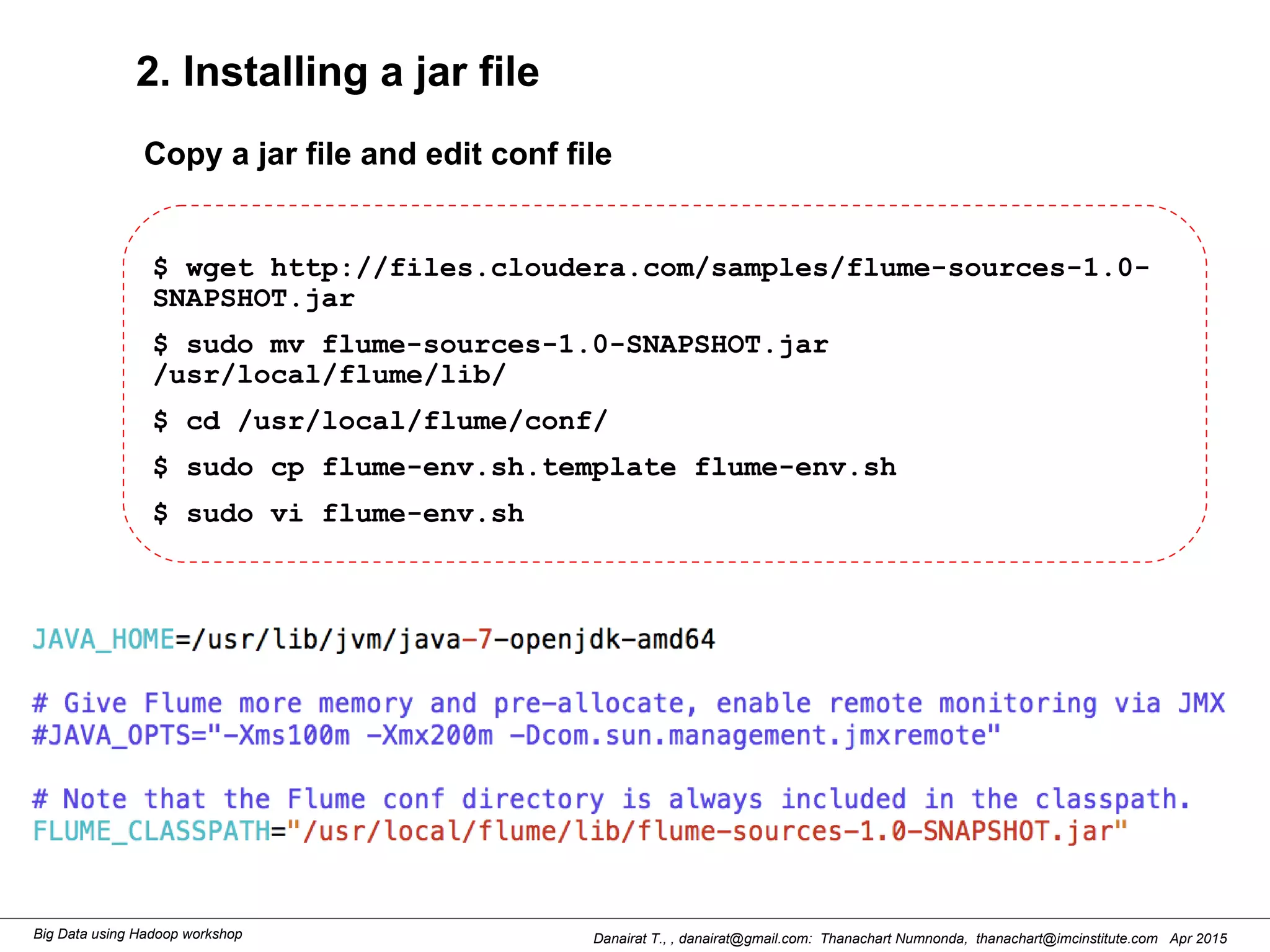

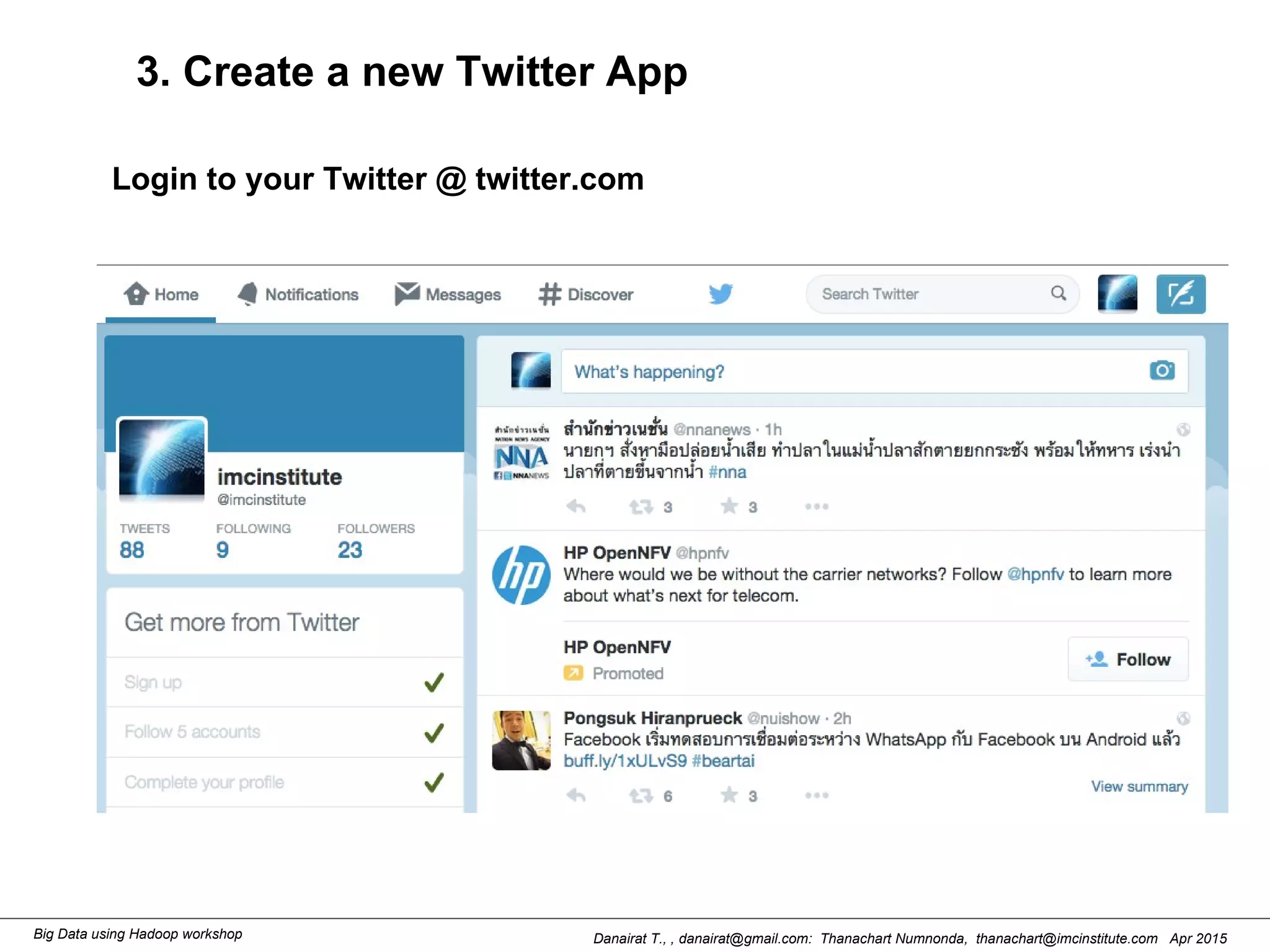

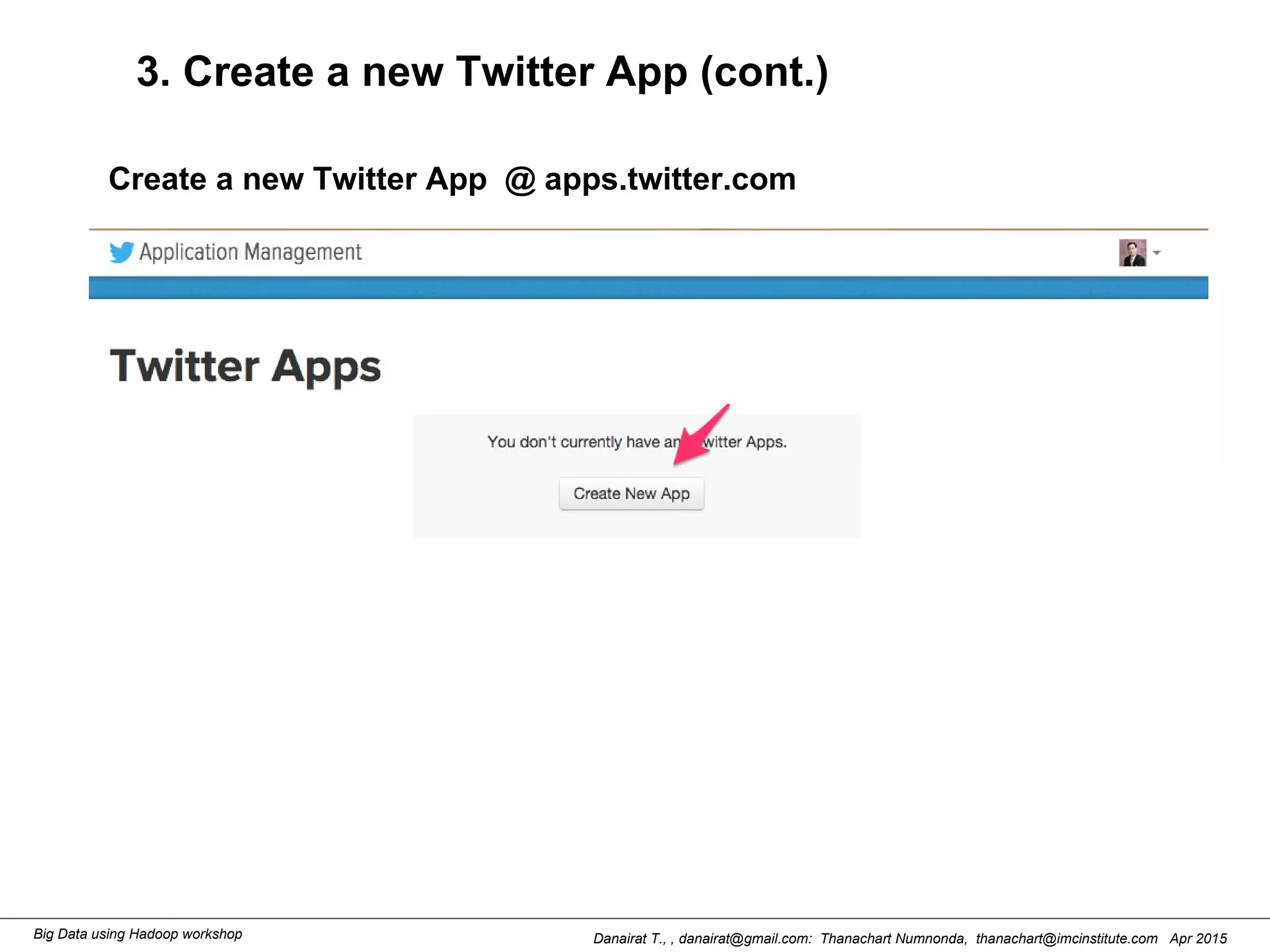

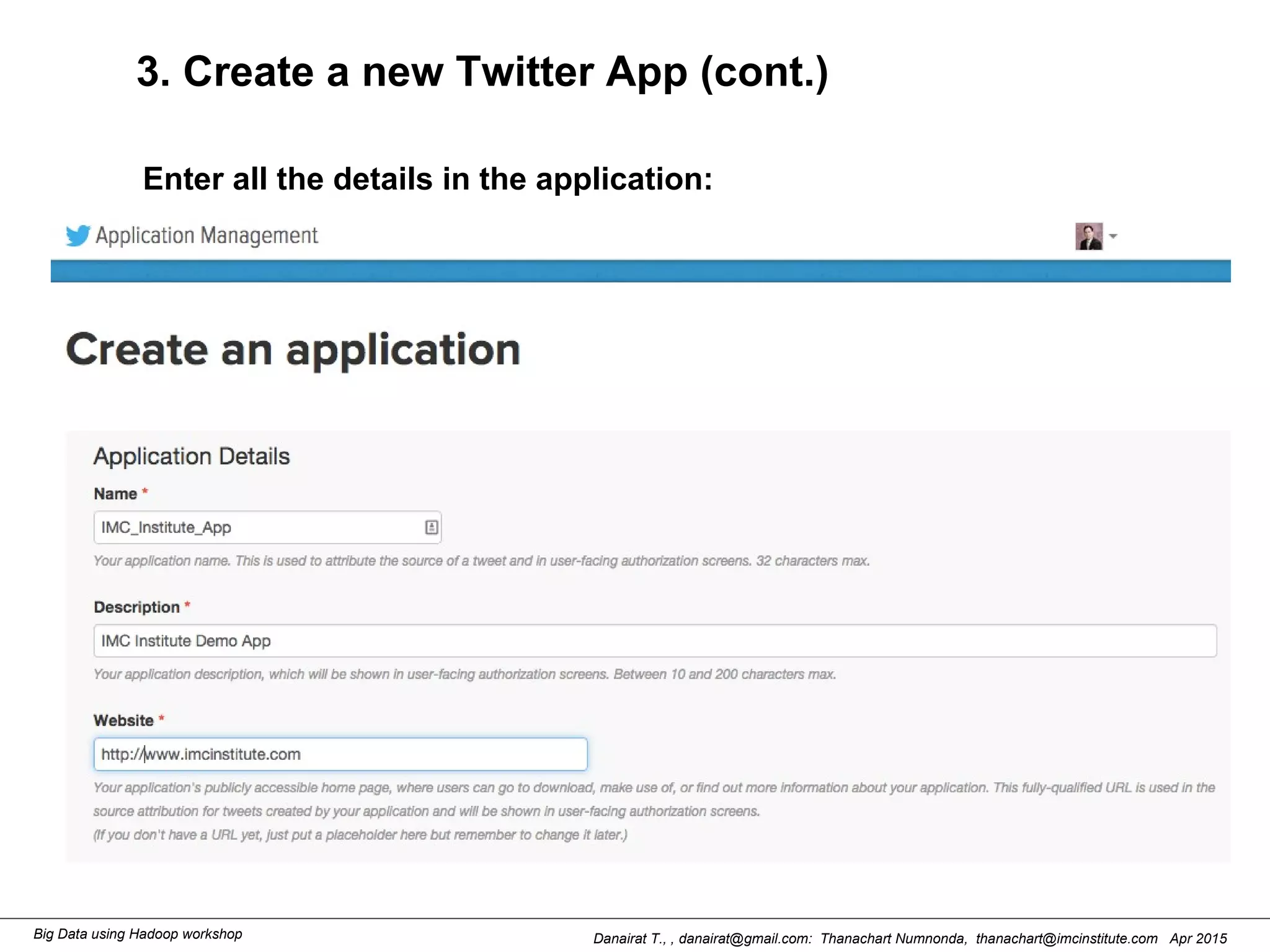

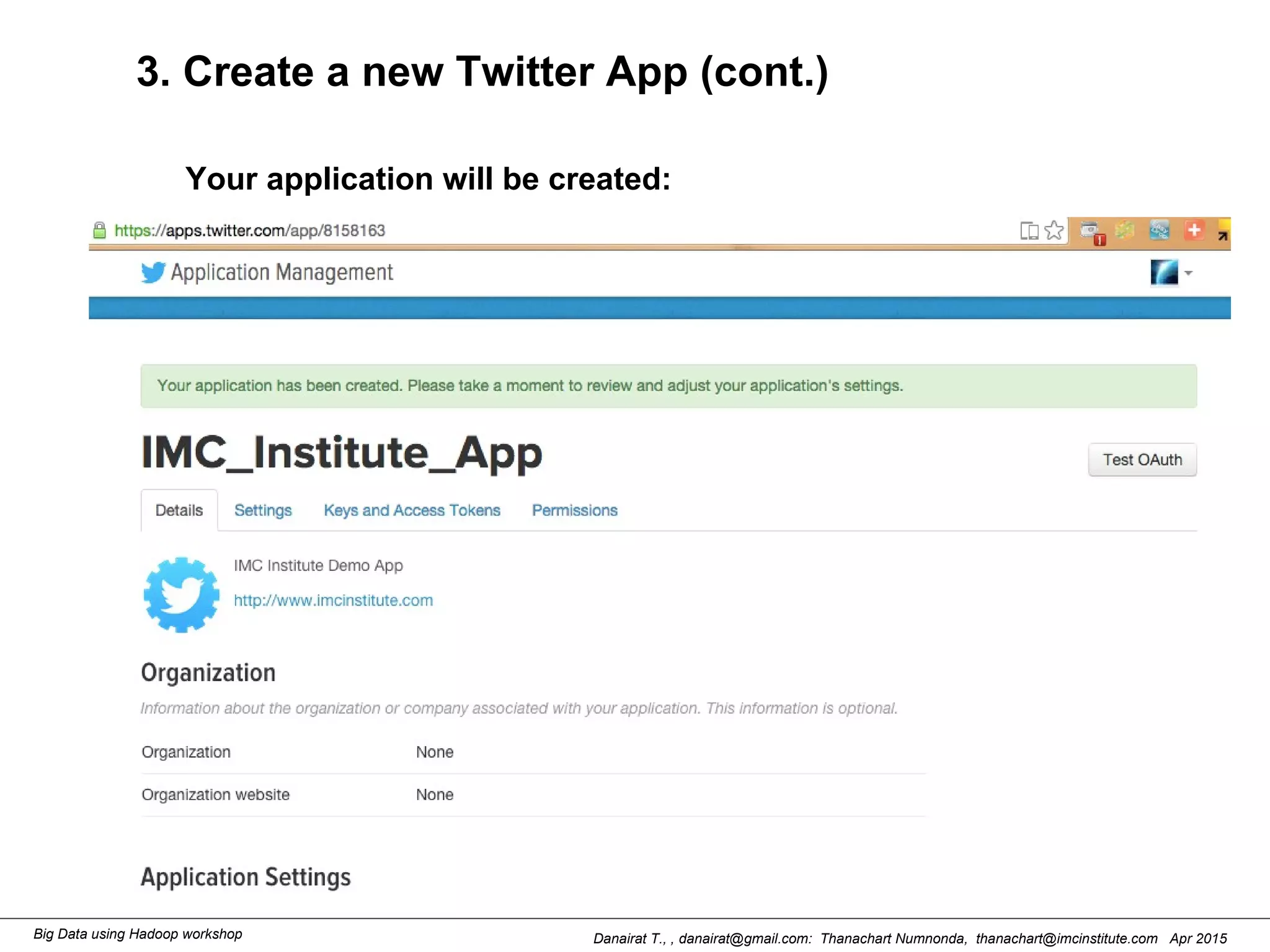

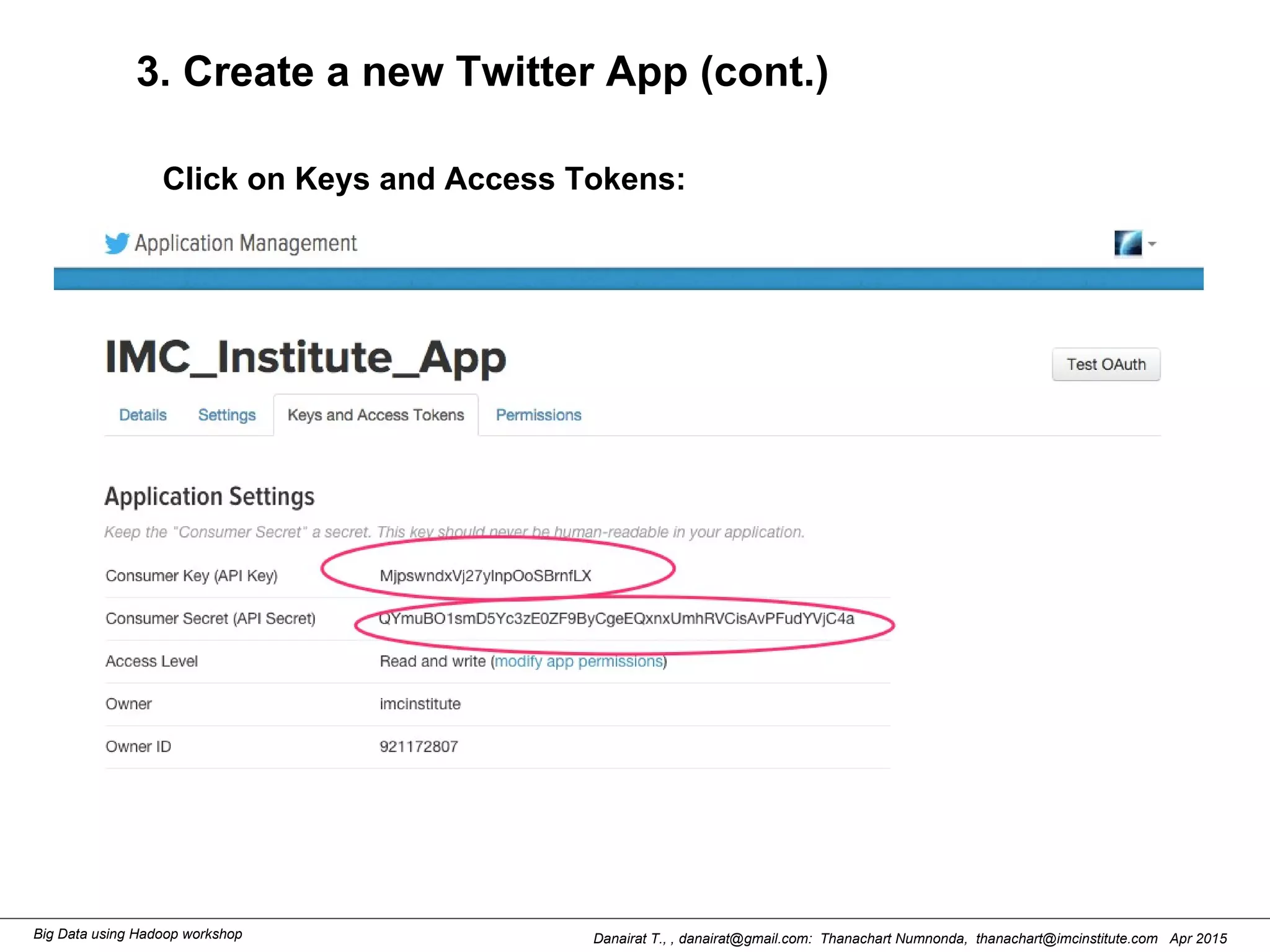

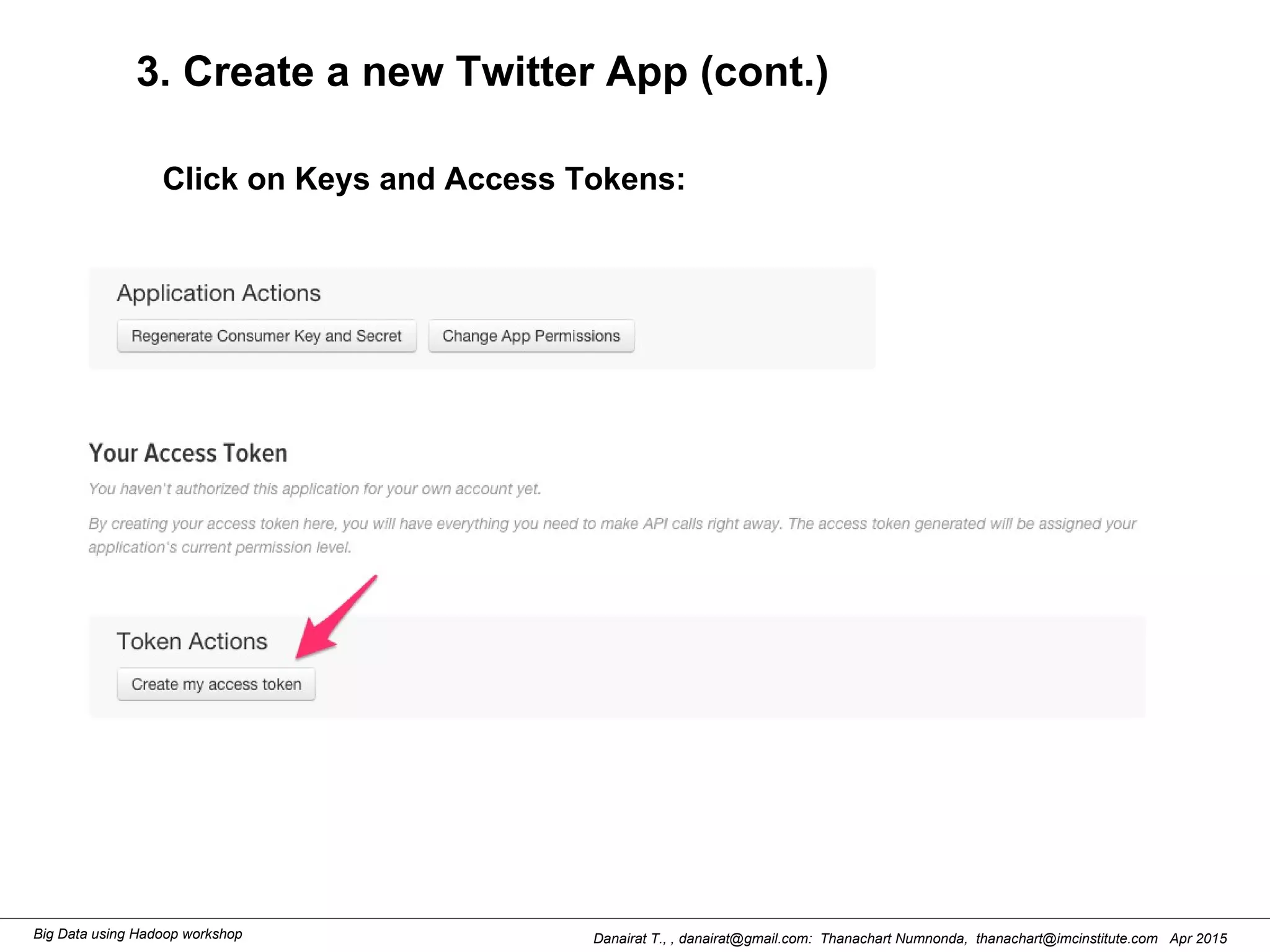

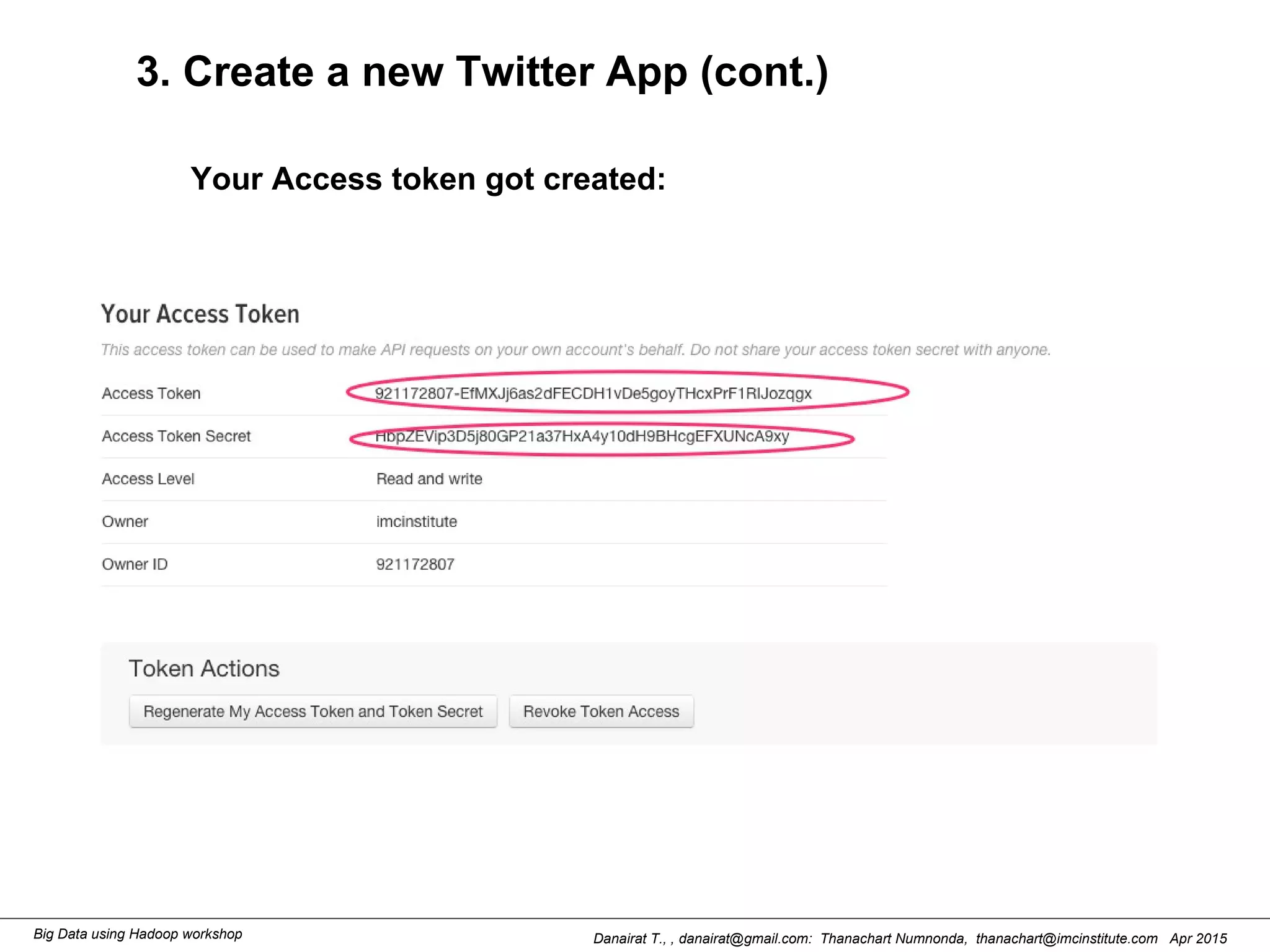

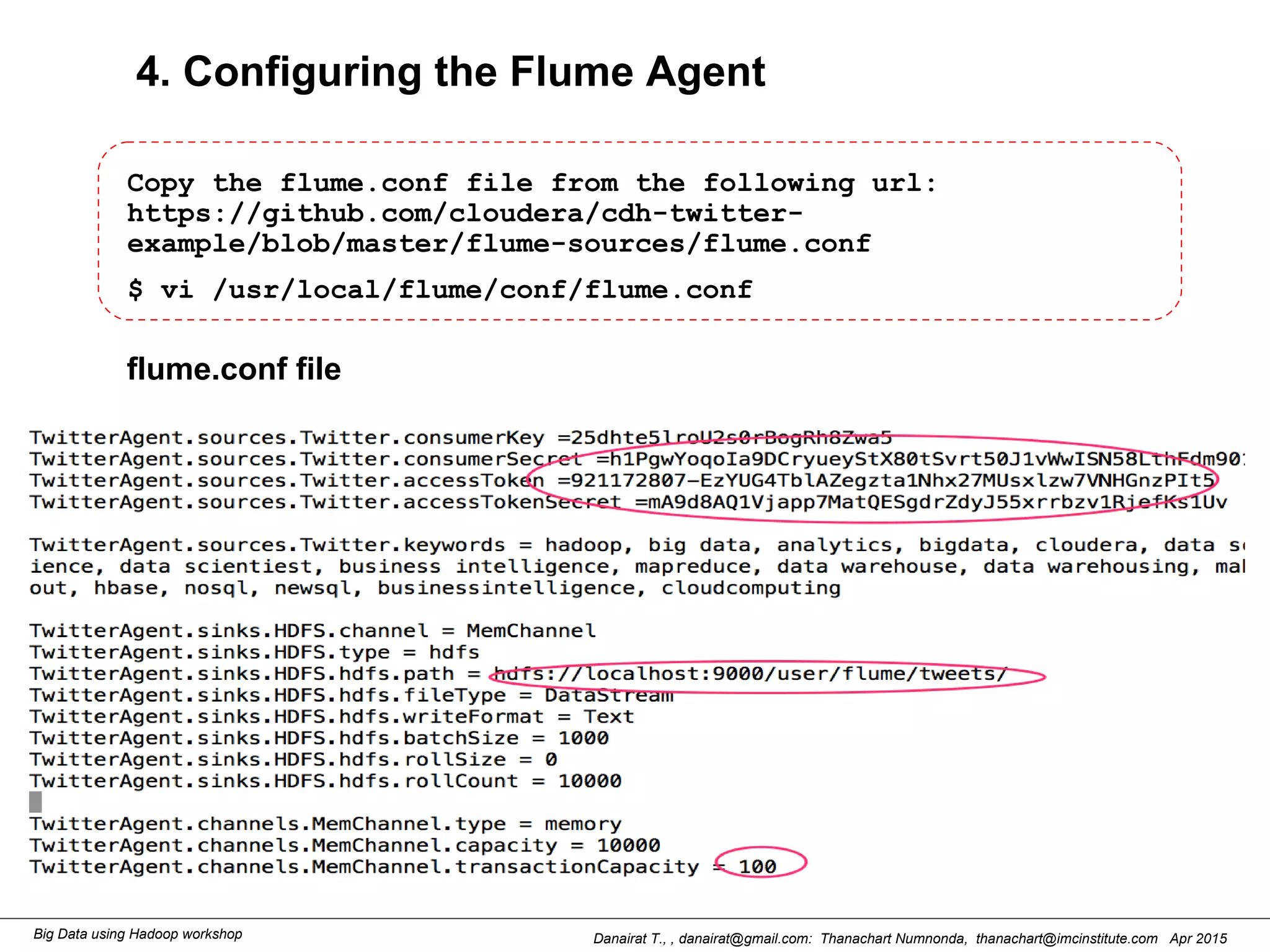

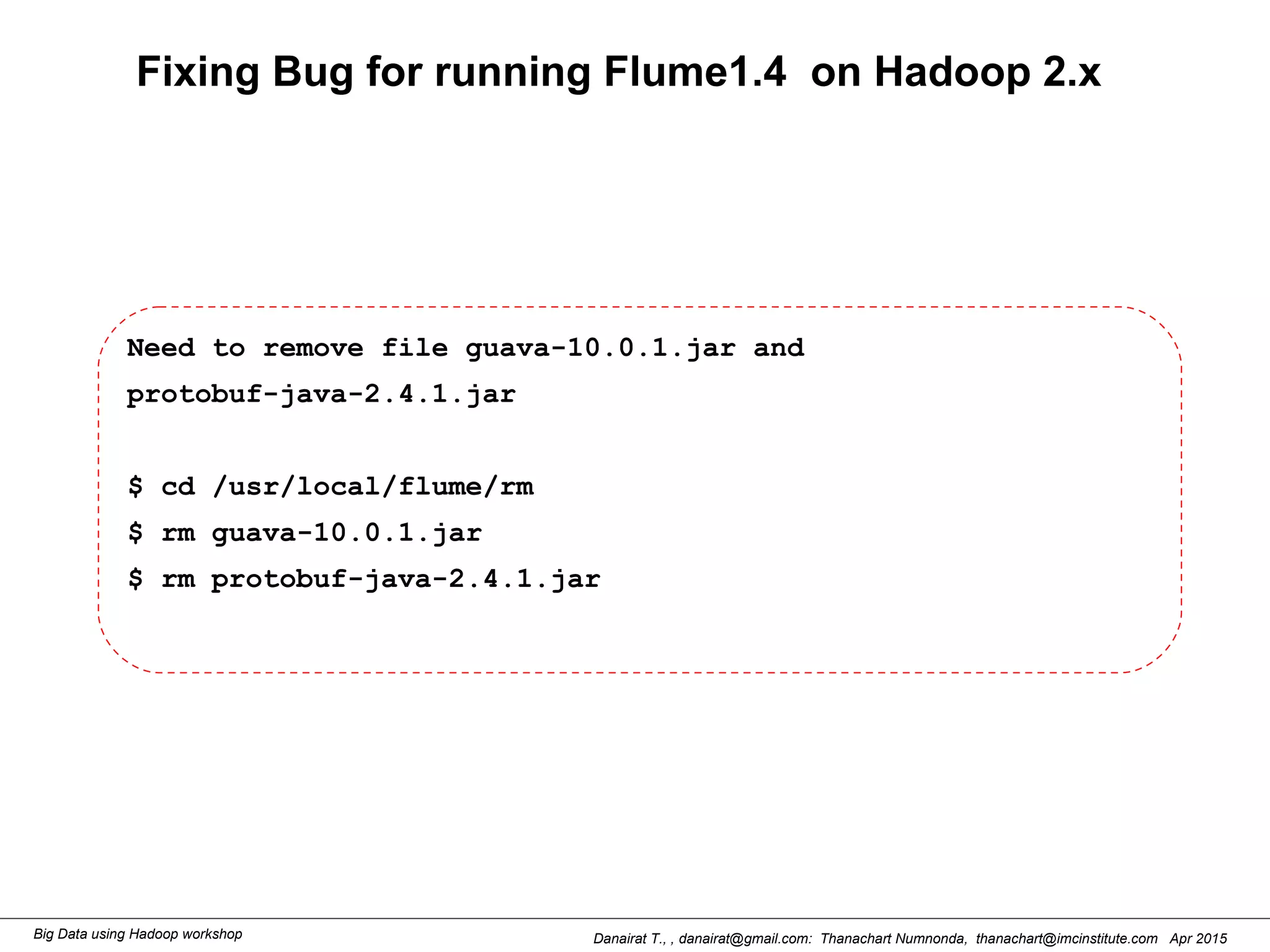

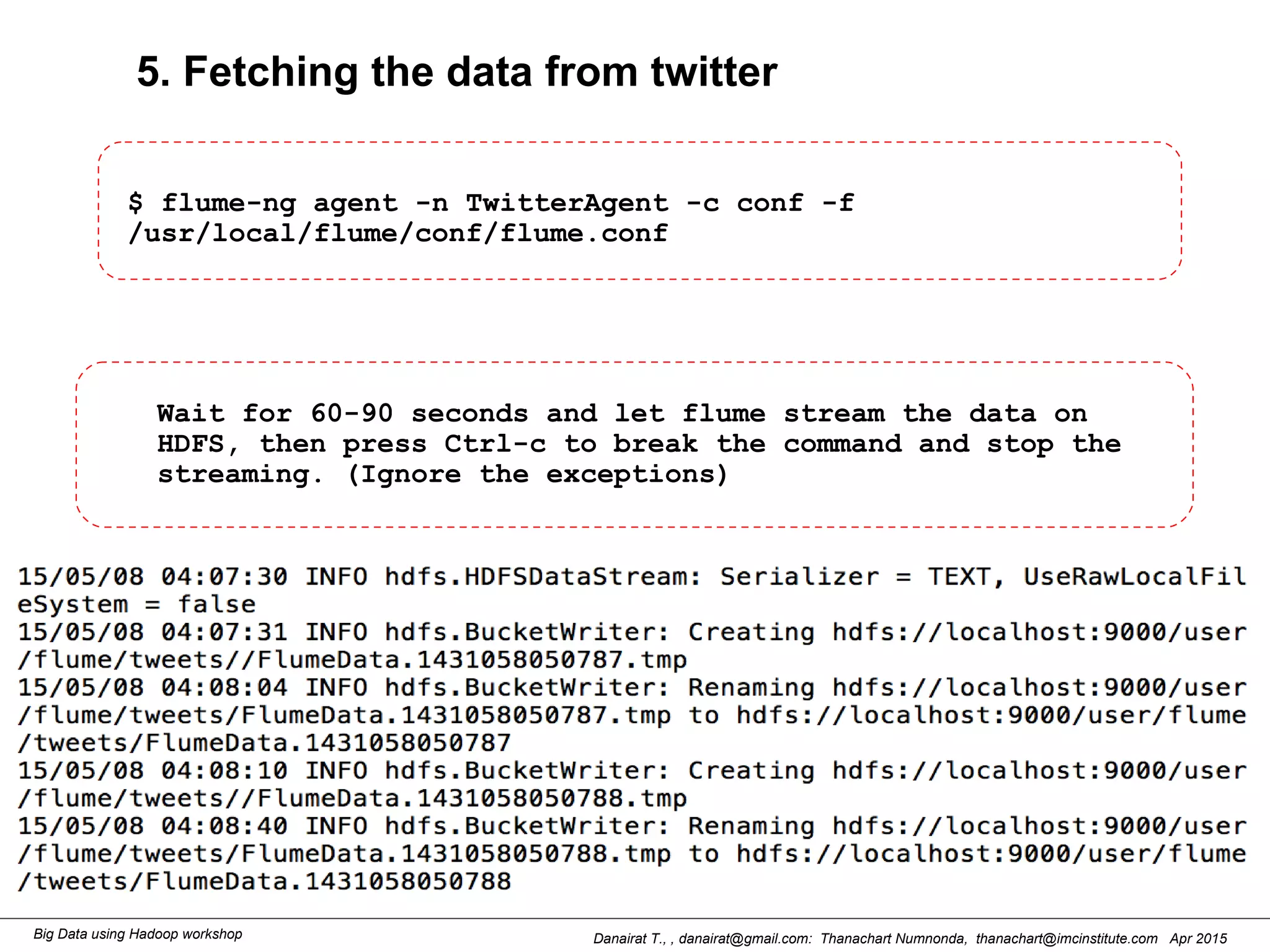

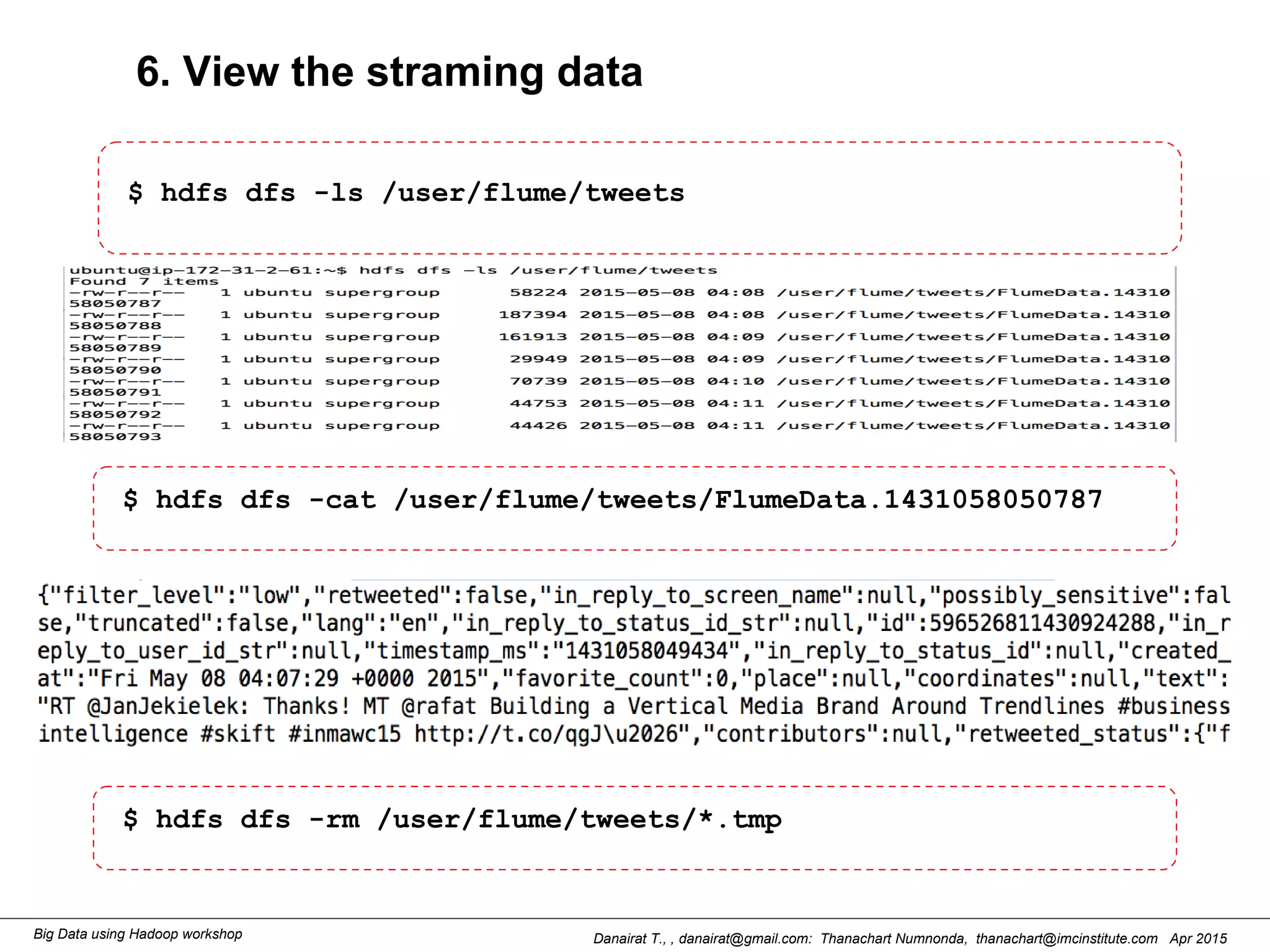

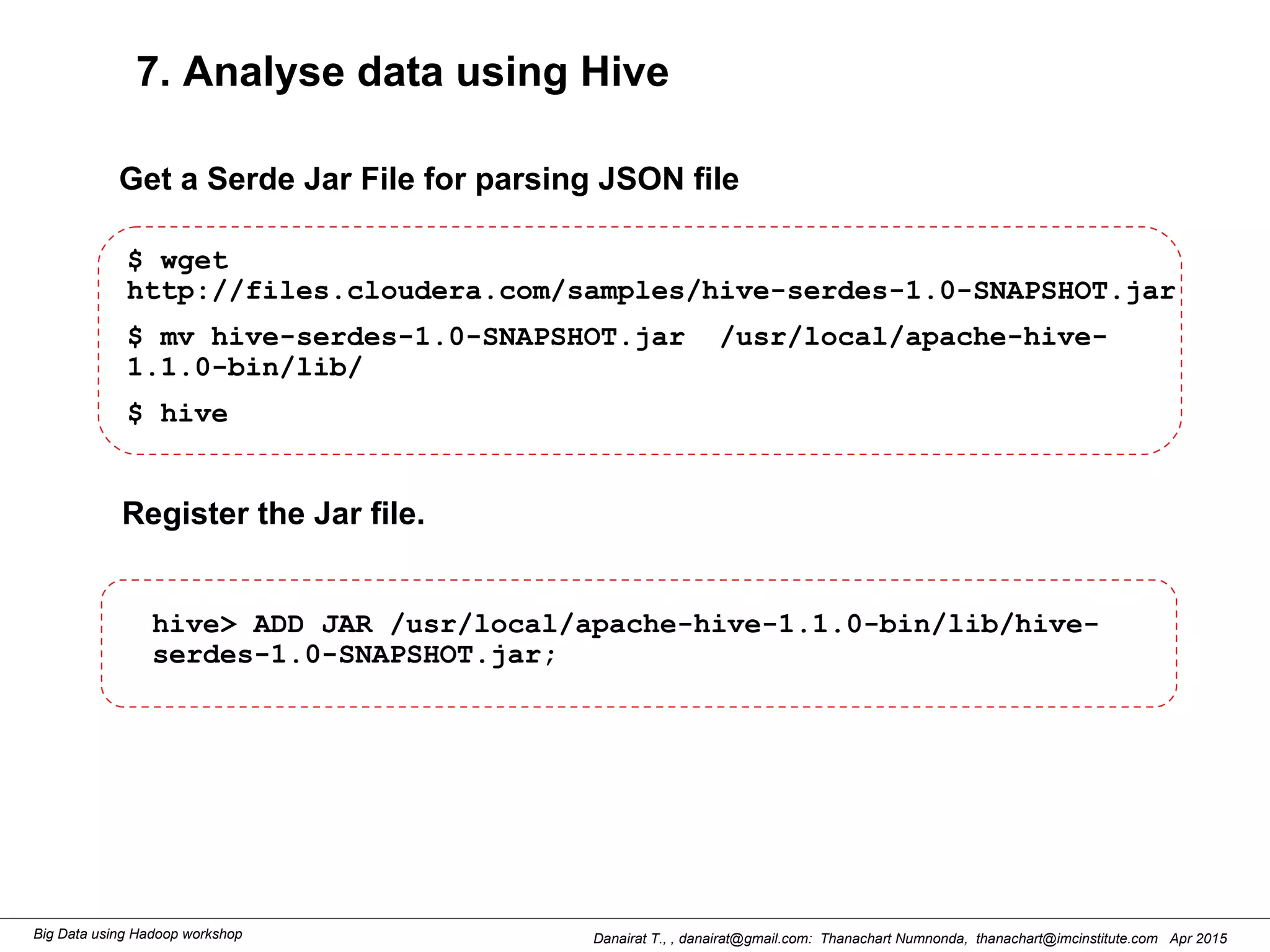

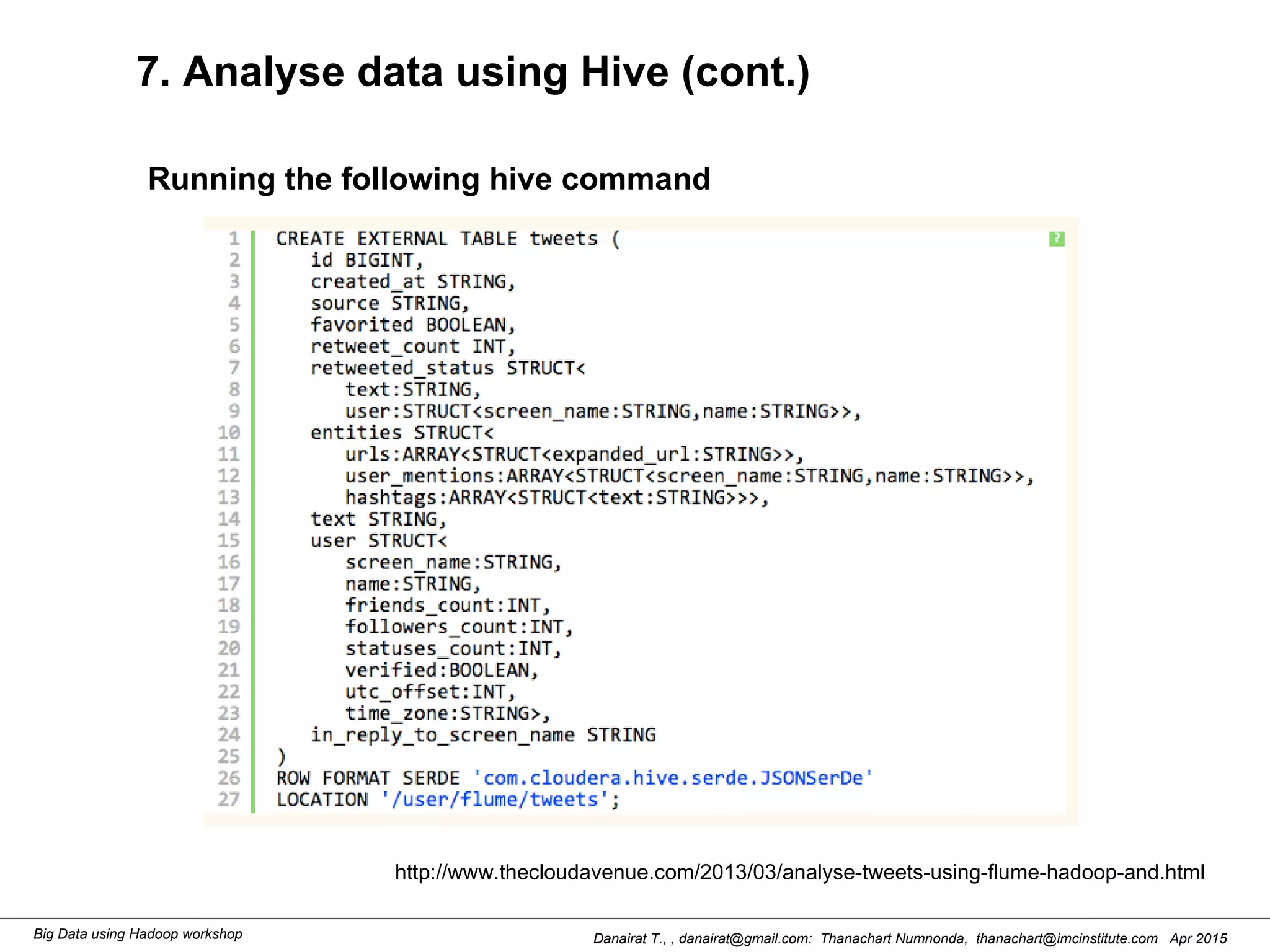

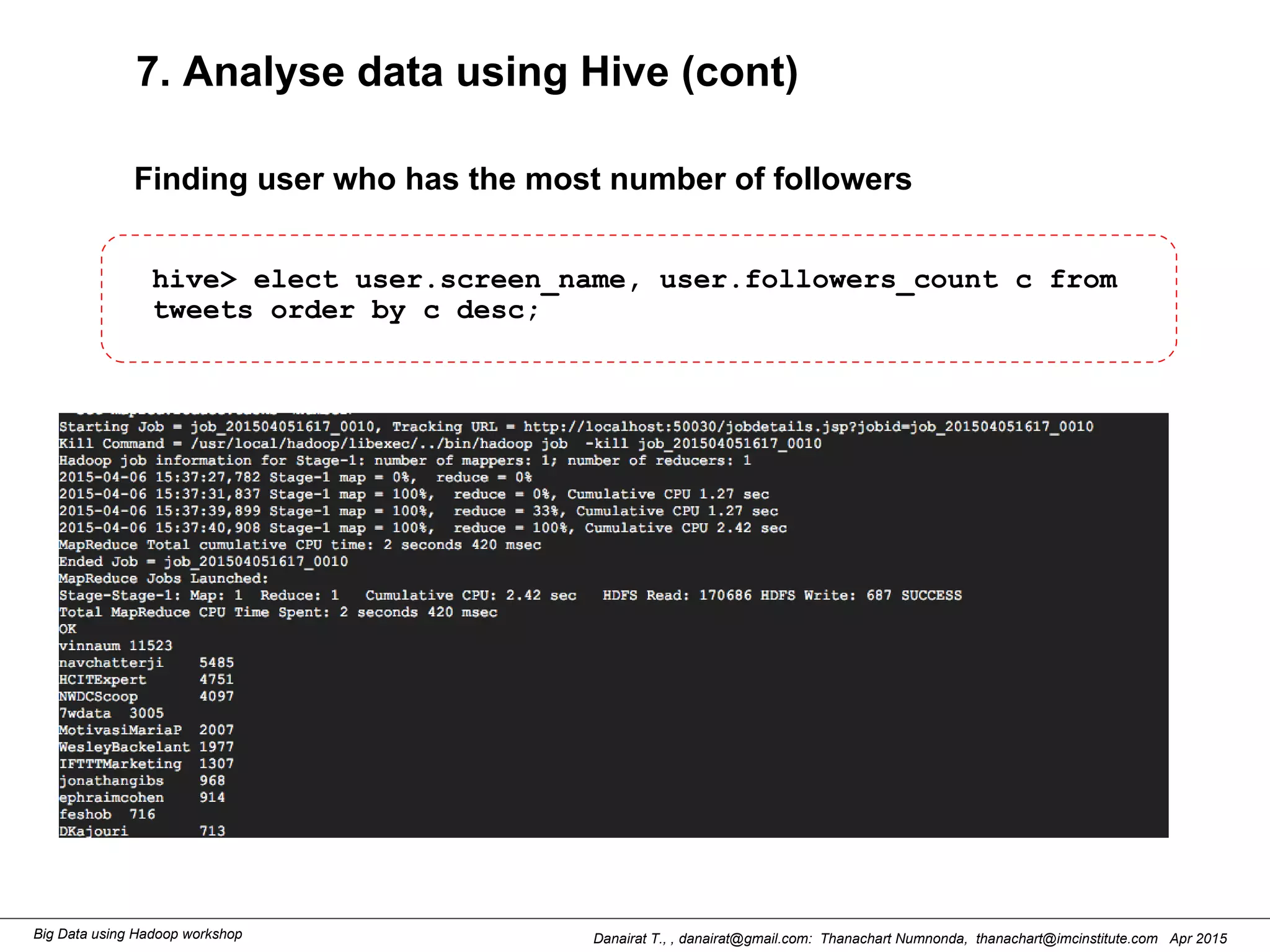

This document outlines the steps for analyzing tweets with Flume, Hadoop, and Hive. It covers installing Flume and a jar file, creating a Twitter application to obtain API keys, configuring a Flume agent to fetch tweets from Twitter and store them in HDFS, and using Hive to analyze the tweets by each user's follower count. The workshop instructions provide commands to stream tweets from Twitter into HDFS with Flume, view the stored tweet files, and run Hive queries to find the user with the most followers.
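
As a rough illustration of the final analysis step, the following minimal sketch finds the user with the most followers from a handful of tweet records. It is written in plain Python rather than Hive, and it is not the workshop's own query. It assumes each stored record is one JSON object per line with a nested `user` object carrying `screen_name` and `followers_count`, which is how the Twitter API lays out tweets. The function name `top_user_by_followers` and the sample records are hypothetical.

```python
import json


def top_user_by_followers(lines):
    """Return (screen_name, followers_count) for the most-followed user, or None.

    Assumes one JSON tweet per line; lines that are not valid JSON or that
    lack user information (for example, delete notices) are skipped.
    """
    best = {}
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            tweet = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip malformed records
        if not isinstance(tweet, dict):
            continue
        user = tweet.get("user")
        if not isinstance(user, dict):
            continue
        name = user.get("screen_name")
        count = user.get("followers_count")
        if name is None or count is None:
            continue
        # A user may appear in many tweets; keep the highest count seen.
        best[name] = max(best.get(name, 0), count)
    if not best:
        return None
    return max(best.items(), key=lambda item: item[1])


# Hypothetical sample records standing in for the files stored in HDFS.
sample = [
    '{"id": 1, "user": {"screen_name": "alice", "followers_count": 120}}',
    '{"id": 2, "user": {"screen_name": "bob", "followers_count": 4500}}',
    '{"id": 3, "user": {"screen_name": "alice", "followers_count": 125}}',
    'not json',
    '{"delete": {"status": {"id": 9}}}',
]

result = top_user_by_followers(sample)
print(result)
```

The same idea in Hive would typically be a query that orders by the follower count and keeps the first row; the sketch above only mirrors that logic on local data so the expected shape of the input is easy to see.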