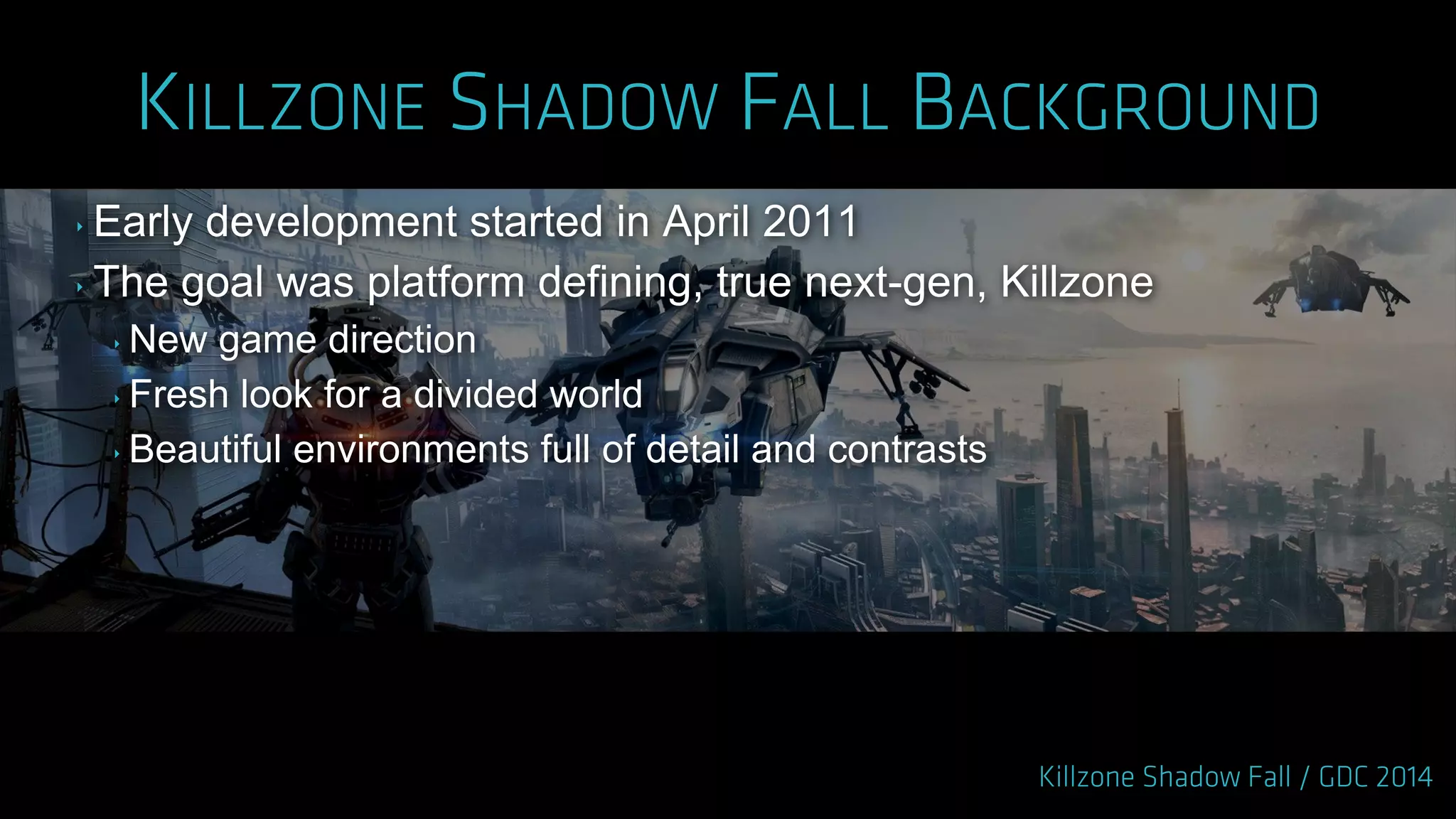

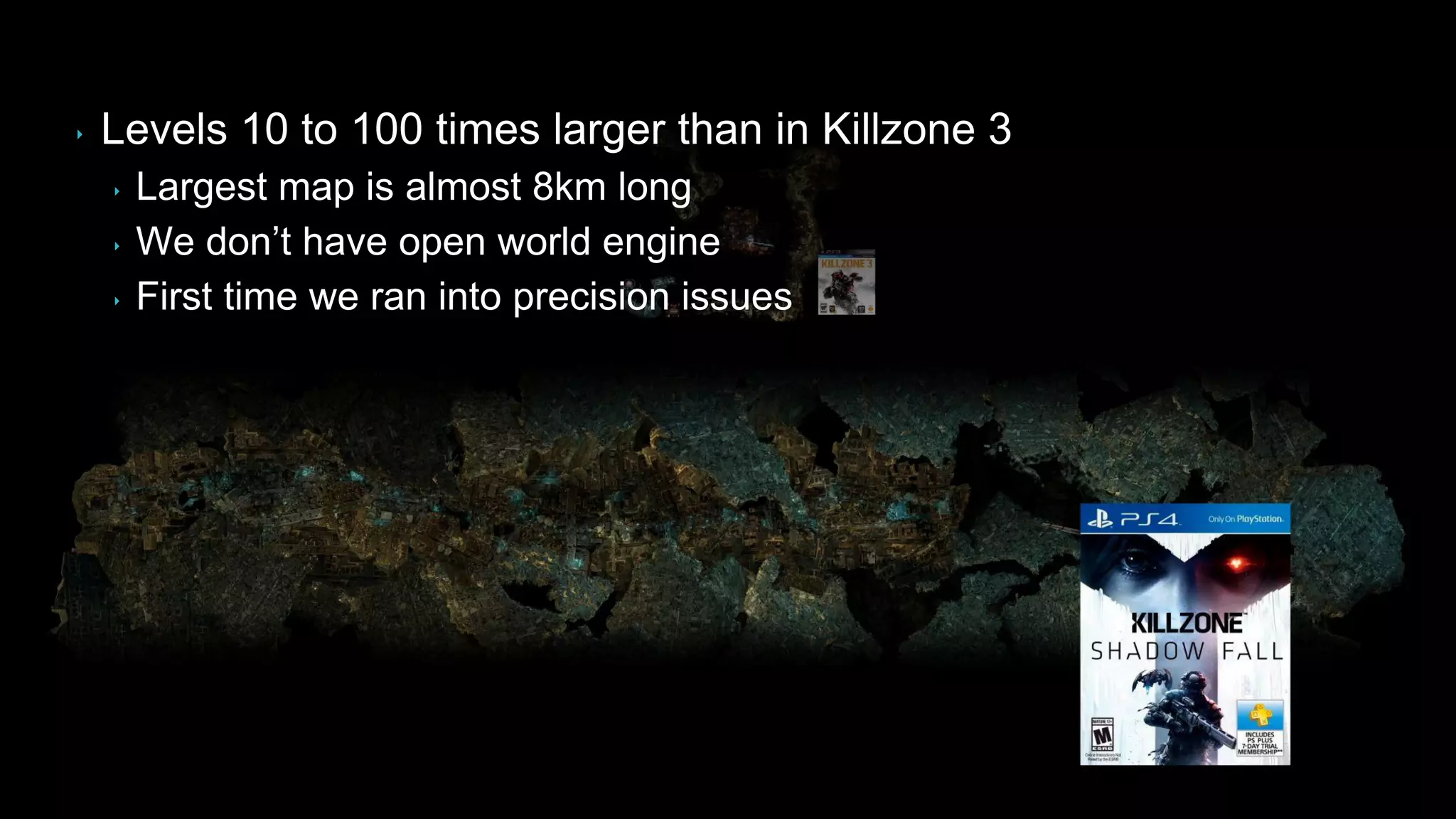

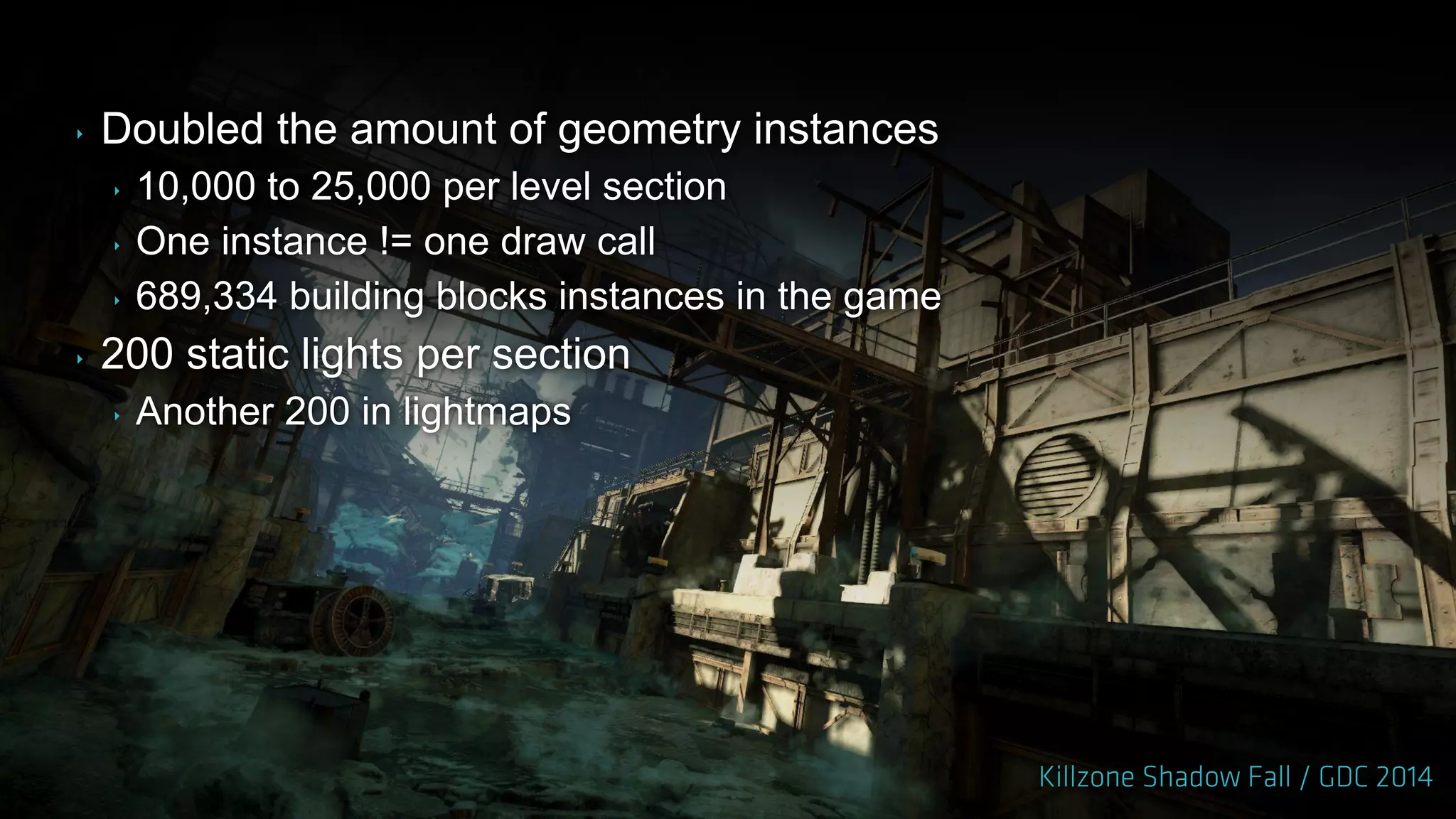

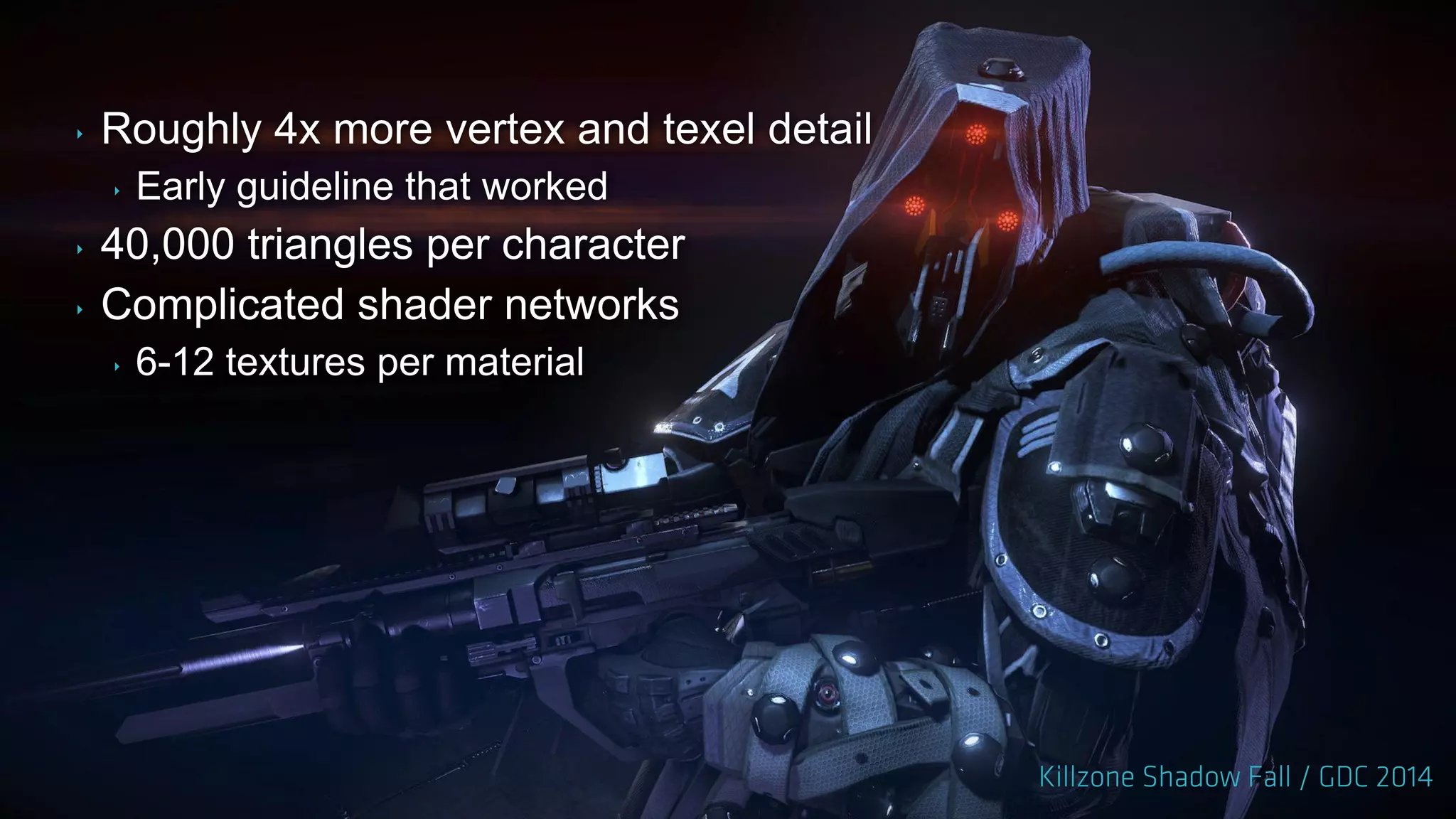

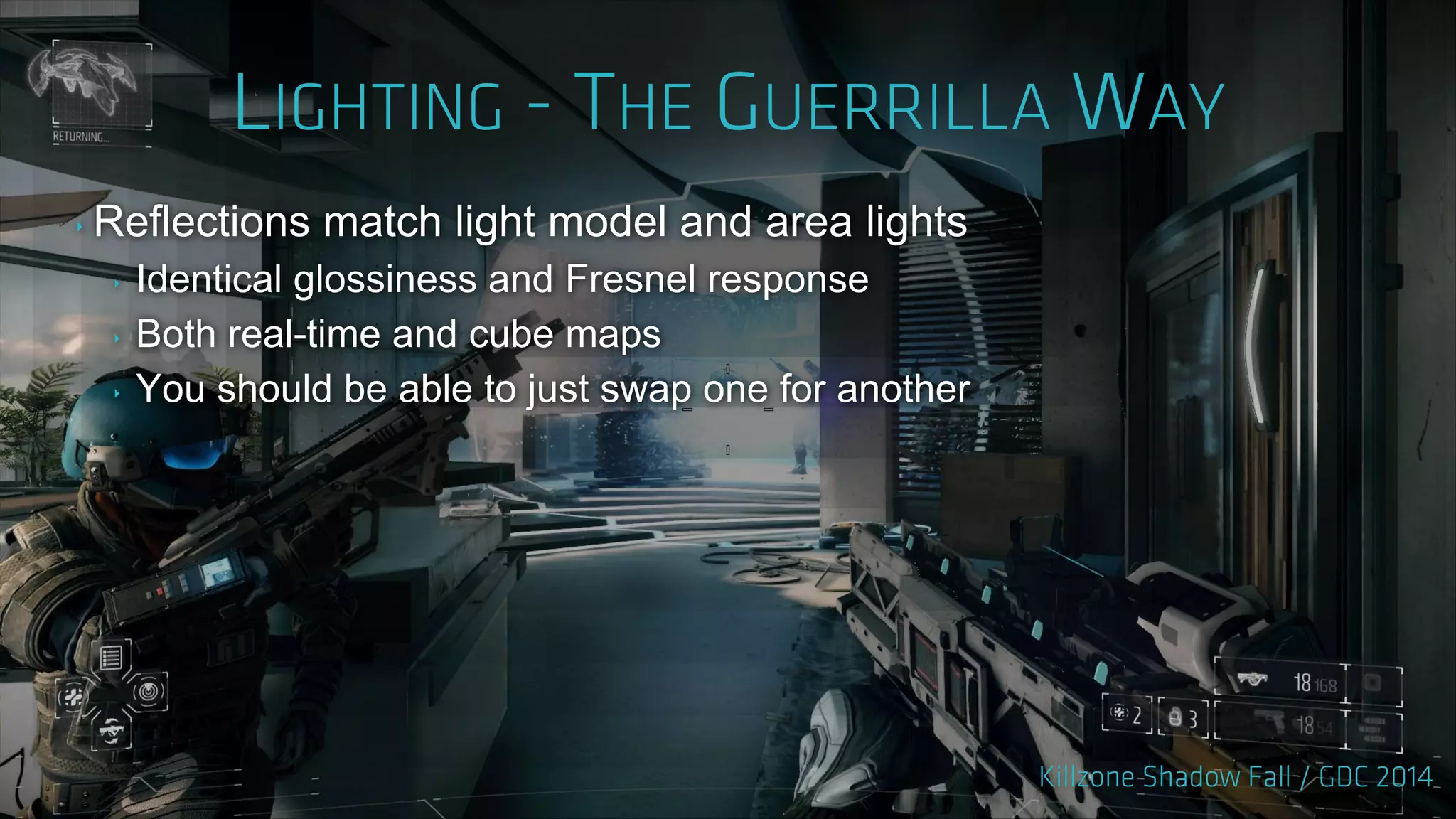

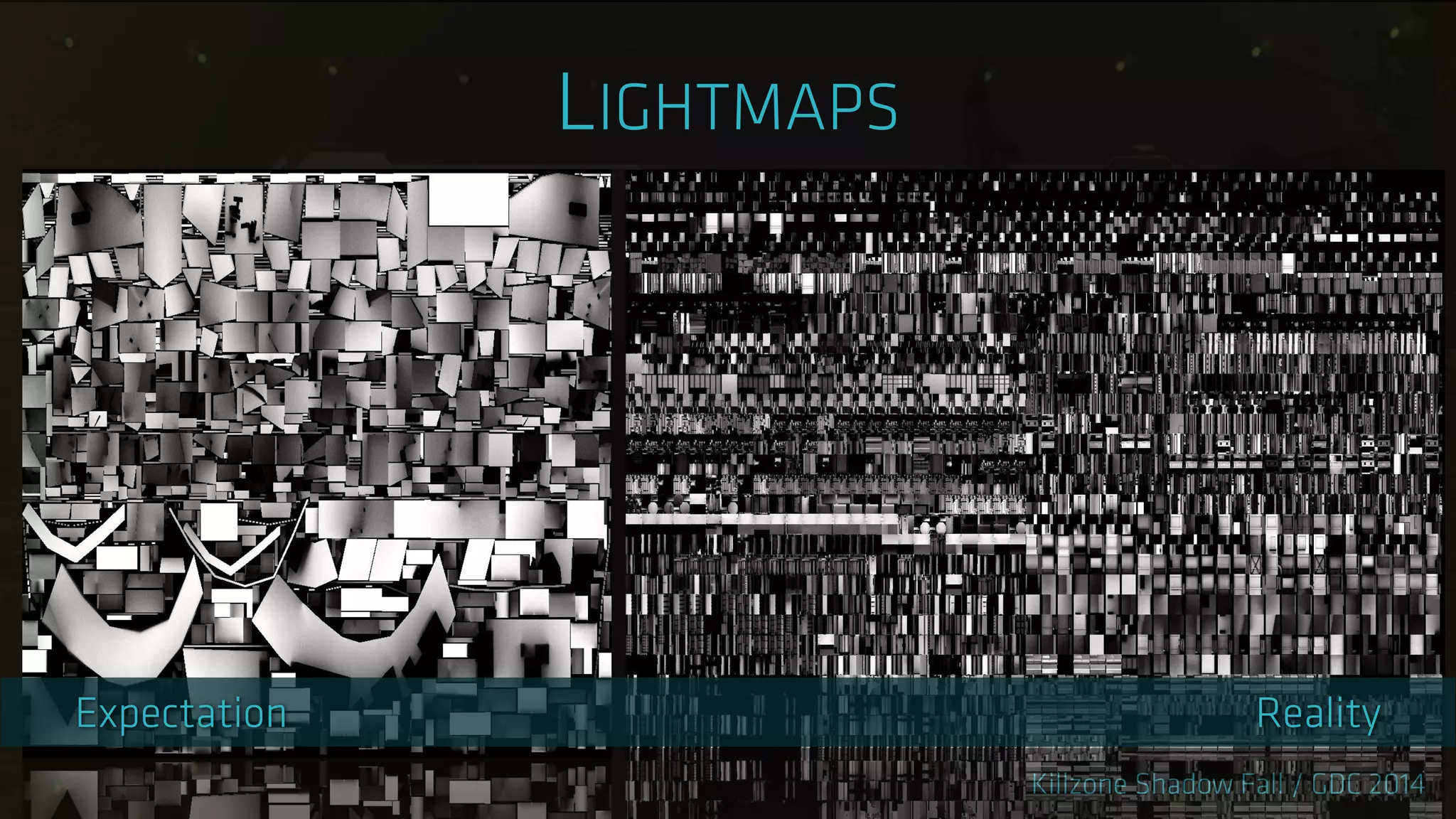

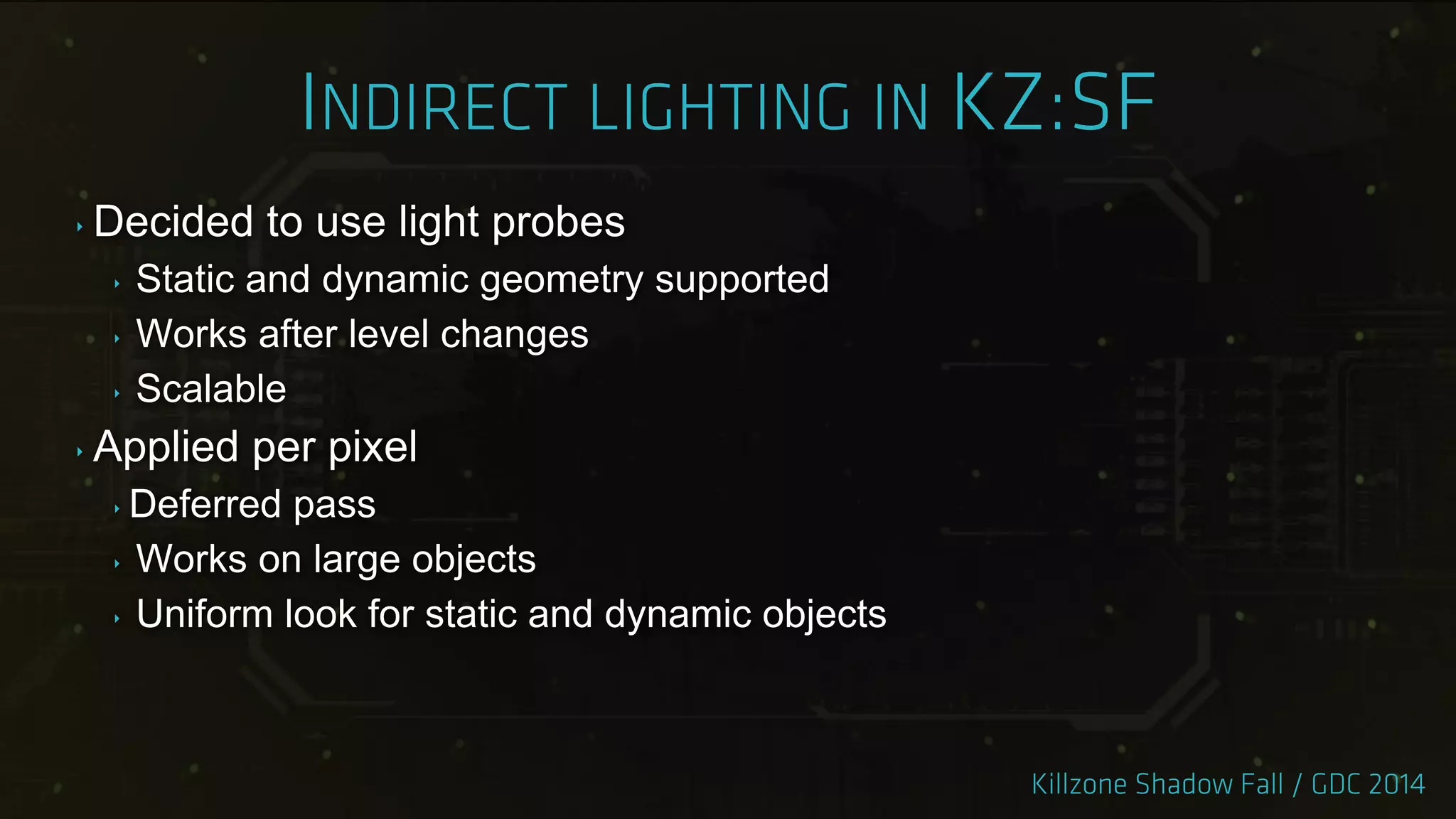

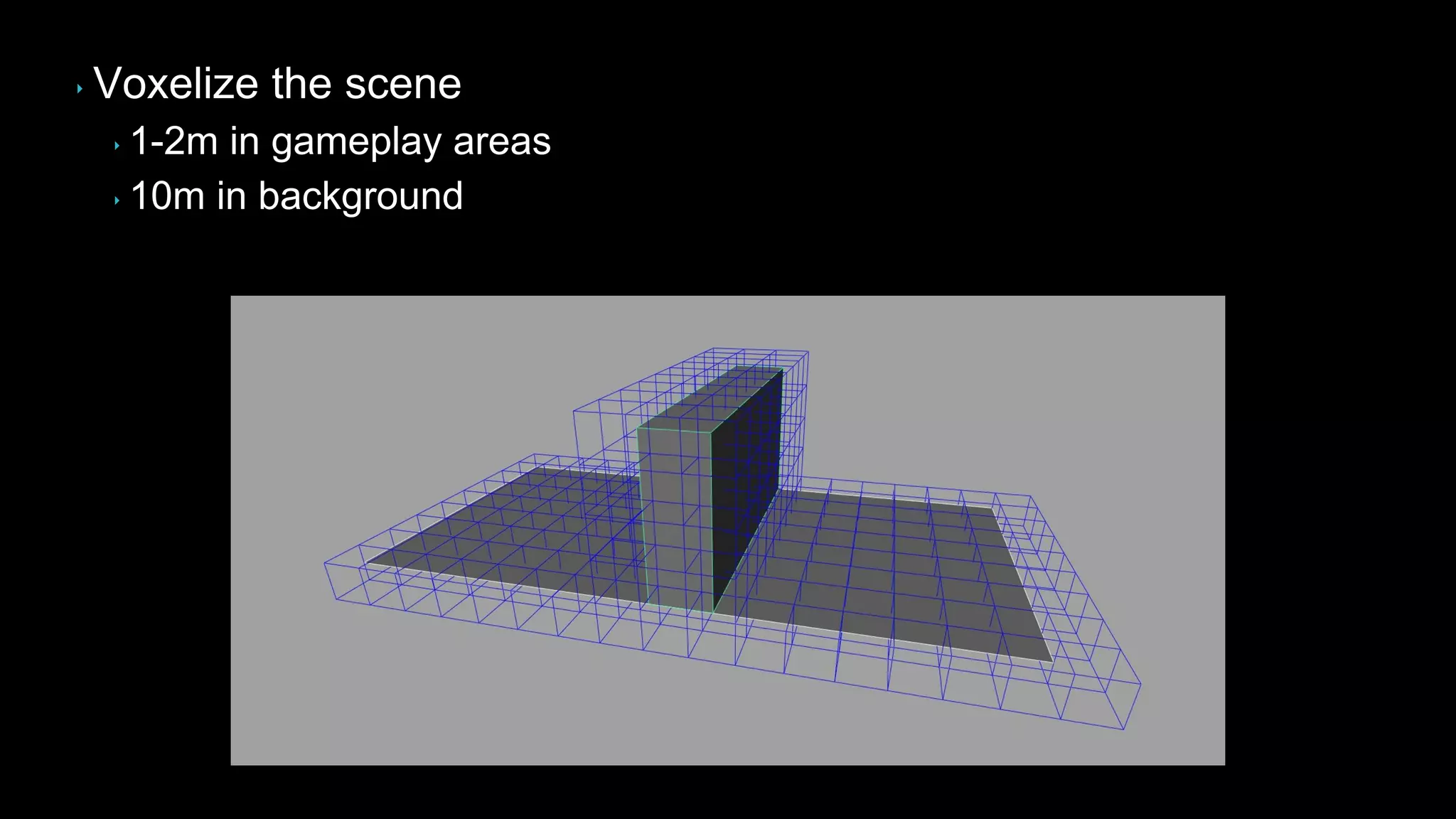

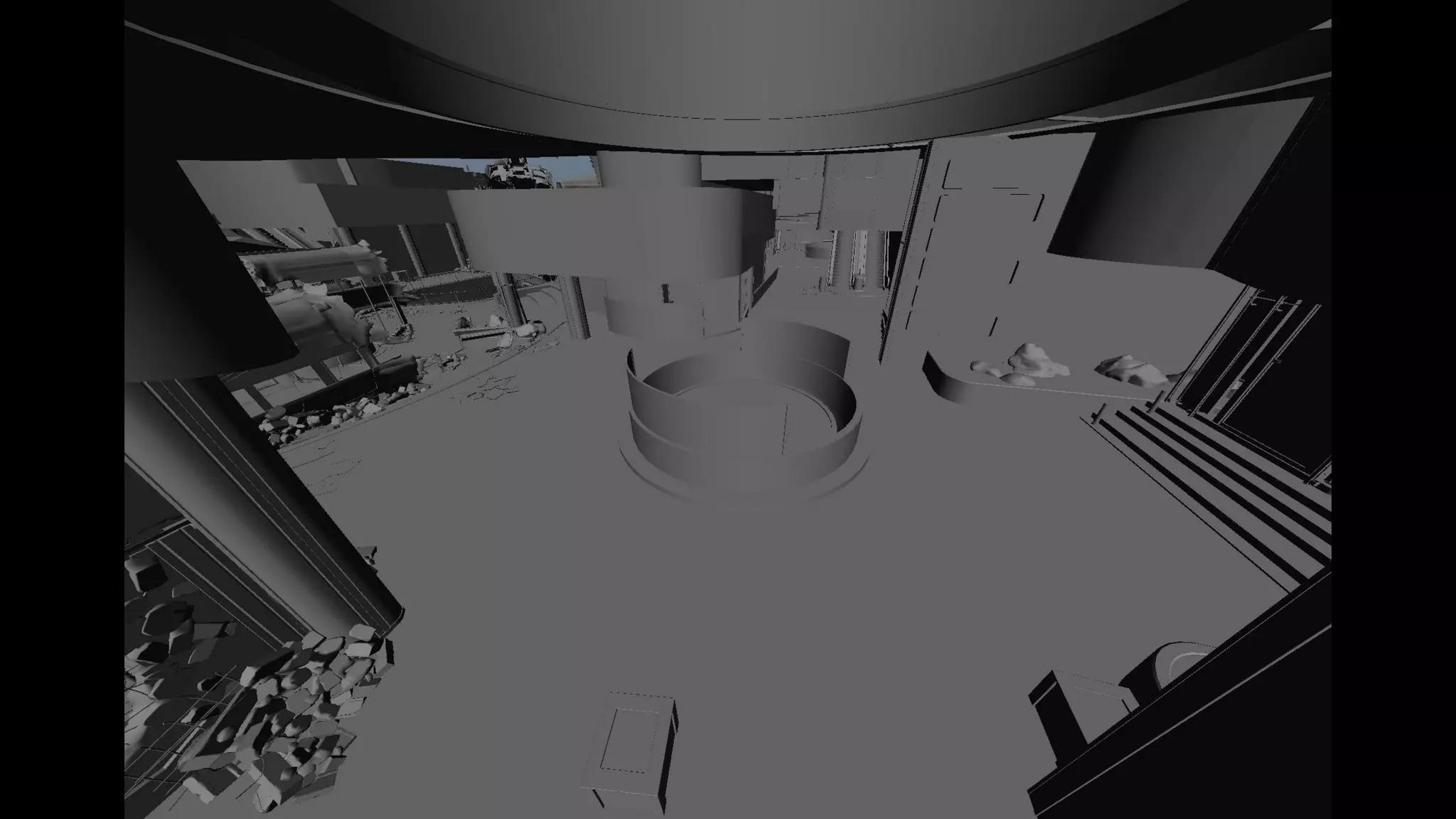

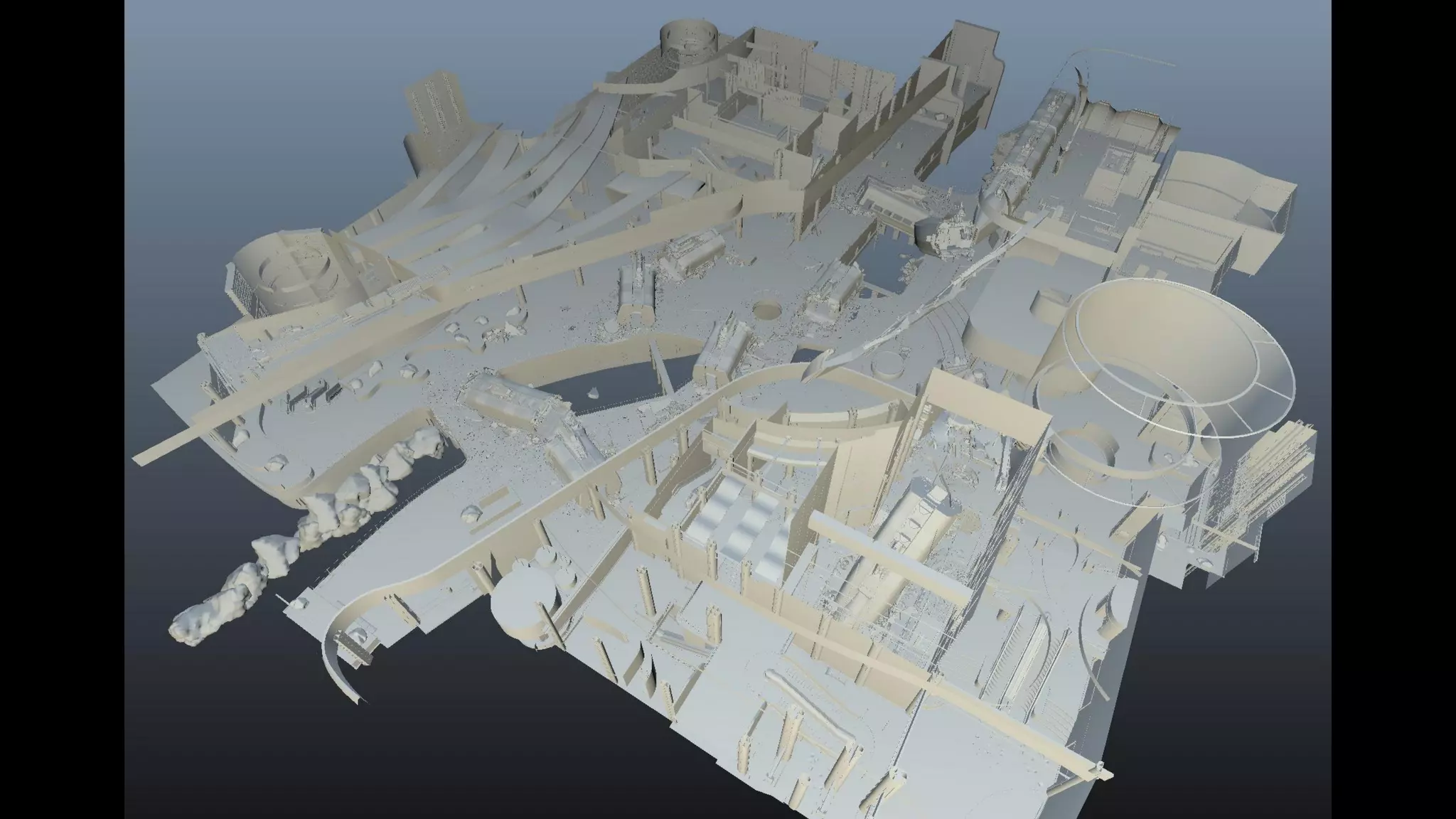

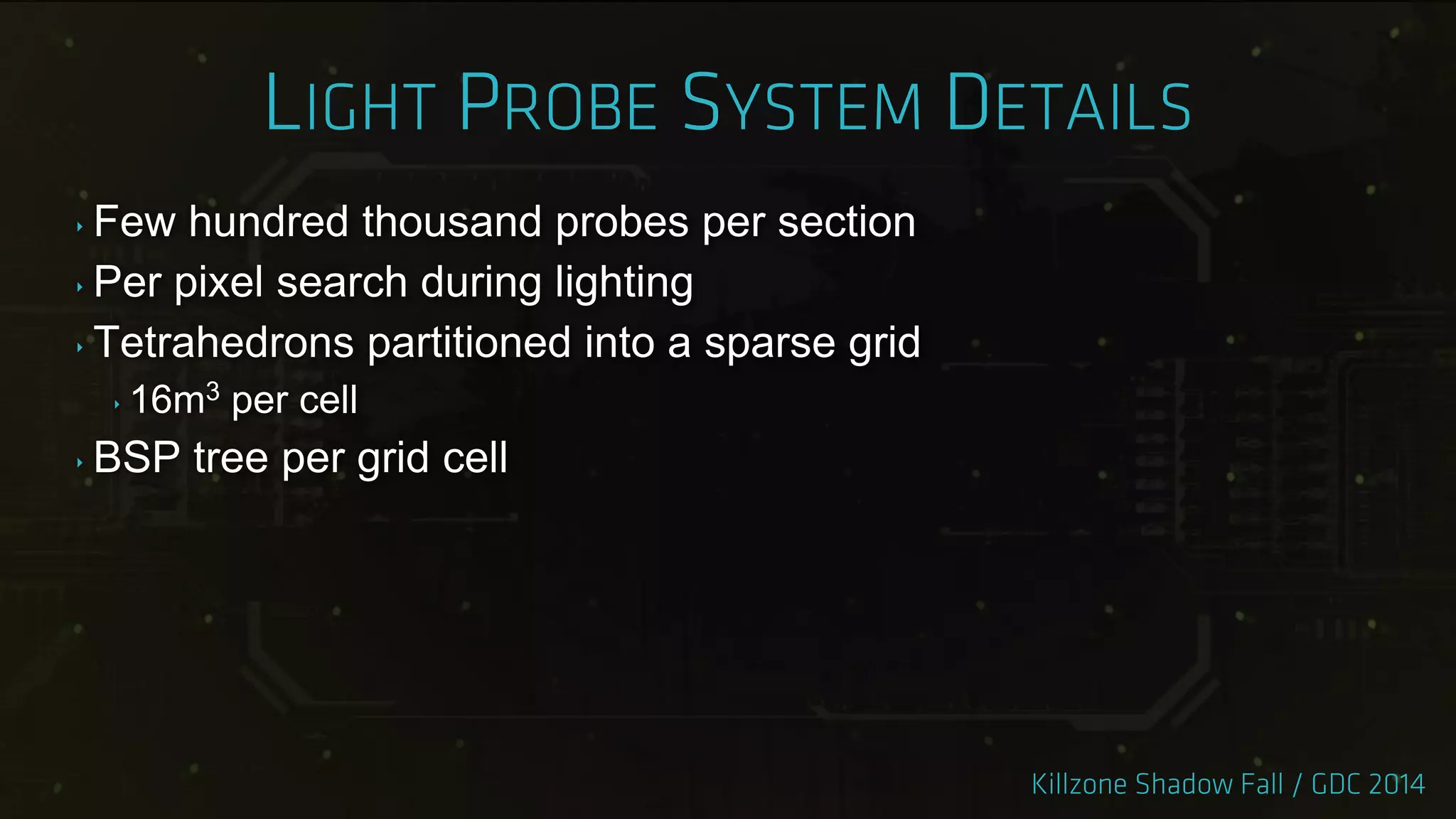

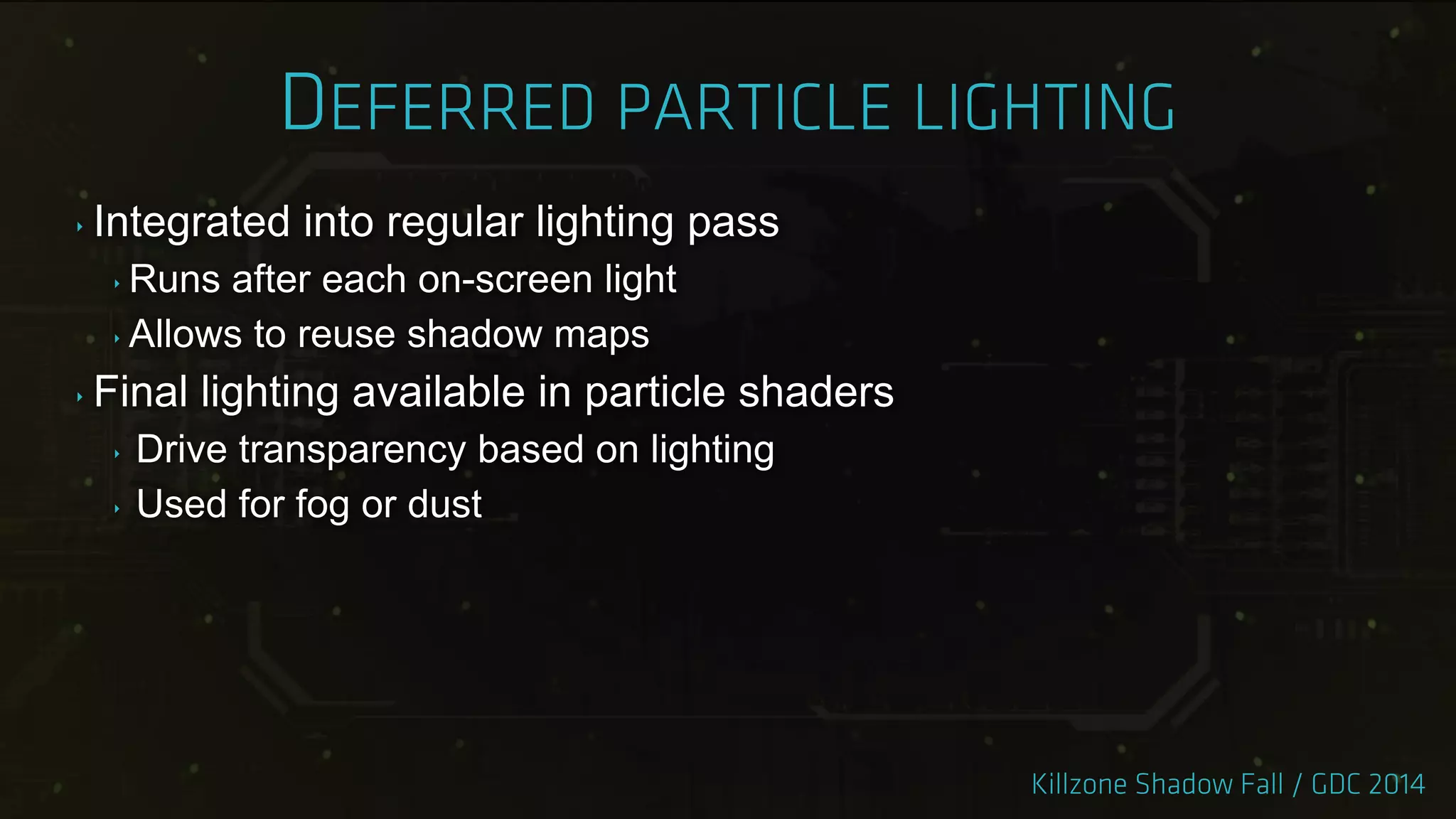

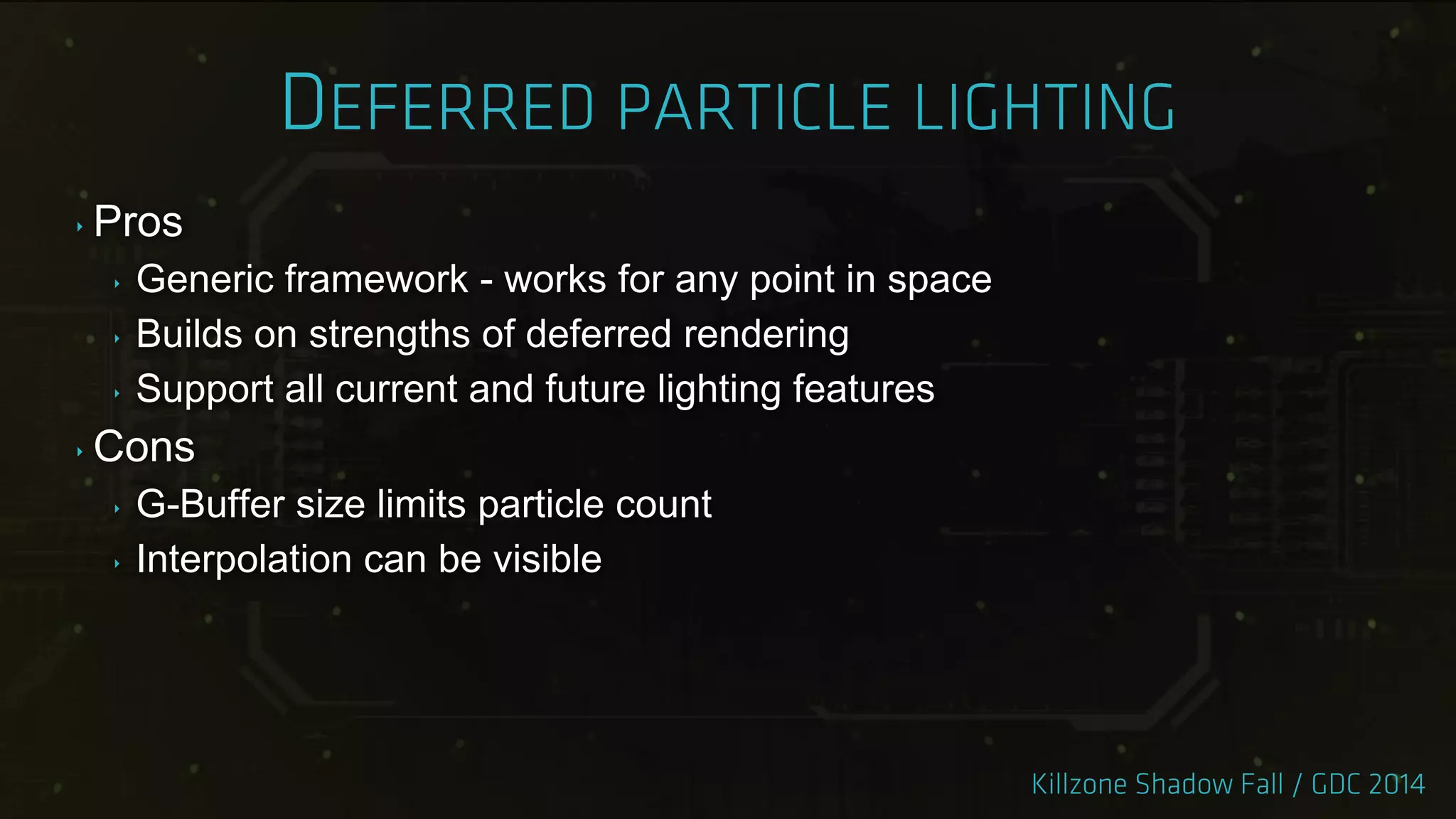

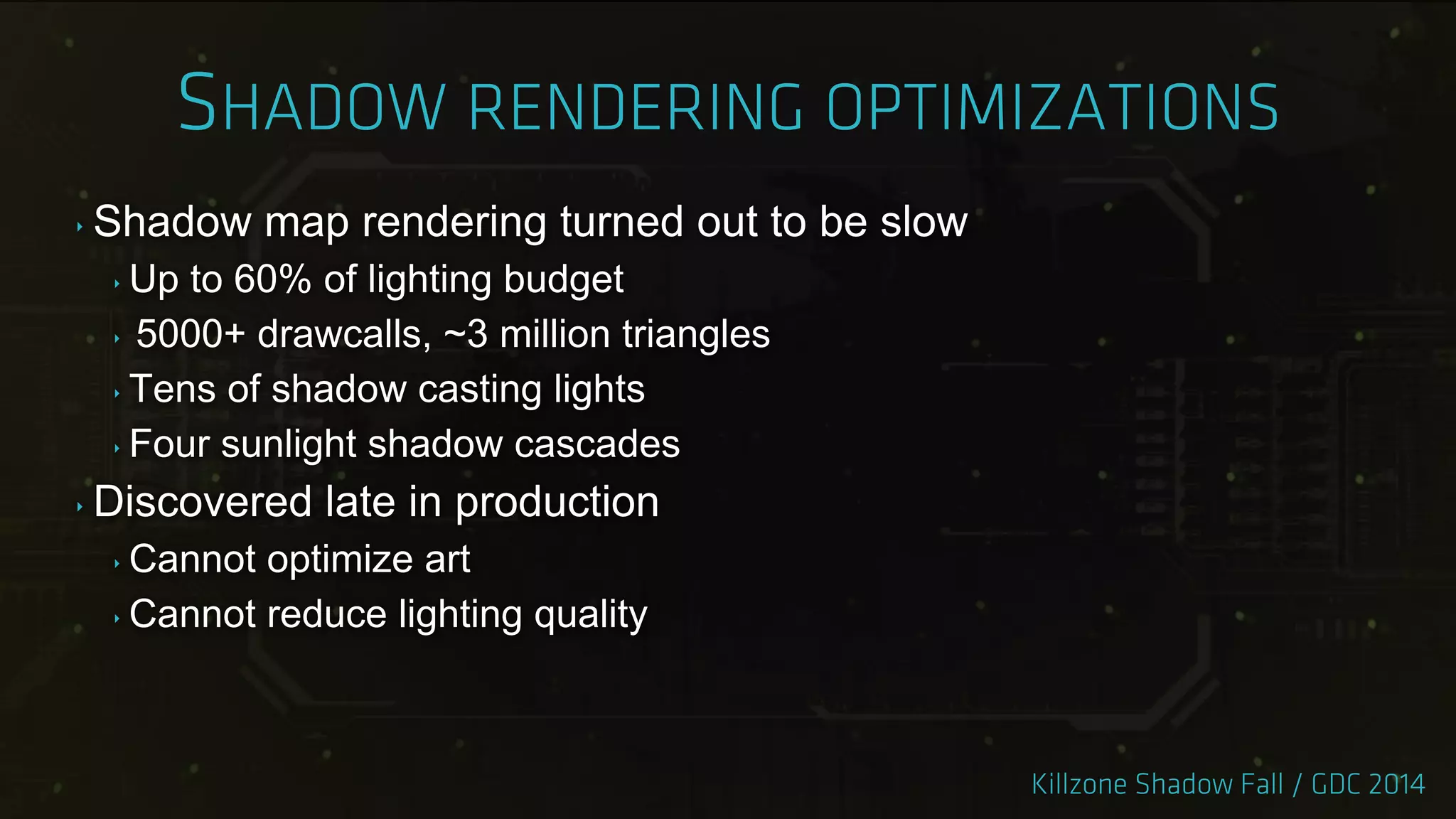

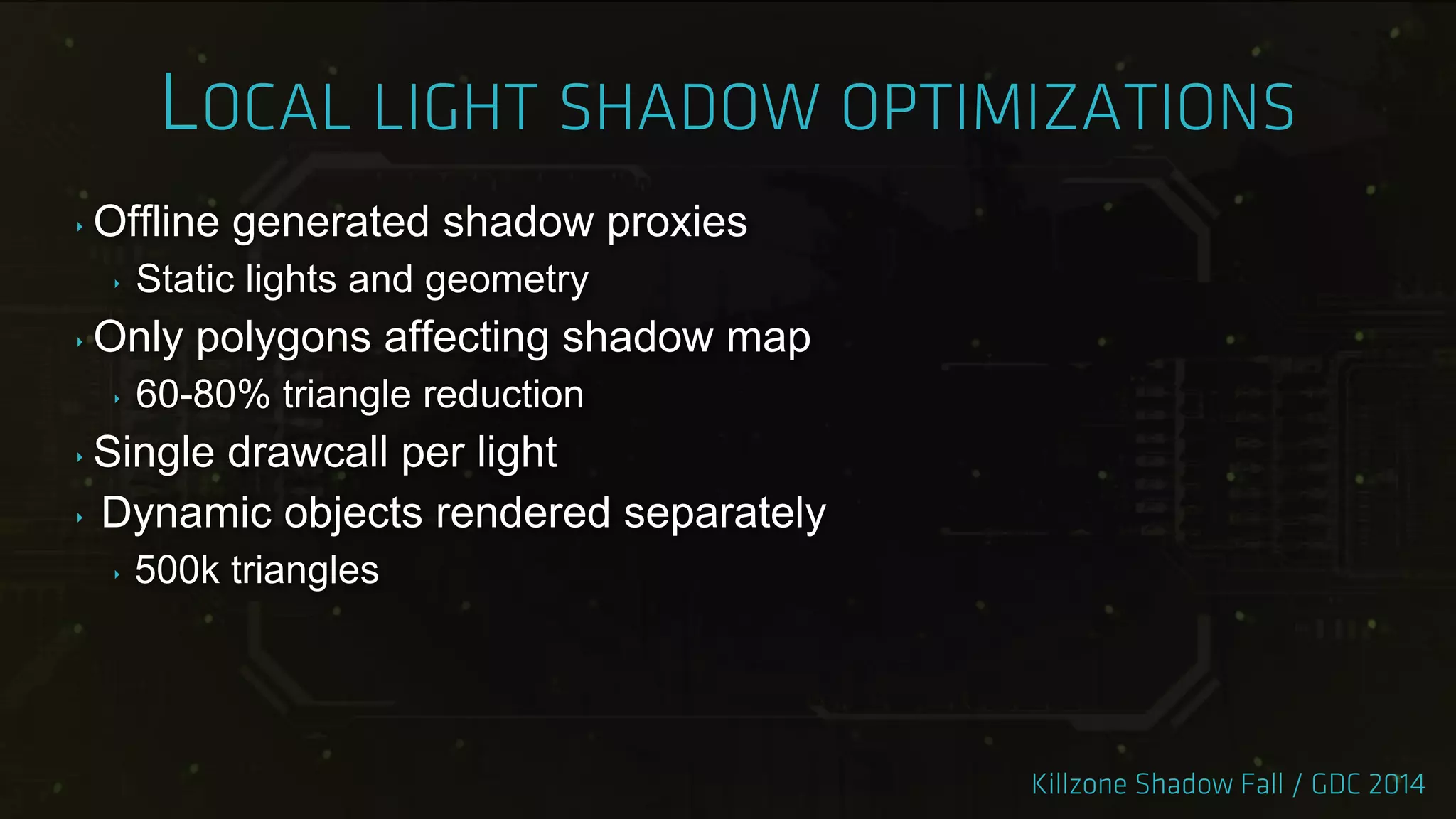

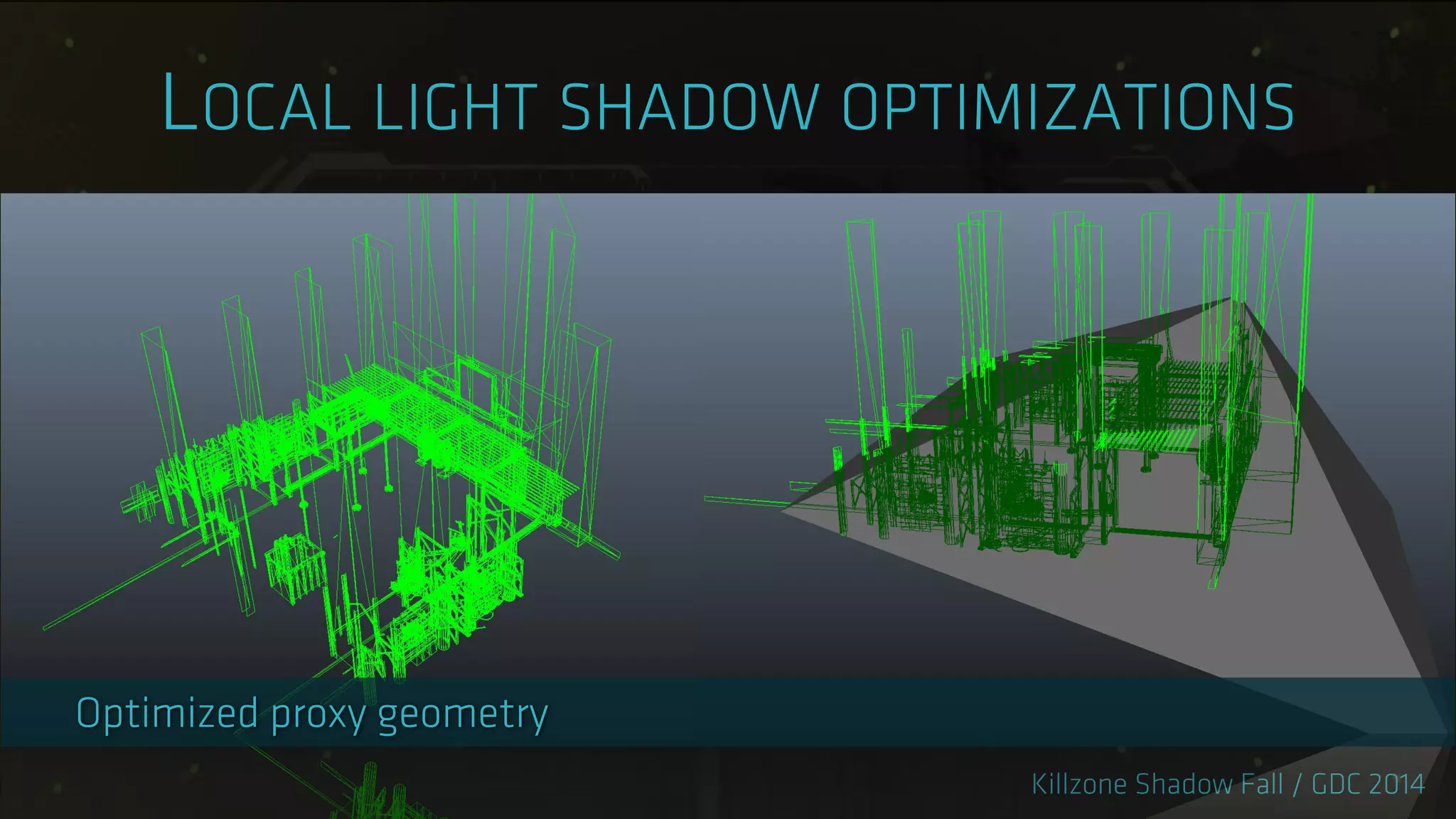

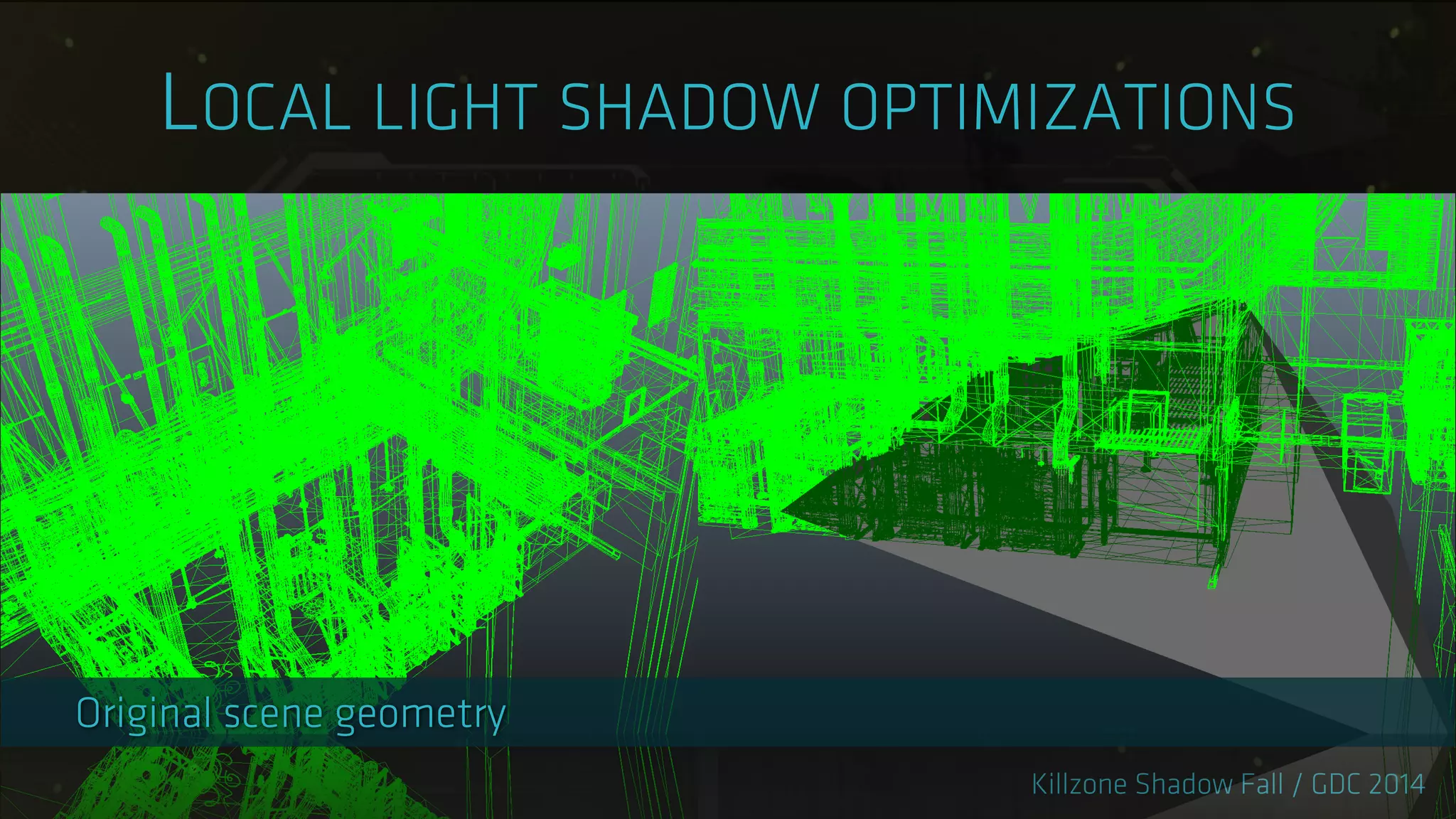

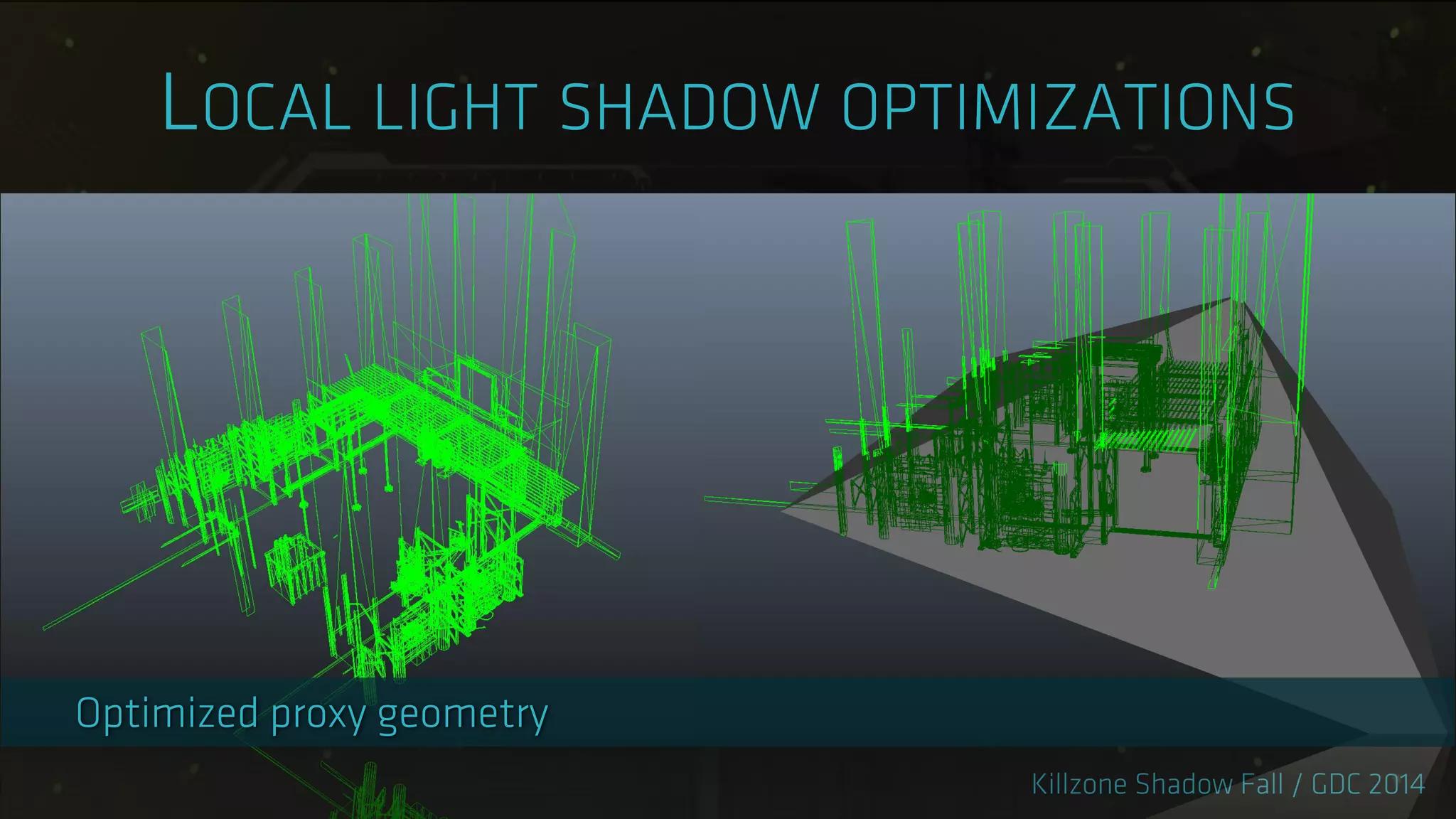

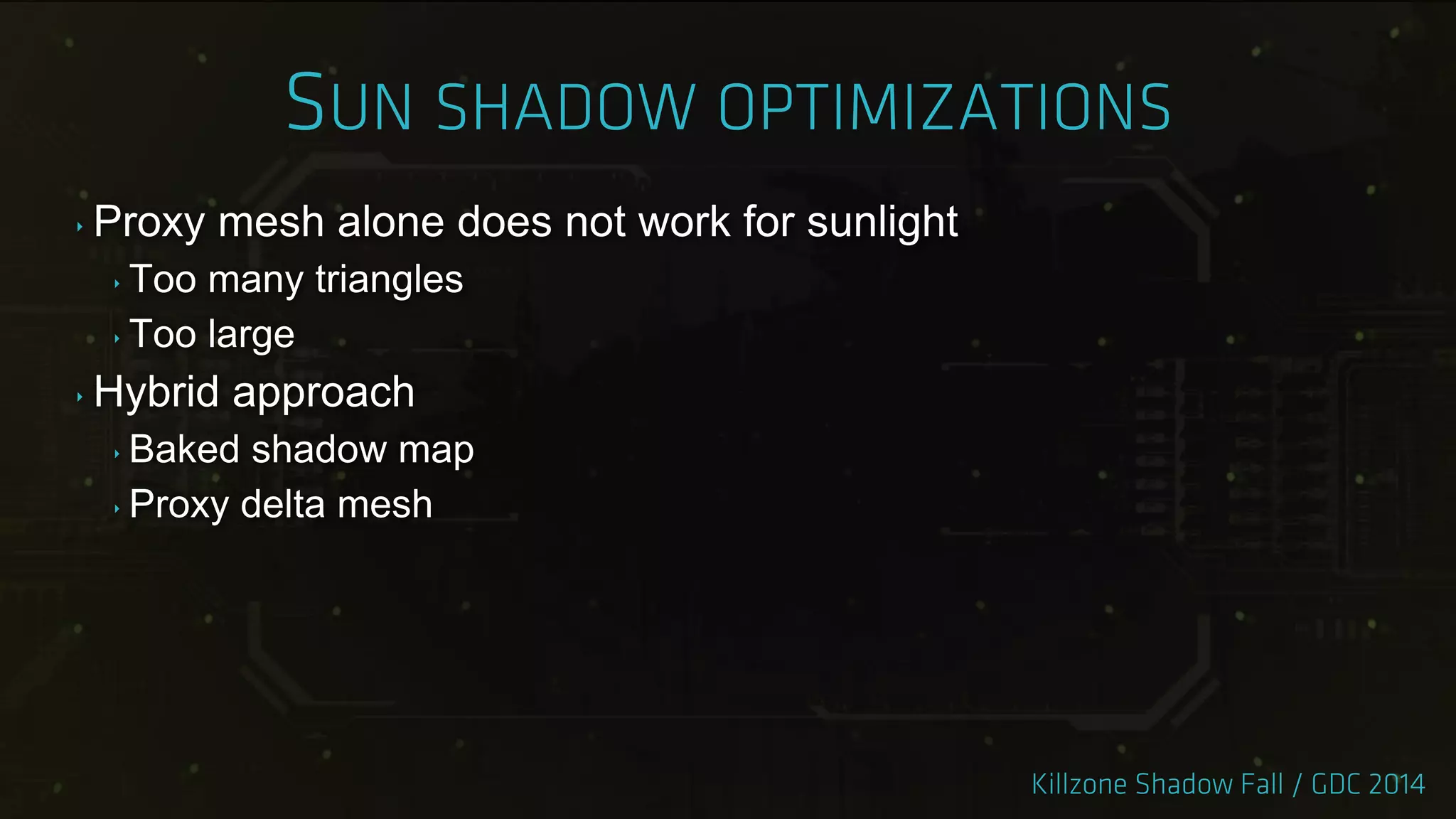

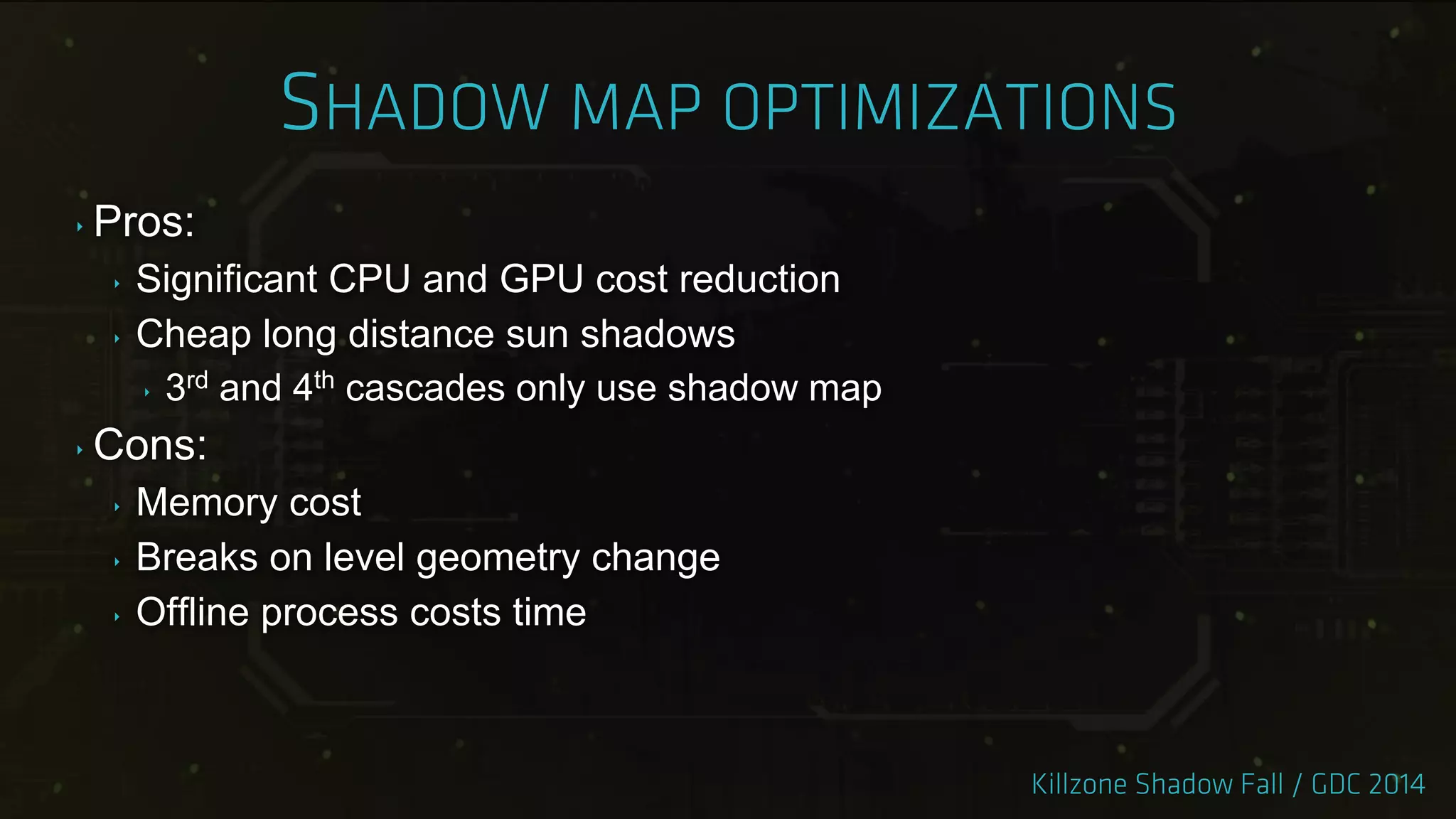

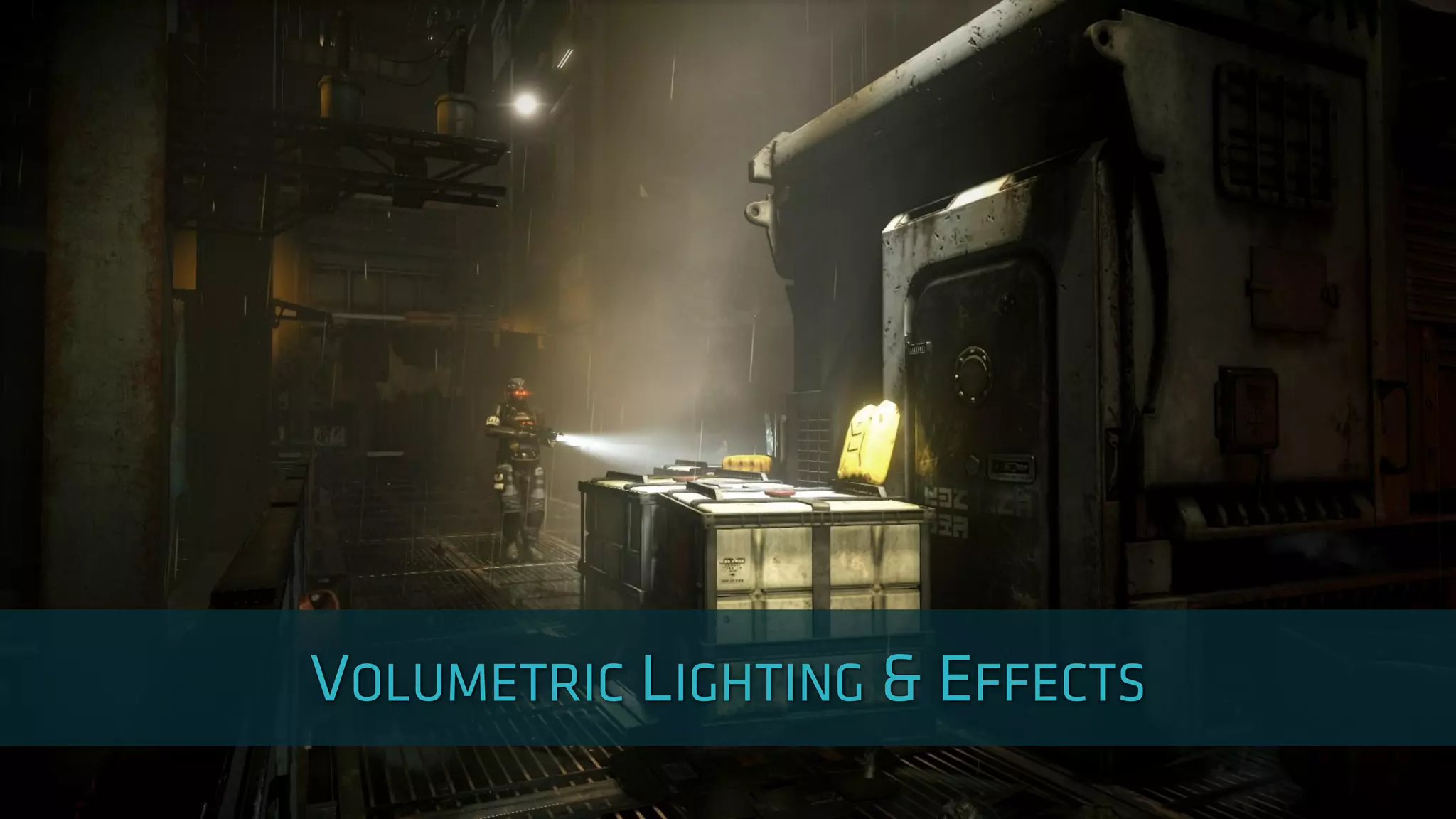

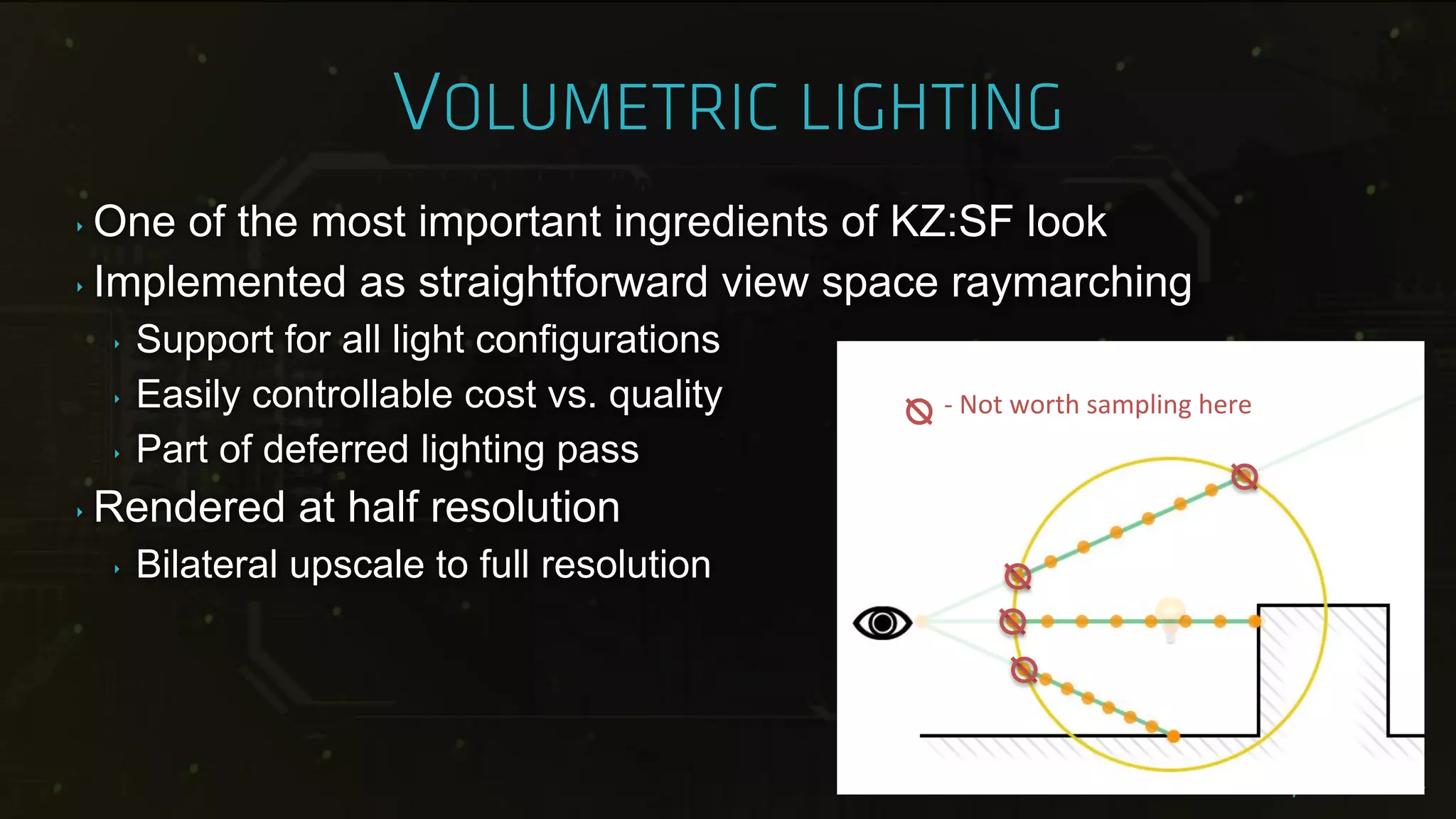

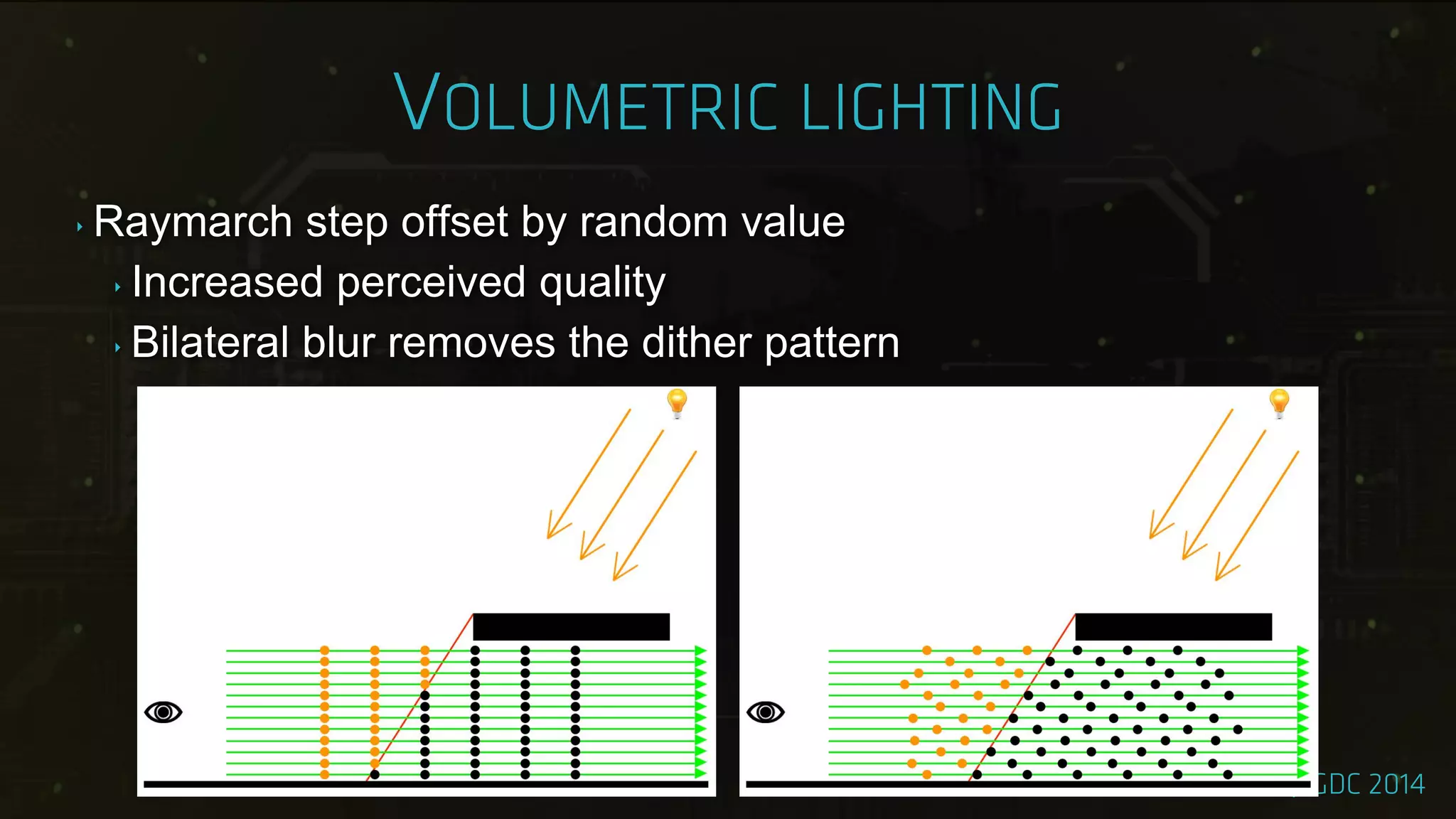

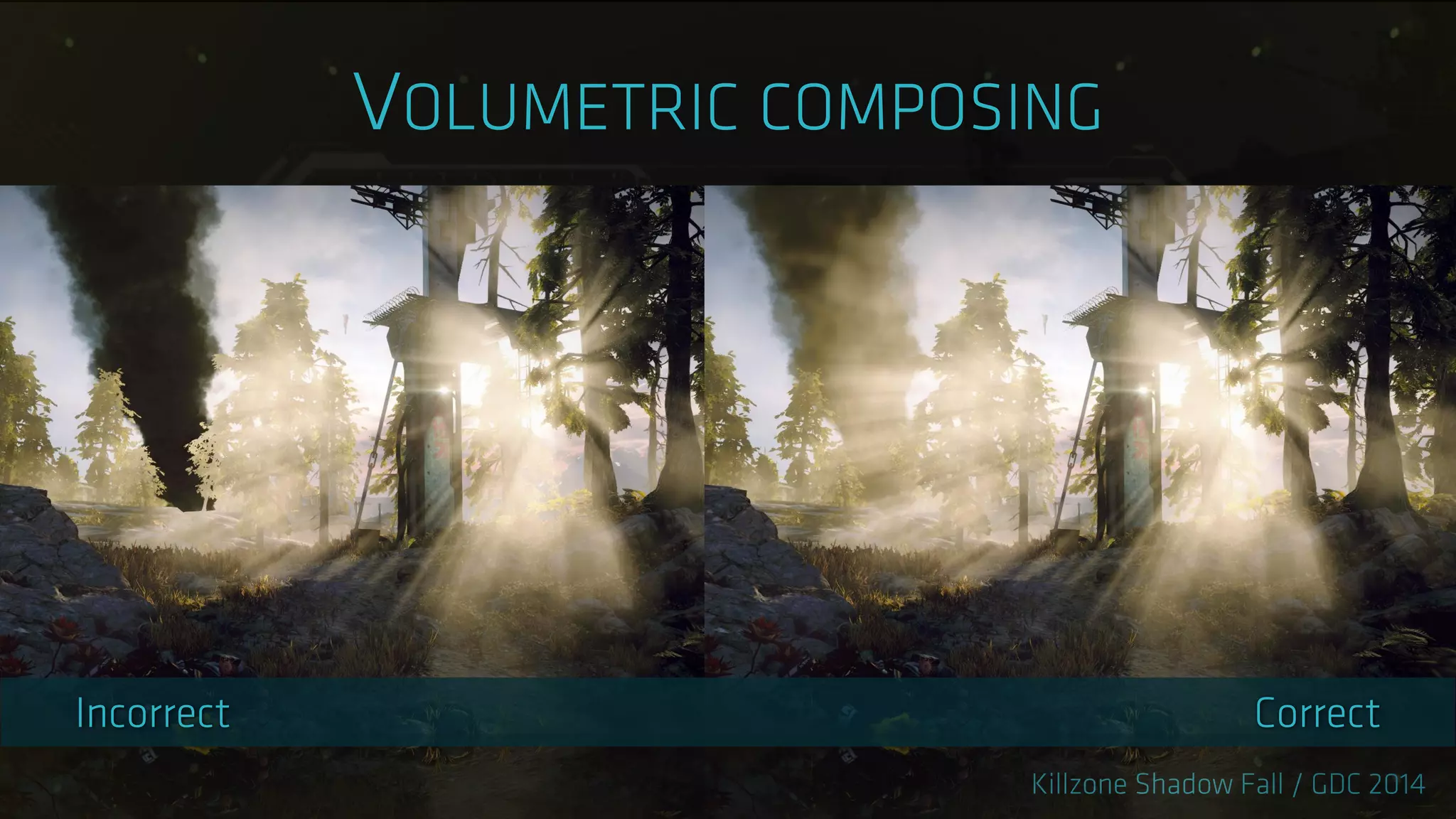

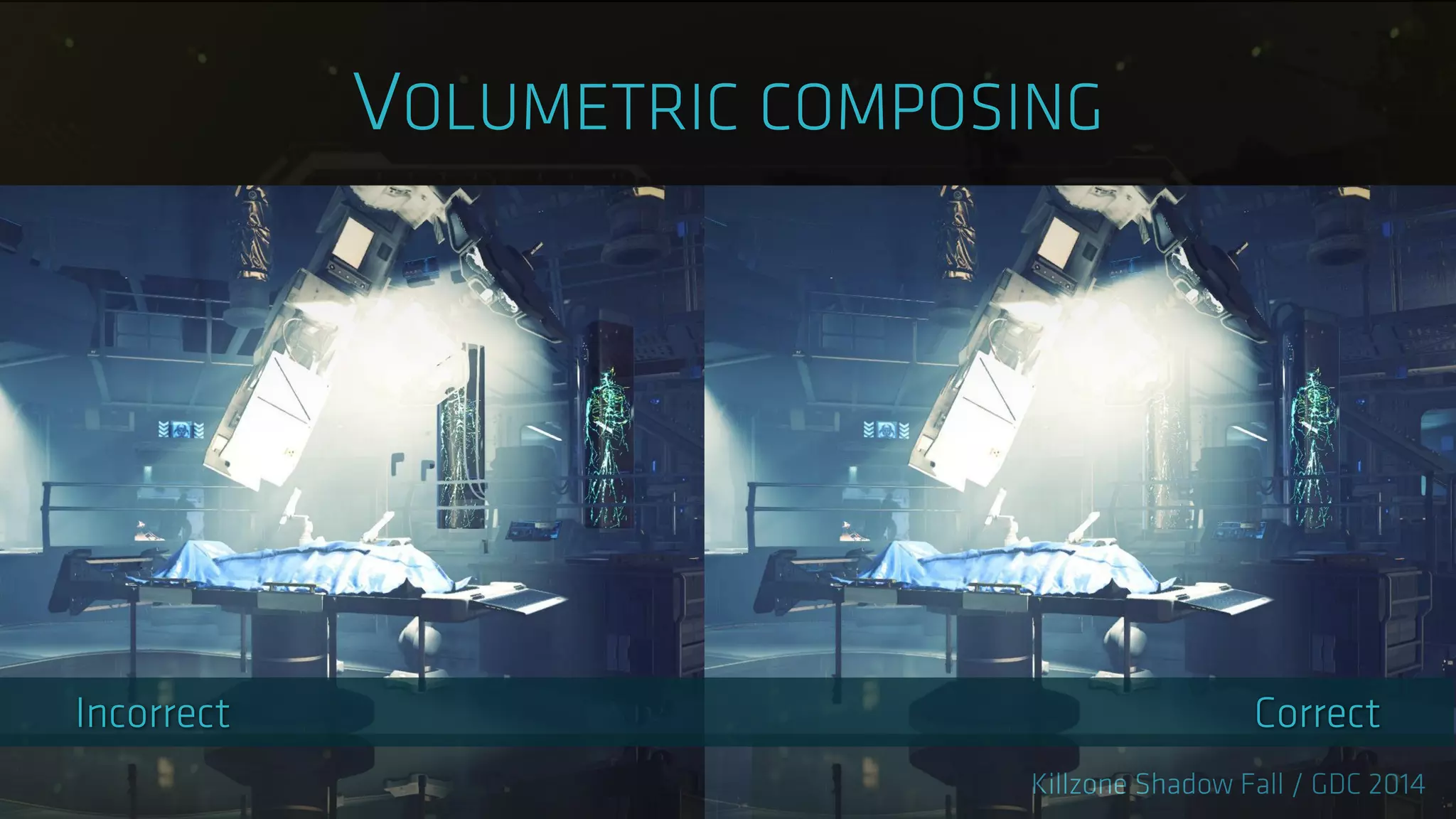

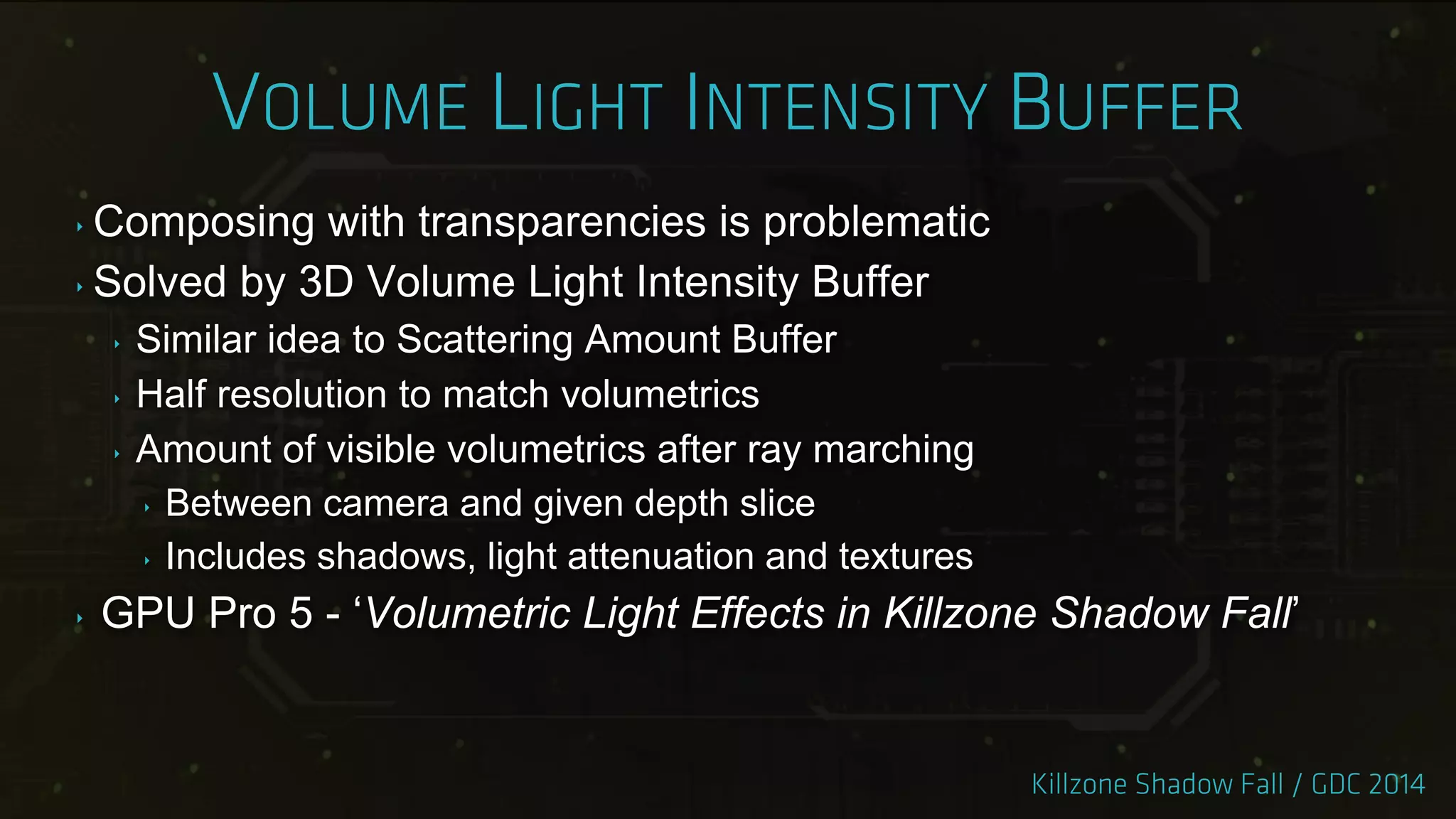

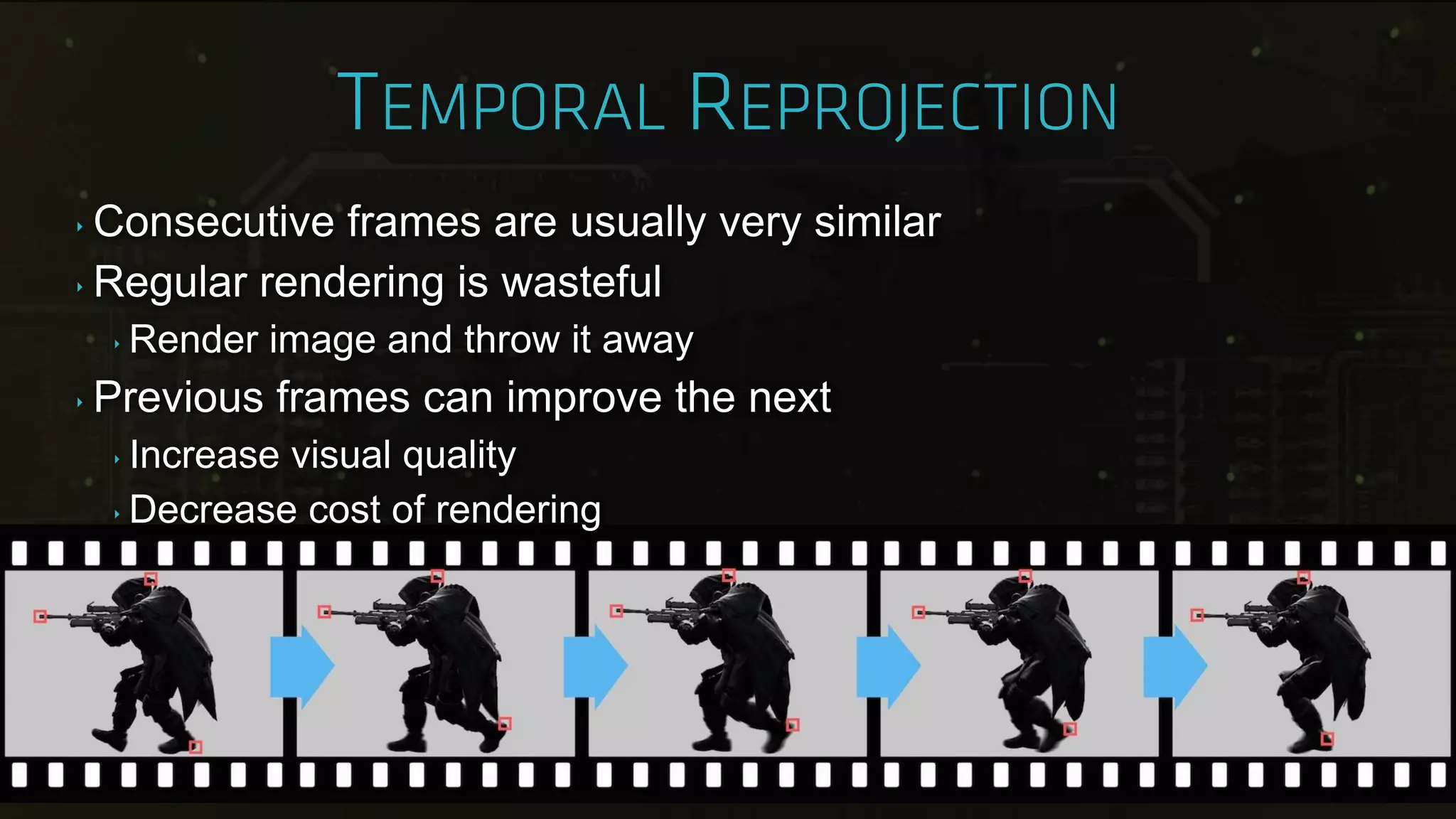

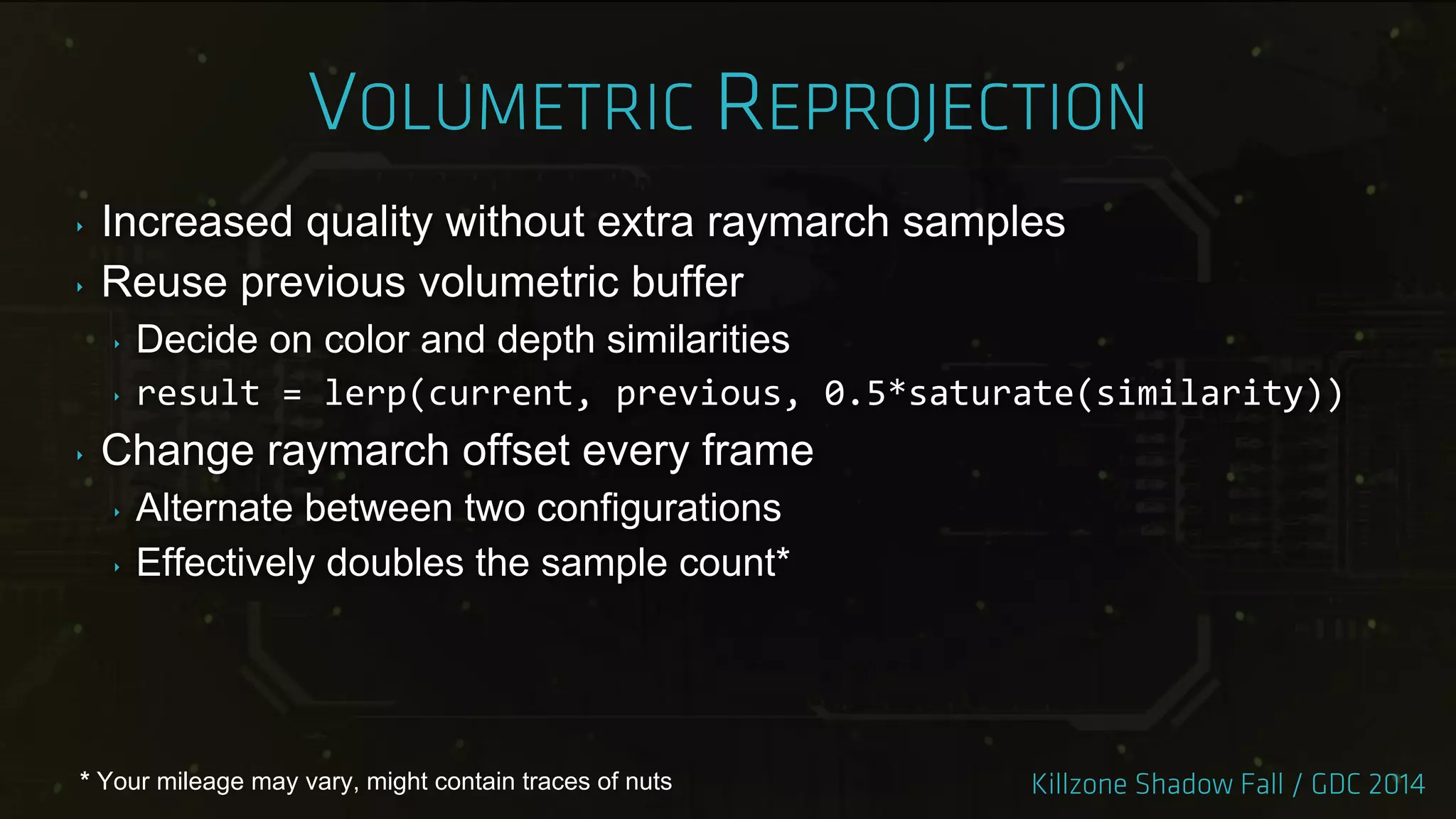

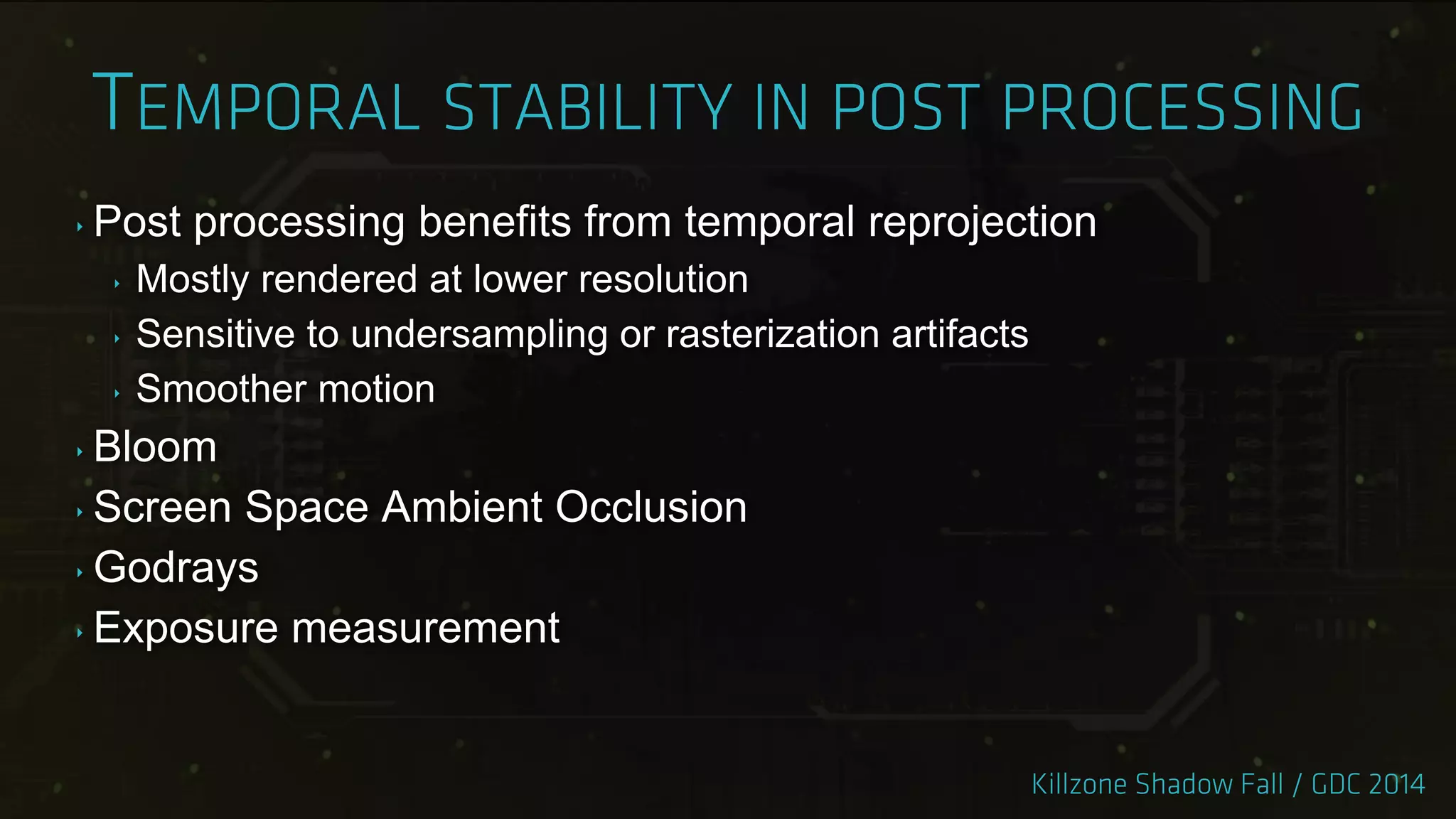

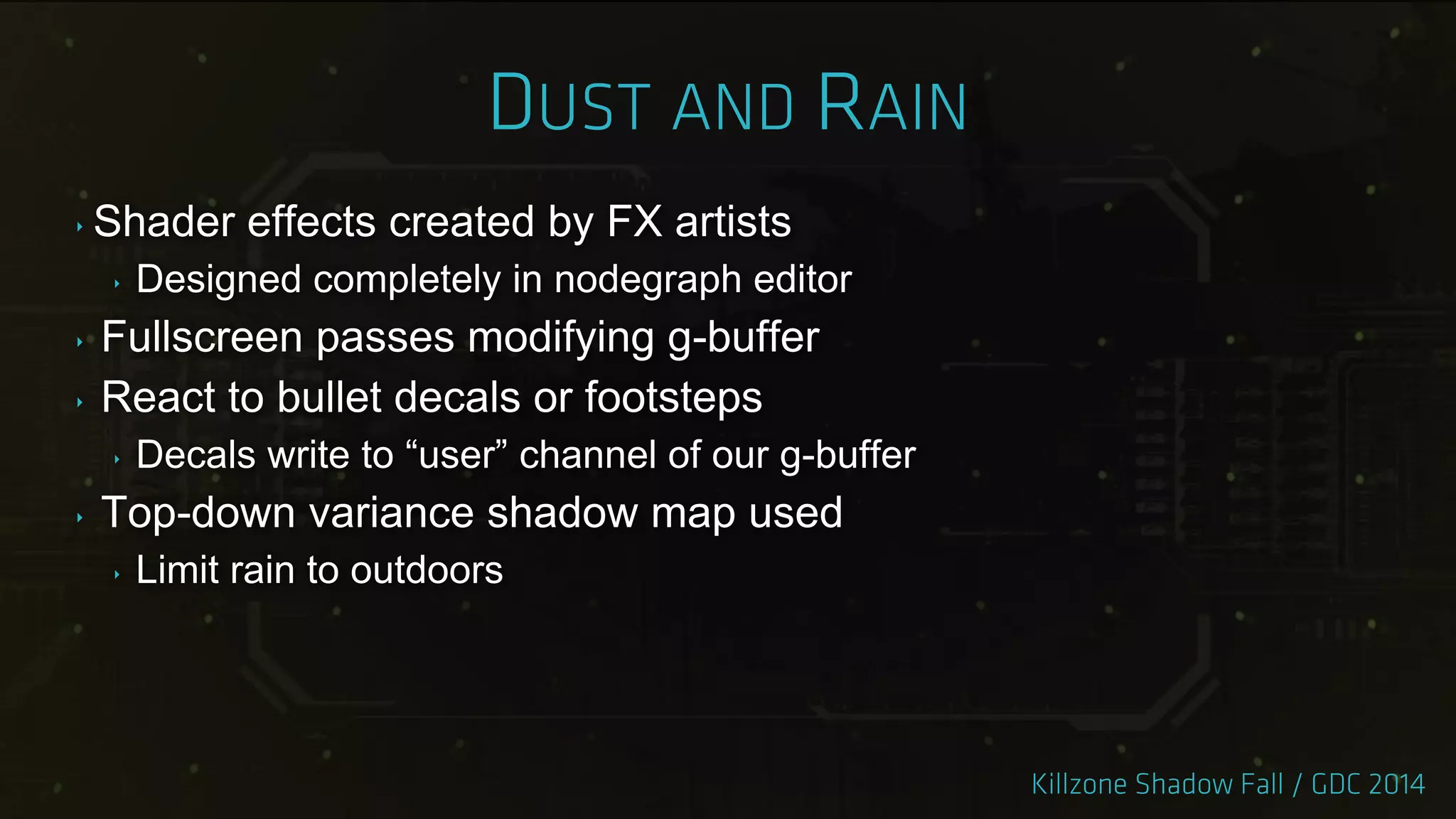

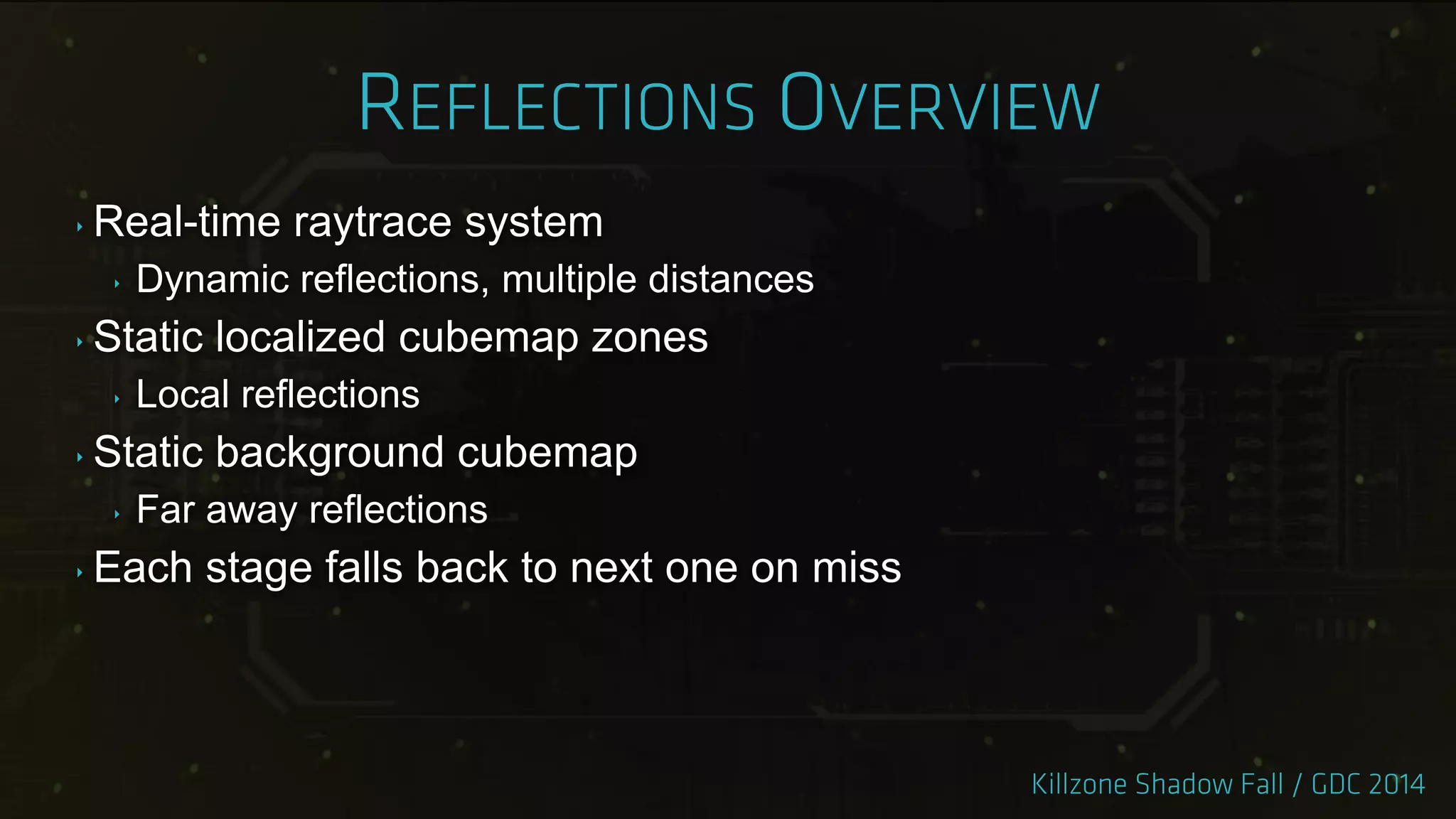

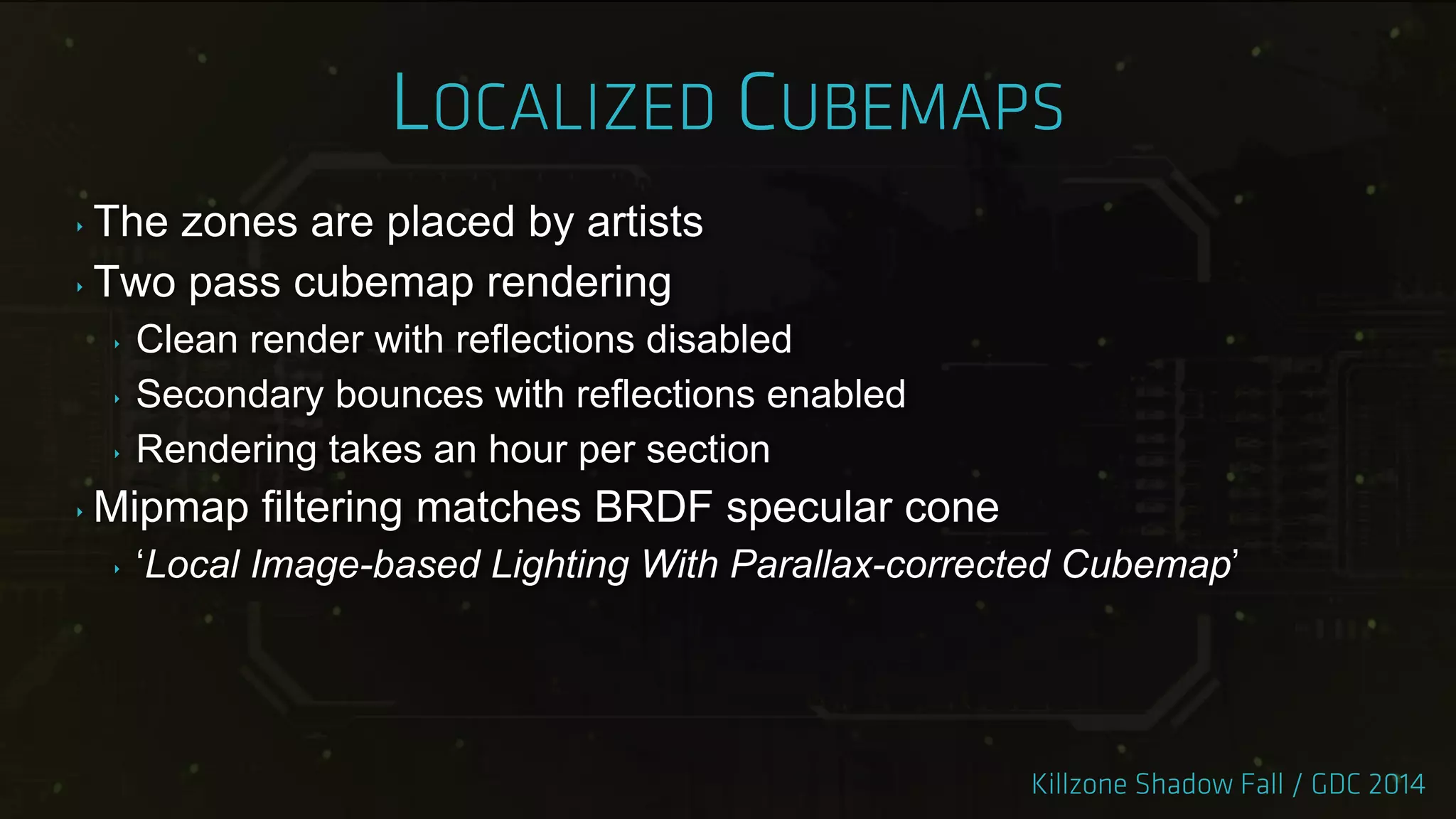

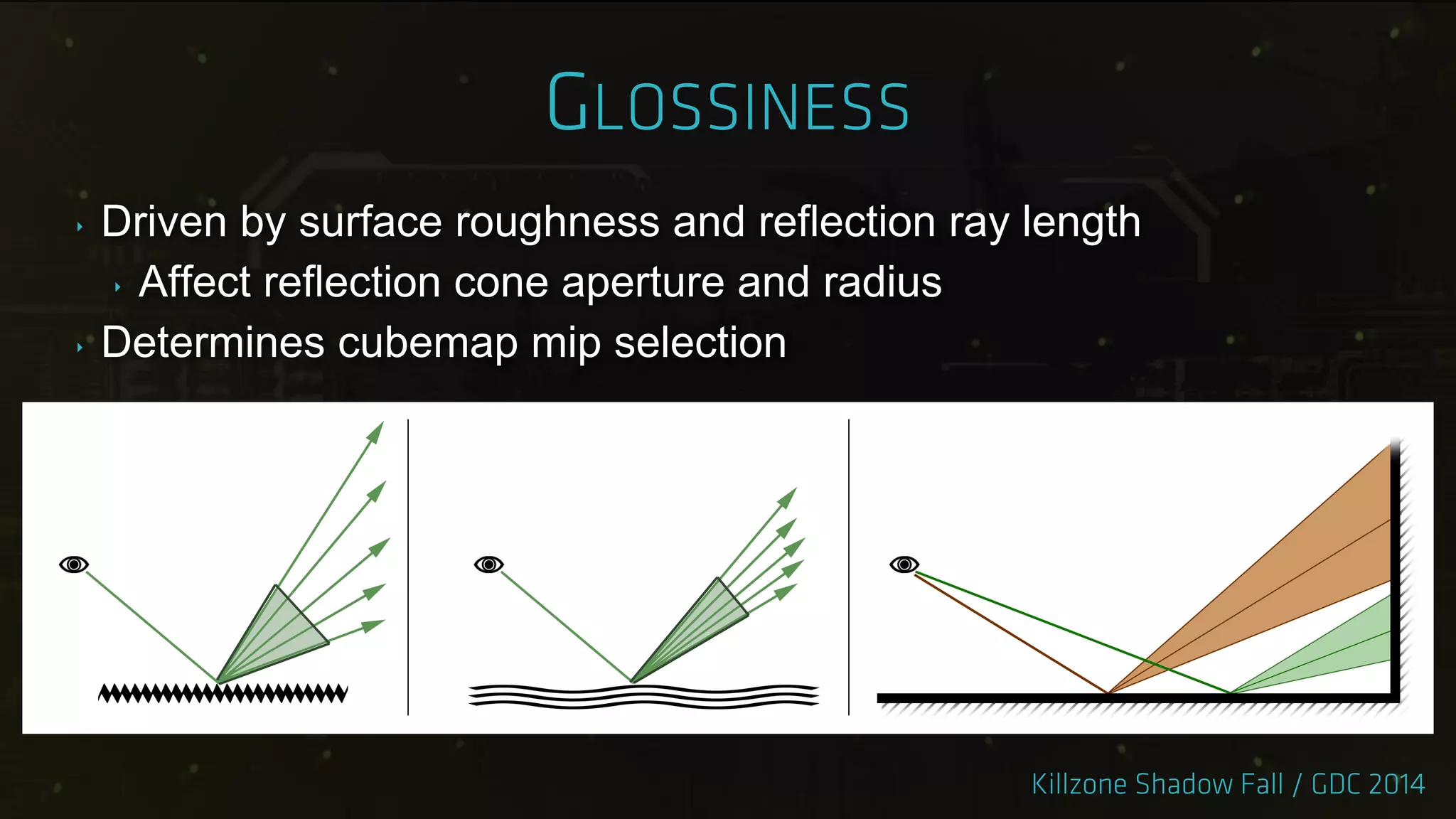

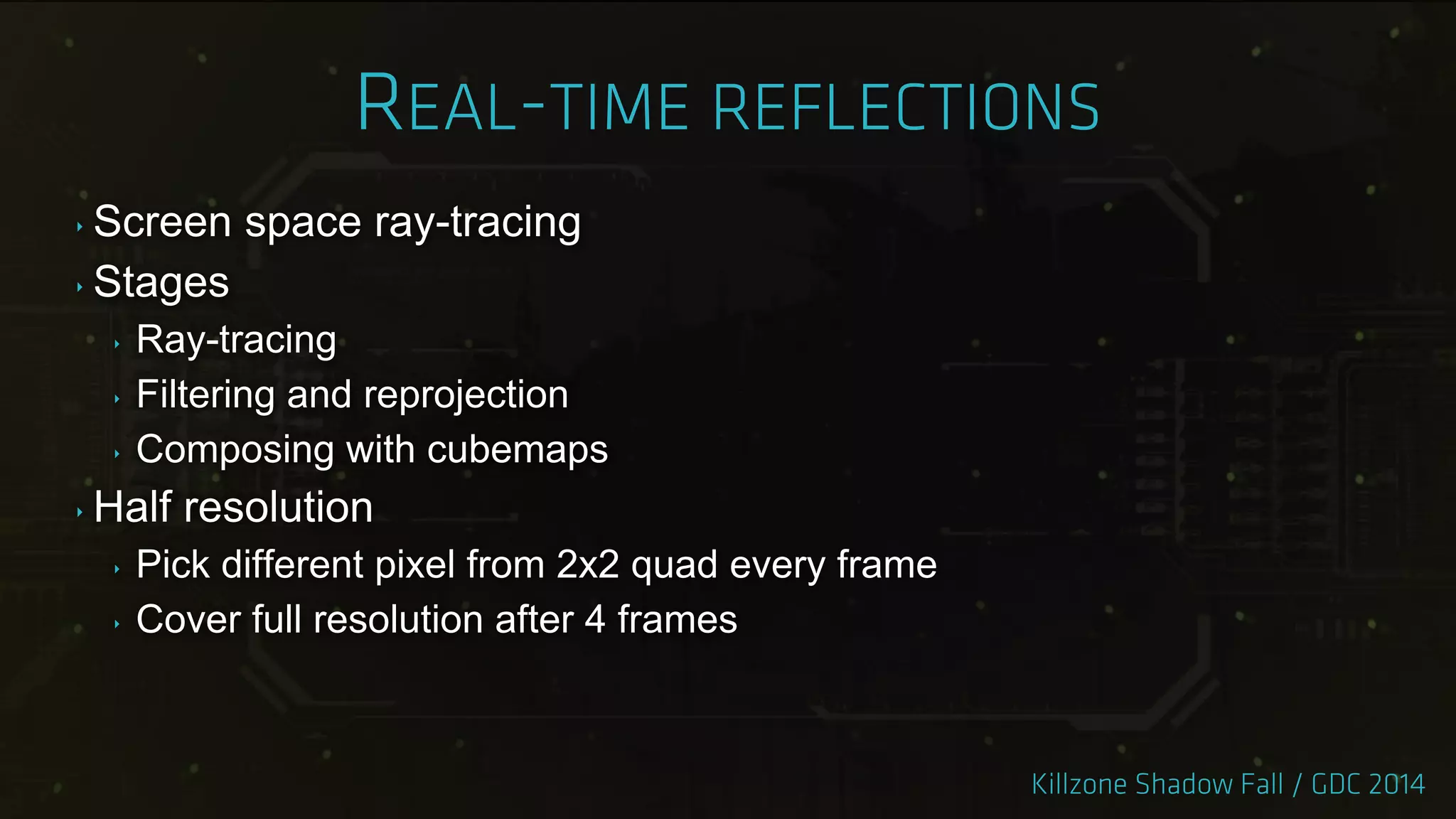

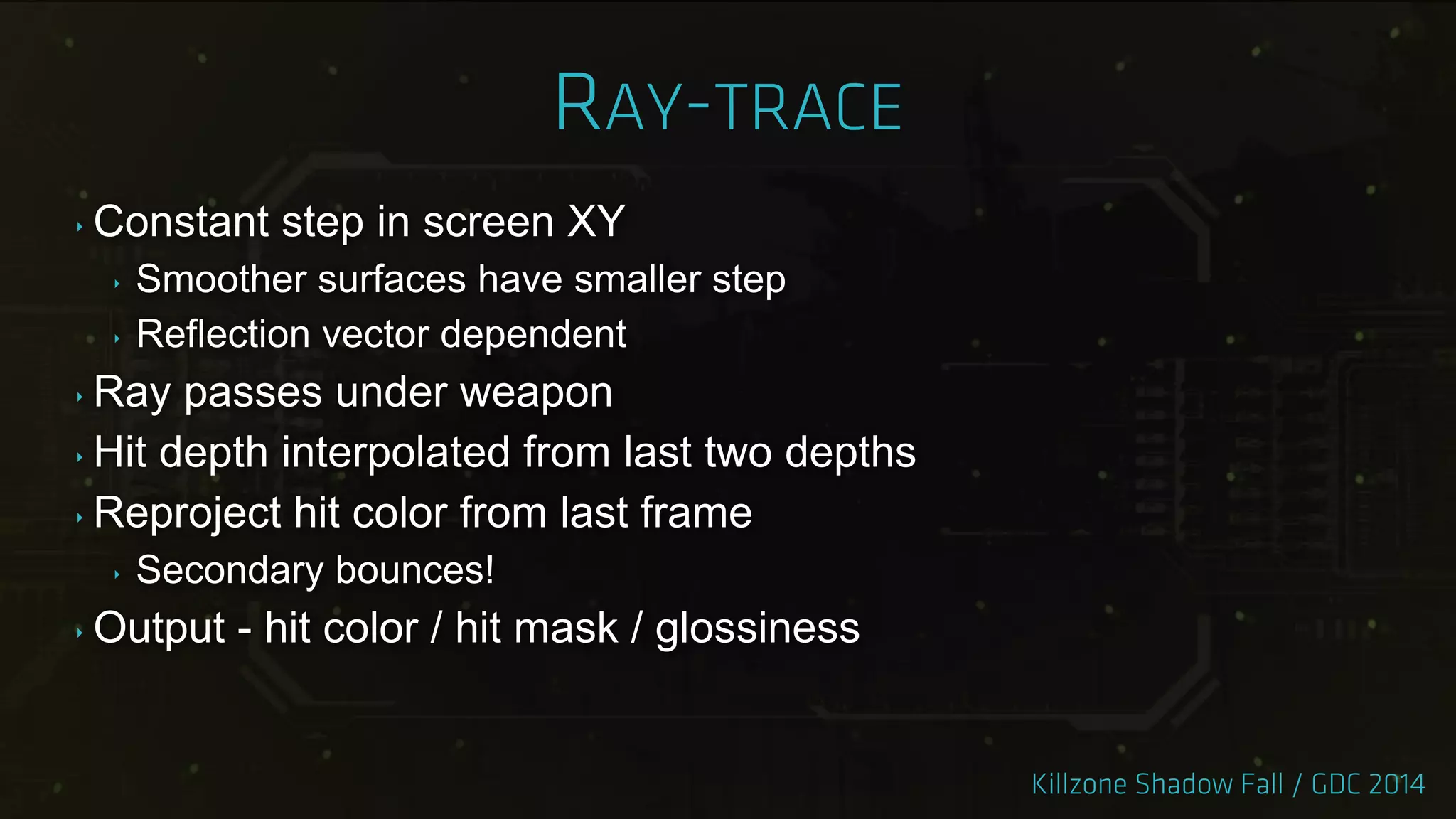

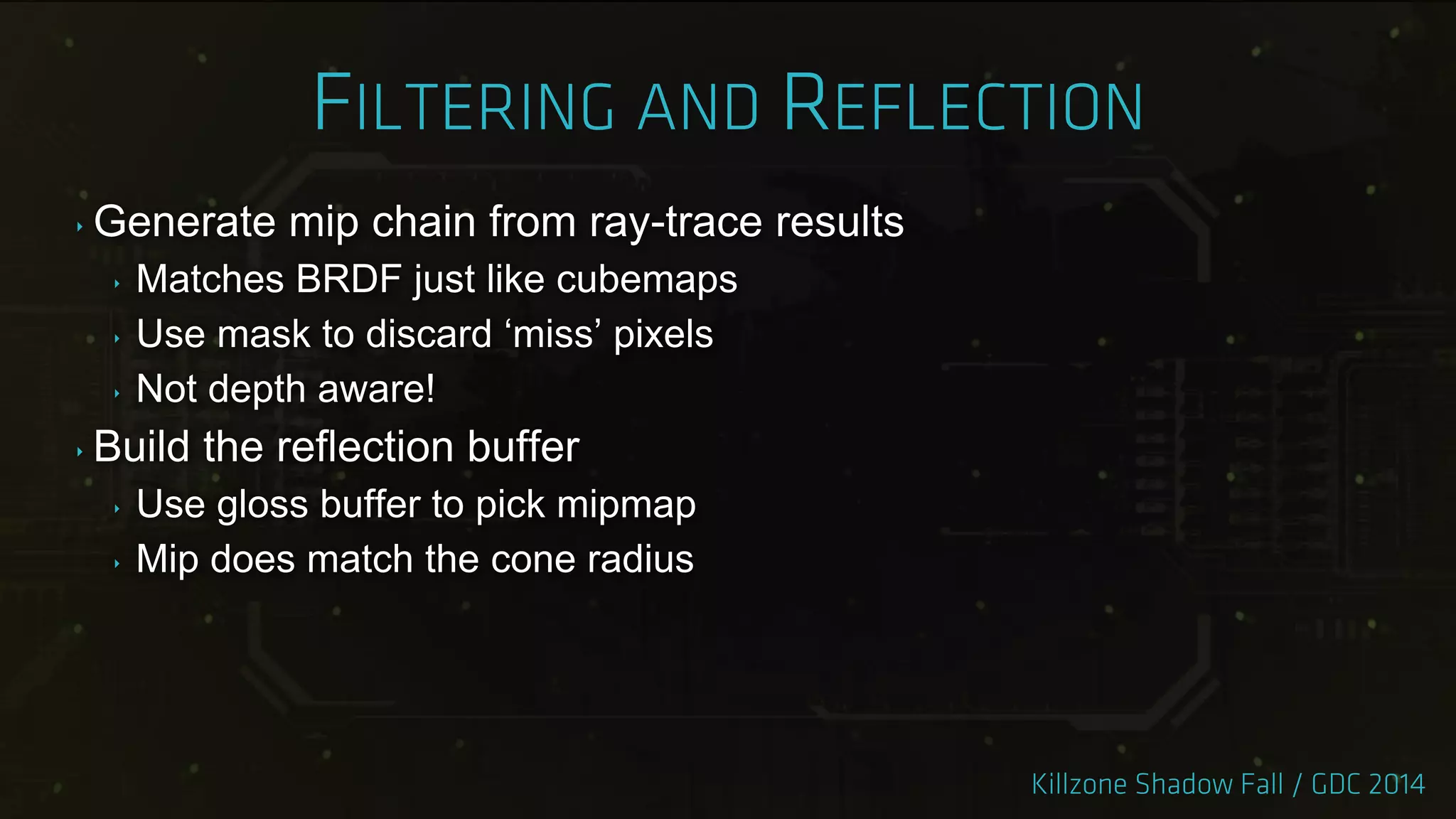

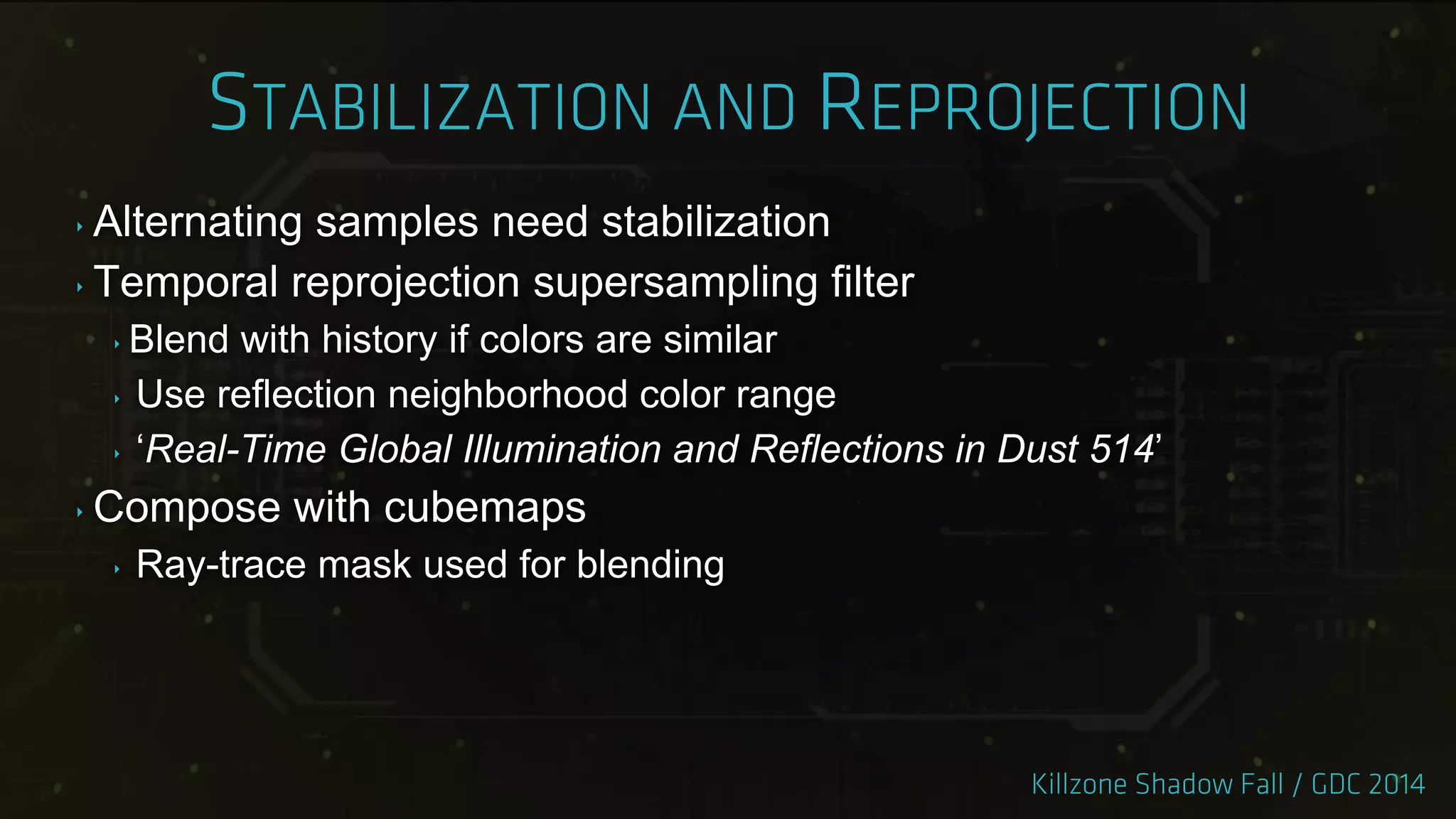

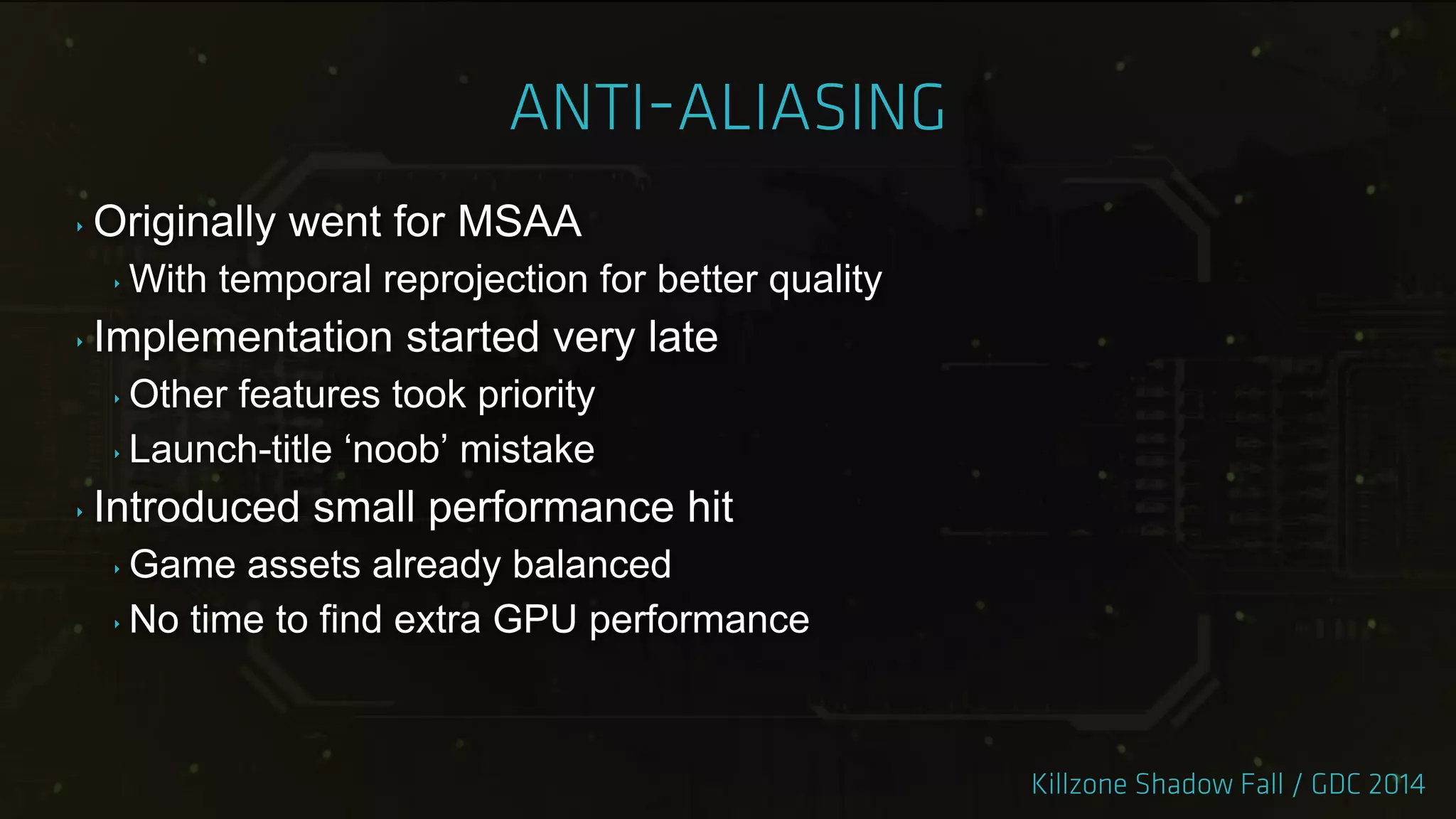

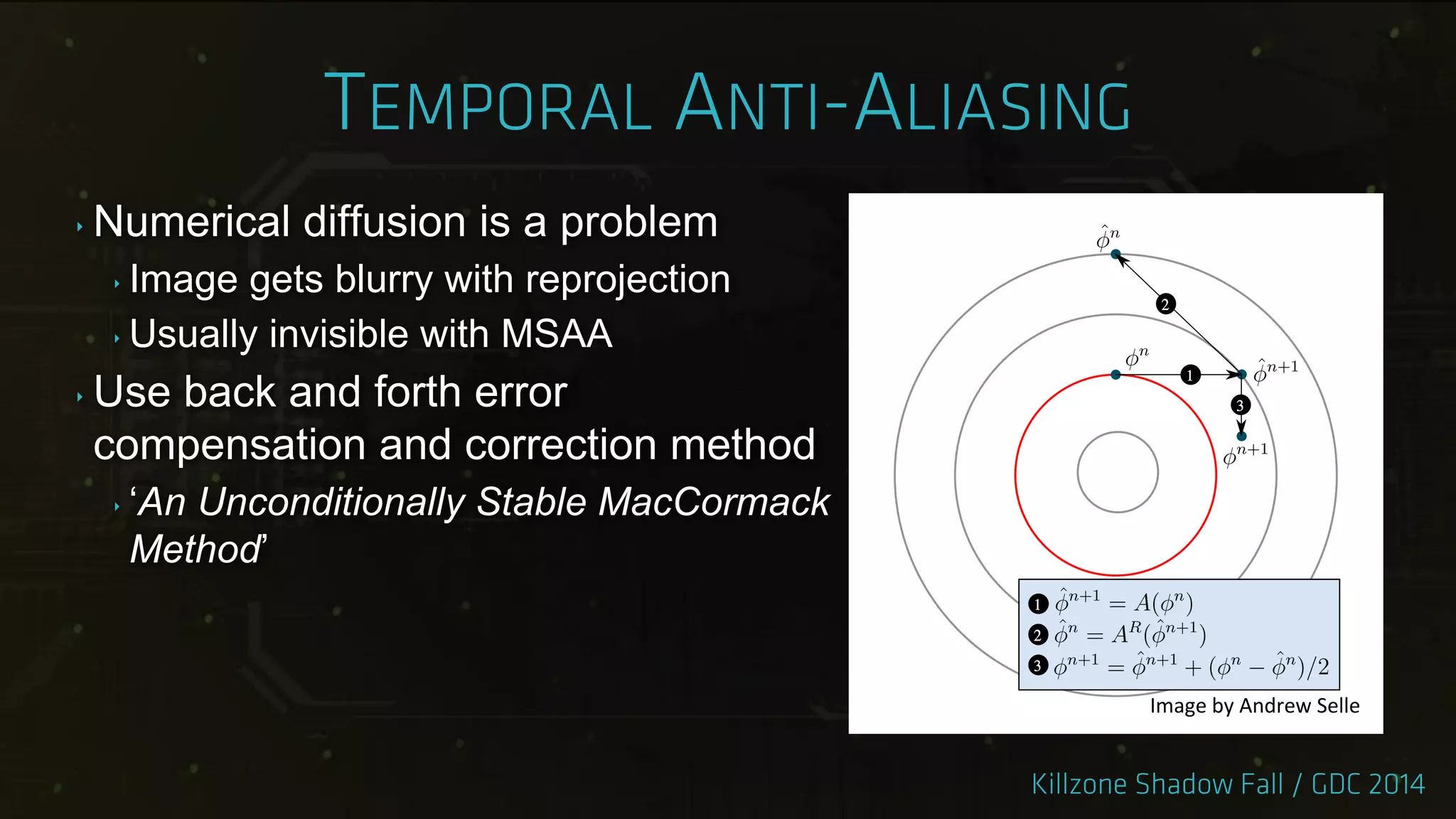

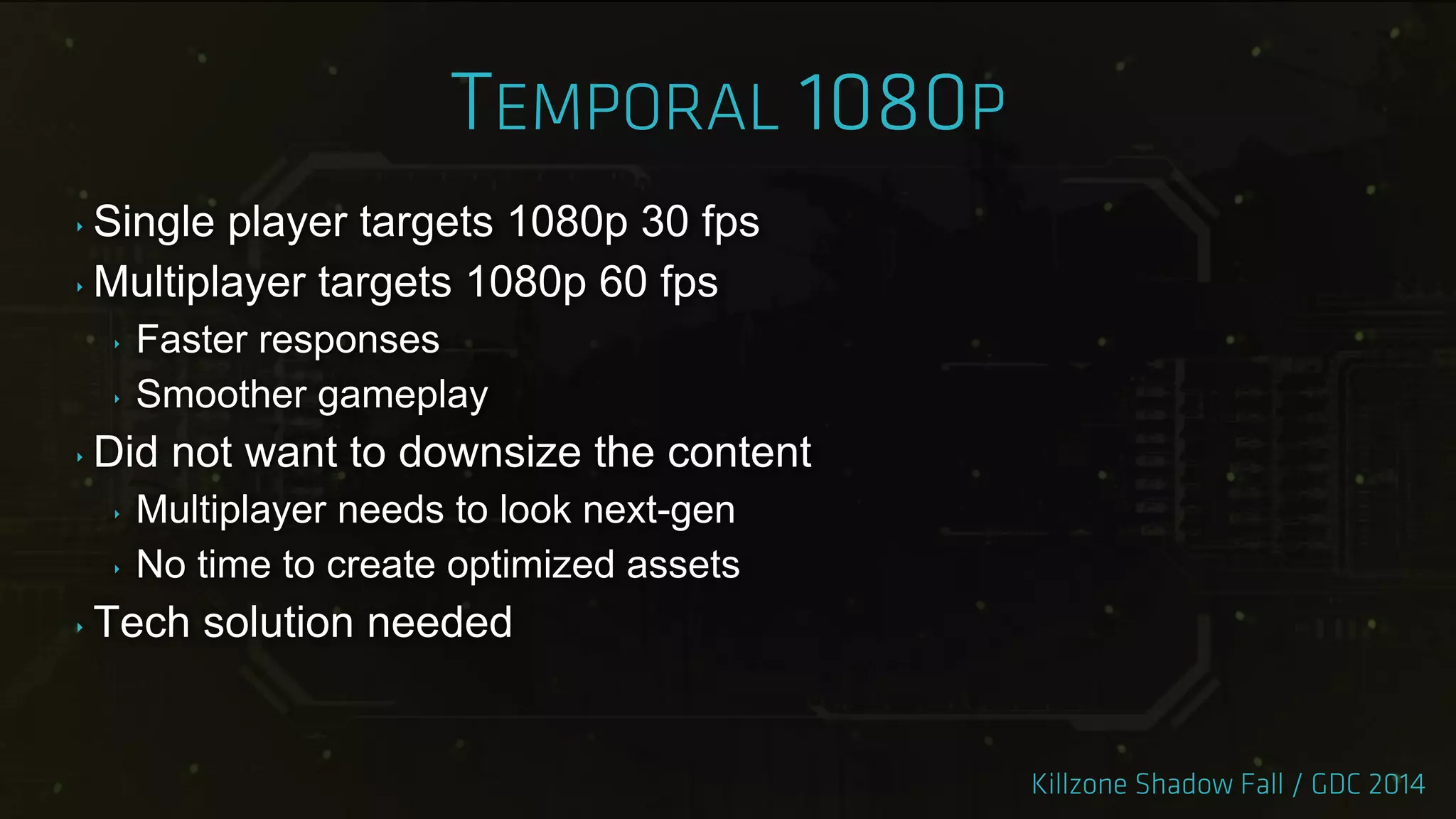

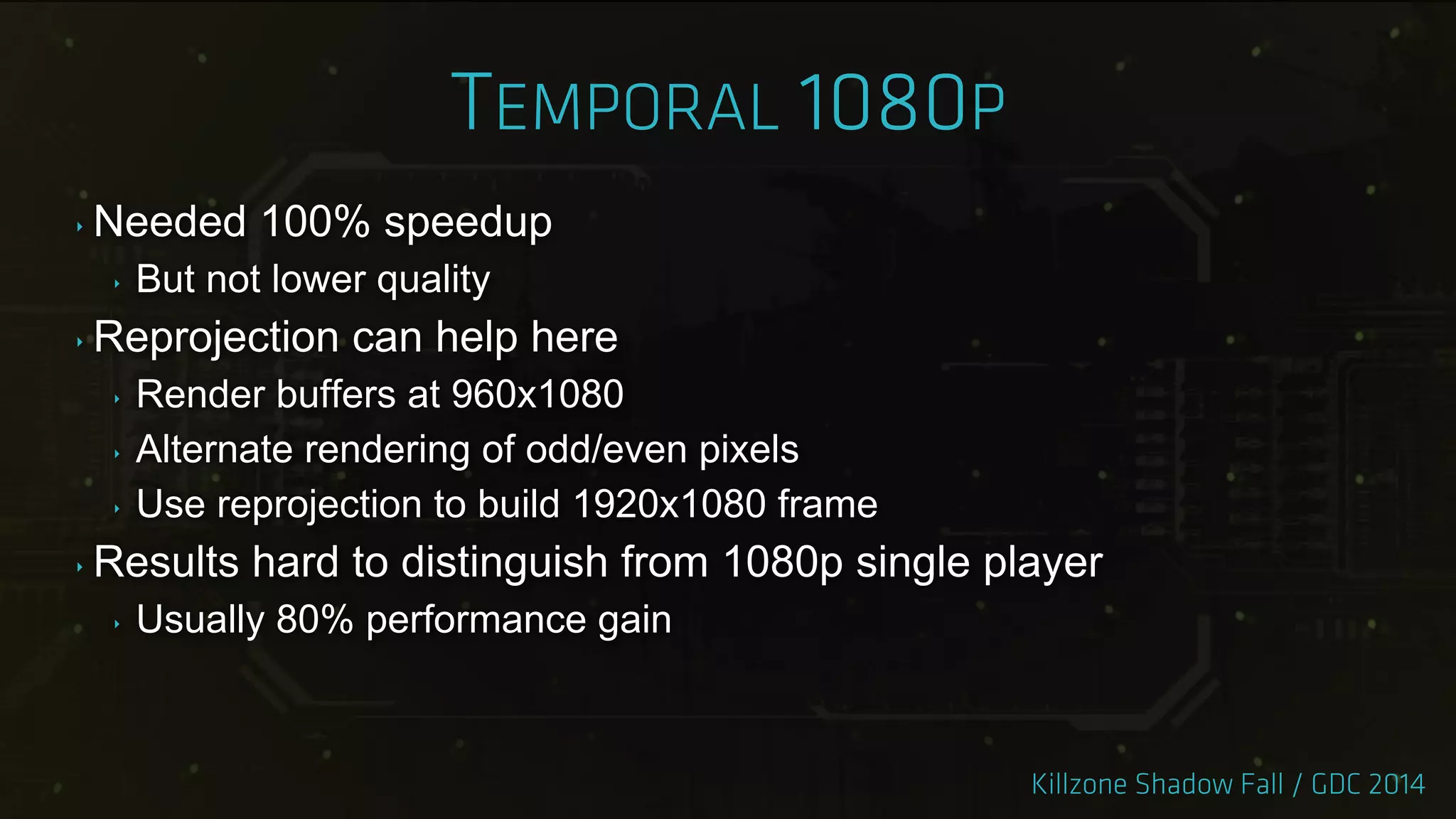

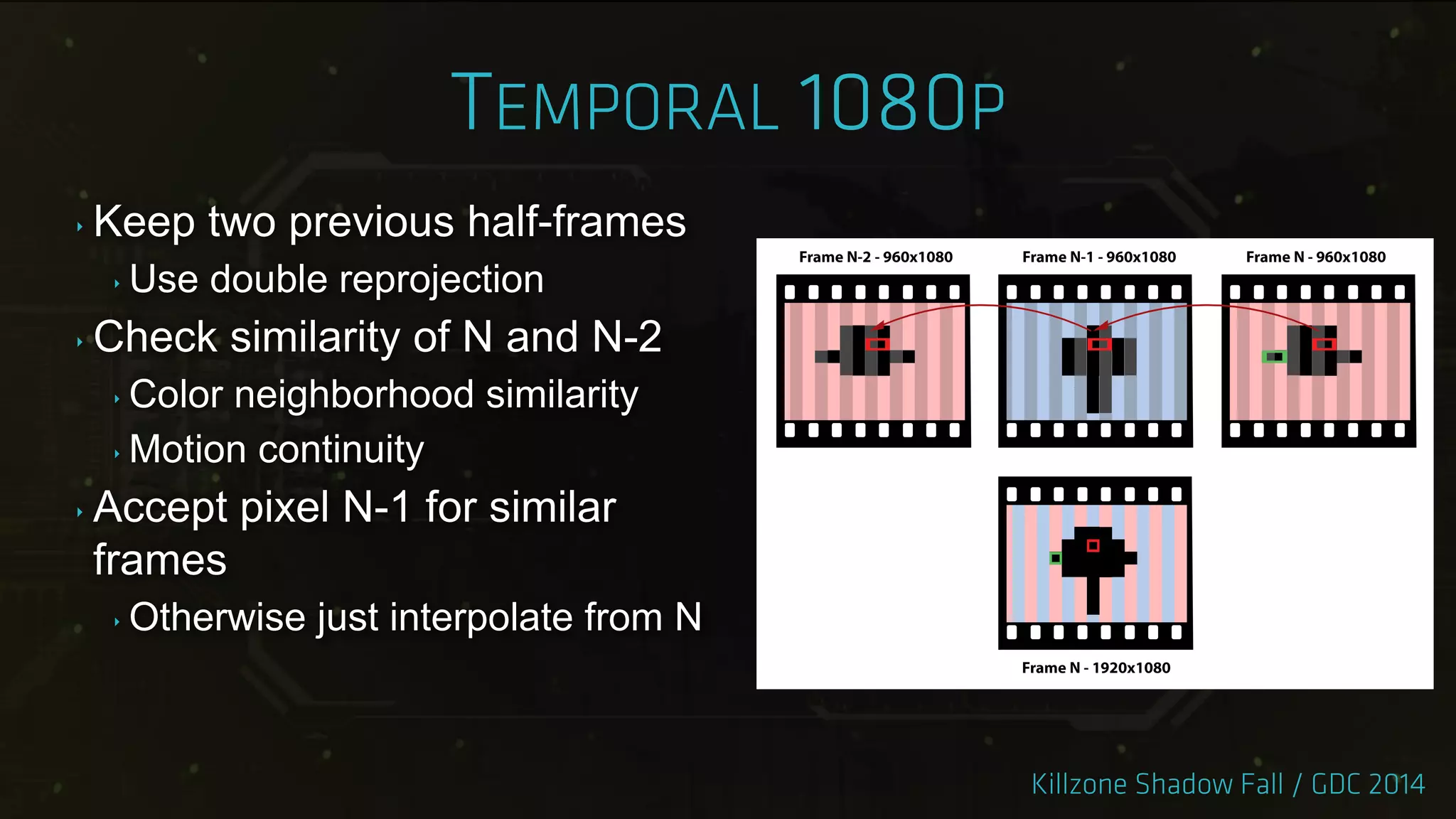

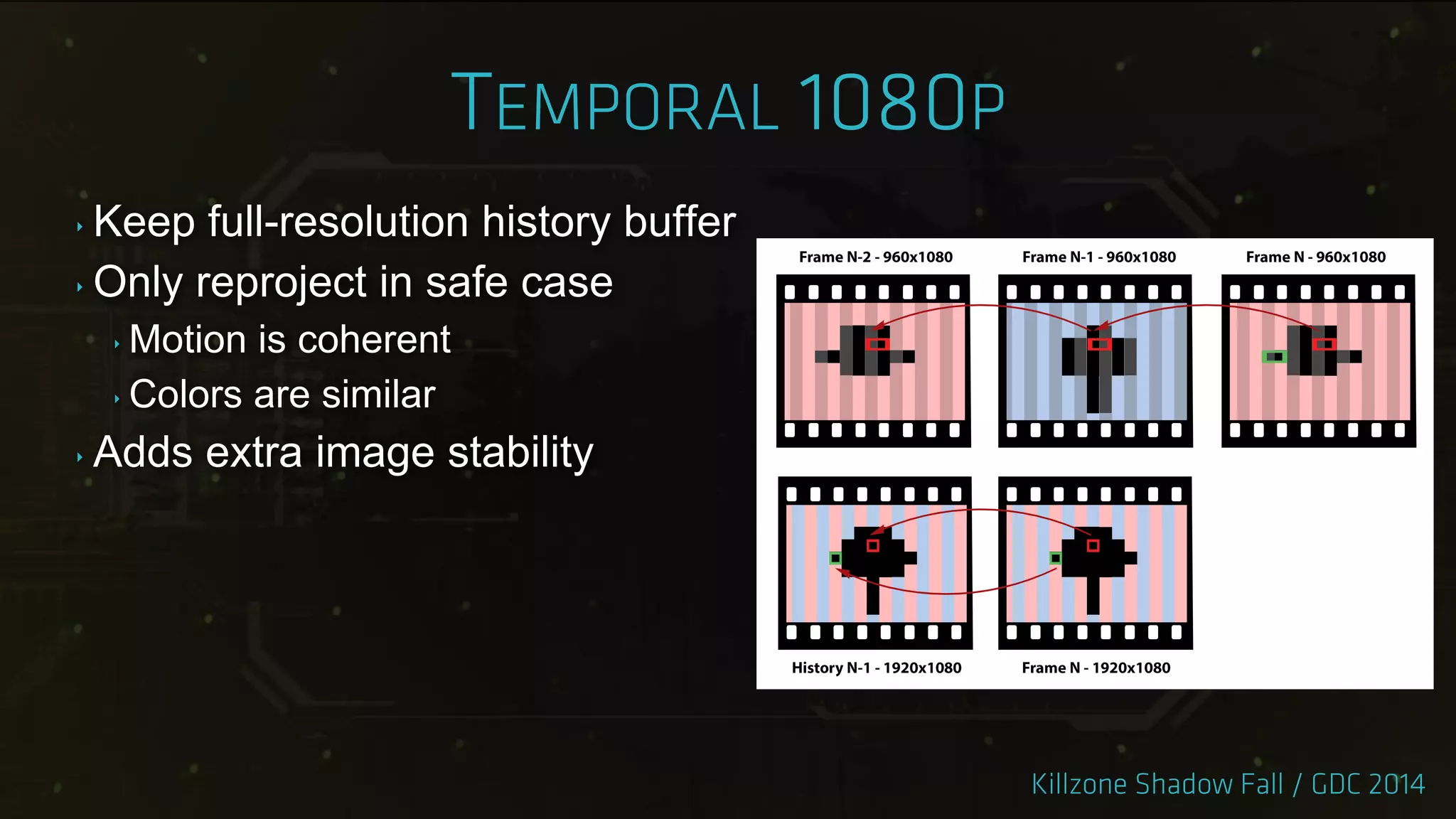

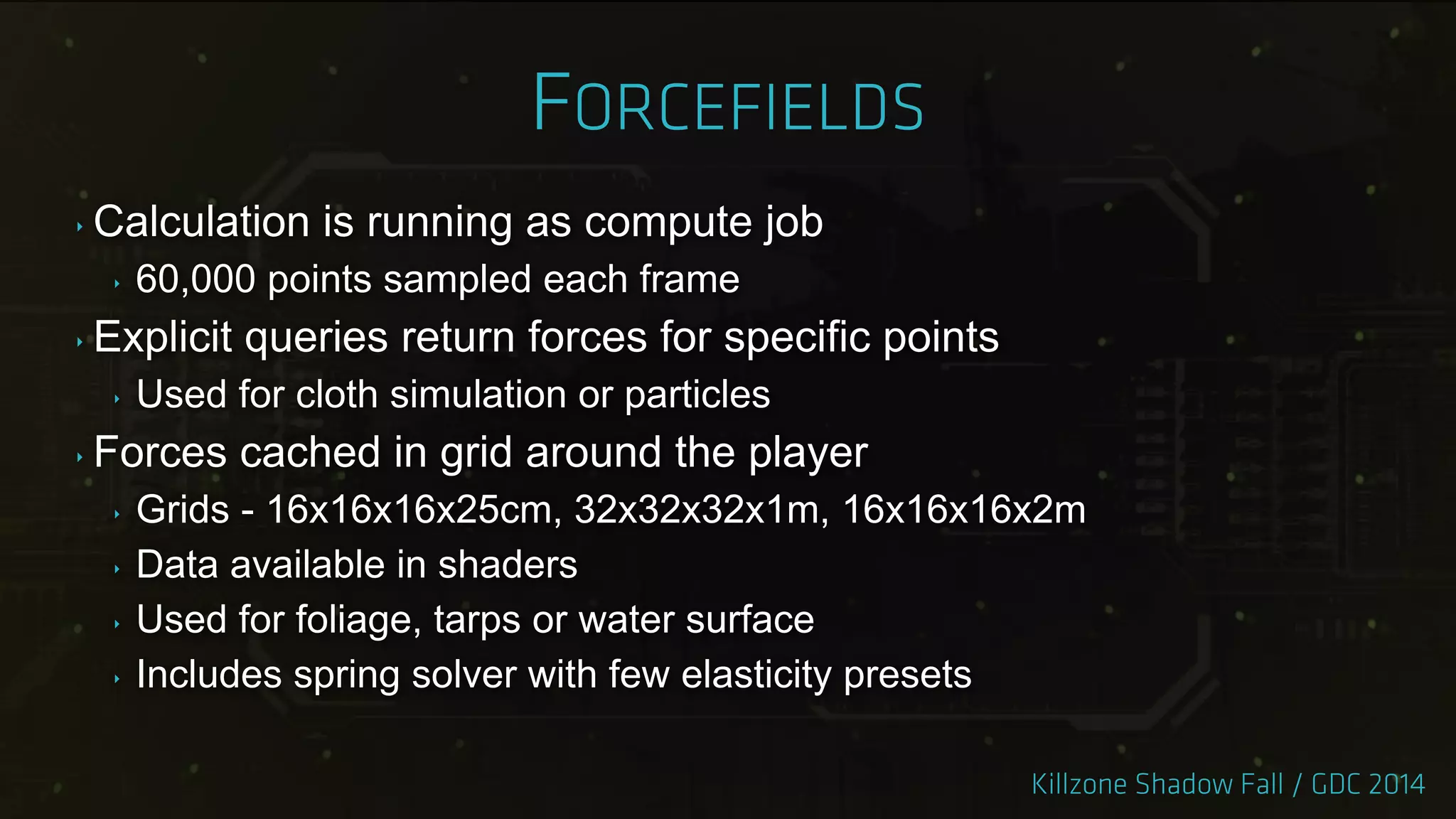

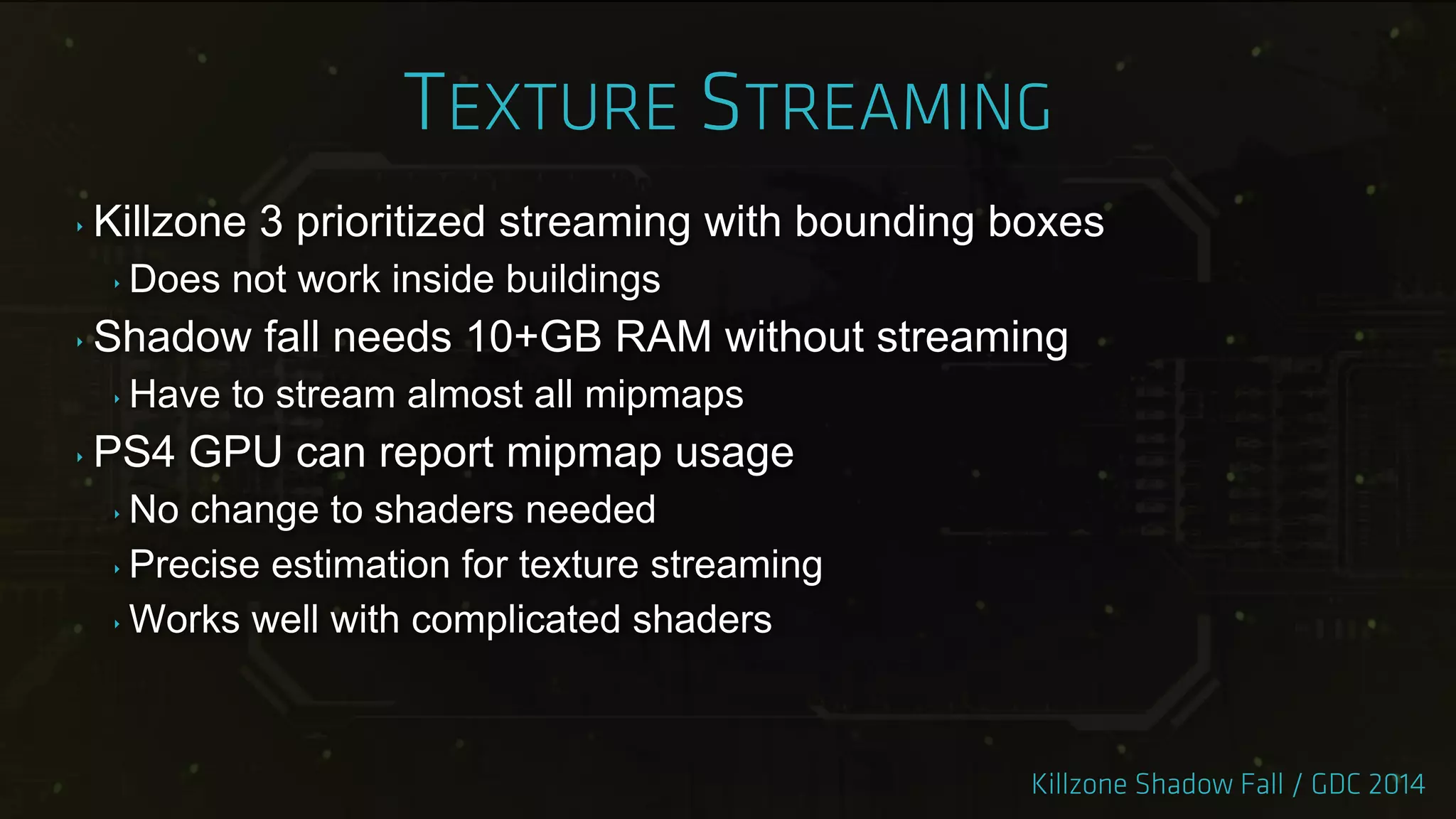

This document covers the development of Killzone: Shadow Fall and the technical advances behind it, focusing on the next-gen graphics and game design strategies used to improve visual quality and the gameplay experience. Key topics include improvements in lighting, shading, and volumetrics, a novel approach to rendering and dynamic reflections, and the challenges faced during production. It also discusses how these techniques were combined to create a visually immersive world within performance constraints.