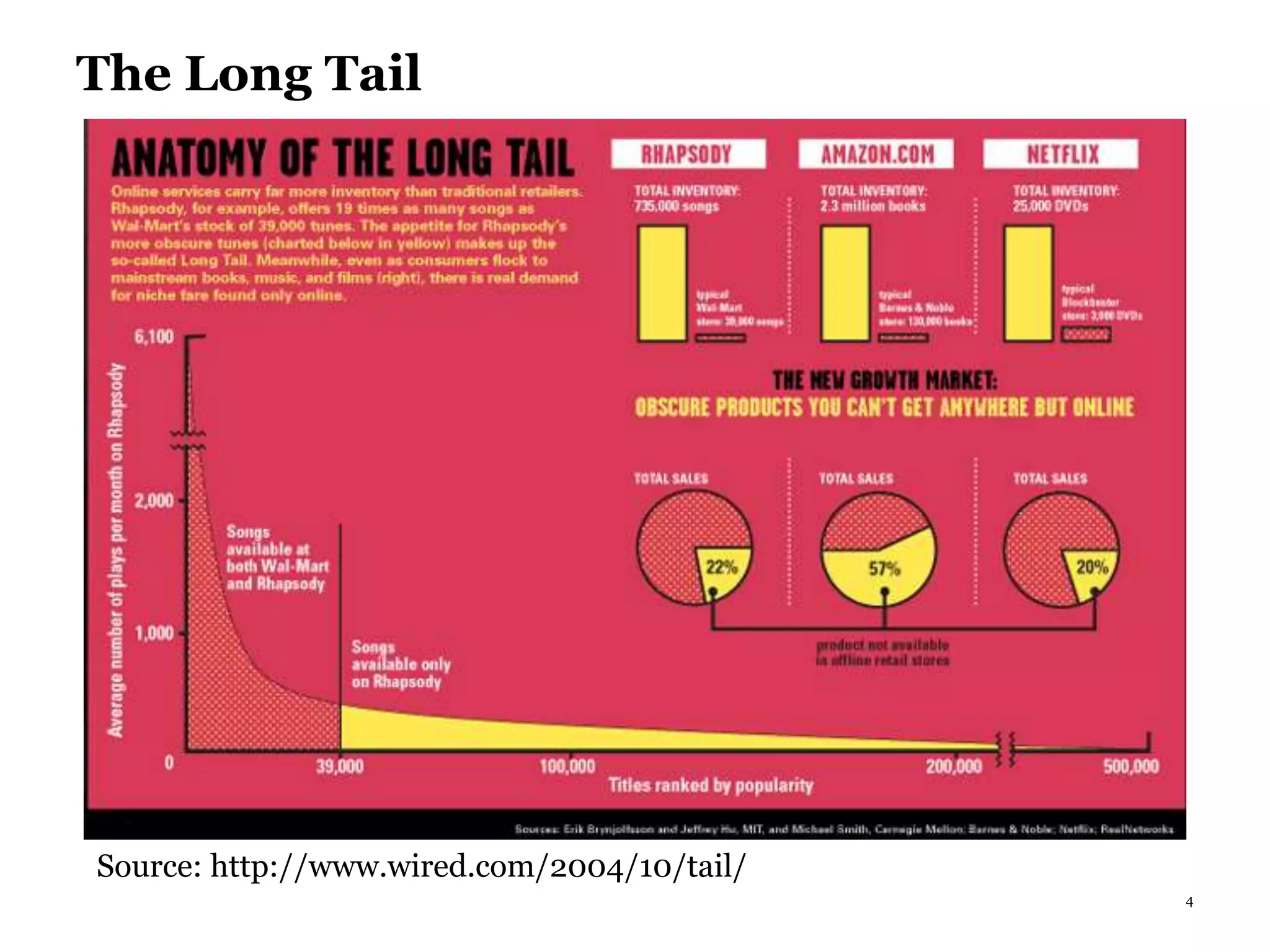

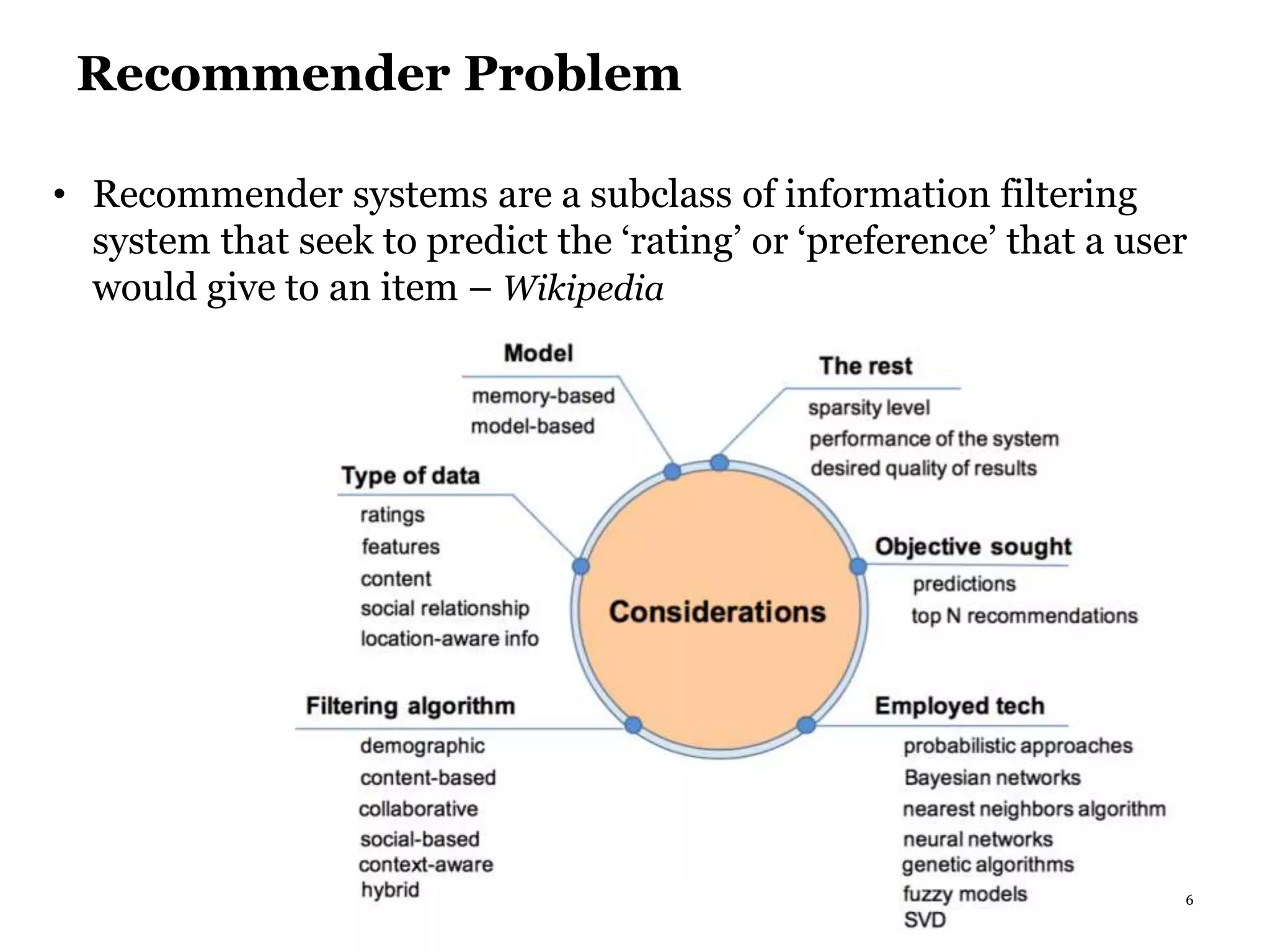

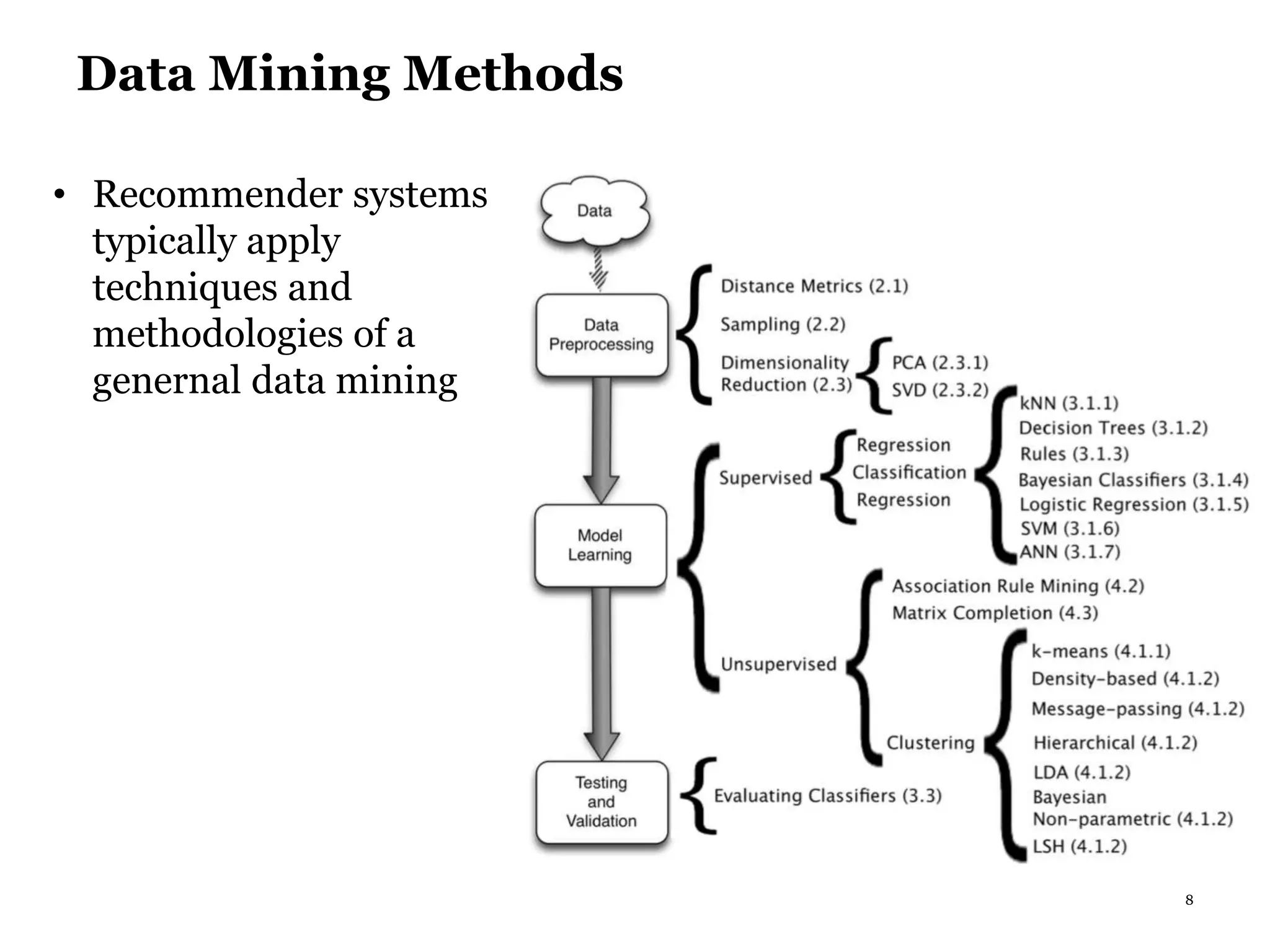

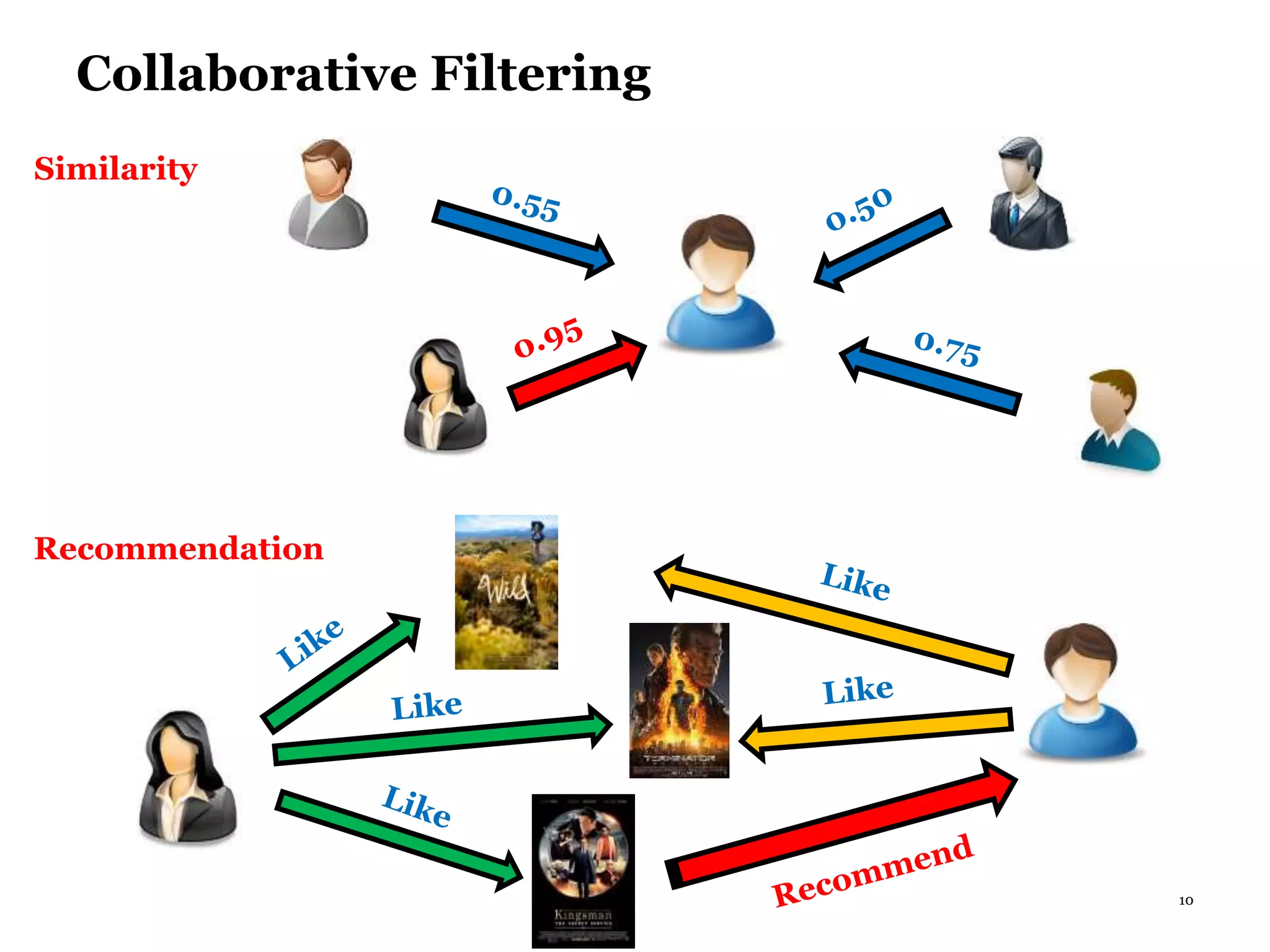

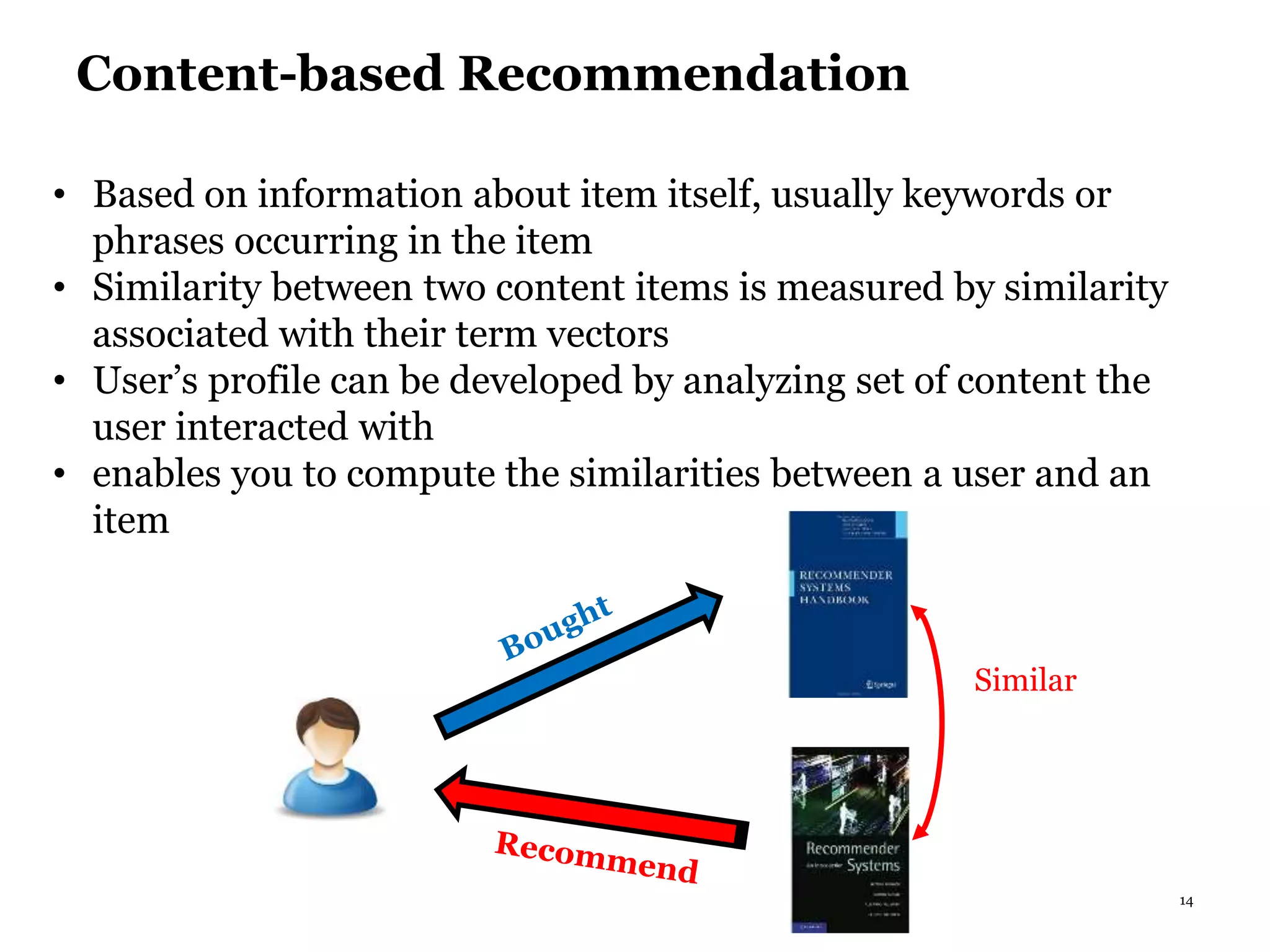

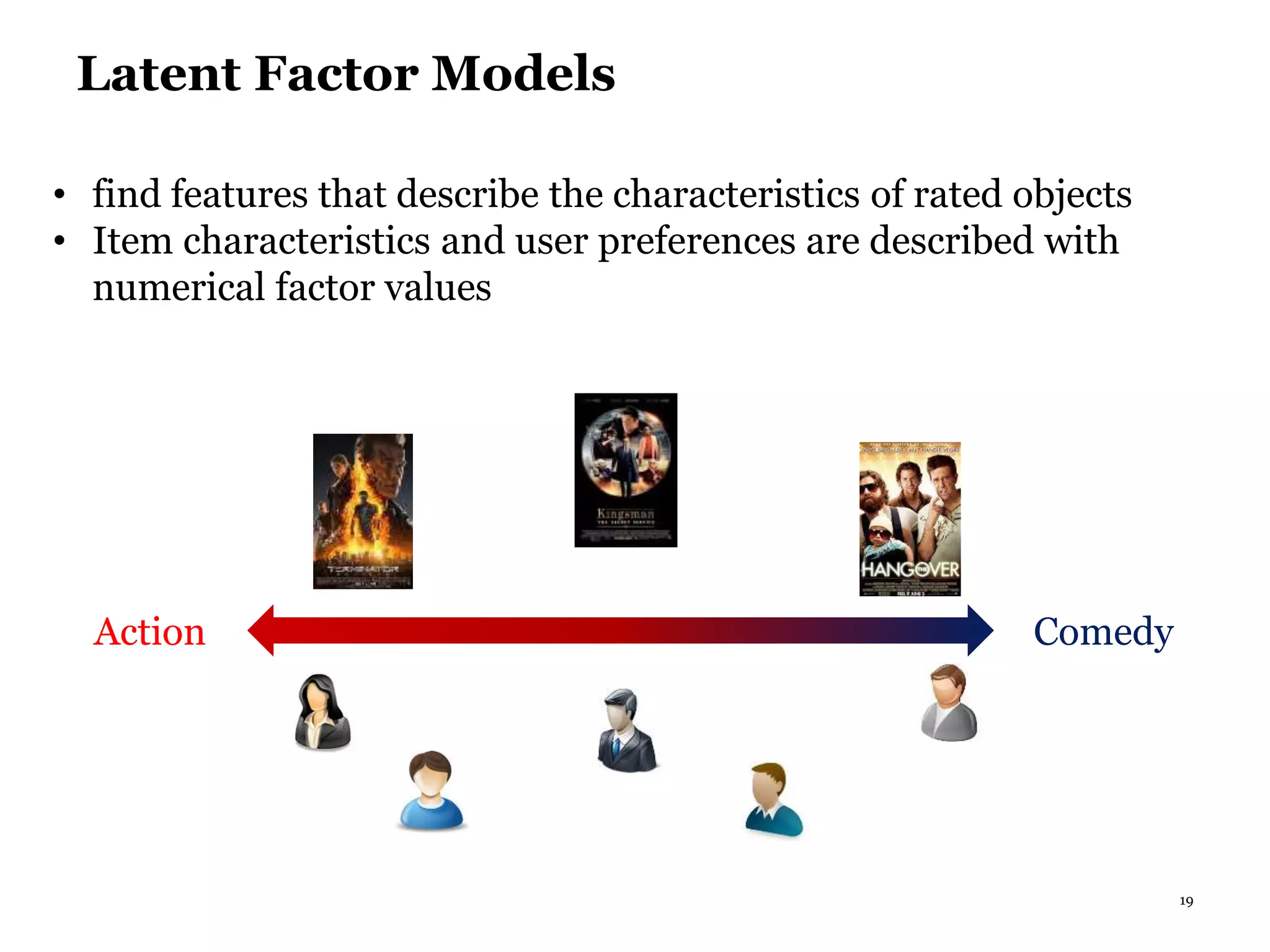

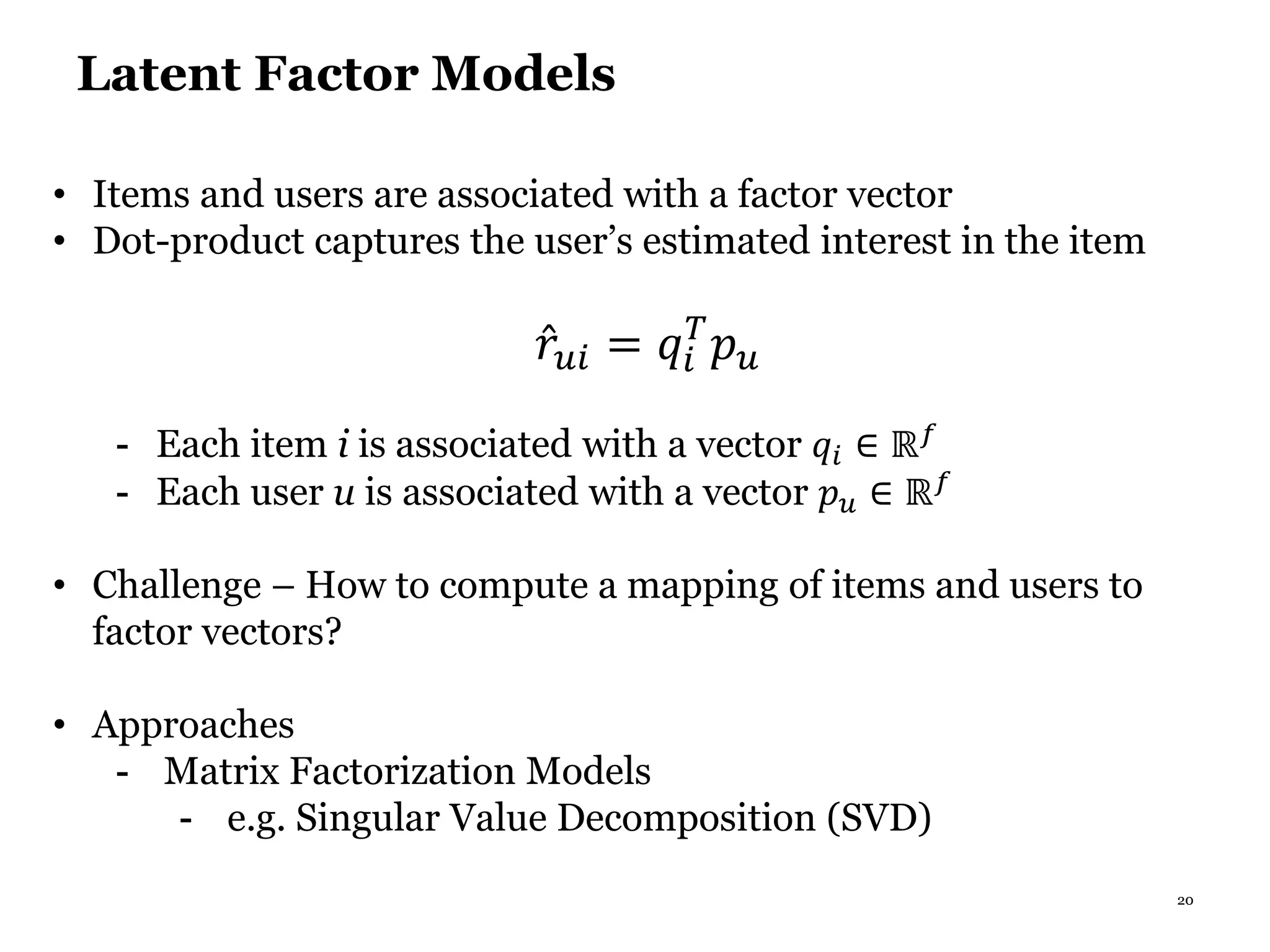

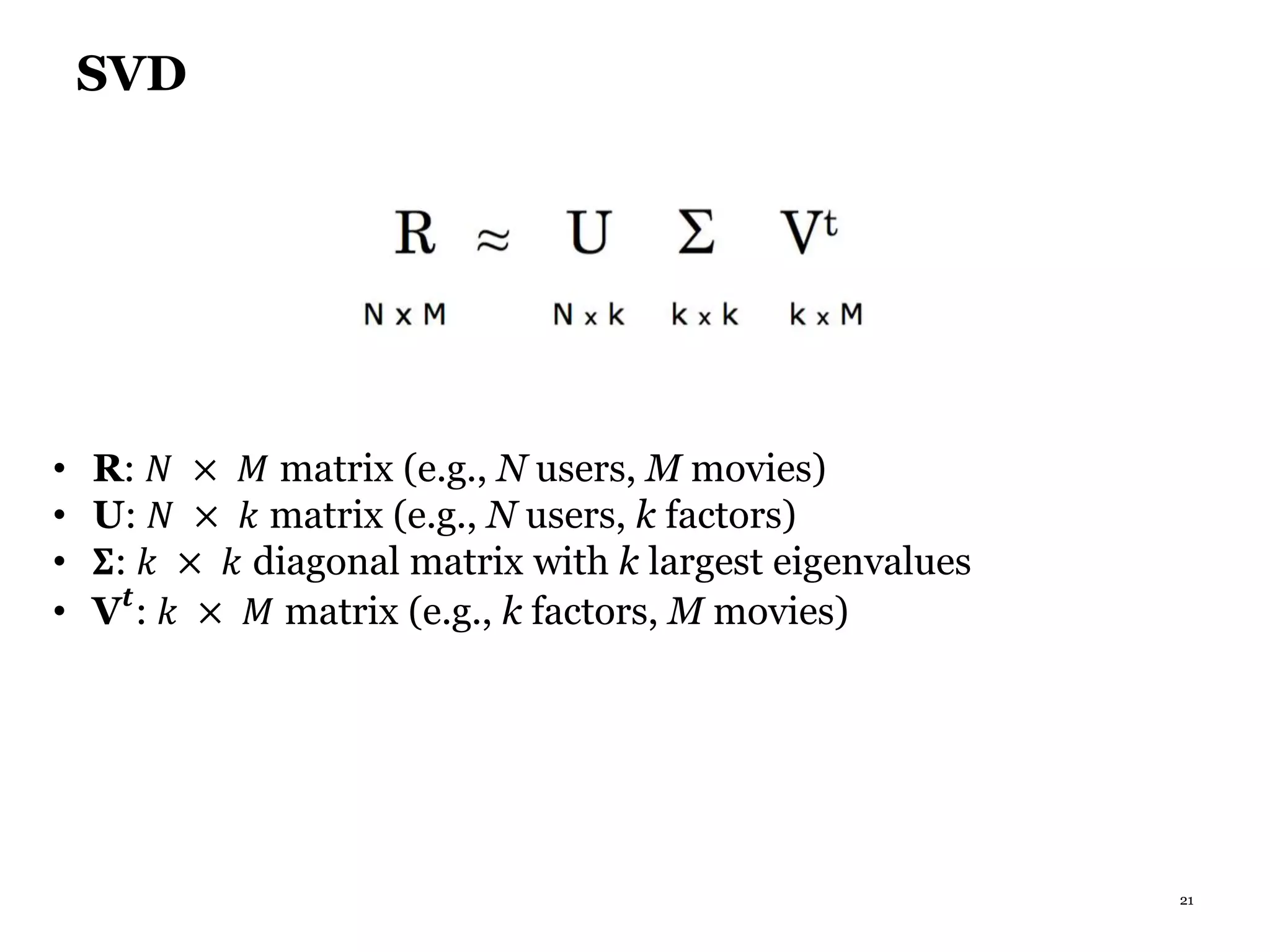

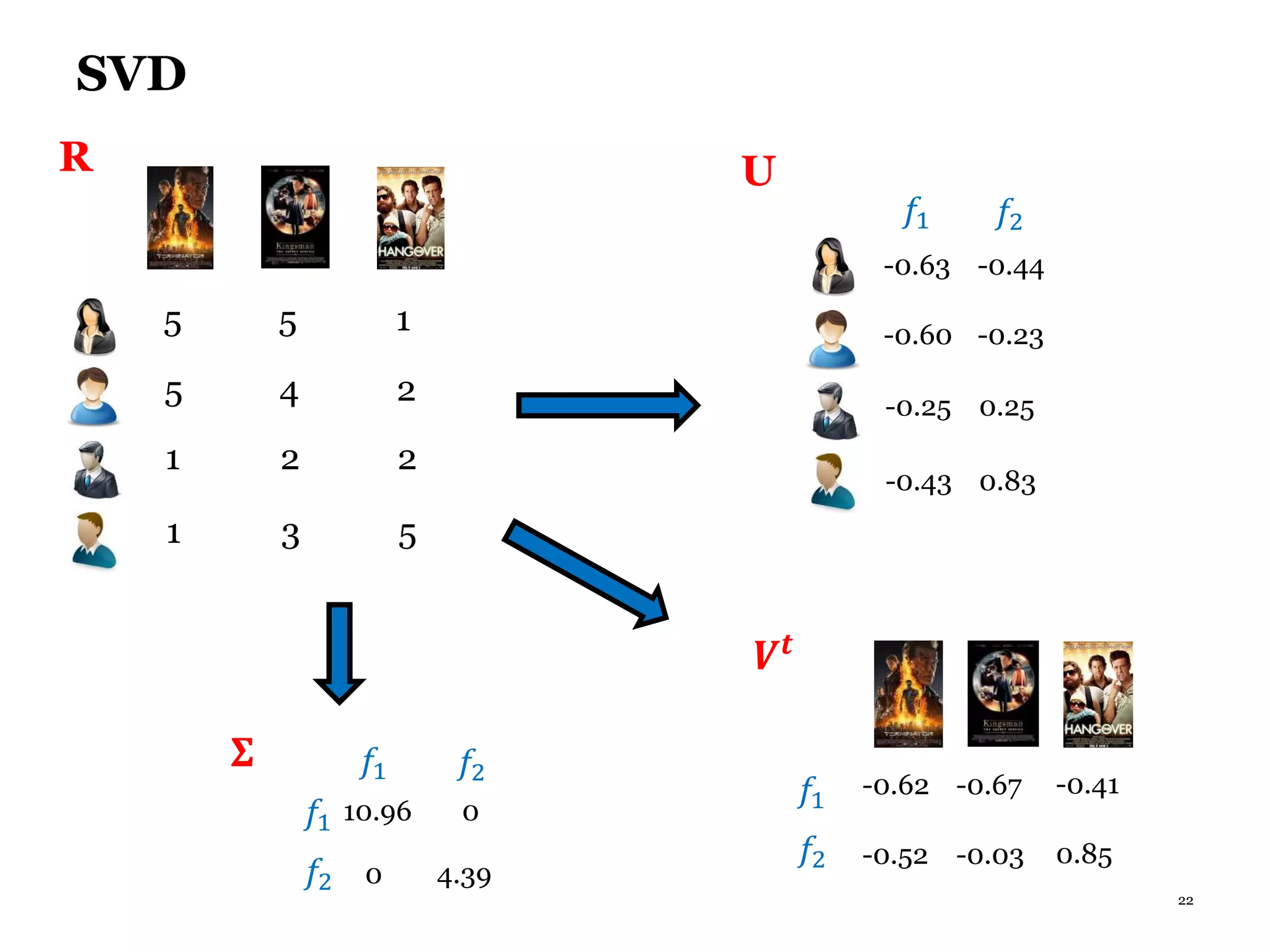

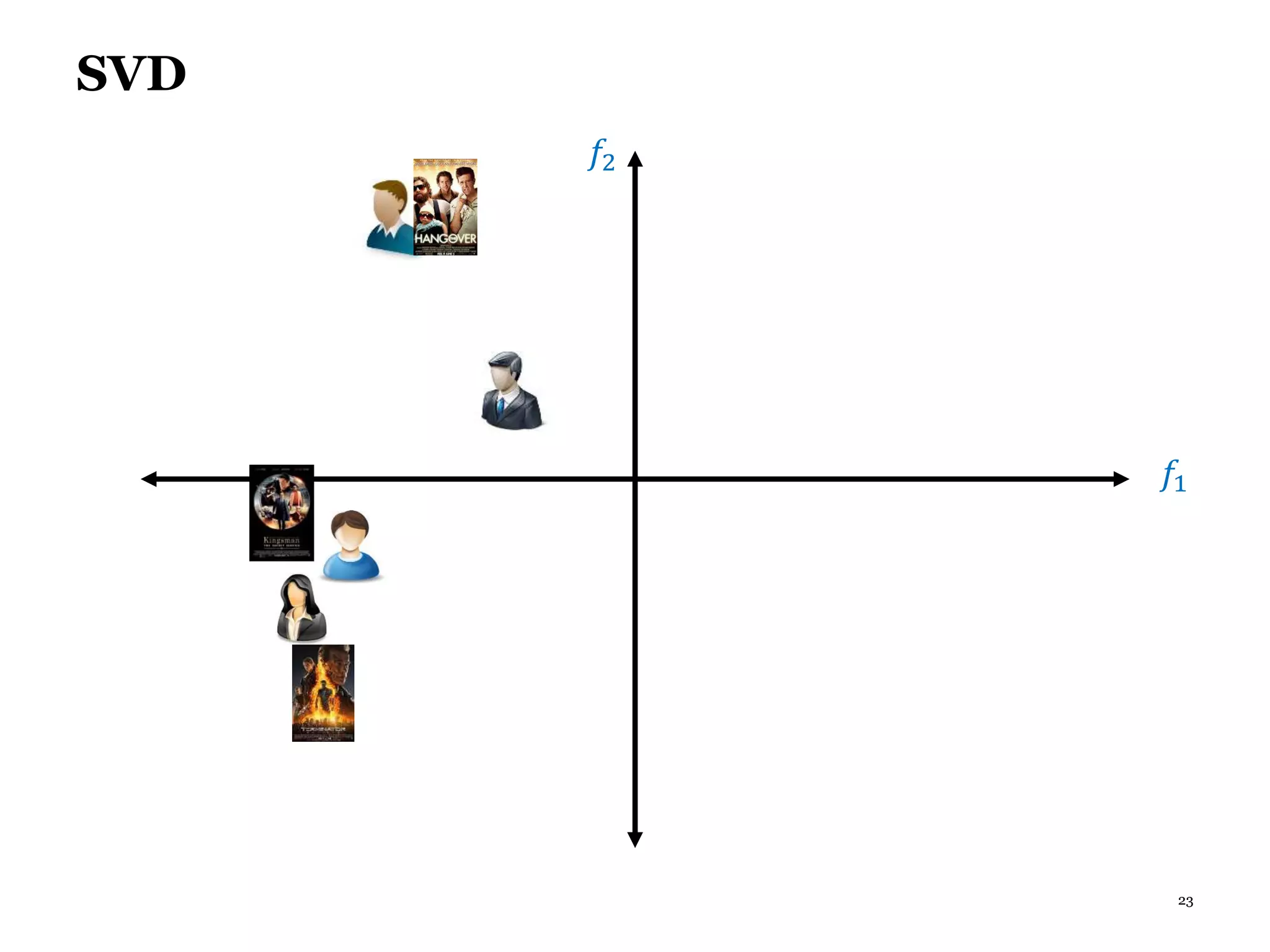

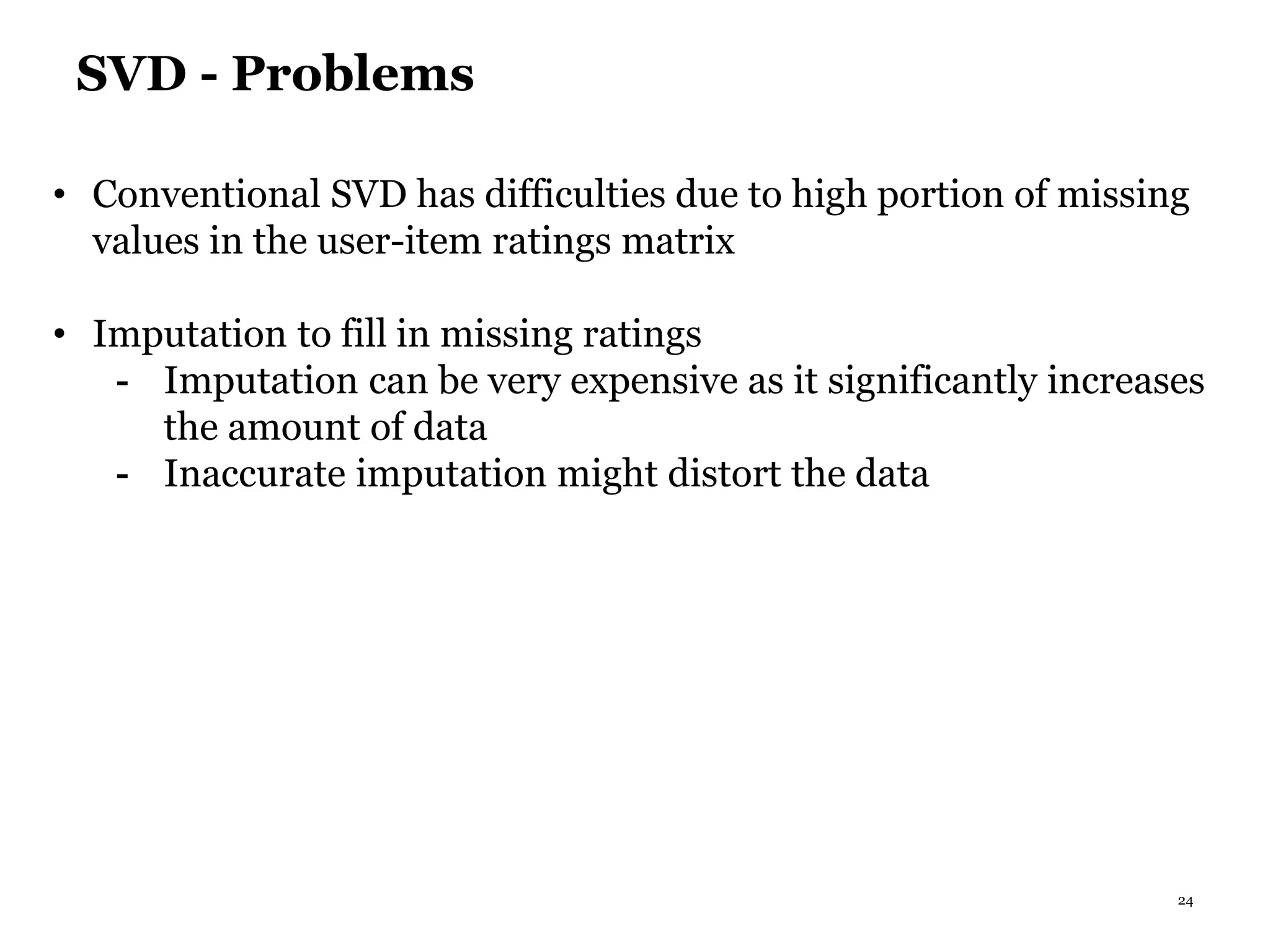

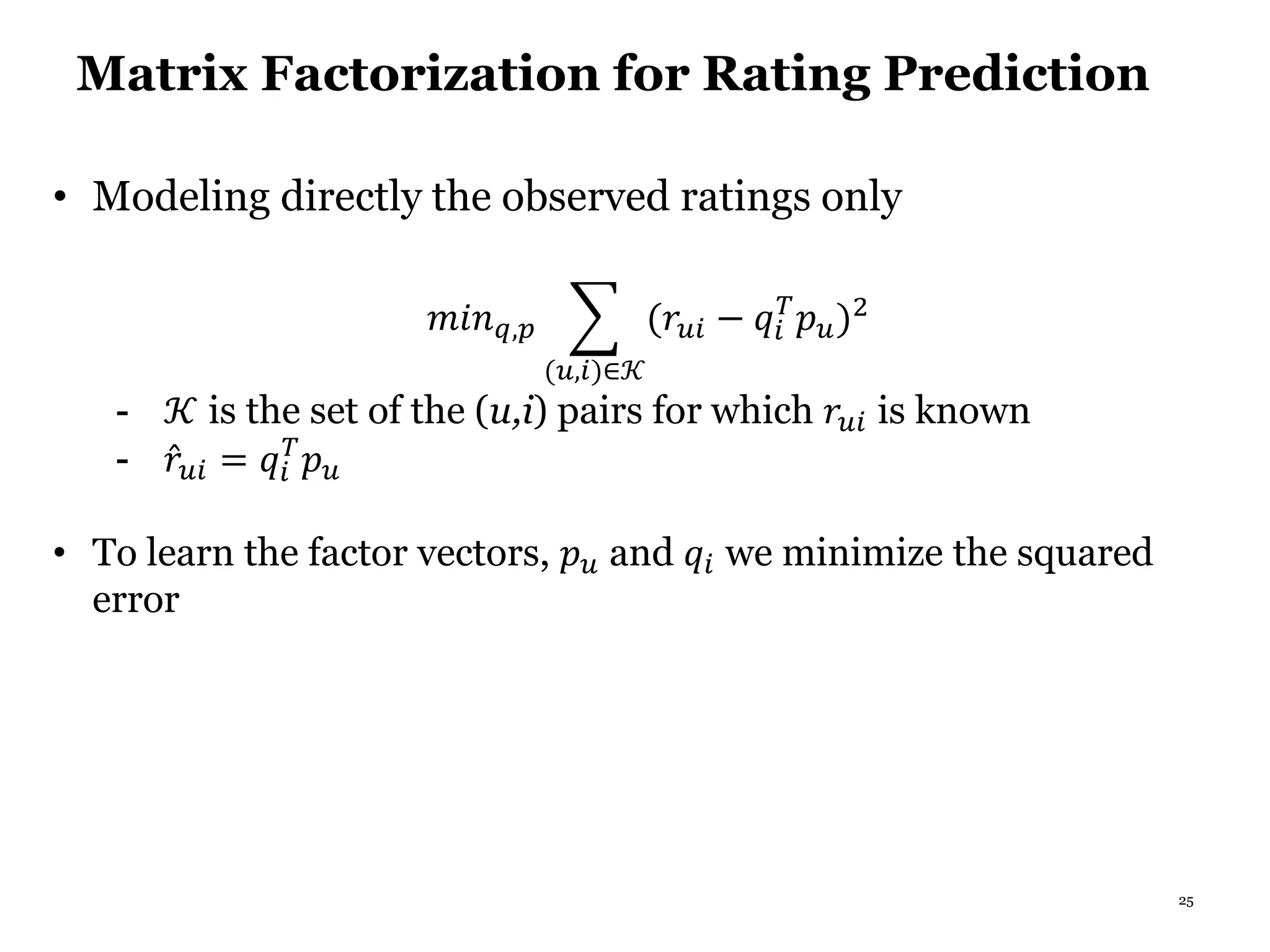

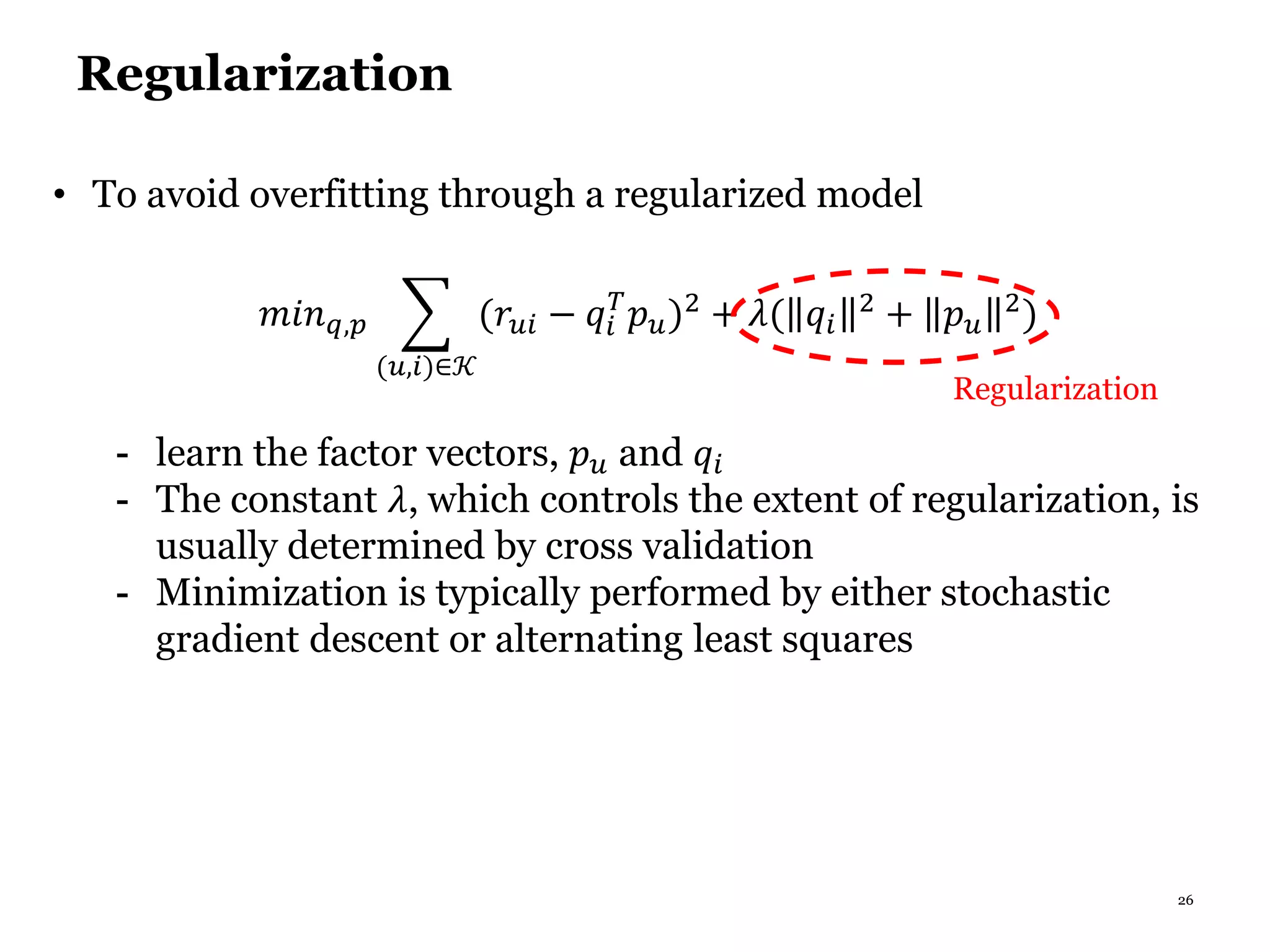

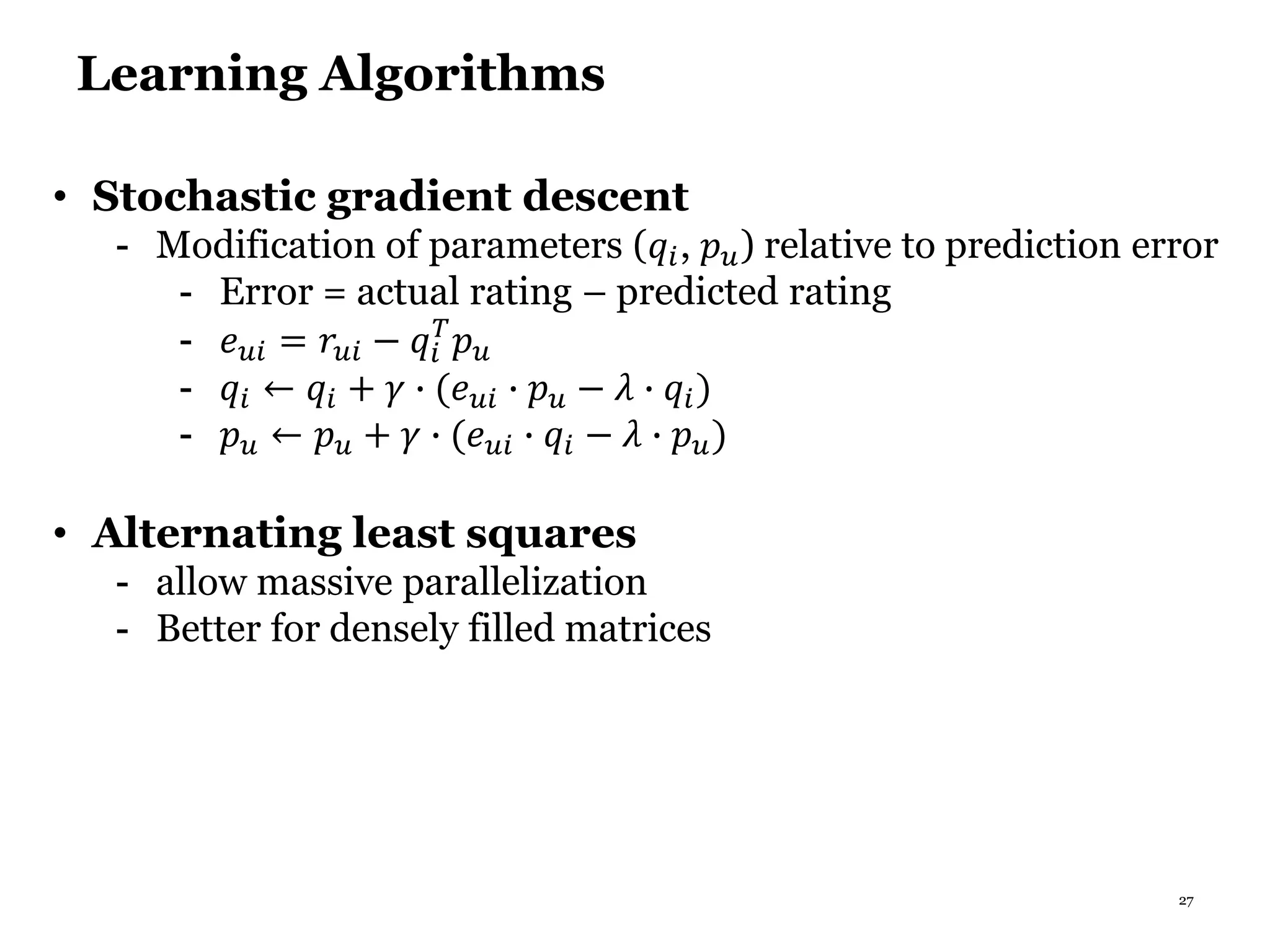

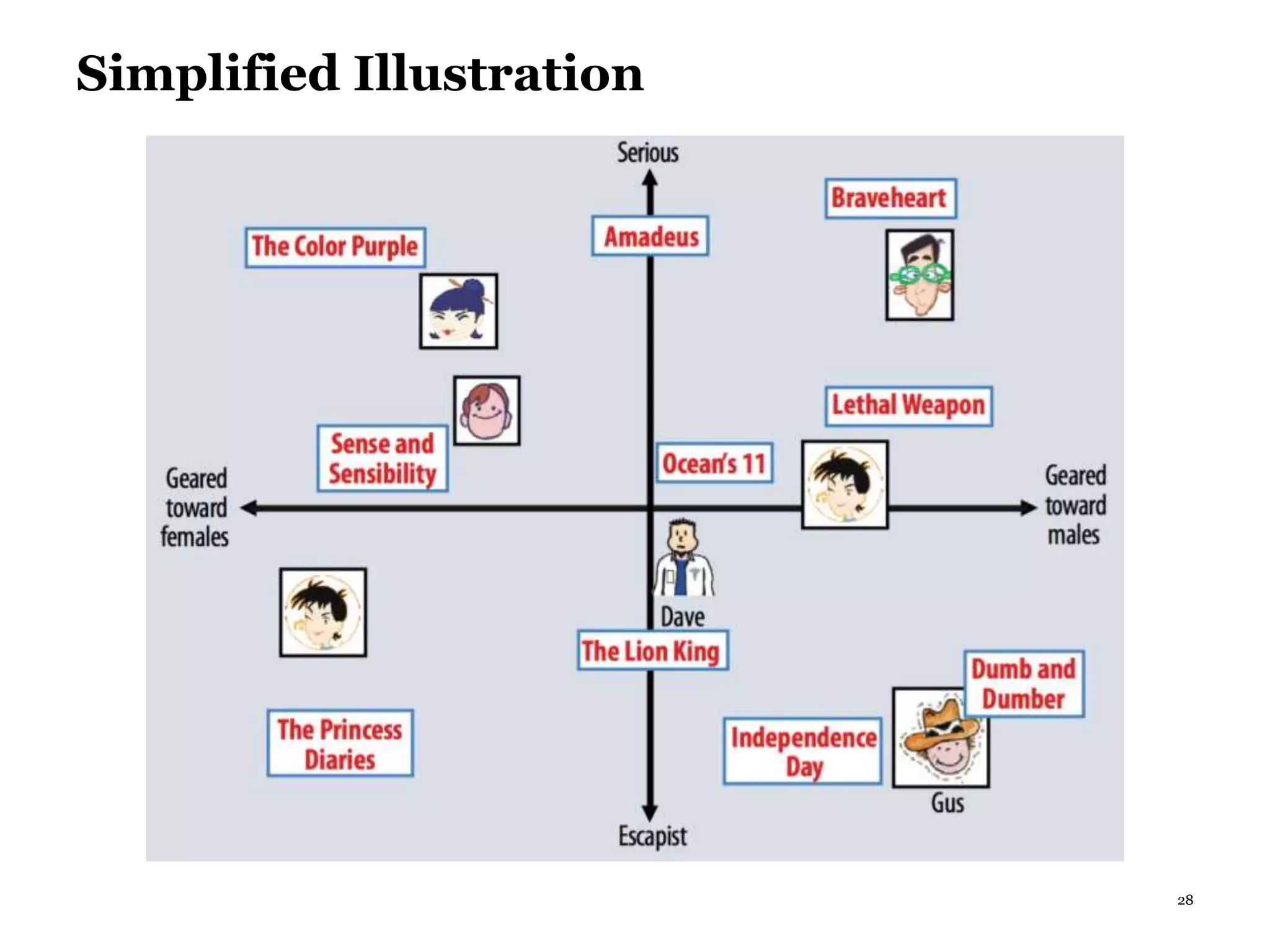

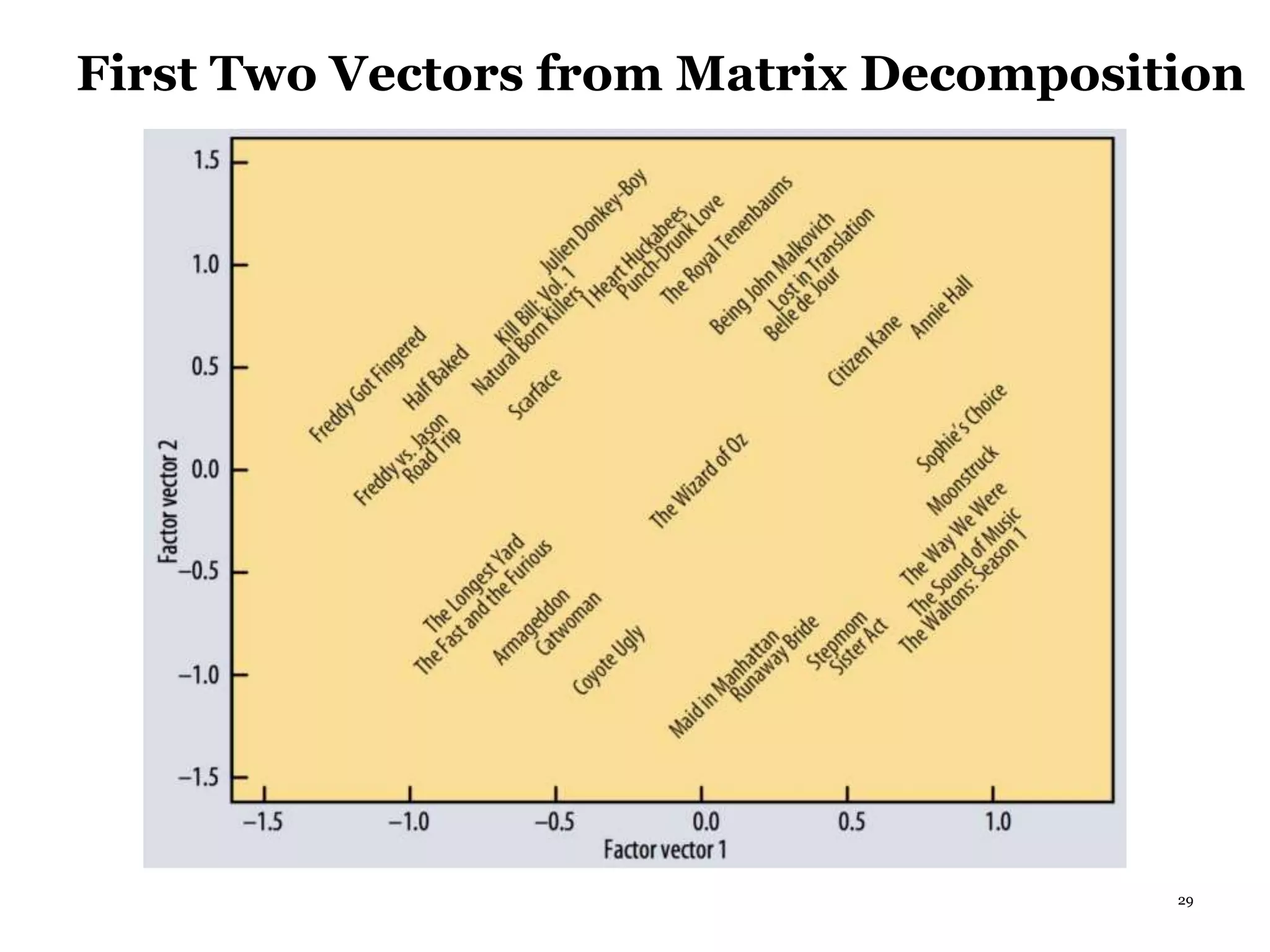

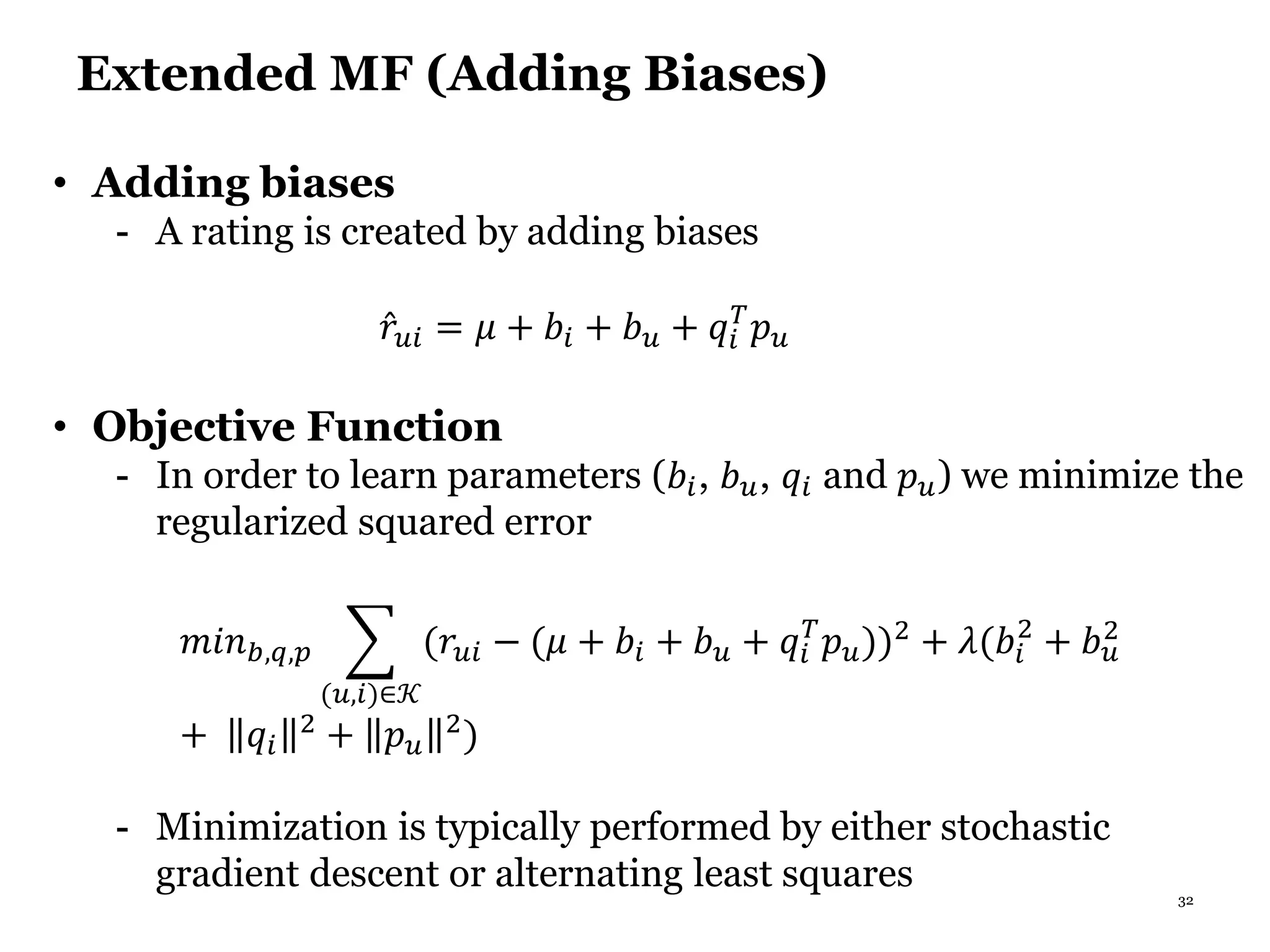

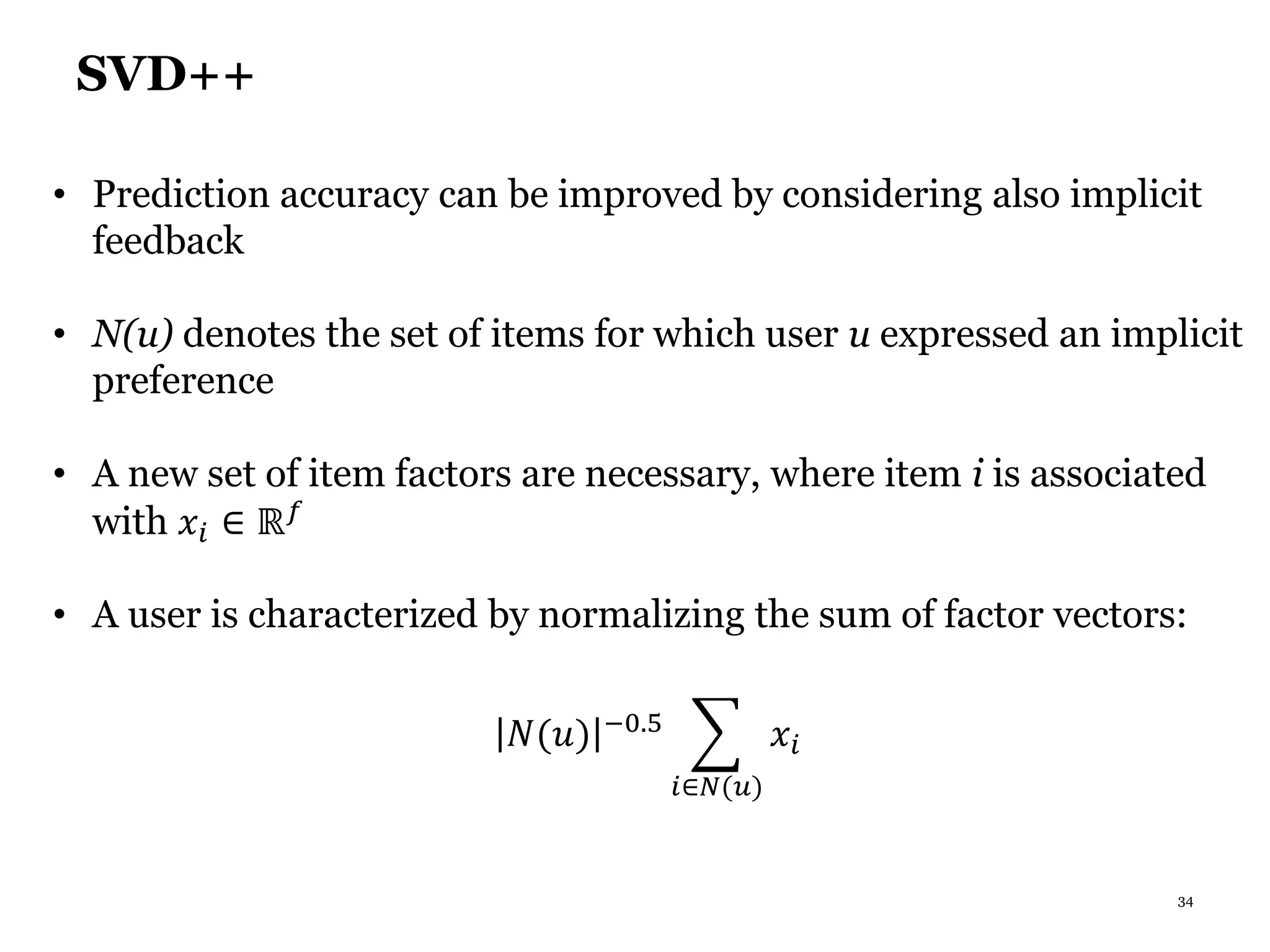

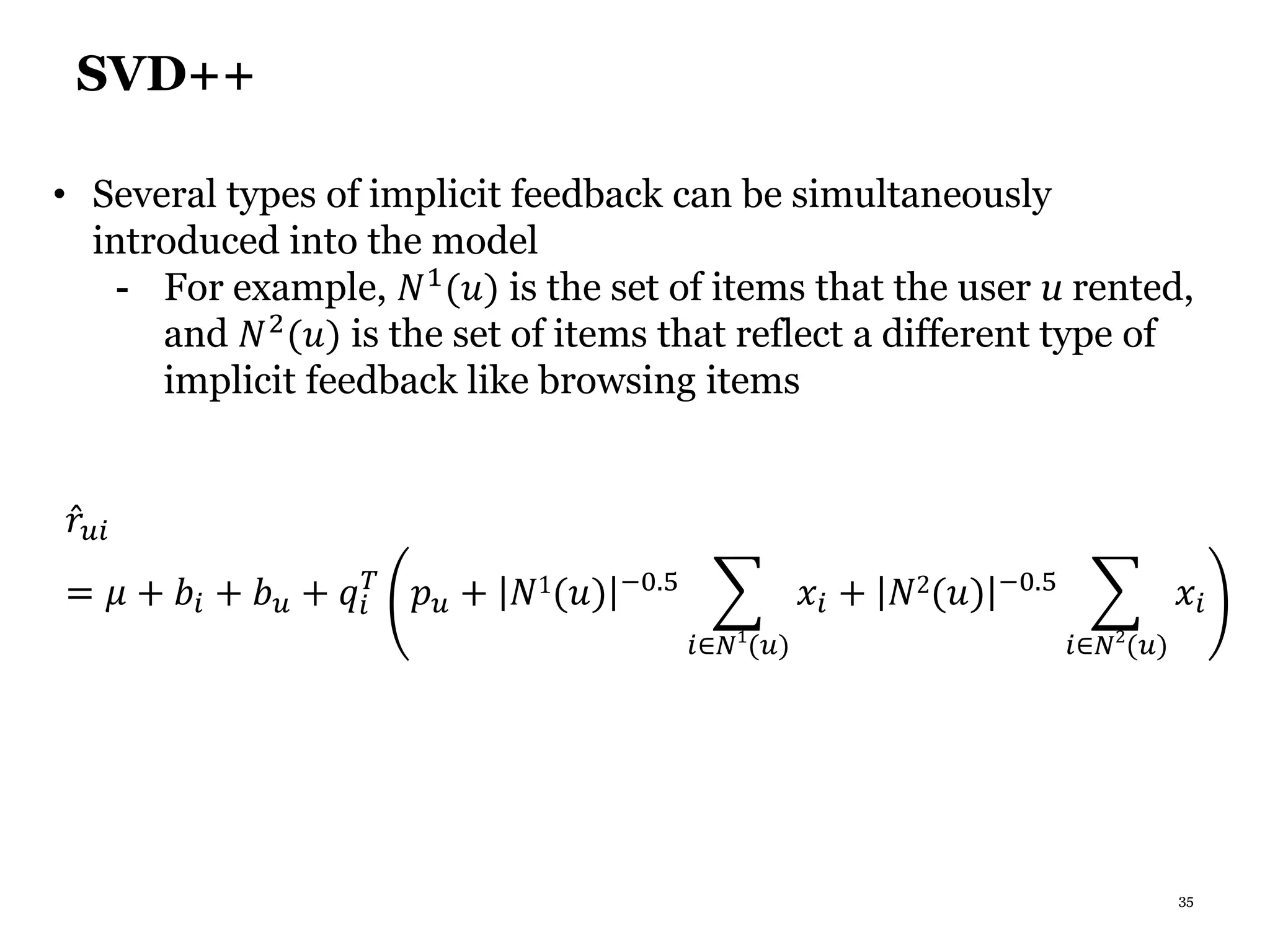

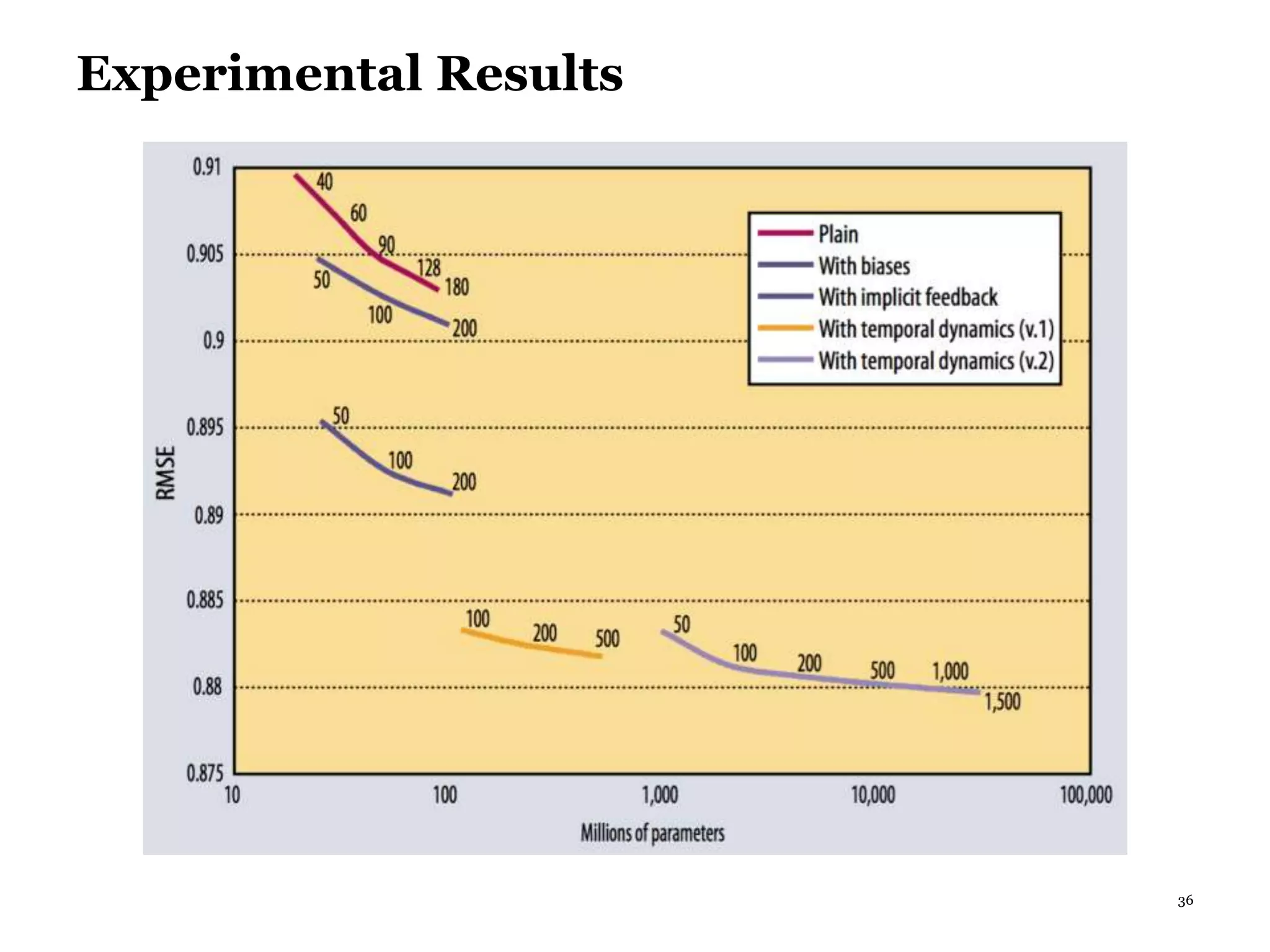

This document surveys recommender systems, focusing on collaborative filtering. It explains how recommender systems reduce information overload by matching users with relevant items. Collaborative filtering is introduced as a technique that predicts a user's preferences from the ratings of similar users. The document then covers collaborative filtering algorithms, including neighborhood models and latent factor models based on matrix factorization, along with extensions that add bias terms and temporal dynamics. It concludes with hybrid methods and references for further reading.
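To make the neighborhood idea concrete, here is a minimal sketch of user-based collaborative filtering. It is an illustration under stated assumptions and is not taken from the document: the toy `RATINGS` data, the choice of cosine similarity over co-rated items, and the function names `cosine_similarity` and `predict` are all hypothetical.

```python
import math

# Hypothetical toy data (not from the document): user -> {item: rating, 1-5 scale}.
RATINGS = {
    "alice": {"A": 5.0, "B": 3.0, "C": 4.0},
    "bob":   {"A": 4.0, "B": 2.0, "C": 5.0, "D": 4.0},
    "carol": {"A": 1.0, "B": 5.0, "D": 2.0},
}


def cosine_similarity(u, v):
    """Cosine similarity computed over the items both users rated.

    Returns 0.0 when the two users share no rated items.
    """
    shared = set(u) & set(v)
    if not shared:
        return 0.0
    dot = sum(u[i] * v[i] for i in shared)
    norm_u = math.sqrt(sum(u[i] ** 2 for i in shared))
    norm_v = math.sqrt(sum(v[i] ** 2 for i in shared))
    return dot / (norm_u * norm_v)


def predict(ratings, user, item):
    """Predict a rating as the similarity-weighted average of the
    ratings that other users gave to the item.

    Returns None when no other user with nonzero similarity rated it.
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for other, other_ratings in ratings.items():
        if other == user or item not in other_ratings:
            continue
        sim = cosine_similarity(ratings[user], other_ratings)
        weighted_sum += sim * other_ratings[item]
        weight_total += abs(sim)
    if weight_total == 0.0:
        return None
    return weighted_sum / weight_total
```

Calling `predict(RATINGS, "alice", "D")` estimates how alice would rate an item she has not seen. Because her ratings resemble bob's more than carol's, the estimate lands closer to bob's rating of D. A practical system would typically also mean-center ratings and restrict the sum to the k most similar neighbors; this sketch omits both for brevity.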