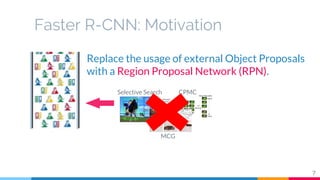

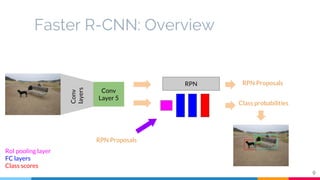

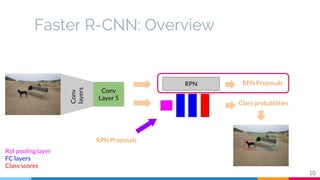

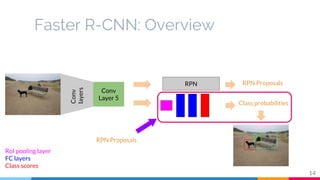

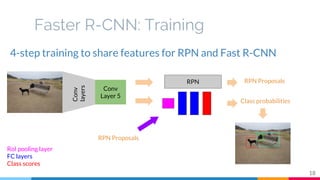

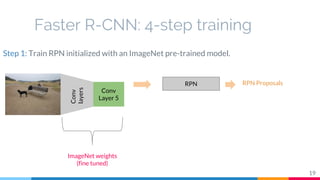

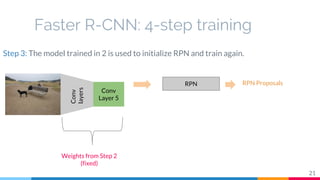

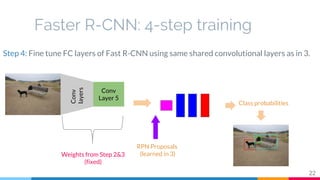

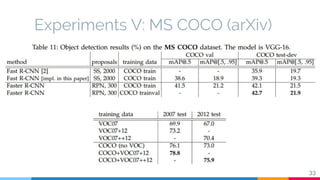

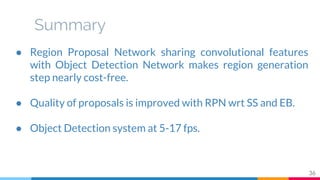

These slides cover Faster R-CNN, an object detection framework that moves towards real-time speed by adding a Region Proposal Network (RPN) that shares convolutional features with the detection network. They outline how the RPN generates proposals, the training steps involved, and experiments showing better proposal quality and faster detection than previous methods. Faster R-CNN is the basis of the winning entries in the COCO and ILSVRC 2015 object detection competitions.
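The summary above mentions that the RPN generates proposals but does not spell out how. As a rough illustration, the sketch below lays out the reference boxes ("anchors") that the RPN scores and refines at each feature-map position. It assumes the defaults reported in the Faster R-CNN paper (3 scales, 3 aspect ratios, a feature stride of 16 pixels). The function names `base_anchors` and `grid_anchors` and the cell-centre convention are hypothetical choices for this example, not the authors' code.

```python
from itertools import product


def base_anchors(scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Return (x1, y1, x2, y2) boxes centred on the origin, one per scale/ratio pair.

    Each box has an area of about scale**2; ratio is height / width.
    """
    anchors = []
    for scale, ratio in product(scales, ratios):
        width = scale / ratio ** 0.5
        height = scale * ratio ** 0.5
        anchors.append((-width / 2, -height / 2, width / 2, height / 2))
    return anchors


def grid_anchors(feat_h, feat_w, stride=16):
    """Shift the base anchors to every cell of a feat_h x feat_w feature map."""
    boxes = []
    for row, col in product(range(feat_h), range(feat_w)):
        # Centre of this feature-map cell in input-image pixels
        # (assumed convention for this sketch).
        cx = col * stride + stride / 2
        cy = row * stride + stride / 2
        for x1, y1, x2, y2 in base_anchors():
            boxes.append((x1 + cx, y1 + cy, x2 + cx, y2 + cy))
    return boxes
```

With these defaults every position carries 9 anchors, so a 40 x 60 feature map gives `len(grid_anchors(40, 60))` = 21,600 candidate boxes. In the actual network each anchor would then receive an objectness score and box-regression offsets, which this sketch leaves out.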

![Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks

Shaoqing Ren, Kaiming He, Ross Girshick, Jian Sun

[paper@NIPS15][arXiv][python][matlab][slides by R. Girshick]

Slides by Amaia Salvador [GDoc]

Computer Vision Reading Group (01/03/2016)](https://image.slidesharecdn.com/03fasterr-cnn-towardsreal-timeobjectdetectionwithregionproposalnetworks-160301150110/75/Faster-R-CNN-Towards-real-time-object-detection-with-region-proposal-networks-UPC-Reading-Group-1-2048.jpg)

![Summary

● Faster R-CNN is the basis of the winners of COCO and ILSVRC 2015 object detection competitions [1].

● RPN is also used in the winning entries of ILSVRC 2015 localization [1] and COCO 2015 segmentation competitions [2].

[1] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” arXiv:1512.03385, 2015.

[2] J. Dai, K. He, and J. Sun, “Instance-aware semantic segmentation via multi-task network cascades,” arXiv:1512.04412, 2015.](https://image.slidesharecdn.com/03fasterr-cnn-towardsreal-timeobjectdetectionwithregionproposalnetworks-160301150110/85/Faster-R-CNN-Towards-real-time-object-detection-with-region-proposal-networks-UPC-Reading-Group-37-320.jpg)