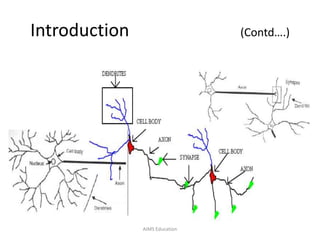

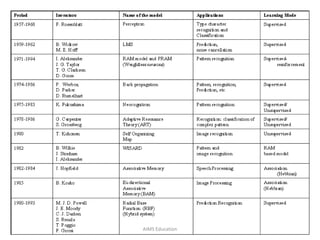

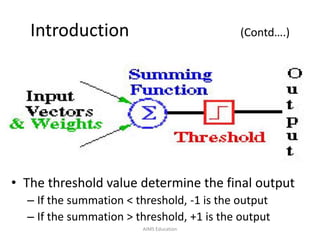

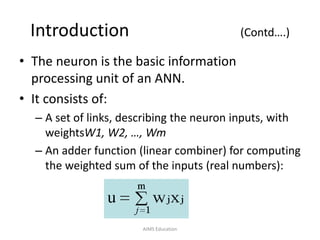

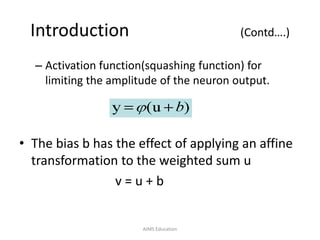

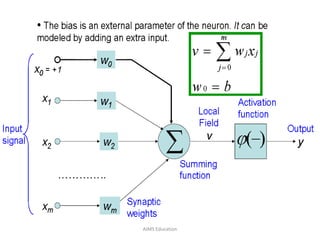

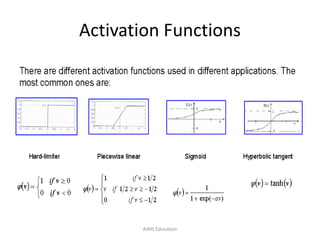

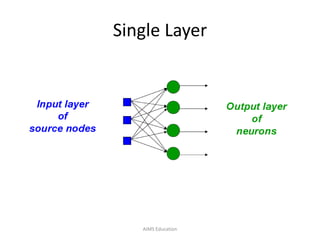

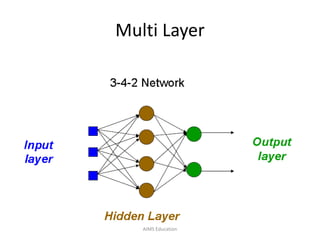

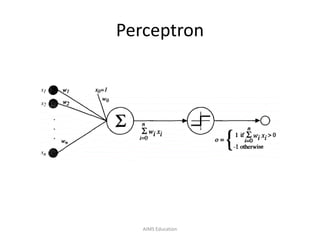

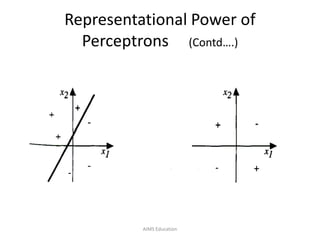

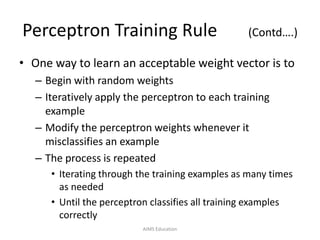

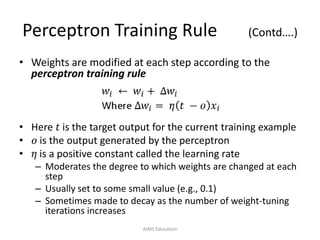

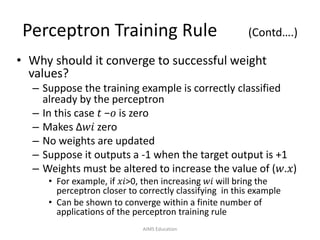

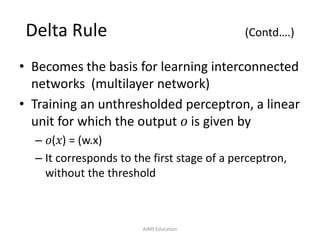

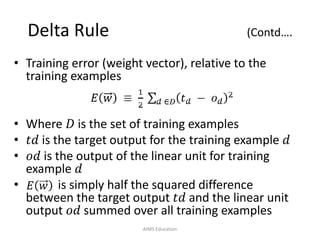

The document provides an overview of artificial neural networks (ANNs), covering their structure, design, and applications in pattern recognition and data classification. It discusses the basic components of ANNs (neurons, activation functions, and the training process) and emphasizes the role of weights and of learning methods such as the perceptron rule and the delta rule. It also describes which problems suit neural network learning, noting that ANNs are robust to noise in the training data but that the learned models are difficult to interpret.
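The two learning methods named above can be illustrated with a short sketch. It is not taken from the slides: it assumes the common textbook formulation in which a perceptron is a threshold unit with outputs of +1 or -1, and the delta rule is incremental gradient descent on the squared error of a linear (unthresholded) unit. The function names, learning rates, and toy data sets are all illustrative assumptions.

```python
def net_input(w, x):
    """Weighted sum of the inputs; w[0] is the bias weight."""
    return w[0] + sum(wi * xi for wi, xi in zip(w[1:], x))


def perceptron_output(w, x):
    """Threshold unit: +1 if the weighted sum is positive, else -1."""
    return 1 if net_input(w, x) > 0 else -1


def train_perceptron(samples, eta=0.5, max_epochs=100):
    """Perceptron rule: w_i += eta * (t - o) * x_i, with o the thresholded output.

    Weights change only on misclassified samples, and training stops
    after the first epoch with no errors.
    """
    w = [0.0] * (len(samples[0][0]) + 1)
    for _ in range(max_epochs):
        errors = 0
        for x, t in samples:
            o = perceptron_output(w, x)
            if o != t:
                errors += 1
                w[0] += eta * (t - o)
                for i, xi in enumerate(x):
                    w[i + 1] += eta * (t - o) * xi
        if errors == 0:
            break
    return w


def train_delta_rule(samples, eta=0.05, epochs=2000):
    """Delta rule: same update form, but o is the raw linear output.

    This performs incremental gradient descent on the squared error
    (t - o)^2 / 2, so every sample nudges the weights, not only the
    misclassified ones.
    """
    w = [0.0] * (len(samples[0][0]) + 1)
    for _ in range(epochs):
        for x, t in samples:
            o = net_input(w, x)
            w[0] += eta * (t - o)
            for i, xi in enumerate(x):
                w[i + 1] += eta * (t - o) * xi
    return w


# Toy data (assumed for illustration): logical AND with +1/-1 targets.
AND_SAMPLES = [((0, 0), -1), ((0, 1), -1), ((1, 0), -1), ((1, 1), 1)]
and_weights = train_perceptron(AND_SAMPLES)
and_predictions = [perceptron_output(and_weights, x) for x, _ in AND_SAMPLES]

# Toy data (assumed for illustration): noiseless points on t = 2x + 1.
LINE_SAMPLES = [((0.0,), 1.0), ((1.0,), 3.0), ((2.0,), 5.0), ((3.0,), 7.0)]
line_weights = train_delta_rule(LINE_SAMPLES)

print(and_predictions)
print([round(wi, 3) for wi in line_weights])
```

The perceptron rule is only guaranteed to converge when the classes are linearly separable, as AND is. The delta rule still moves toward a minimum-error fit when they are not, which is one reason it serves as the starting point for training multilayer networks.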