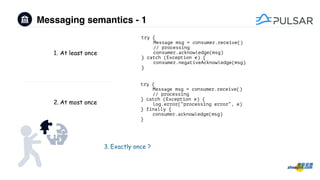

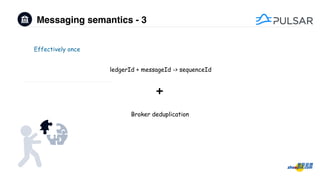

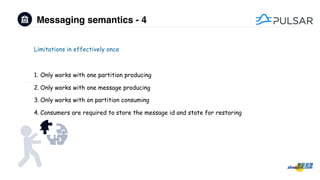

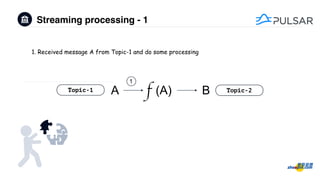

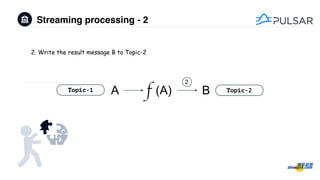

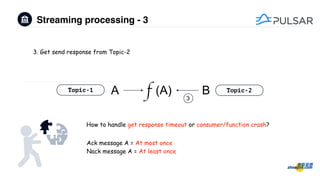

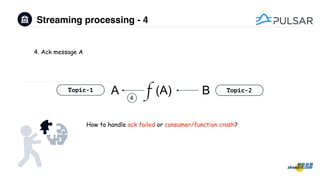

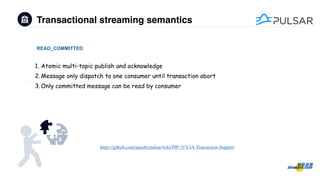

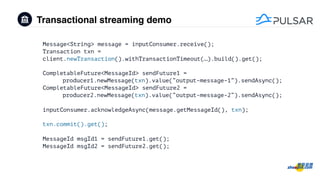

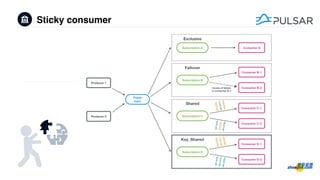

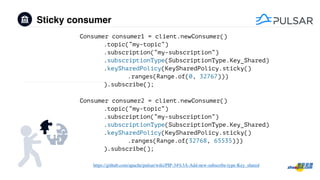

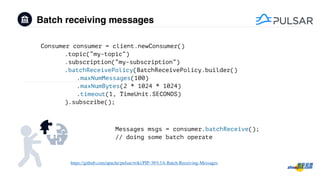

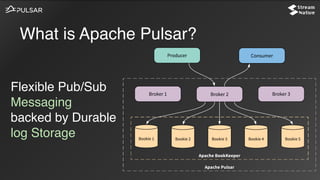

This document previews new features in Apache Pulsar 2.5.0: transactional streaming, sticky consumers, batch receiving, and namespace change events. It also discusses the messaging semantics of at-least-once, at-most-once, and effectively-once delivery. Transactional streaming lets a client publish to multiple topics and acknowledge messages atomically, so that either all of the operations take effect or none do. Sticky consumers improve how keys are assigned to consumers on key-based topics. Batch receiving lets a consumer receive multiple messages in a single call. Namespace change events notify clients when a namespace changes.
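
To make the difference between at-least-once and effectively-once delivery concrete, here is a minimal sketch in plain Python. It does not use the Pulsar client. `DedupTopic` and `send_with_retry` are hypothetical names invented for this illustration, and the deduplication rule (drop any message whose sequence ID is not higher than the last one stored for that producer) is an assumption about one common way to get effectively-once behavior on top of at-least-once retries.

```python
from dataclasses import dataclass, field


@dataclass
class DedupTopic:
    """Toy in-memory topic that drops duplicates per producer (illustrative only)."""
    log: list = field(default_factory=list)
    last_seq: dict = field(default_factory=dict)

    def publish(self, producer: str, seq: int, payload: str) -> bool:
        # A retry carries the same sequence ID as the original attempt,
        # so anything at or below the last stored ID is treated as a duplicate.
        if seq <= self.last_seq.get(producer, -1):
            return False
        self.log.append(payload)
        self.last_seq[producer] = seq
        return True


def send_with_retry(topic, producer, seq, payload, lose_first_ack):
    """At-least-once send: resend when the acknowledgment is lost."""
    topic.publish(producer, seq, payload)
    if lose_first_ack:
        # The producer never saw the ack, so it sends the same message again.
        topic.publish(producer, seq, payload)


topic = DedupTopic()
send_with_retry(topic, "p1", 0, "a", lose_first_ack=True)   # sent twice
send_with_retry(topic, "p1", 1, "b", lose_first_ack=False)  # sent once
print(topic.log)  # ['a', 'b']
```

Without the sequence check in `publish`, the retried message would be stored twice, which is at-least-once behavior. Skipping the retry instead would risk losing the message, which is at-most-once behavior.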