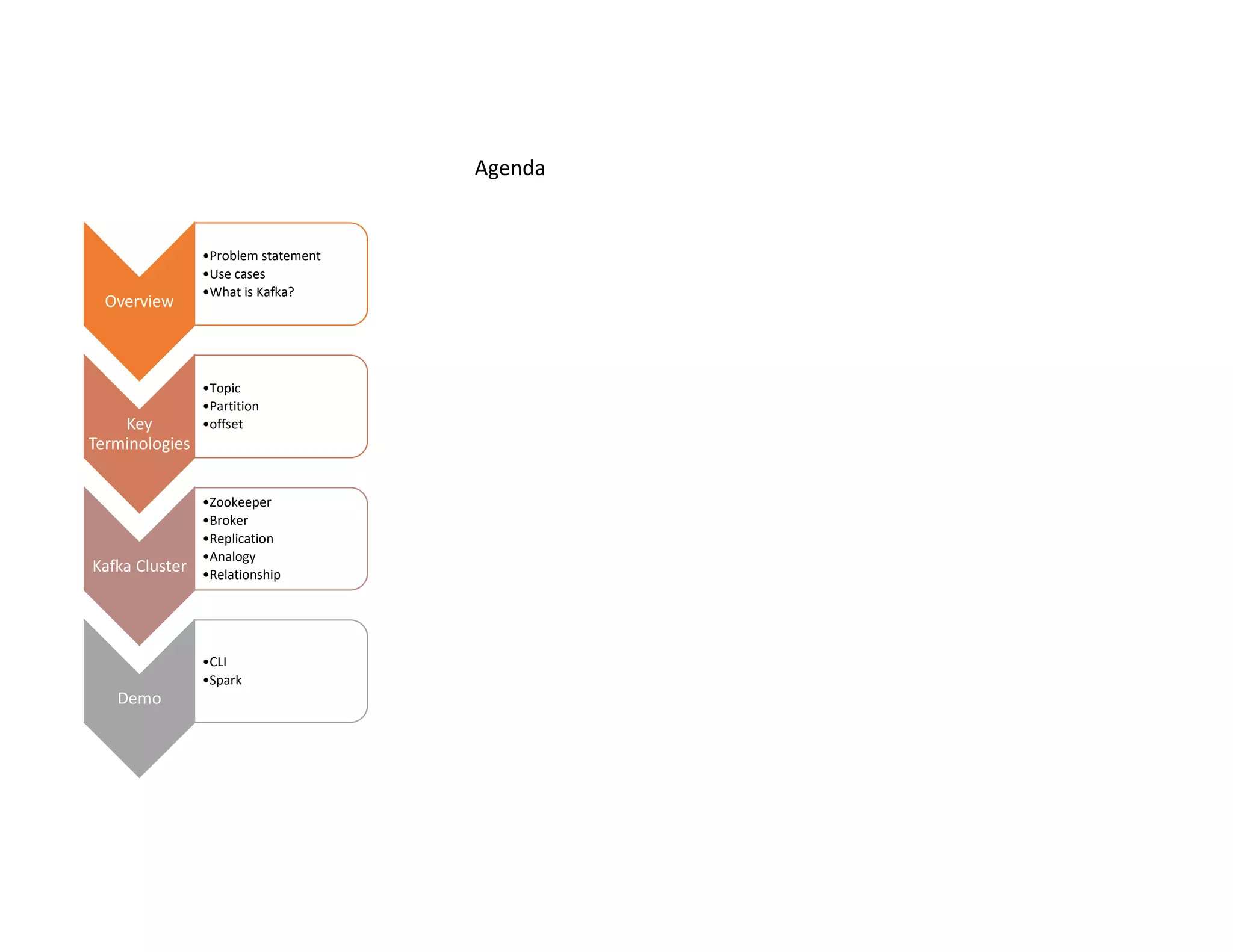

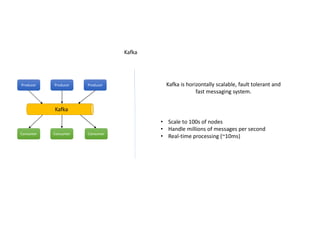

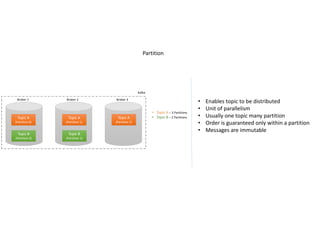

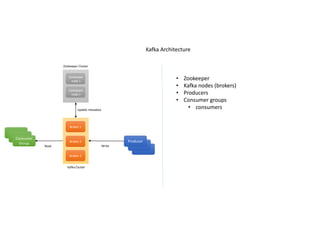

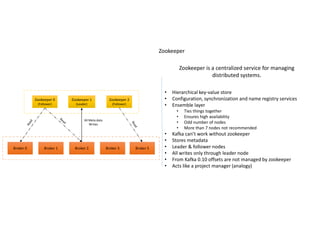

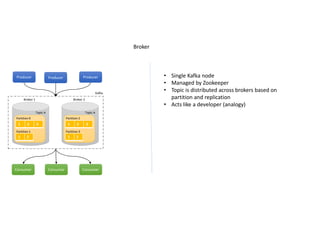

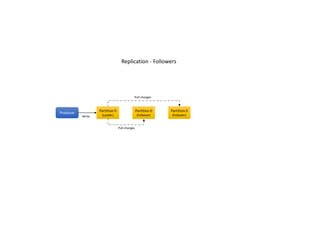

The document provides an overview of Kafka, covering its problem statement, use cases, key terminology, architecture, and components. It defines a topic as a stream of data that can be split into partitions, where each message in a partition is identified by a unique offset. Producers write data to brokers, and each partition is replicated across brokers for fault tolerance. Consumers in a consumer group read data from partitions. ZooKeeper manages the cluster metadata. In the accompanying IT team analogy, brokers correspond to developers, topics to modules, and partitions to tasks.
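To make the topic, partition, offset, and consumer-group terms concrete, here is a toy in-memory model. It is a sketch under stated assumptions, not the Kafka client API. The names `Partition`, `Topic`, and `assign` are hypothetical. CRC32 of the key stands in for the hash used by Kafka's real partitioner. The round-robin `assign` is only one possible assignment strategy.

```python
import zlib
from dataclasses import dataclass, field


@dataclass
class Partition:
    """An append-only log; a record's offset is its index in the log."""
    log: list = field(default_factory=list)

    def append(self, value) -> int:
        self.log.append(value)
        return len(self.log) - 1  # offset assigned to the new record

    def read(self, offset: int, max_records: int = 10) -> list:
        # Reading does not remove records; the consumer tracks its own offset.
        return self.log[offset:offset + max_records]


@dataclass
class Topic:
    name: str
    num_partitions: int
    partitions: list = field(init=False)

    def __post_init__(self):
        self.partitions = [Partition() for _ in range(self.num_partitions)]

    def produce(self, key: str, value) -> tuple:
        """Route by key so that records with the same key land in the same partition."""
        # Assumption: CRC32 stands in for the hash used by Kafka's default partitioner.
        p = zlib.crc32(key.encode()) % self.num_partitions
        return p, self.partitions[p].append(value)


def assign(partition_ids, consumers: list) -> dict:
    """Round-robin: each partition goes to exactly one consumer in the group."""
    assignment = {c: [] for c in consumers}
    for i, p in enumerate(partition_ids):
        assignment[consumers[i % len(consumers)]].append(p)
    return assignment
```

Suppose a group has more consumers than the topic has partitions. In that case `assign(range(2), ["consumer-1", "consumer-2", "consumer-3"])` leaves `consumer-3` with no partition, because each partition is read by at most one consumer in a group.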

![Kafka replication: Broker 1, Broker 2 and Broker 3 host Topic B (2 partitions, replication factor of 2); each partition has a leader replica and a follower replica, with a producer and a consumer group connected to the leaders](https://image.slidesharecdn.com/kafkatechnicaloverview-190507041236/85/Kafka-Technical-Overview-12-320.jpg)

Replication:

- Copy of a partition in another broker
- Enables fault tolerance
- Follower partition replicates from the leader
- Only the leader serves both producers and consumers
- ISR: In-Sync Replica
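The replication slide above can be sketched as a toy model of a single partition. This is an illustration under simplifying assumptions, not Kafka's implementation. `ReplicatedPartition` and its methods are hypothetical names. A follower's lag is measured in offsets here, whereas real Kafka decides ISR membership by time, via `replica.lag.time.max.ms`. Consumers only see records that every in-sync replica already holds (the high watermark).

```python
from dataclasses import dataclass, field


@dataclass
class Replica:
    broker: str
    log: list = field(default_factory=list)

    @property
    def end_offset(self) -> int:
        return len(self.log)


class ReplicatedPartition:
    """Toy model: one leader plus followers; only the leader accepts writes and serves reads."""

    def __init__(self, brokers: list, max_lag: int = 1):
        self.replicas = {b: Replica(b) for b in brokers}
        self.leader = brokers[0]
        self.max_lag = max_lag  # assumption: offset-based lag threshold for ISR membership

    def produce(self, value) -> int:
        leader = self.replicas[self.leader]
        leader.log.append(value)
        return leader.end_offset - 1

    def fetch(self, follower: str) -> None:
        """A follower pulls any records it is missing from the leader."""
        leader, f = self.replicas[self.leader], self.replicas[follower]
        f.log.extend(leader.log[f.end_offset:])

    def isr(self) -> list:
        leader_end = self.replicas[self.leader].end_offset
        return [b for b, r in self.replicas.items()
                if leader_end - r.end_offset <= self.max_lag]

    def high_watermark(self) -> int:
        """Offsets below this value are present on every in-sync replica."""
        return min(self.replicas[b].end_offset for b in self.isr())

    def consume(self, offset: int) -> list:
        # Consumers read from the leader only, and only up to the high watermark.
        return self.replicas[self.leader].log[offset:self.high_watermark()]
```

Followers never talk to producers or consumers in this sketch. They only pull from the leader, and that is what keeps them in the ISR and eligible to take over.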

![Replication, IT team analogy: Developer 1, Developer 2 and Developer 3 work on Module B, which has 2 parallel tasks (Task 0 and Task 1), each with a leader and a follower developer; a Manager (Leader), a Task Assigner and a Testing Team interact with the team](https://image.slidesharecdn.com/kafkatechnicaloverview-190507041236/85/Kafka-Technical-Overview-13-320.jpg)

- Module B: 2 parallel tasks
- 1 backup resource for Module B

![Replication, leader election: Topic B (2 partitions, replication factor of 2) across Broker 1, Broker 2 and Broker 3, with leader and follower replicas of Partition 0 and Partition 1, a producer, and a consumer group whose reads are all served by the leaders](https://image.slidesharecdn.com/kafkatechnicaloverview-190507041236/85/Kafka-Technical-Overview-15-320.jpg)
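Leader election on the last slide can be sketched as follows. The sketch assumes that partition state is a plain dictionary and that the helper names are hypothetical. It also assumes the new leader is the first replica, in assignment order, that is both alive and in the ISR. In Kafka the controller broker makes this decision, and its full rules are not modeled here.

```python
from typing import Optional


def elect_leader(assigned: list, isr: set, live: set) -> Optional[str]:
    """Return the first assigned replica that is alive and in sync, else None."""
    for broker in assigned:
        if broker in live and broker in isr:
            return broker
    return None


def on_broker_failure(partitions: dict, failed: str, live: set) -> dict:
    """Drop the failed broker from every ISR and re-elect leaders it held.

    partitions maps a partition name to {"replicas": [...], "isr": set, "leader": str}
    and is updated in place; the returned dict maps each partition to its leader.
    """
    live = live - {failed}
    leaders = {}
    for name, p in partitions.items():
        p["isr"].discard(failed)
        if p["leader"] == failed:
            p["leader"] = elect_leader(p["replicas"], p["isr"], live)
        leaders[name] = p["leader"]
    return leaders
```

If no live replica is in the ISR, `elect_leader` returns `None`. The partition then stays unavailable in this sketch rather than promoting a replica that may be missing records.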