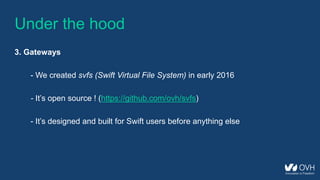

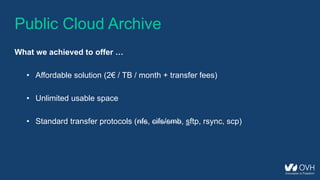

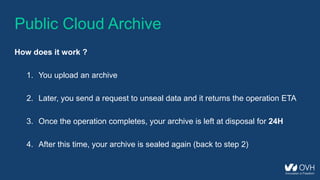

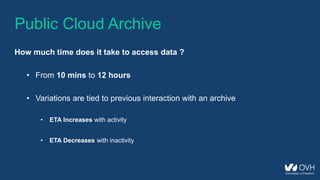

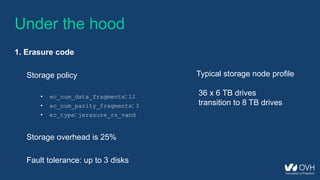

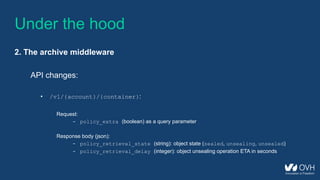

This document summarizes the key aspects of a public cloud archive storage solution. It offers affordable, unlimited storage accessible through standard transfer protocols. Data is stored with erasure coding for redundancy and fault tolerance. Retrieving archived data takes between 10 minutes and 12 hours depending on previous access patterns, with faster access for inactive archives. A middleware layer handles sealing and unsealing of archives and tracks access patterns to regulate retrieval times.

![Under the hood

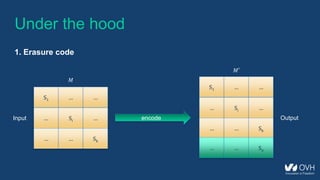

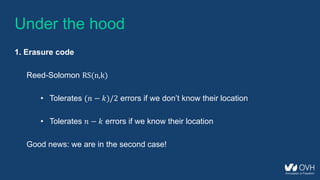

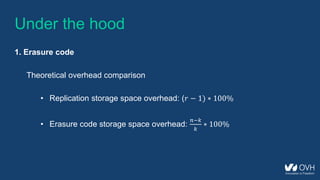

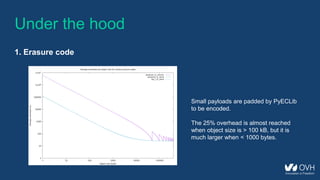

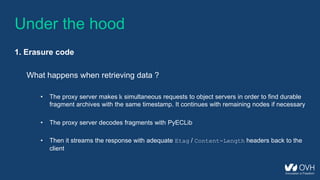

1. Erasure code

from pyeclib.ec_iface import ECDriver

def main():

# Pick an EC library and configure it

driver = ECDriver(k=12, m=3, ec_type='jerasure_rs_vand')

# Encode

fragments = driver.encode('Hello World!')

print "Fragment count: %d" % len(fragments)

print "Fragment size: %d" % len(fragments[0])

print "Message size: %d" % sum([len(fragment) for fragment in fragments])

if __name__ == '__main__':

main()

Fragment count: 15

Fragment size: 82

Message size: 1230](https://image.slidesharecdn.com/openstackmeetuplyon2017-09-28-170929115552/85/Openstack-meetup-lyon_2017-09-28-13-320.jpg)
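The figures on this slide can be related to the `k=12, m=3` configuration with a little arithmetic. The sketch below is not part of the talk: `ec_layout` is a hypothetical helper that counts payload bytes only, assuming the object is split evenly across the `k` data fragments. Real libraries also add a per-fragment header and alignment padding, which is why the 12-byte message on the slide grows to 15 × 82 = 1230 bytes.

```python
def ec_layout(k, m, object_size):
    """Return basic figures for a k+m erasure-coded object (payload only)."""
    # Ceiling division: each data fragment holds an equal share of the object.
    fragment_payload = -(-object_size // k)
    return {
        "fragments": k + m,              # total fragments written
        "tolerated_losses": m,           # fragments that may be lost
        "fragment_payload": fragment_payload,
        "stored_payload": fragment_payload * (k + m),
        "overhead": (k + m) / k,         # raw storage factor
    }


# A 1 GiB object with the 12+3 scheme used on the slide.
layout = ec_layout(k=12, m=3, object_size=1024 ** 3)
print(layout)
```

With 12+3 the raw storage factor is 1.25, compared with 3.0 for three full replicas, while still tolerating the loss of any three fragments.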

![Slide 14: erasure code, decoding with three fragments missing](https://image.slidesharecdn.com/openstackmeetuplyon2017-09-28-170929115552/85/Openstack-meetup-lyon_2017-09-28-14-320.jpg)

    from pyeclib.ec_iface import ECDriver

    def main():
        # Pick an EC library and configure it
        driver = ECDriver(k=12, m=3, ec_type='jerasure_rs_vand')

        # Encode (pyeclib expects bytes under Python 3)
        fragments = driver.encode(b'Hello World!')

        # Delete fragments (0, 1, 2): 12 of 15 remain, which is exactly k
        del fragments[:3]

        print("Fragment count: %d" % len(fragments))
        print("Original message: " + driver.decode(fragments).decode())

    if __name__ == '__main__':
        main()

Output:

    Fragment count: 12
    Original message: Hello World!
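To make the recovery step less opaque, here is a minimal, dependency-free sketch of the same idea using a much simpler code: `k` data fragments plus a single XOR parity fragment. This is not the Reed-Solomon scheme used by `jerasure_rs_vand`, and it can repair only one missing fragment; the `encode` and `decode` functions here are illustrative names, not the pyeclib API.

```python
from functools import reduce


def xor_bytes(a, b):
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data, k):
    """Split data into k zero-padded fragments plus one XOR parity fragment."""
    size = -(-len(data) // k)  # ceiling division
    padded = data.ljust(size * k, b"\0")
    fragments = [padded[i * size:(i + 1) * size] for i in range(k)]
    parity = reduce(xor_bytes, fragments)
    return fragments + [parity]


def decode(fragments, length):
    """Rebuild the data when at most one fragment (marked None) is missing."""
    missing = [i for i, f in enumerate(fragments) if f is None]
    if len(missing) > 1:
        raise ValueError("single parity can repair only one missing fragment")
    if missing:
        # XOR of all surviving fragments equals the missing one.
        present = [f for f in fragments if f is not None]
        fragments = list(fragments)
        fragments[missing[0]] = reduce(xor_bytes, present)
    # Drop the parity fragment and the zero padding.
    return b"".join(fragments[:-1])[:length]


message = b"Hello World!"
fragments = encode(message, k=4)
fragments[1] = None  # simulate a lost fragment
recovered = decode(fragments, len(message))
print(recovered)
```

Reed-Solomon generalizes this to `m` parity fragments, so that any `m` losses can be repaired rather than just one.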

![Slide 15: erasure code, decoding fails with four fragments missing](https://image.slidesharecdn.com/openstackmeetuplyon2017-09-28-170929115552/85/Openstack-meetup-lyon_2017-09-28-15-320.jpg)

    from pyeclib.ec_iface import ECDriver

    def main():
        # Pick an EC library and configure it
        driver = ECDriver(k=12, m=3, ec_type='jerasure_rs_vand')

        # Encode (pyeclib expects bytes under Python 3)
        fragments = driver.encode(b'Hello World!')

        # Delete fragments (0, 1, 2, 3): only 11 remain, fewer than k=12
        del fragments[:4]

        # Try to decode
        try:
            print("Original message: " + driver.decode(fragments).decode())
        except Exception as e:
            print(e)

    if __name__ == '__main__':
        main()

Output:

    Not enough fragments given in ECPyECLibDriver.decode
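The difference between the two decode examples comes down to a simple count: with a Reed-Solomon code, any `k` distinct fragments out of `k + m` are enough to rebuild the object. The check below is a small sketch of that rule; `can_decode` is a hypothetical helper, not a pyeclib function.

```python
K, M = 12, 3  # same parameters as the slides


def can_decode(available, k=K):
    """Return True if enough distinct fragment indexes survive to rebuild."""
    return len(set(available)) >= k


all_fragments = list(range(K + M))
for lost in (3, 4):
    surviving = all_fragments[lost:]  # drop the first `lost` fragments
    print(lost, len(surviving), can_decode(surviving))
```

Losing three fragments leaves 12, which is exactly `k`, so decoding succeeds; losing four leaves 11, and the driver reports that it does not have enough fragments.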

2. The archive middleware

![Slide 21: archive middleware, container listing with retrieval policy fields](https://image.slidesharecdn.com/openstackmeetuplyon2017-09-28-170929115552/85/Openstack-meetup-lyon_2017-09-28-21-320.jpg)

Request:

    GET /v1/AUTH_e80c212388cd4d509abc959643993b9f/archives?policy_extra=true HTTP/1.1
    Host: storage.gra1.cloud.ovh.net
    Accept: application/json
    X-Auth-Token: 3caec5b614a94326b0e9b847661e3d6a

Response body:

    [
        {
            "hash" : "l0dad6ursvjudy1ea4xyfftbwdsfqhqq",
            "policy_retrieval_state" : "unsealing",
            "bytes" : 1024,
            "last_modified" : "2017-02-24T10:09:12.026940",
            "policy_retrieval_delay" : 1851,
            "name" : "archive1.zip",
            "content_type" : "application/octet-stream"
        }
    ]
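A client could read the two policy fields from this listing to decide whether an archive is ready for download. The sketch below parses the response body shown on the slide using only the standard library. It assumes that `policy_retrieval_delay` is expressed in seconds, which the slide does not state, and `describe` is a hypothetical helper name.

```python
import json
from datetime import timedelta

# Response body copied from the slide.
listing_body = """
[
    {
        "hash": "l0dad6ursvjudy1ea4xyfftbwdsfqhqq",
        "policy_retrieval_state": "unsealing",
        "bytes": 1024,
        "last_modified": "2017-02-24T10:09:12.026940",
        "policy_retrieval_delay": 1851,
        "name": "archive1.zip",
        "content_type": "application/octet-stream"
    }
]
"""


def describe(entry):
    """Summarize the retrieval state of one listing entry."""
    state = entry["policy_retrieval_state"]
    delay = entry.get("policy_retrieval_delay")
    if state == "unsealing" and delay is not None:
        # Assumption: the delay is a number of seconds.
        remaining = timedelta(seconds=delay)
        return "%s: unsealing, available in about %s" % (entry["name"], remaining)
    return "%s: %s" % (entry["name"], state)


for entry in json.loads(listing_body):
    print(describe(entry))
```

Under that assumption, 1851 seconds is a little under 31 minutes, so a client would wait roughly that long before retrying the download.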