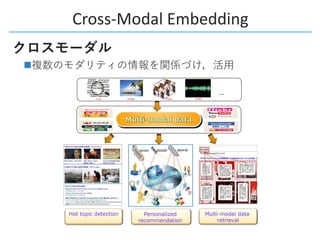

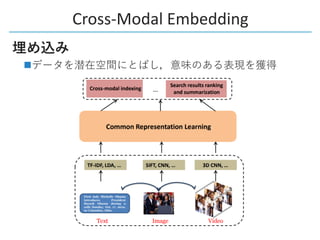

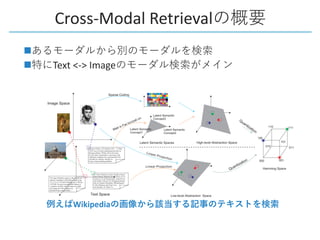

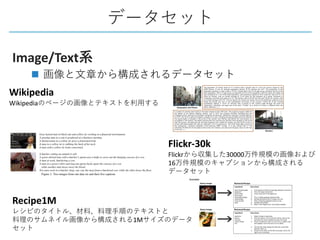

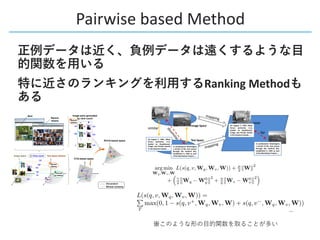

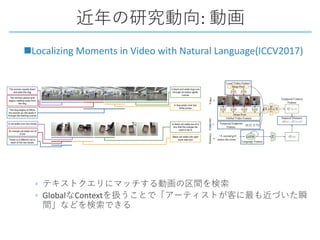

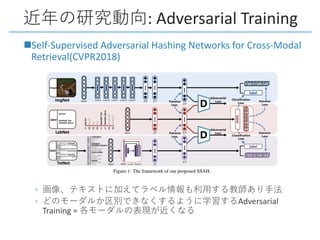

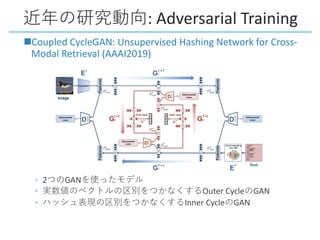

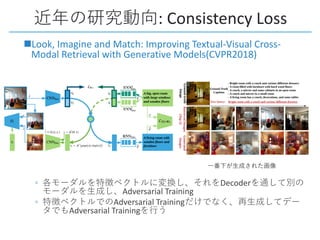

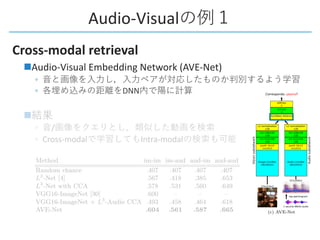

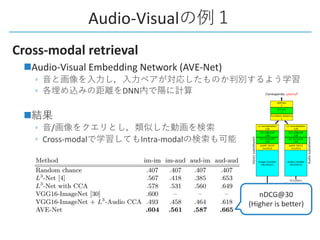

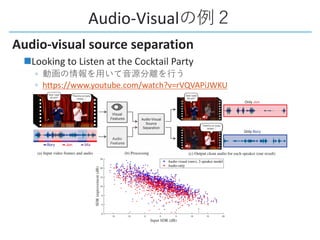

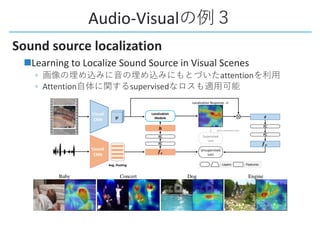

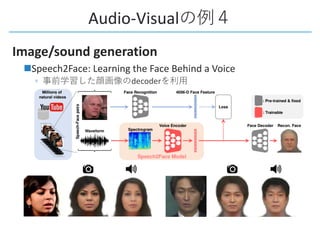

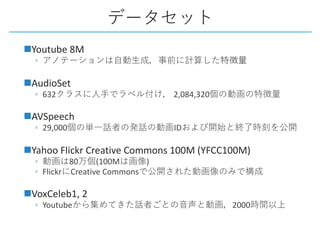

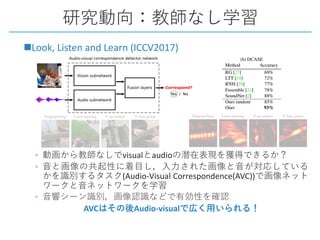

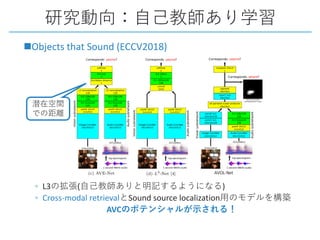

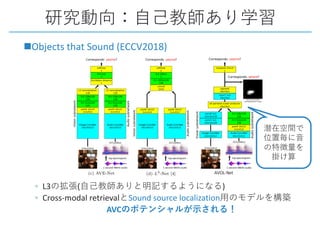

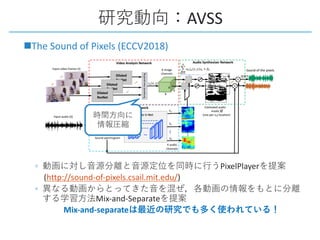

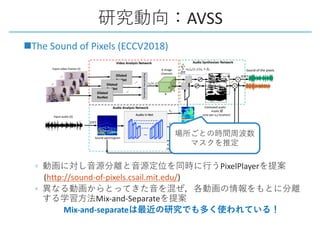

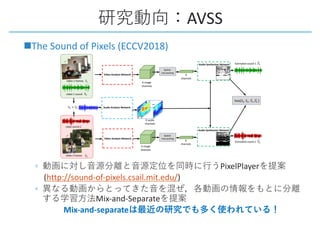

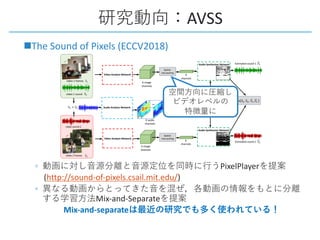

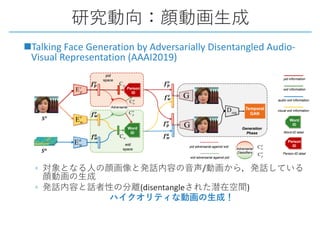

This document surveys research on cross-modal embedding and retrieval across media types such as text, images, video, and audio. It covers several key areas: cross-modal retrieval, which aims to retrieve relevant items in one media type given a query from another; audio-visual embedding, which learns joint representations of audio and video; and applications of audio-visual models such as sound localization, generation, and separation. It also summarizes several influential papers that use techniques such as adversarial training, consistency losses, and multimodal pretraining.
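To make the retrieval step concrete, here is a minimal sketch. It assumes that queries and gallery items have already been mapped into a shared embedding space by some trained encoders, which are not shown. It also assumes cosine similarity as the ranking score, a common choice that individual surveyed methods may replace with another score. The function names `cosine` and `retrieve`, the file names, and the vectors are hypothetical toy values. They are not taken from any of the surveyed papers.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def retrieve(
    query: Sequence[float],
    gallery: Dict[str, Sequence[float]],
    k: int,
) -> List[Tuple[str, float]]:
    """Rank gallery items (e.g. images) against a query embedding (e.g. from text).

    Assumes both modalities were already embedded into the same space;
    returns the top-k (item_id, score) pairs, highest score first.
    """
    scored = [(item_id, cosine(query, emb)) for item_id, emb in gallery.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical toy example: a text-query embedding searched against image embeddings.
text_query = [0.9, 0.1, 0.0]
image_gallery = {
    "dog.jpg": [1.0, 0.0, 0.0],
    "car.jpg": [0.0, 1.0, 0.0],
    "beach.jpg": [0.0, 0.0, 1.0],
}
top2 = retrieve(text_query, image_gallery, k=2)
print([item_id for item_id, _ in top2])  # ['dog.jpg', 'car.jpg']
```

The same ranking applies in either direction, for example image-to-text or audio-to-video, because the query and gallery only need to share an embedding space. Most of the modeling work surveyed here goes into learning that space. The ranking step itself stays simple.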