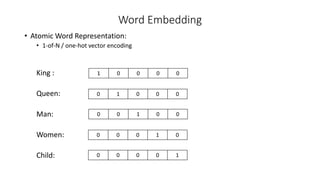

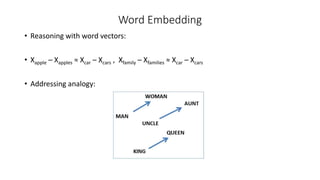

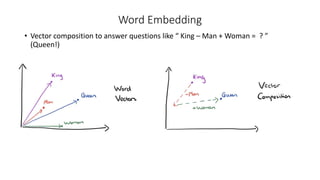

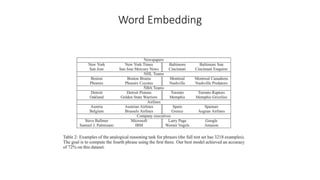

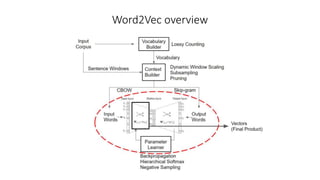

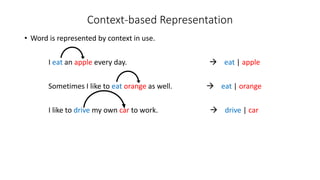

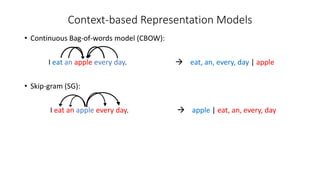

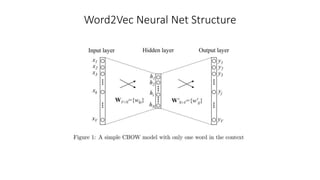

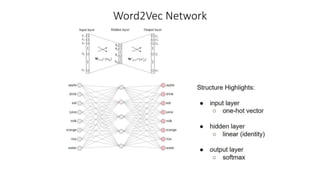

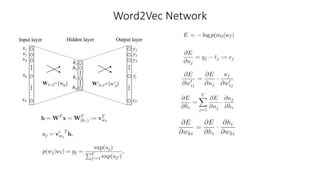

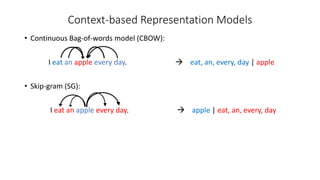

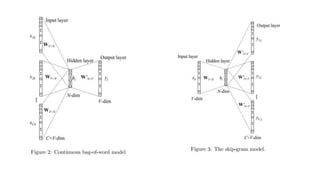

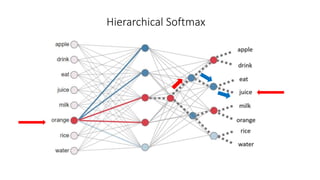

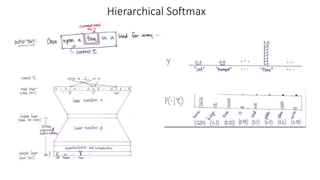

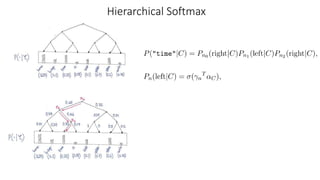

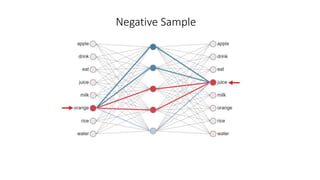

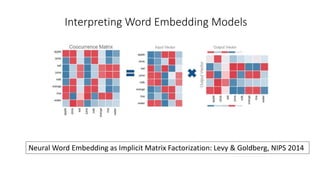

This document gives an overview of the Word2Vec network structure for generating word embeddings. It discusses how Word2Vec uses a shallow neural network model, either CBOW or Skip-gram, to represent words as dense vectors learned from the context words that surround them in a corpus. The network is trained to learn vector representations that can answer analogy-style questions such as "King - Man + Woman = Queen". The document also briefly outlines hierarchical softmax and negative sampling, two techniques that allow Word2Vec training to scale to large corpora.
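To make the summary concrete, here is a minimal NumPy sketch of three ideas it mentions: building Skip-gram (center, context) pairs, taking one gradient step with negative sampling, and answering an analogy query by cosine similarity. It is an illustration under stated assumptions, not the reference Word2Vec implementation. The function names (`skipgram_pairs`, `sgns_step`, `analogy`), the learning rate, and the hand-built two-dimensional `toy_vectors` are all hypothetical. The negatives are passed in by the caller, so how they are sampled is left open.

```python
import numpy as np

def skipgram_pairs(tokens, window=2):
    """Return (center, context) pairs for every token within a symmetric window."""
    pairs = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def sgns_step(W_in, W_out, center, context, negatives, lr=0.05):
    """One SGD step of Skip-gram with negative sampling.

    W_in holds the input (word) vectors, W_out the output (context) vectors.
    Updates both matrices in place and returns the loss measured before the update.
    """
    targets = np.asarray([context, *negatives], dtype=int)
    labels = np.zeros(len(targets))
    labels[0] = 1.0                      # true context = 1, negatives = 0

    v = W_in[center].copy()              # center word vector, shape (d,)
    u = W_out[targets]                   # output vectors, shape (k + 1, d)
    probs = sigmoid(u @ v)

    eps = 1e-10                          # guards against log(0)
    loss = -np.log(probs[0] + eps) - np.sum(np.log(1.0 - probs[1:] + eps))

    grad = probs - labels                # derivative of the loss w.r.t. each score
    # ufunc.at accumulates correctly even if a target index repeats.
    np.subtract.at(W_out, targets, lr * np.outer(grad, v))
    W_in[center] -= lr * (grad @ u)
    return loss

def analogy(vectors, a, b, c):
    """Return the word closest (by cosine) to vec(a) - vec(b) + vec(c), excluding a, b, c."""
    query = vectors[a] - vectors[b] + vectors[c]
    best_word, best_sim = None, -np.inf
    for word, vec in vectors.items():
        if word in (a, b, c):
            continue
        sim = vec @ query / (np.linalg.norm(vec) * np.linalg.norm(query) + 1e-10)
        if sim > best_sim:
            best_word, best_sim = word, sim
    return best_word

# Hand-built toy vectors (axes: "royalty", "gender"); not learned embeddings.
toy_vectors = {
    "king": np.array([1.0, 1.0]),
    "queen": np.array([1.0, -1.0]),
    "man": np.array([0.0, 1.0]),
    "woman": np.array([0.0, -1.0]),
    "apple": np.array([0.1, 0.0]),
}

print(skipgram_pairs([0, 1, 2], window=1))            # [(0, 1), (1, 0), (1, 2), (2, 1)]
print(analogy(toy_vectors, "king", "man", "woman"))   # queen
```

The point of `sgns_step` is that it touches only the true context word and a handful of negatives, so each update costs a few dot products rather than a full softmax over the vocabulary. That is the scaling benefit the summary attributes to negative sampling. In the original Word2Vec, negatives are drawn from the unigram distribution raised to the 3/4 power.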