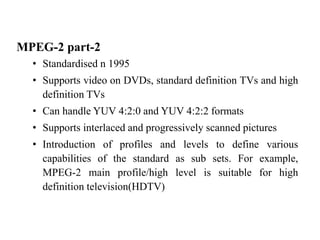

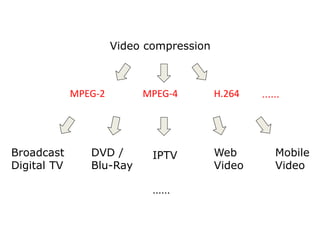

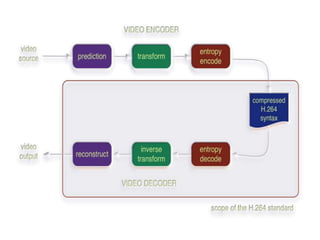

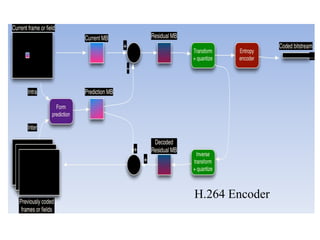

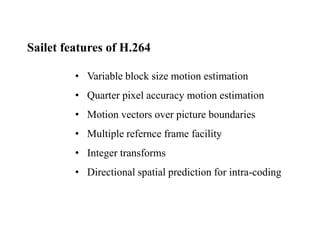

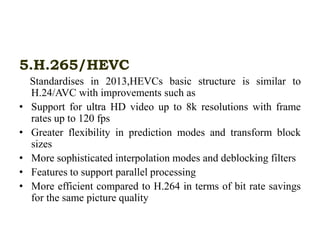

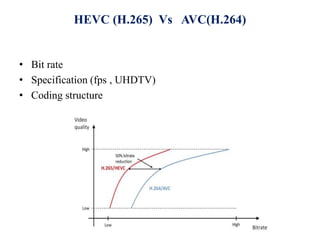

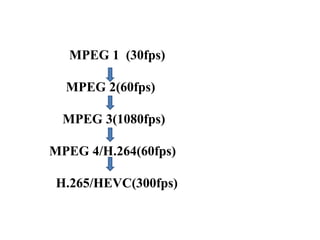

Video coding standards define the bitstream syntax and the decoding process for compressed video. Widely used standards, developed by ISO/IEC and ITU-T, include MPEG-1, MPEG-2, MPEG-4, H.264/AVC, and H.265/HEVC. A standard is developed in stages: identifying requirements, developing candidate algorithms, selecting the core techniques, validating them through testing, and publishing the specification. Standards enable interoperability between independently developed encoders and decoders, and they support decoding as new standards emerge.