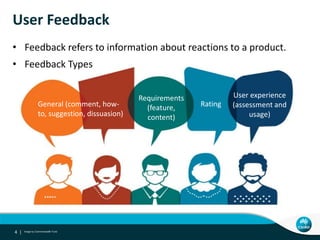

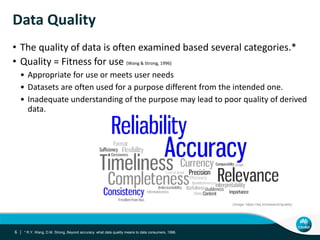

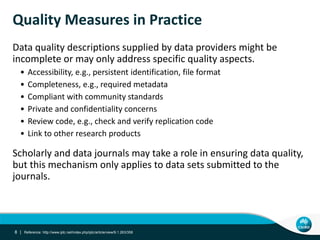

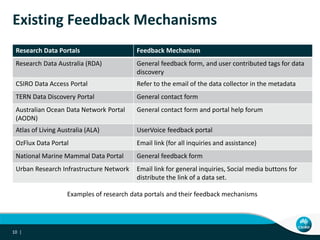

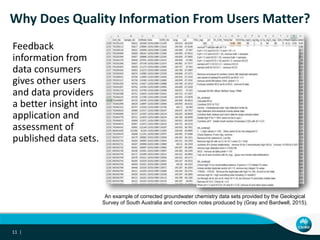

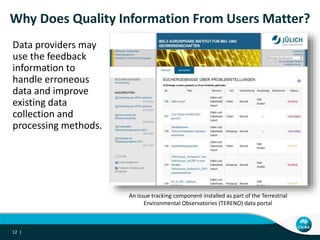

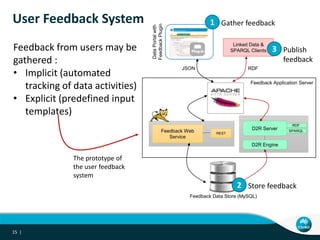

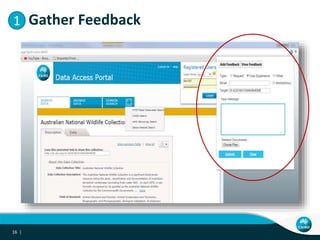

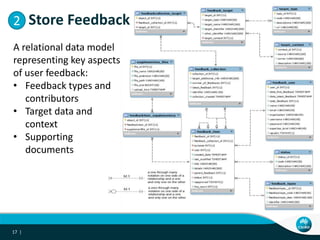

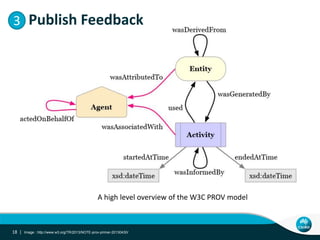

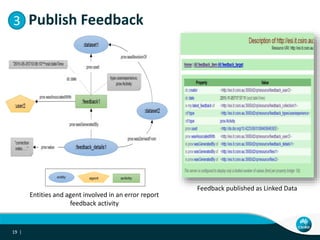

The document discusses approaches to improving the collection and dissemination of Earth science data quality information, emphasizing the role of user feedback in assessing and improving data quality. It outlines a systematic method for capturing user feedback linked to specific datasets, using a prototype user feedback system built with open-source technologies. The conclusions highlight the potential for this feedback to improve data collection and processing methods, and to be integrated with other sources of data quality information on the web.