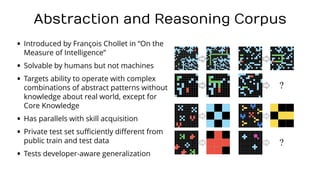

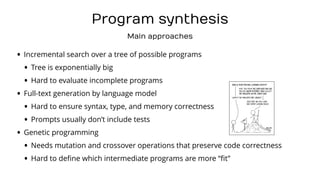

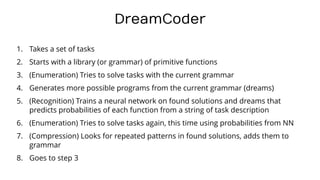

The document outlines Andrey Zakharevich's path into program synthesis and his exploration of DreamCoder and ARC. It covers the main challenges and techniques of program synthesis: defining tasks, enumerating candidate programs, using a neural recognition model to guide search, and compressing found programs into reusable functions. It also describes the obstacles he encountered when moving to DreamCoder, opportunities for collaboration, and related work.

![DreamCoder: enumeration](https://image.slidesharecdn.com/andreyzakharevichmlstdreamcoderarc-220705065812-07cf87e0/85/Program-Synthesis-DreamCoder-and-ARC-8-320.jpg)

DreamCoder

- Takes a set of tasks of the same type and a grammar.
- Programs are expressions of typed lambda calculus with De Bruijn indexing.
- Search starts from a single hole (`??`) of the expected type.
- Every primitive in the grammar is checked for whether it can unify with the hole's type.
- All possibilities are weighted according to the grammar, and partial solutions are stored in a priority queue.
- When all holes are filled, the program is checked against all unsolved tasks.

Enumeration:

- `(??[list(int) -> list(int)])`
- `(lambda ??[list(int)])`
- `(lambda empty)`, `(lambda $0)`, `(lambda (cons ??[int] ??[list(int)]))`, …
- `(lambda (cons 0 ??[list(int)]))`, …
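The enumeration loop on this slide can be sketched in a few dozen lines of Python. This is a minimal illustration under stated assumptions, not DreamCoder's implementation. It uses a hypothetical four-primitive grammar (`empty`, `cons`, `0`, `1`) with uniform weights. Types are monomorphic, so "can this primitive unify with the hole?" reduces to comparing return types; the real system uses polymorphic types and full unification. All names (`GRAMMAR`, `VAR_WEIGHT`, `hole_options`, `expand`, `evaluate`, `show`, `search`) and the tuple encoding of terms are illustrative. As on the slide, `$i` is a De Bruijn index, so `$0` is the innermost bound variable.

```python
import heapq
import itertools
import math

# Types: "int", "list(int)", or ("->", arg, result) for curried function types.
INT, LIST = "int", "list(int)"

def split_arrows(t):
    """Peel argument types off a curried type: a -> b -> c gives ([a, b], c)."""
    args = []
    while isinstance(t, tuple):
        args.append(t[1])
        t = t[2]
    return args, t

# Hypothetical toy grammar: name -> (type, unnormalized weight, Python value).
GRAMMAR = {
    "empty": (LIST, 1.0, []),
    "cons": (("->", INT, ("->", LIST, LIST)), 1.0, lambda h: lambda t: [h] + t),
    "0": (INT, 1.0, 0),
    "1": (INT, 1.0, 1),
}
VAR_WEIGHT = 1.0  # assumed weight for choosing a bound variable $i

# Terms: ("hole", type, ctx) | ("lam", body) | ("app", f, x) | ("prim", name) | ("var", i).
# ctx holds the types of the bound variables; ctx[0] is the type of $0 (De Bruijn).

def hole_options(tp, ctx):
    """Every way to fill one hole, with log-probabilities normalized over the matches."""
    if isinstance(tp, tuple):  # an arrow-typed hole becomes a lambda; its argument is the new $0
        return [(0.0, ("lam", ("hole", tp[2], (tp[1],) + ctx)))]
    cands = [(("prim", n), t, w) for n, (t, w, _) in GRAMMAR.items()]
    cands += [(("var", i), t, VAR_WEIGHT) for i, t in enumerate(ctx)]
    matches = []
    for head, ctype, w in cands:
        args, ret = split_arrows(ctype)
        if ret == tp:  # monomorphic types, so "unification" reduces to equality
            for a in args:  # apply the candidate to one fresh hole per argument
                head = ("app", head, ("hole", a, ctx))
            matches.append((w, head))
    total = sum(w for w, _ in matches)
    return [(math.log(w / total), t) for w, t in matches]

def expand(term):
    """Fill the leftmost hole; return None if the term is already complete."""
    if term[0] == "hole":
        return hole_options(term[1], term[2])
    for i in range(1, len(term)):  # sub-terms of "lam"/"app", left to right
        if isinstance(term[i], tuple):
            sub = expand(term[i])
            if sub is not None:
                return [(lp, term[:i] + (c,) + term[i + 1:]) for lp, c in sub]
    return None

def evaluate(term, env=()):
    """Evaluate a complete term; env[i] holds the value bound to $i."""
    if term[0] == "var":
        return env[term[1]]
    if term[0] == "lam":
        return lambda x: evaluate(term[1], (x,) + env)
    if term[0] == "app":
        return evaluate(term[1], env)(evaluate(term[2], env))
    return GRAMMAR[term[1]][2]  # "prim"

def show(term):
    """Print a term in the slide's notation, e.g. (lambda (cons 0 ??[list(int)]))."""
    if term[0] == "hole":  # first-order types only, which is all this toy grammar has
        args, ret = split_arrows(term[1])
        return "??[" + " -> ".join(args + [ret]) + "]"
    if term[0] == "lam":
        return f"(lambda {show(term[1])})"
    if term[0] != "app":
        return f"${term[1]}" if term[0] == "var" else term[1]
    args = []  # flatten curried ((f a) b) into (f a b)
    while term[0] == "app":
        args.append(show(term[2]))
        term = term[1]
    return "(" + " ".join([show(term)] + args[::-1]) + ")"

def search(request, tasks, max_pops=10_000):
    """Best-first search from a single hole; tasks maps names to (input, output) pairs."""
    tick = itertools.count()  # tie-breaker so heapq never compares terms
    queue = [(0.0, next(tick), ("hole", request, ()))]  # (cost = -log p, tie, term)
    unsolved, solved = dict(tasks), {}
    while queue and unsolved and max_pops > 0:
        max_pops -= 1
        cost, _tie, term = heapq.heappop(queue)
        options = expand(term)
        if options is None:  # all holes filled: check against every unsolved task
            f = evaluate(term)
            for name, examples in list(unsolved.items()):
                if all(f(x) == y for x, y in examples):
                    solved[name] = (show(term), -cost)  # program and its log-probability
                    del unsolved[name]
            continue
        for lp, child in options:
            heapq.heappush(queue, (cost - lp, next(tick), child))
    return solved
```

Expanding `("hole", ("->", LIST, LIST), ())` step by step reproduces the slide's sequence: first `(lambda ??[list(int)])`, then `(lambda empty)`, `(lambda (cons ??[int] ??[list(int)]))`, and `(lambda $0)`, then `(lambda (cons 0 ??[list(int)]))` and so on. Calling `search(("->", LIST, LIST), tasks)` with input/output examples for identity and for prepending a zero returns `(lambda $0)` and `(lambda (cons 0 $0))`. Each expansion multiplies the prior by a probability of at most 1, so costs never decrease along a path. Popping the cheapest entry therefore yields complete programs in order of decreasing prior probability under the toy weights.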