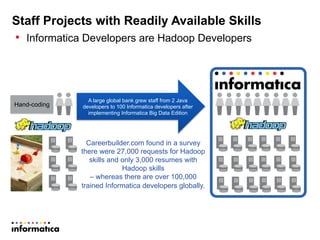

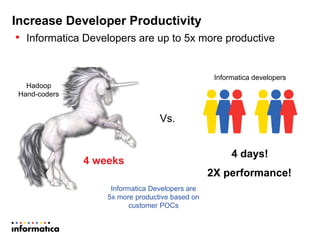

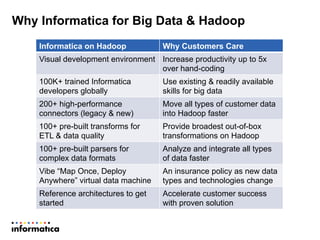

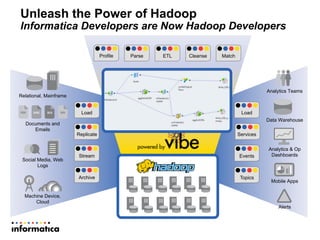

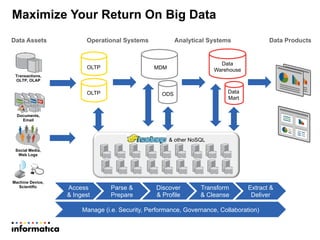

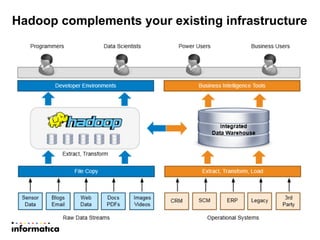

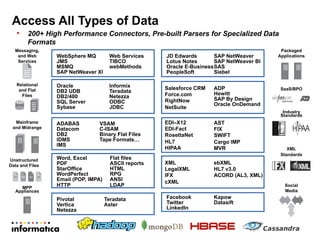

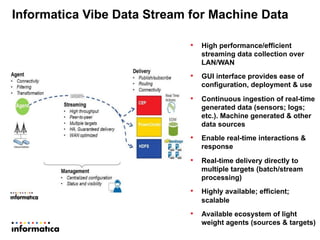

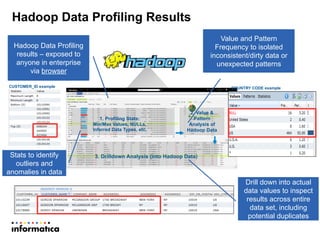

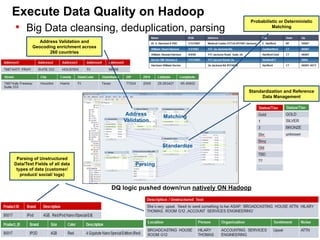

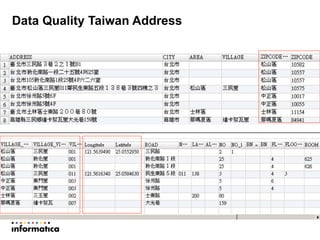

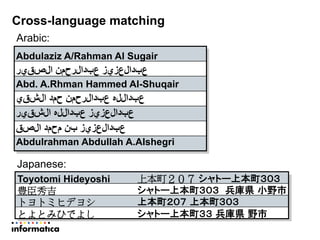

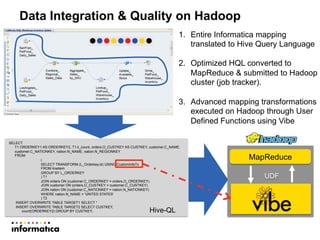

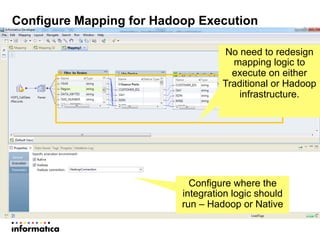

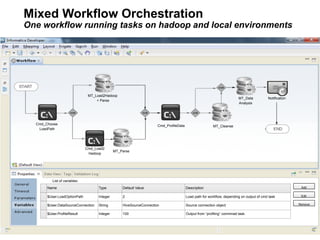

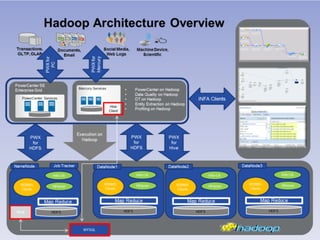

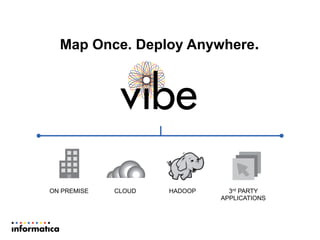

This document discusses building a new generation of intelligent data platforms. It states that most big data projects spend 80% of their time on data integration and data quality, and it claims that Informatica developers are five times more productive than developers who code by hand for Hadoop. The document promotes Informatica's tools, which let existing developers work with big data platforms such as Hadoop through visual interfaces and pre-built connectors and transformations.