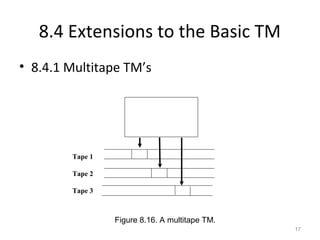

This document discusses programming techniques for Turing machines (TMs), including storing data in states, using multiple tracks, and implementing subroutines. It also covers extensions to basic TMs, such as multitape and nondeterministic TMs. Restricted TMs like those with semi-infinite tapes, stack machines, and counter machines are also examined. Finally, the document informally argues that TMs and modern computers are equally powerful models of computation.

8.3 Programming Techniques for TM’s

• 8.3.1 Storage in the State

– Technique: use the finite control of a TM to hold a finite amount of data, in addition to the state (which represents a position in a TM “program”).

– Method: think of the state as a tuple such as [q, A, B, C] when the finite control holds three data elements A, B, and C. See Figure 8.13.

– Example 8.6: Design a TM to recognize 01* + 10*.

The states are of the form [qi, X], where qi = q0, q1 and X = 0, 1, B.

• The control portion qi remembers what the TM is doing: q0 means it has not yet read the first symbol; q1 means the reverse.

• The data portion X remembers the first symbol seen (0 or 1).

– Example 8.6 (cont’d): The transition function δ is as follows.

• δ([q0, B], a) = ([q1, a], a, R) for a = 0, 1. --- Copying the scanned symbol into the data portion of the state.

• δ([q1, a], ā) = ([q1, a], ā, R), where ā is the complement of a, for a = 0, 1. --- Skipping symbols that are complements of the first symbol read (stored in the state as a).

• δ([q1, a], B) = ([q1, B], B, R) for a = 0, 1. --- Entering the state [q1, B] on reaching the first blank.
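The three rules above can be tried out with a minimal Python sketch. It assumes, as in the textbook's version of this example, that [q1, B] is the only accepting state and that the machine rejects whenever δ is undefined. The names `delta` and `accepts` are illustrative and do not come from the slides.

```python
BLANK = "B"
DIGITS = ("0", "1")

def delta(state, symbol):
    """Transition function of Example 8.6; returns None where it is undefined."""
    control, stored = state
    if control == "q0" and stored == BLANK and symbol in DIGITS:
        # Copy the scanned symbol into the data part of the state.
        return ("q1", symbol), symbol, "R"
    if control == "q1" and stored in DIGITS:
        if symbol == BLANK:
            # First blank reached: enter [q1, B].
            return ("q1", BLANK), BLANK, "R"
        if symbol in DIGITS and symbol != stored:
            # Skip a symbol that is the complement of the stored one.
            return ("q1", stored), symbol, "R"
    return None

def accepts(word):
    """Run the machine on `word`; assumes [q1, B] is the accepting state."""
    tape = list(word) + [BLANK]
    state, head = ("q0", BLANK), 0
    while state != ("q1", BLANK):
        step = delta(state, tape[head])
        if step is None:
            return False          # no move defined: halt without accepting
        state, tape[head], _move = step
        head += 1                 # every move of this machine is to the right
    return True
```

For example, `accepts("0111")` and `accepts("10")` hold, while `accepts("010")` does not, because the stored first symbol 0 reappears.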

– Example 8.6 (cont’d): Why does storing data in the states this way not increase the computing power of the TM?

Answer: The states [qi, X], with qi = q0, q1 and X = a, b, B, are just labels, so they can be renamed, for example, p1 = [q0, a], p2 = [q0, b], p3 = [q0, B], …. After renaming, the machine is an ordinary TM.
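The renaming argument can be made concrete with a short Python sketch. It enumerates the finitely many compound states and gives each one a plain name. The helper `flatten` and the one-rule table `compound` are hypothetical, chosen only for illustration.

```python
from itertools import product

controls = ["q0", "q1"]
data = ["a", "b", "B"]

# Every compound state [q, X] gets an ordinary name p1, p2, ...
rename = {
    pair: f"p{i}"
    for i, pair in enumerate(product(controls, data), start=1)
}

def flatten(table):
    """Rewrite a transition table over compound states into one over plain names."""
    return {
        (rename[src], read): (rename[dst], write, move)
        for (src, read), (dst, write, move) in table.items()
    }

# One illustrative rule: delta([q0, B], a) = ([q1, a], a, R).
compound = {(("q0", "B"), "a"): (("q1", "a"), "a", "R")}
plain = flatten(compound)
```

Because `rename` is a one-to-one map over a finite set, the flattened table describes exactly the same moves; only the state names differ.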

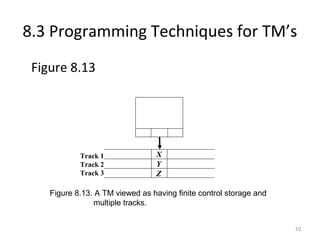

• 8.3.2 Multiple Tracks

– We may think of the tape of a TM as composed of several tracks.

– For example, if there are three tracks, we may use the tape symbol [X, Y, Z] (like that in Figure 8.13).

– Example 8.7 --- see the textbook. The TM recognizes the non-CFL language L = {wcw | w is in (0 + 1)⁺}.

– Why does the power of the TM not increase in this way?

Answer: it is just a kind of tape symbol labeling. Each tuple [X, Y, Z] is a single symbol of a larger, but still finite, tape alphabet.
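As a rough illustration of the two-track idea, the Python sketch below stores each tape cell as one compound symbol (mark, symbol): track 1 holds a check mark and track 2 holds the input. It is a plain loop over such a tape, not the textbook's transition table for Example 8.7, and the names `recognizes_wcw`, `CHECK`, and `BLANK` are assumptions made for this example.

```python
BLANK = "B"   # empty cell on track 1
CHECK = "*"   # check mark on track 1

def recognizes_wcw(word):
    """Decide membership in {wcw | w in (0+1)+} using a two-track tape."""
    if set(word) - {"0", "1", "c"} or word.count("c") != 1:
        return False
    # Each cell is a single compound tape symbol [mark, symbol].
    tape = [(BLANK, ch) for ch in word]
    c_pos = word.index("c")
    left, right = 0, c_pos + 1
    while left < c_pos:
        # Compare the next unchecked symbol on each side of c.
        if right >= len(tape) or tape[right][1] != tape[left][1]:
            return False
        tape[left] = (CHECK, tape[left][1])
        tape[right] = (CHECK, tape[right][1])
        left += 1
        right += 1
    # Accept only if w is nonempty and nothing to the right of c is unchecked.
    return c_pos > 0 and all(mark == CHECK for mark, _ in tape[c_pos + 1:])
```

The second track never changes; all bookkeeping lives in the first track, which is why the pair can be treated as one symbol of a finite alphabet with 2 × 3 = 6 members.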