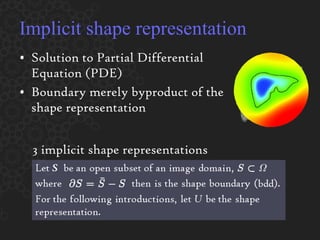

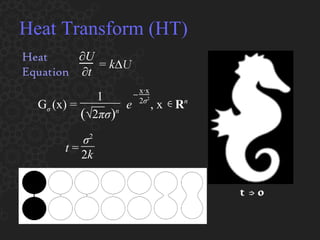

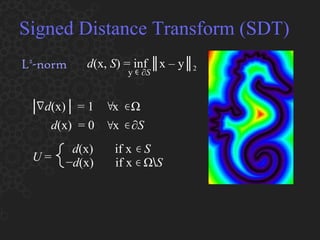

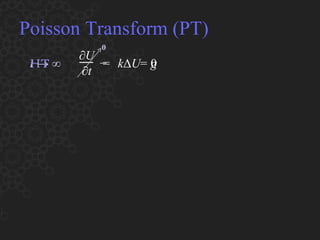

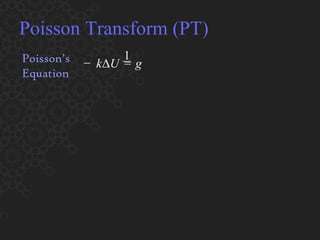

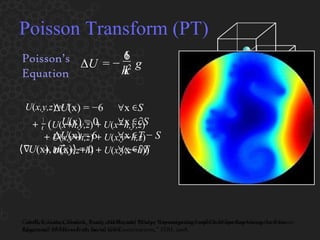

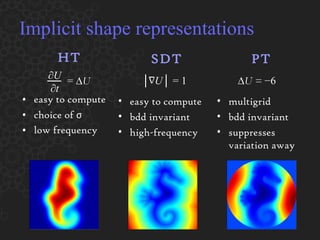

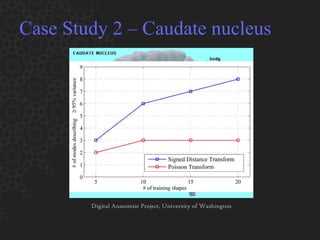

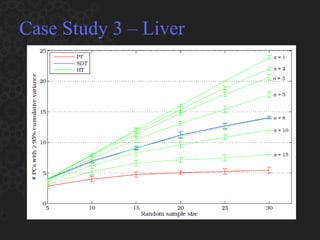

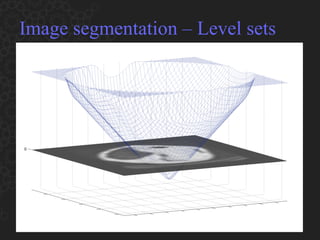

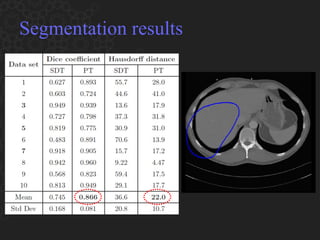

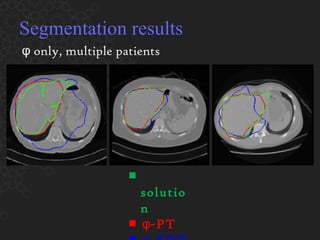

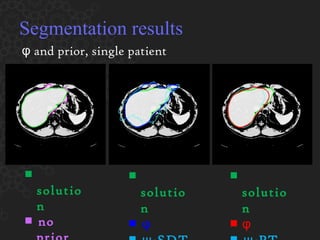

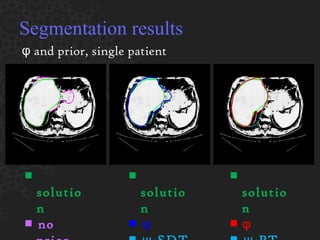

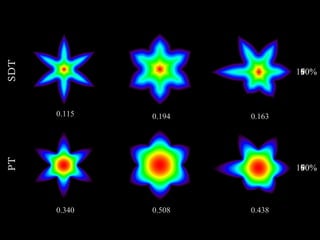

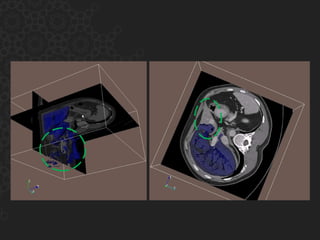

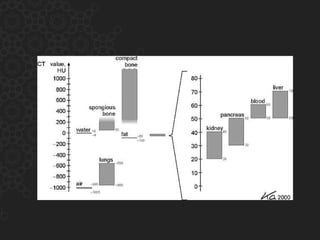

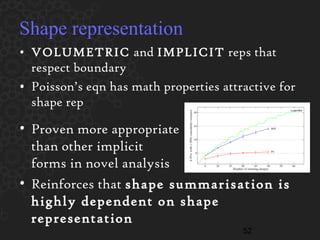

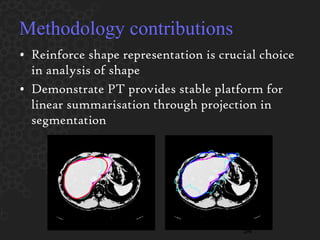

1. The document discusses implicit shape representations for liver segmentation from CT scans, comparing heat, signed distance, and Poisson transforms.
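The three representations can be made concrete on a small 2-D mask. The following is a minimal sketch under stated assumptions, not the document's implementation. The signed distance uses the "negative inside" convention and is computed by brute force. The Poisson transform follows the common formulation Δu = -1 inside the shape with u = 0 outside, solved by Jacobi iteration. The "heat transform" is *assumed* here to mean diffusing the binary indicator for a fixed time, because the summary does not define it. The function names and the parameters `iters`, `steps`, and `dt` are hypothetical.

```python
import numpy as np

def signed_distance(mask):
    """Brute-force signed Euclidean distance map (negative inside, positive outside).

    Each pixel gets the distance to the nearest pixel of the opposite label.
    O(N^2) time/memory, so only suitable for small illustrative grids."""
    mask = mask.astype(bool)
    ins, outs = np.argwhere(mask), np.argwhere(~mask)

    def min_dist(p, q):
        # pairwise distances between point sets p (n, 2) and q (m, 2), min over q
        return np.sqrt(((p[:, None, :] - q[None, :, :]) ** 2).sum(axis=-1)).min(axis=1)

    phi = np.zeros(mask.shape)
    phi[mask] = -min_dist(ins, outs)
    phi[~mask] = min_dist(outs, ins)
    return phi

def poisson_transform(mask, iters=2000):
    """Jacobi iterations for Laplacian(u) = -1 inside, u = 0 outside (unit spacing)."""
    mask = mask.astype(bool)
    u = np.zeros(mask.shape)
    for _ in range(iters):
        p = np.pad(u, 1)  # zero padding keeps u = 0 beyond the image border
        neighbours = p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:]
        # 5-point stencil: sum(neighbours) - 4u = -1  =>  u = (sum + 1) / 4
        u = np.where(mask, (neighbours + 1.0) / 4.0, 0.0)
    return u

def heat_transform(mask, steps=20, dt=0.2):
    """ASSUMED definition: explicit diffusion u_t = Laplacian(u) of the indicator.

    dt <= 0.25 keeps the 2-D explicit scheme stable; edge padding gives a
    zero-flux (Neumann) image border."""
    u = mask.astype(float)
    for _ in range(steps):
        p = np.pad(u, 1, mode="edge")
        lap = p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:] - 4.0 * u
        u = u + dt * lap
    return u

# Usage: a small disk-shaped stand-in for an organ mask (not liver data)
yy, xx = np.mgrid[:21, :21]
mask = (yy - 10) ** 2 + (xx - 10) ** 2 <= 36
sdt = signed_distance(mask)
poi = poisson_transform(mask)
heat = heat_transform(mask)
```

In this sketch the Poisson map is zero outside the shape and rises smoothly toward the interior. The signed distance is defined everywhere but has a gradient discontinuity along the medial axis.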

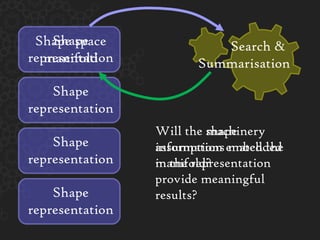

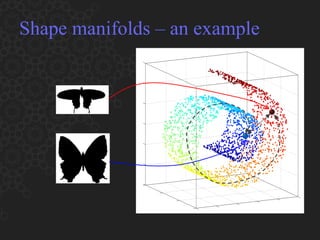

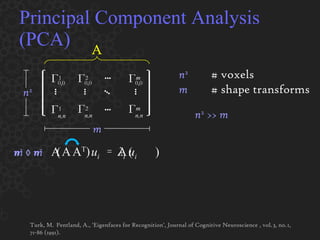

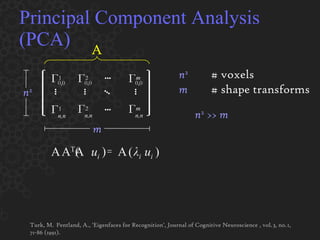

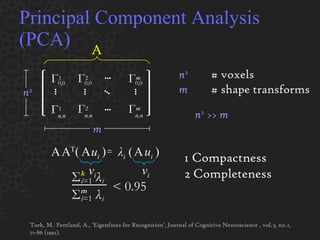

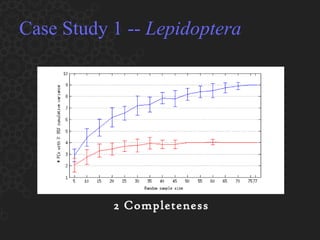

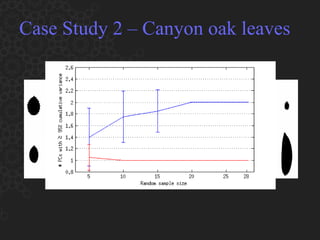

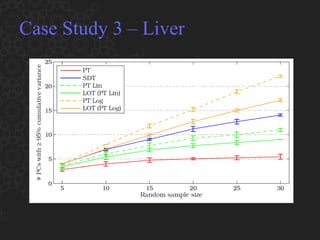

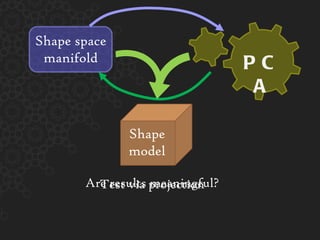

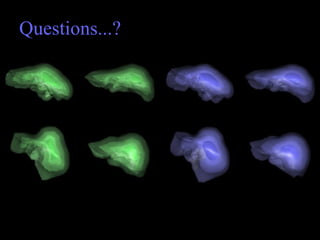

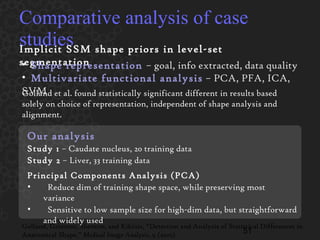

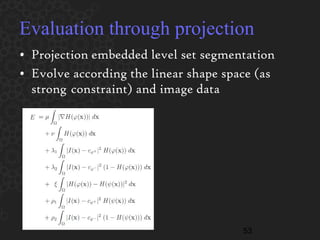

2. It evaluates these representations by using principal component analysis (PCA) to build a linear shape space from the training data.
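A linear shape space of this kind is usually built by flattening each training shape's implicit map into a vector, subtracting the mean, and keeping the leading principal directions. The sketch below does this with an SVD. It assumes one implicit map per training shape, stacked into an `(n_train, H, W)` array. The function names `build_shape_space` and `synthesize` are hypothetical, and the training set of analytic disk signed distance maps is synthetic, chosen only for illustration.

```python
import numpy as np

def build_shape_space(samples, n_modes):
    """PCA shape space from training implicit maps.

    samples: (n_train, H, W) array, one implicit map per training shape.
    Returns the mean vector (H*W,), the leading principal modes as
    orthonormal rows (n_modes, H*W), and their variances."""
    X = samples.reshape(len(samples), -1).astype(float)
    mean = X.mean(axis=0)
    # Rows of Vt are the principal directions of the centred data matrix
    _, s, Vt = np.linalg.svd(X - mean, full_matrices=False)
    variances = s ** 2 / (len(X) - 1)
    return mean, Vt[:n_modes], variances[:n_modes]

def synthesize(mean, modes, b, shape):
    """Generate an implicit map from coefficients b: mean + sum_k b_k * mode_k."""
    return (mean + modes.T @ b).reshape(shape)

# Usage: hypothetical training set of signed distance maps of disks
yy, xx = np.mgrid[:32, :32]
rng = np.random.default_rng(0)
train = np.stack([
    np.hypot(yy - 16 - rng.uniform(-3, 3), xx - 16 - rng.uniform(-3, 3)) - rng.uniform(6, 10)
    for _ in range(20)
])
mean, modes, var = build_shape_space(train, n_modes=5)
# A new shape two standard deviations along the first mode
new_map = synthesize(mean, modes, np.array([2 * np.sqrt(var[0]), 0, 0, 0, 0]), (32, 32))
```

Because the modes are orthonormal, a shape is described by a handful of coefficients `b`. Any linear combination is again a valid implicit map, which is what makes a representation's suitability for PCA worth comparing.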

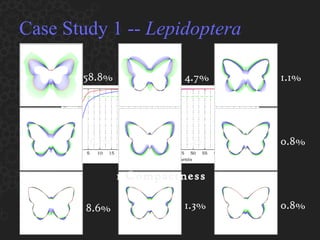

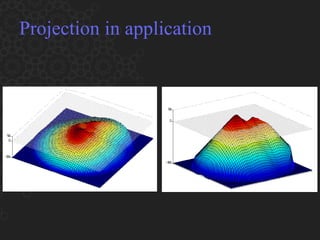

3. Results show that the Poisson transform provides the most stable and effective implicit representation for segmentation, outperforming the heat and signed distance transforms in experiments where new shapes are projected into the learned shape space.
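Projecting a new shape into the learned space means computing its PCA coefficients and reconstructing from them. One way to score a reconstruction is to threshold it back to a mask and compare it with the true mask. The sketch below uses the Dice overlap for this. Dice is an assumed metric, since the summary does not state how the experiments were scored. The helper names and the synthetic disk data are hypothetical.

```python
import numpy as np

def build_shape_space(samples, n_modes):
    """PCA shape space: mean map and leading orthonormal modes (rows)."""
    X = samples.reshape(len(samples), -1).astype(float)
    mean = X.mean(axis=0)
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:n_modes]

def project(implicit_map, mean, modes):
    """Orthogonal projection of a new implicit map onto the shape space."""
    b = modes @ (implicit_map.ravel() - mean)  # shape coefficients
    recon = (mean + modes.T @ b).reshape(implicit_map.shape)
    return recon, b

def dice(a, b):
    """Dice overlap between two binary masks (ASSUMED evaluation metric)."""
    a, b = a.astype(bool), b.astype(bool)
    return 2.0 * np.logical_and(a, b).sum() / (a.sum() + b.sum())

# Usage with hypothetical analytic signed distance maps of disks (inside: phi < 0)
yy, xx = np.mgrid[:32, :32]

def disk_sdf(cy, cx, r):
    return np.hypot(yy - cy, xx - cx) - r

rng = np.random.default_rng(1)
train = np.stack([disk_sdf(16 + rng.uniform(-3, 3), 16 + rng.uniform(-3, 3),
                           rng.uniform(6, 10)) for _ in range(20)])
mean, modes = build_shape_space(train, n_modes=5)

test_map = disk_sdf(17.0, 15.0, 8.0)     # shape not in the training set
recon, b = project(test_map, mean, modes)
score = dice(recon < 0, test_map < 0)    # overlap of the zero-level-set masks
```

To compare representations in this spirit, the same routine would be run on each representation's training maps, with a representation-specific inside test. For a Poisson map, which is zero outside the shape, a small positive threshold on the reconstruction is one plausible choice, but that threshold is an assumption.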