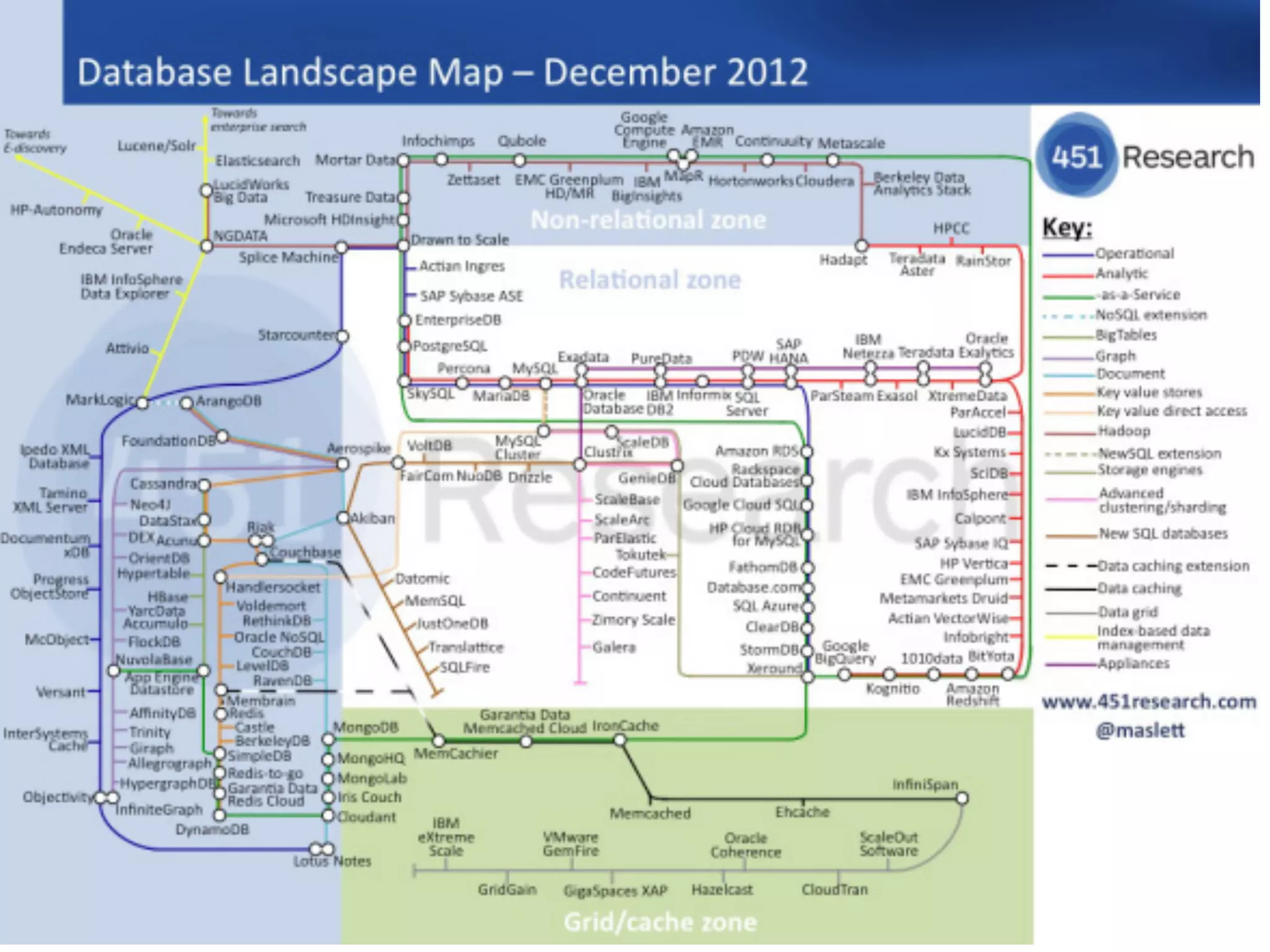

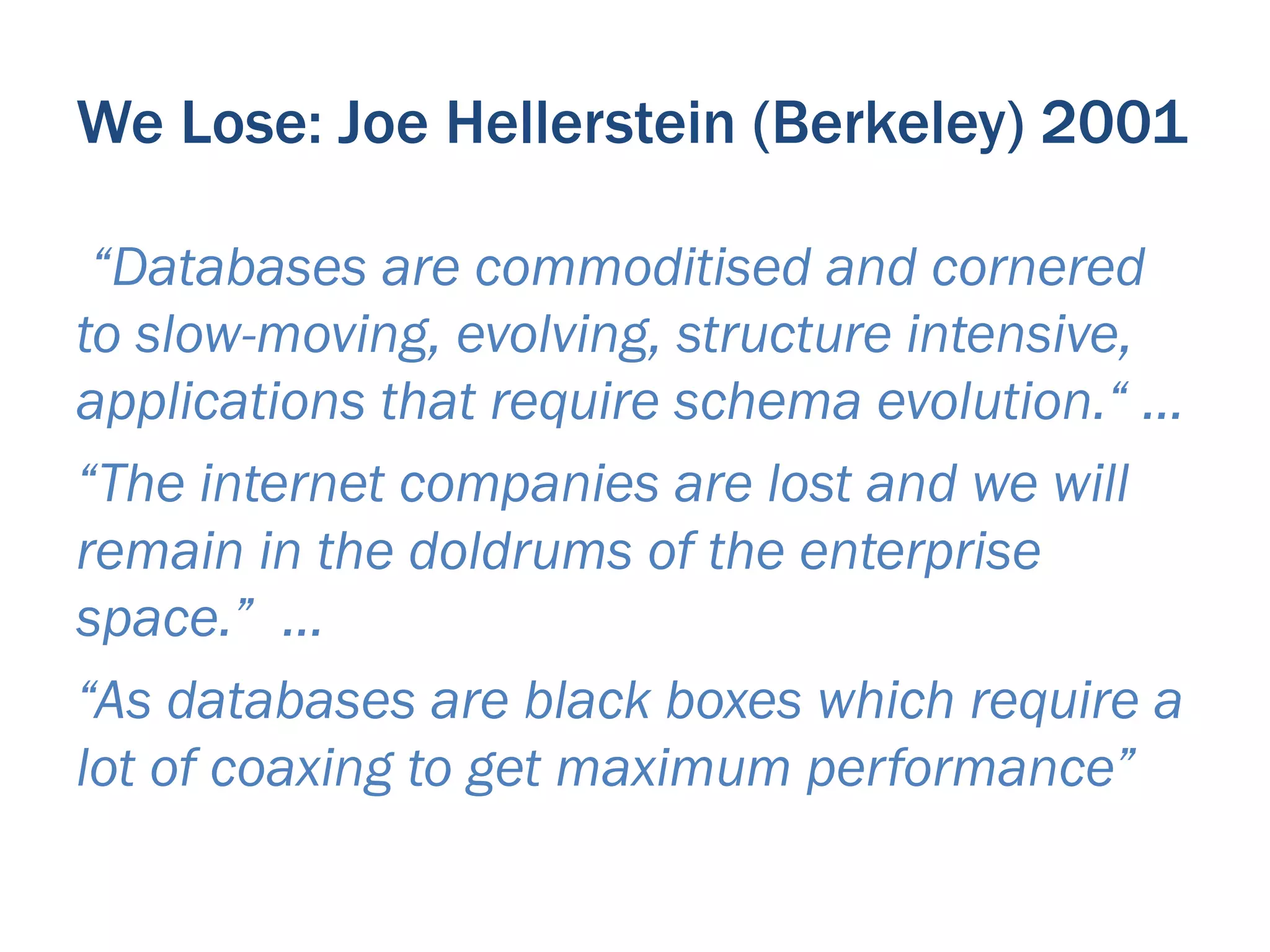

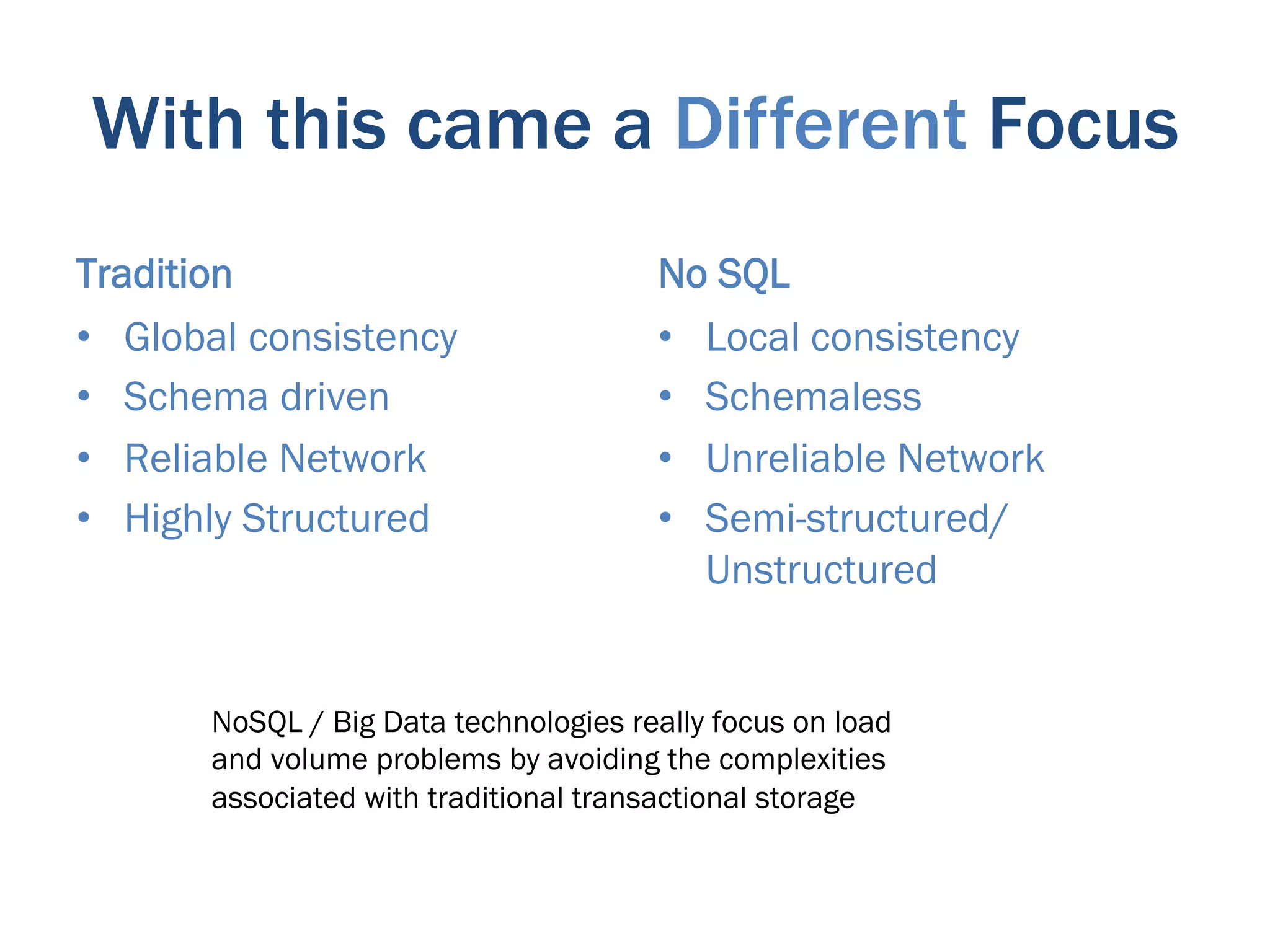

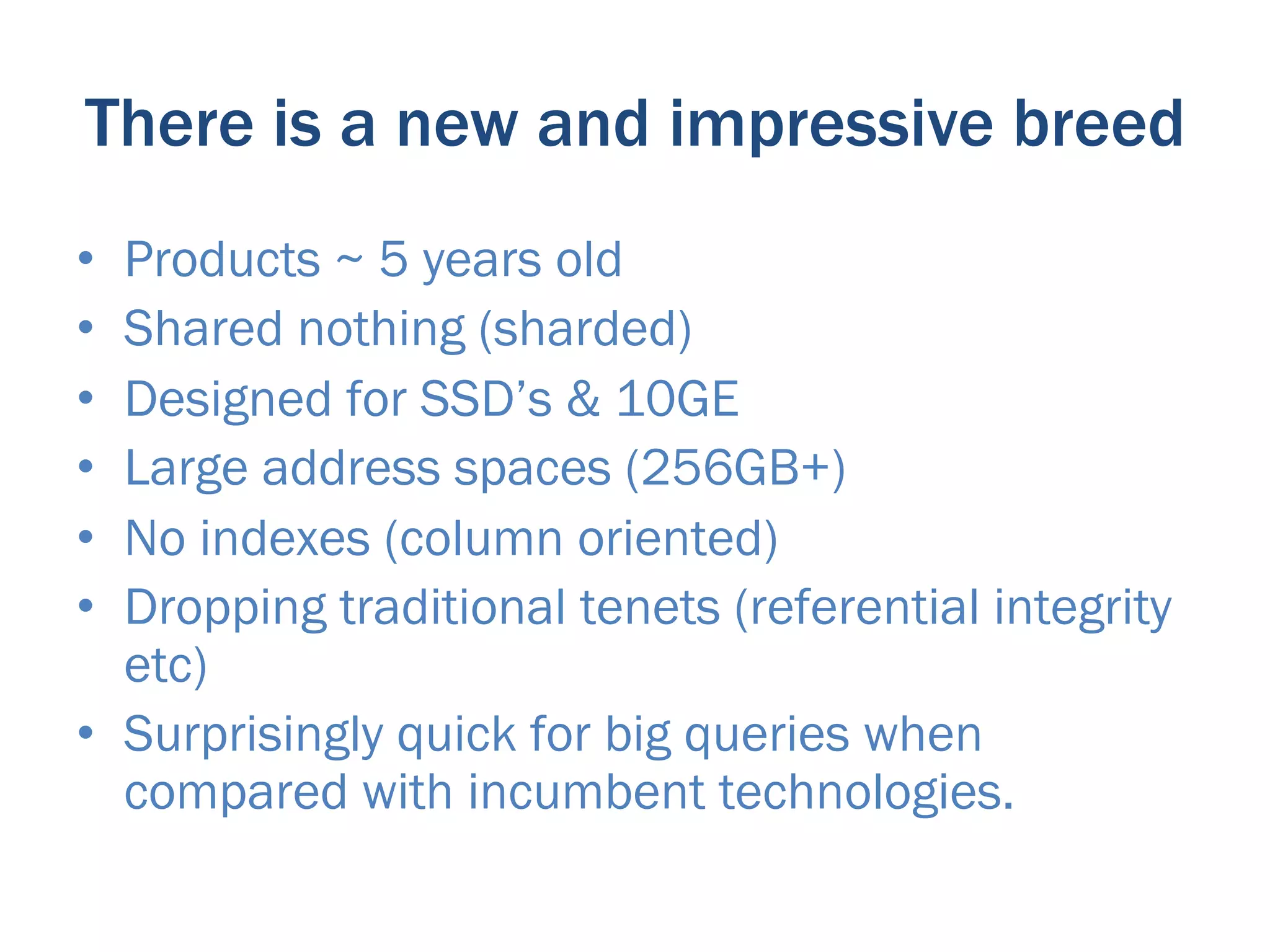

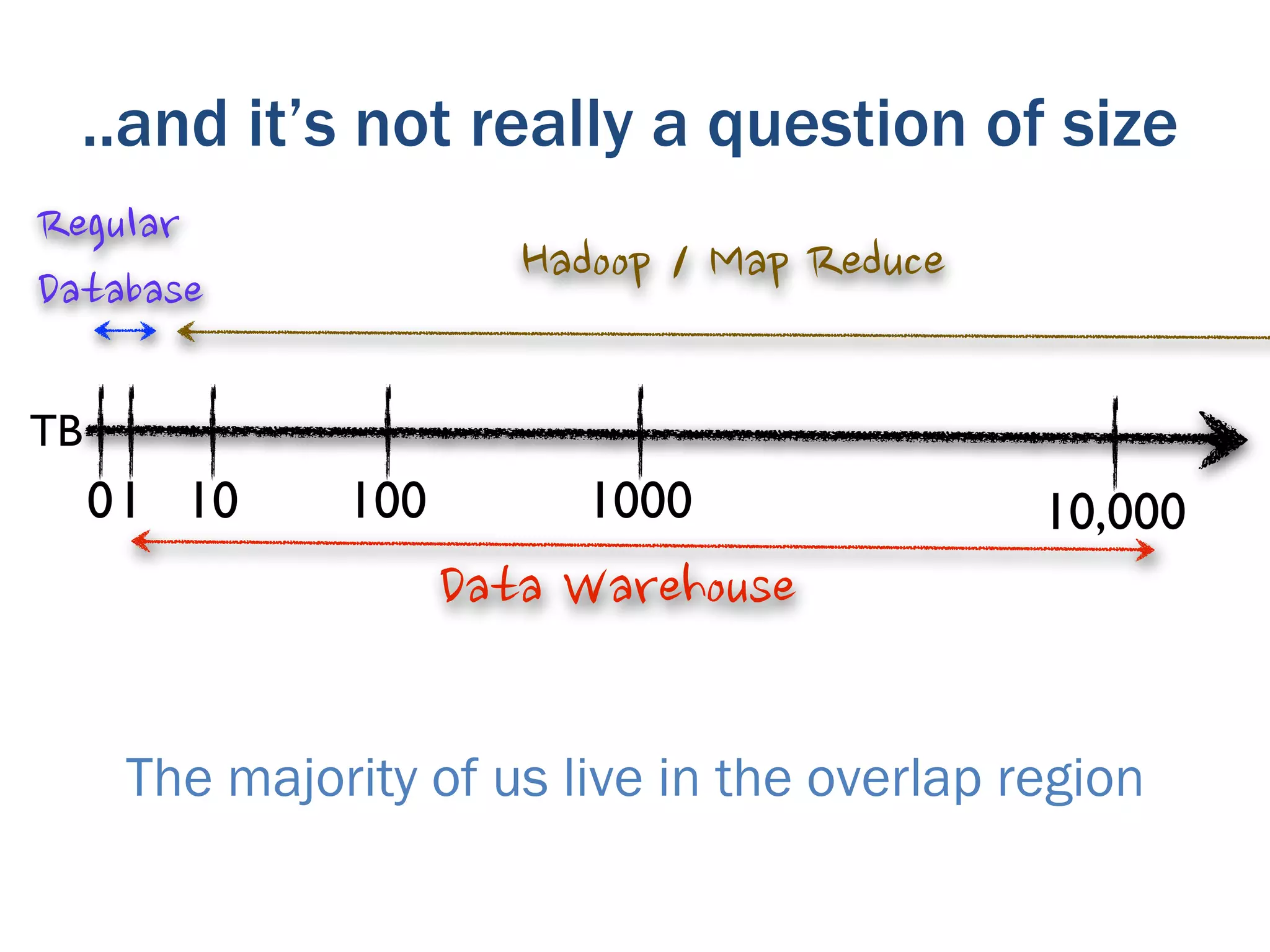

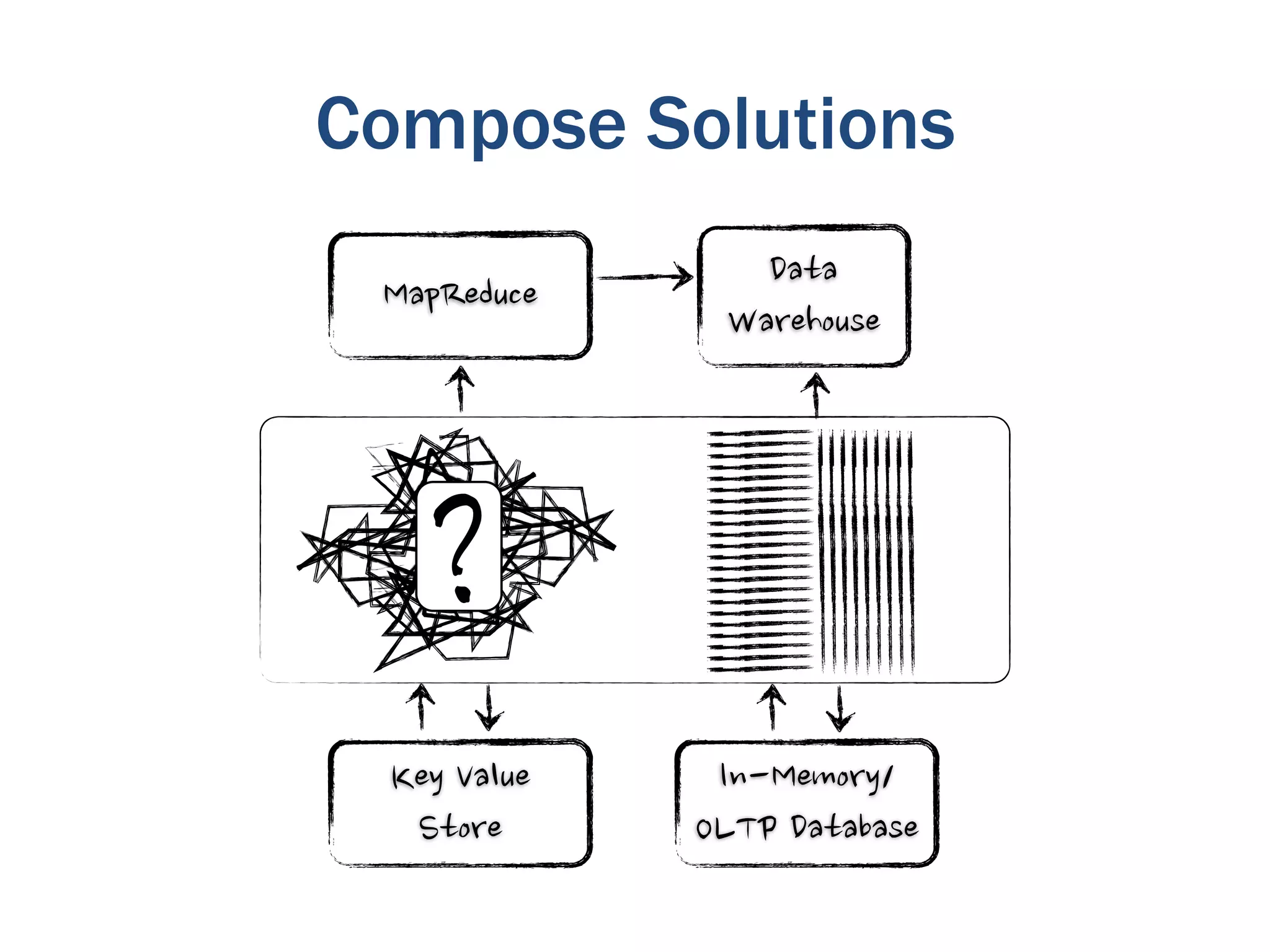

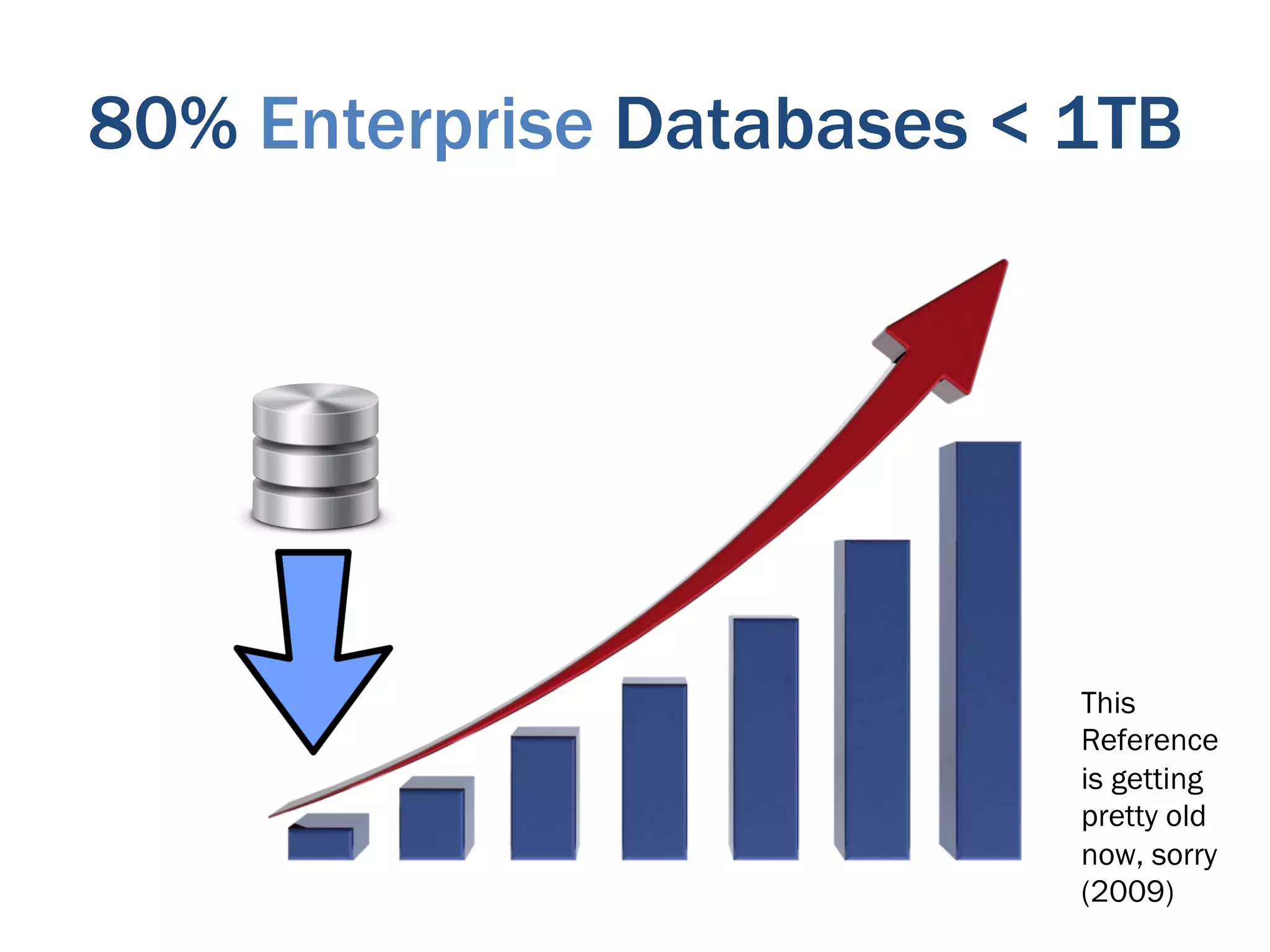

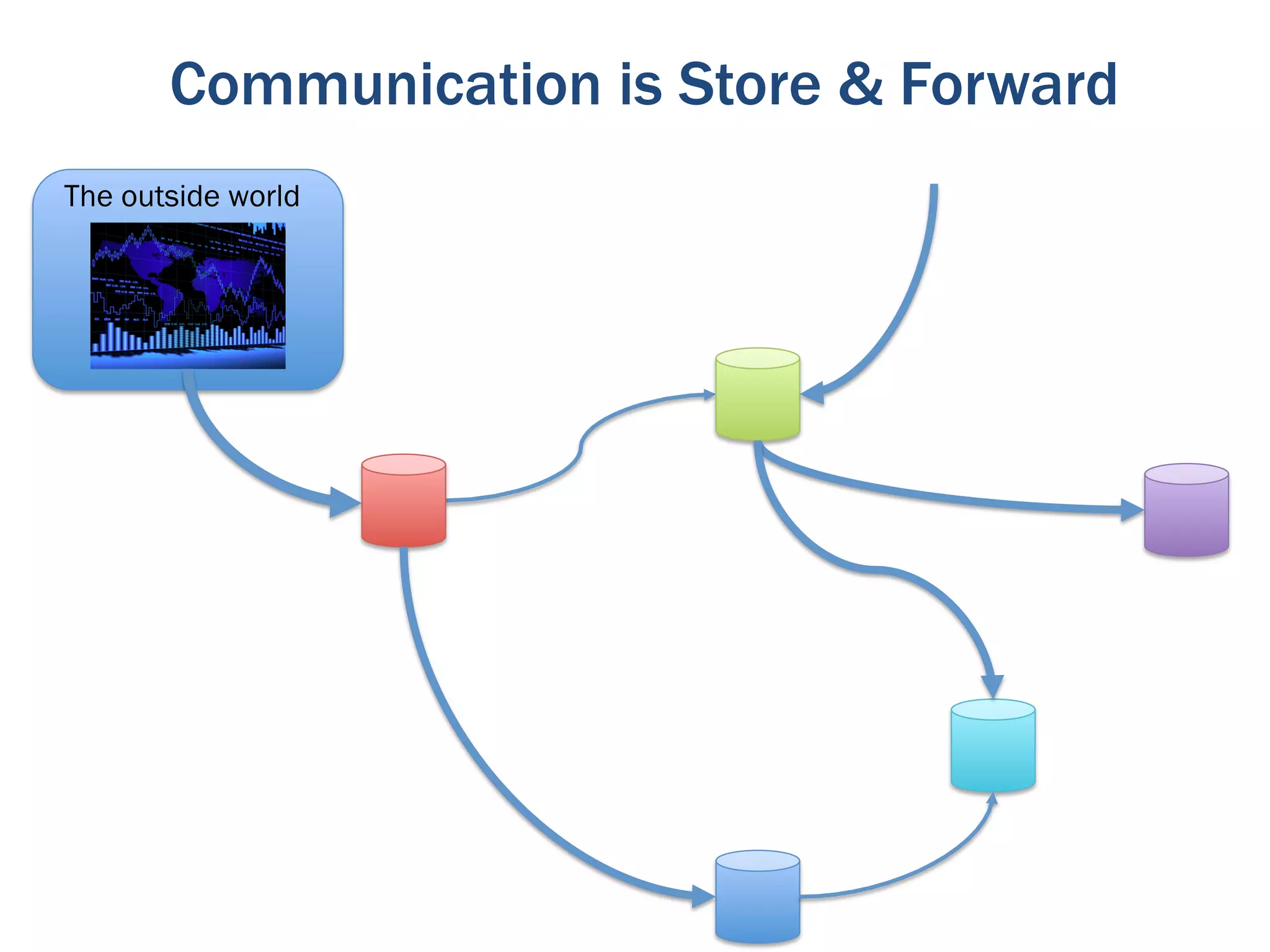

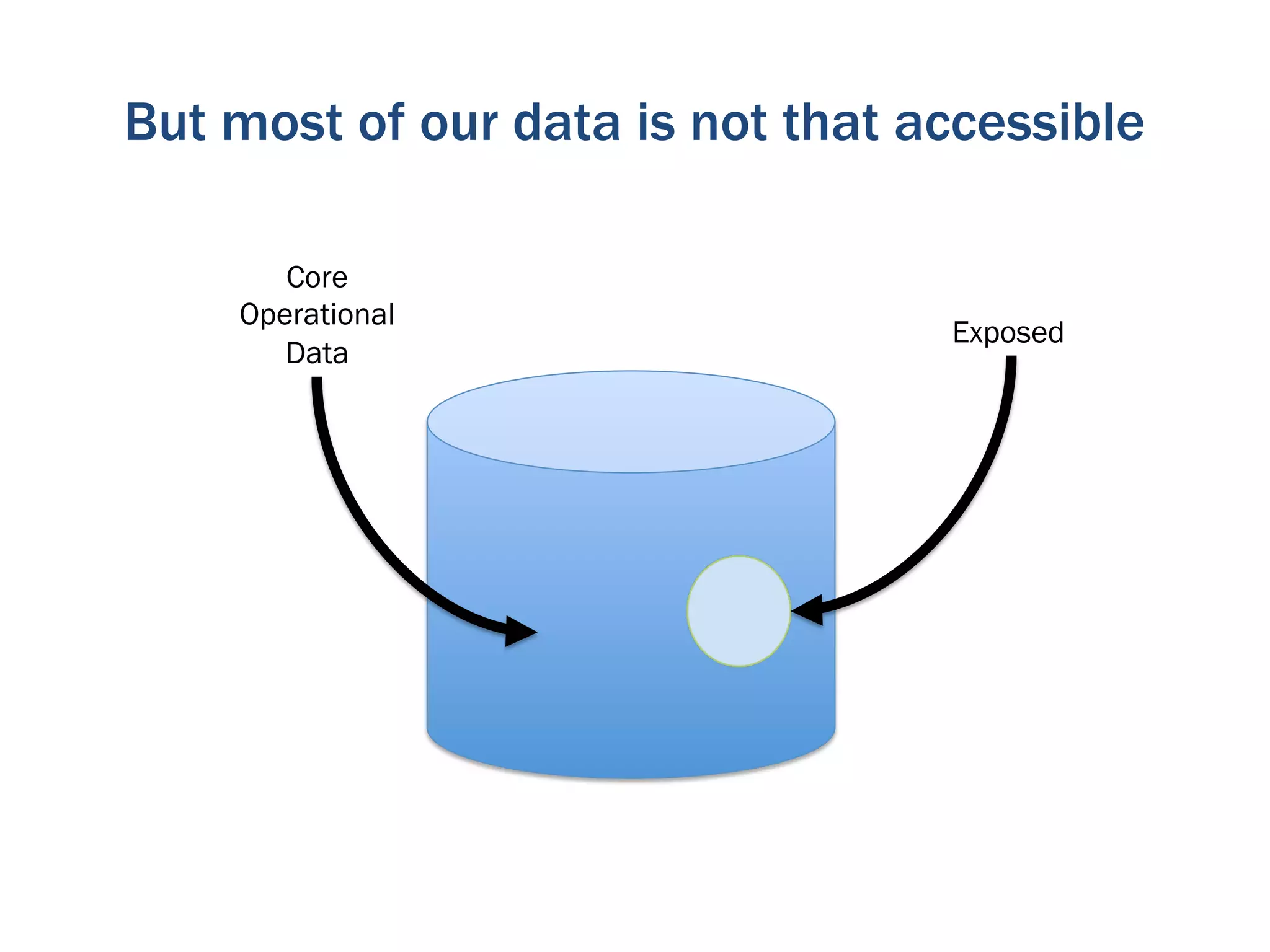

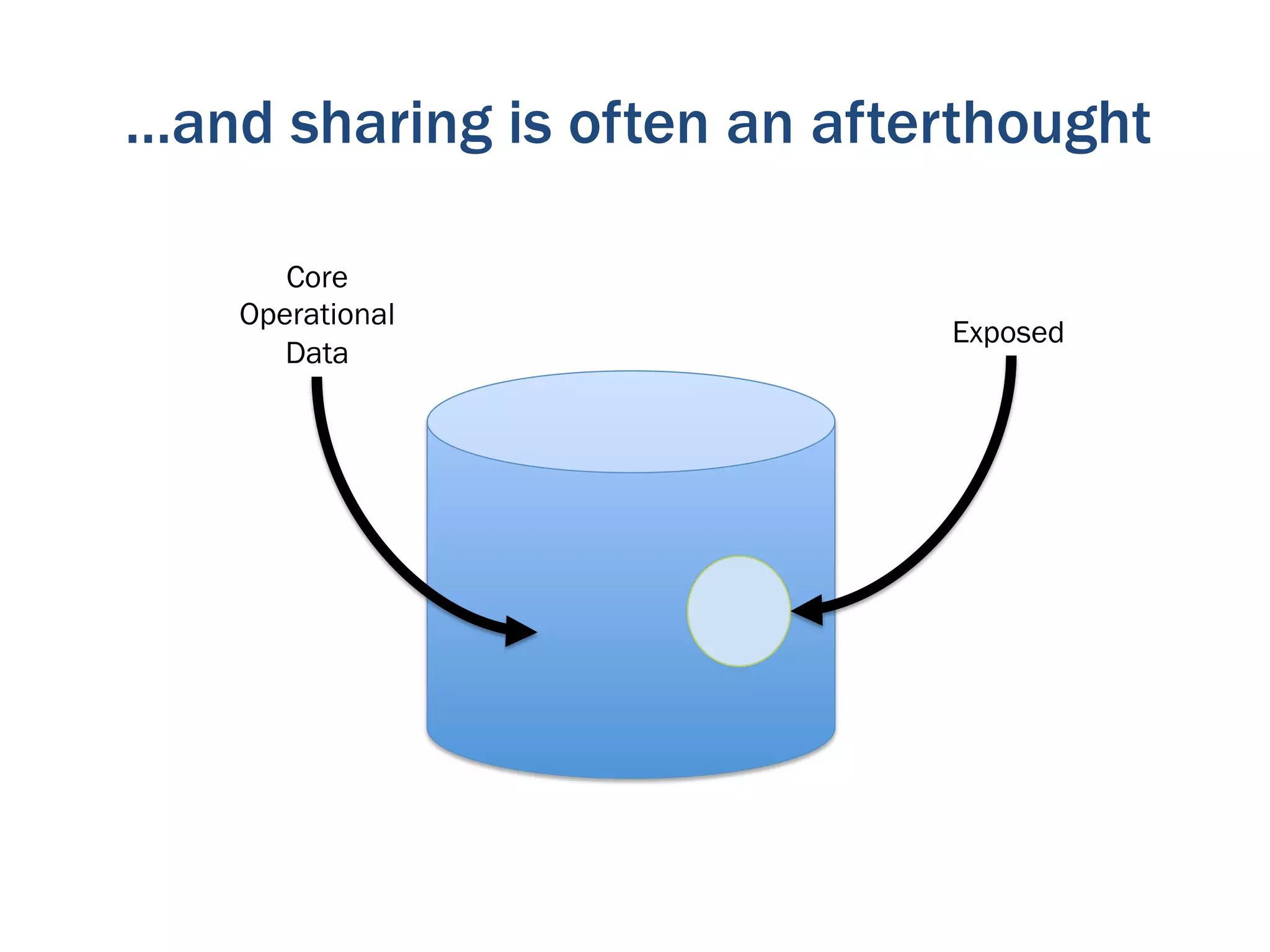

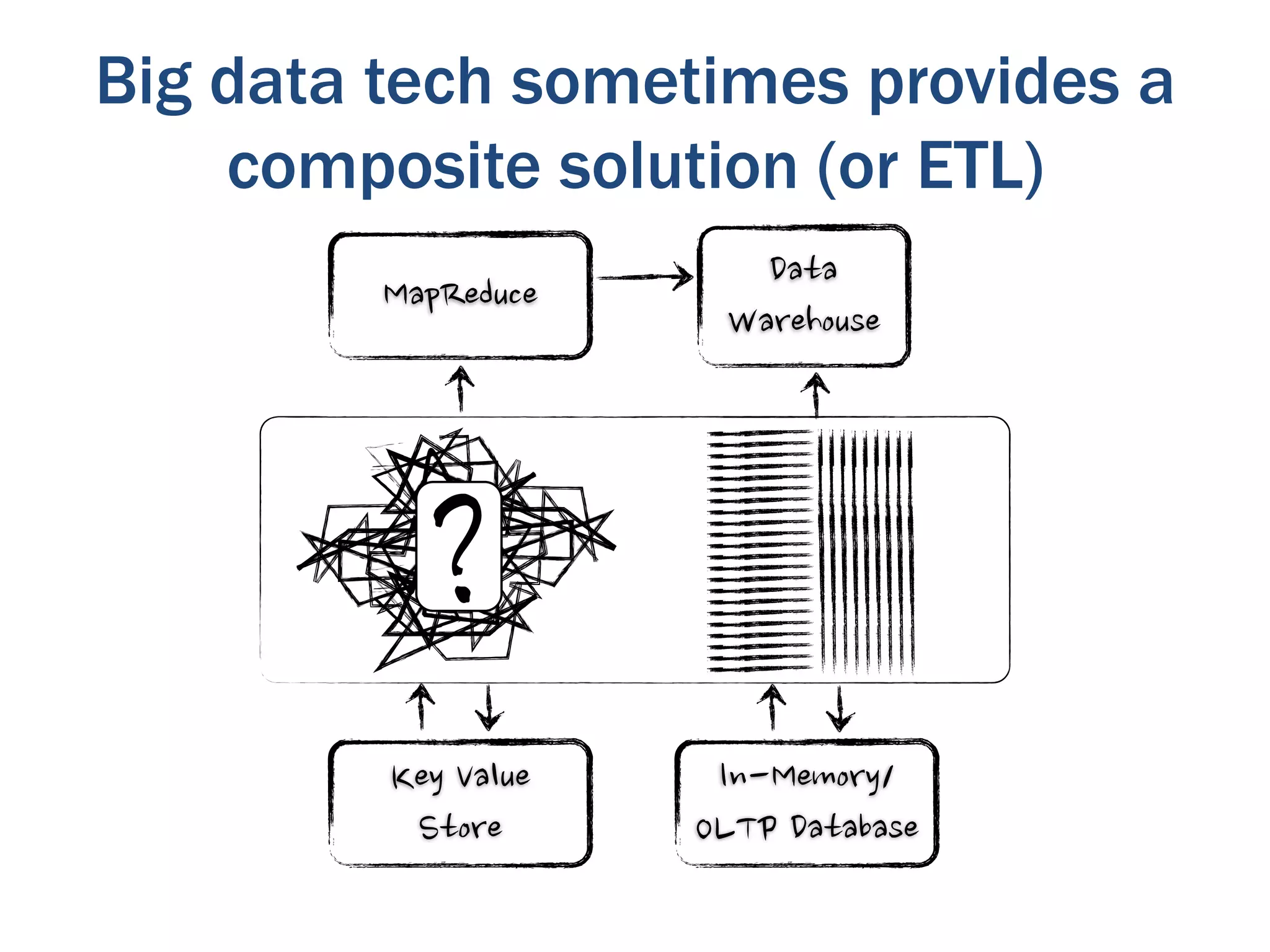

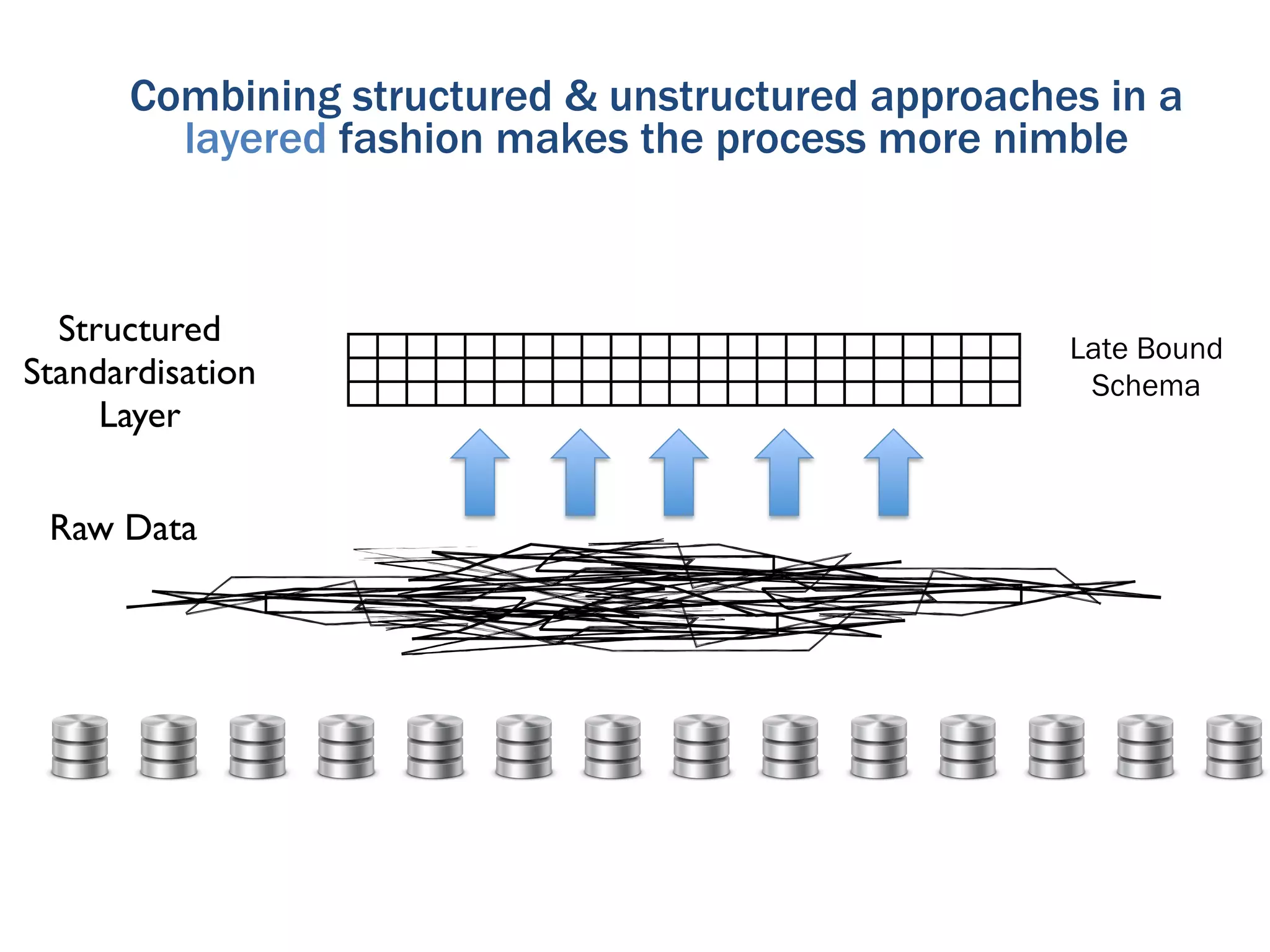

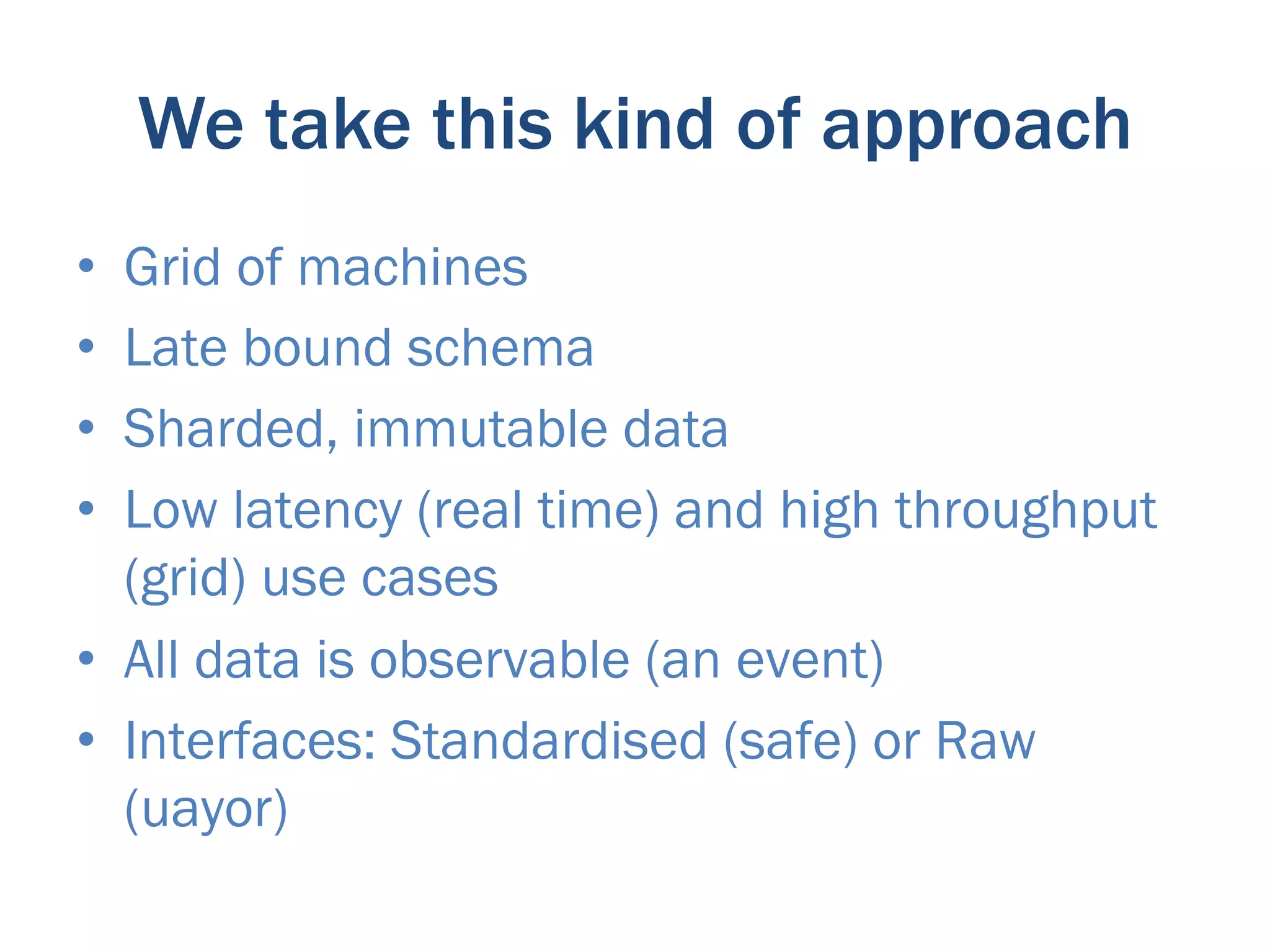

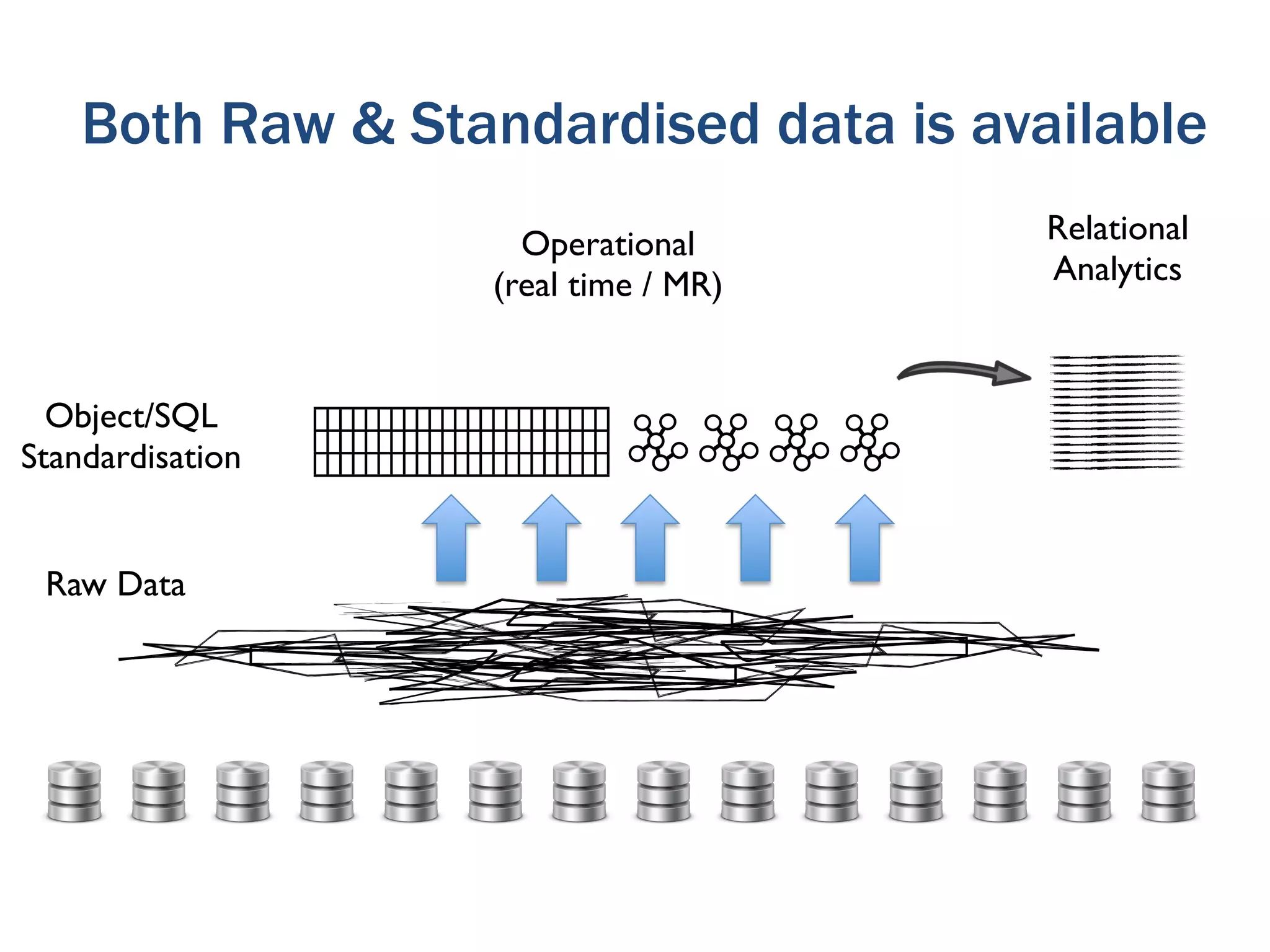

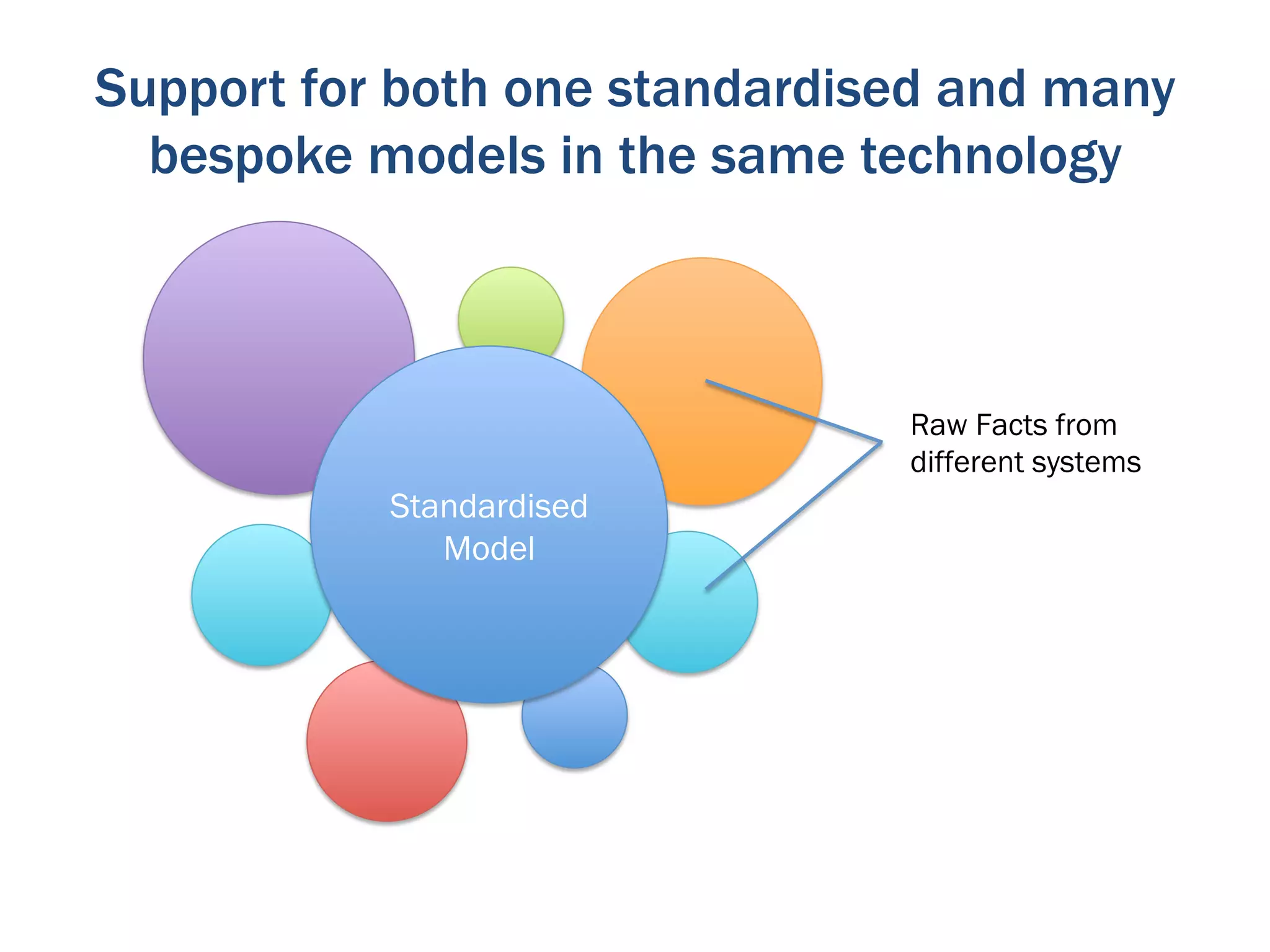

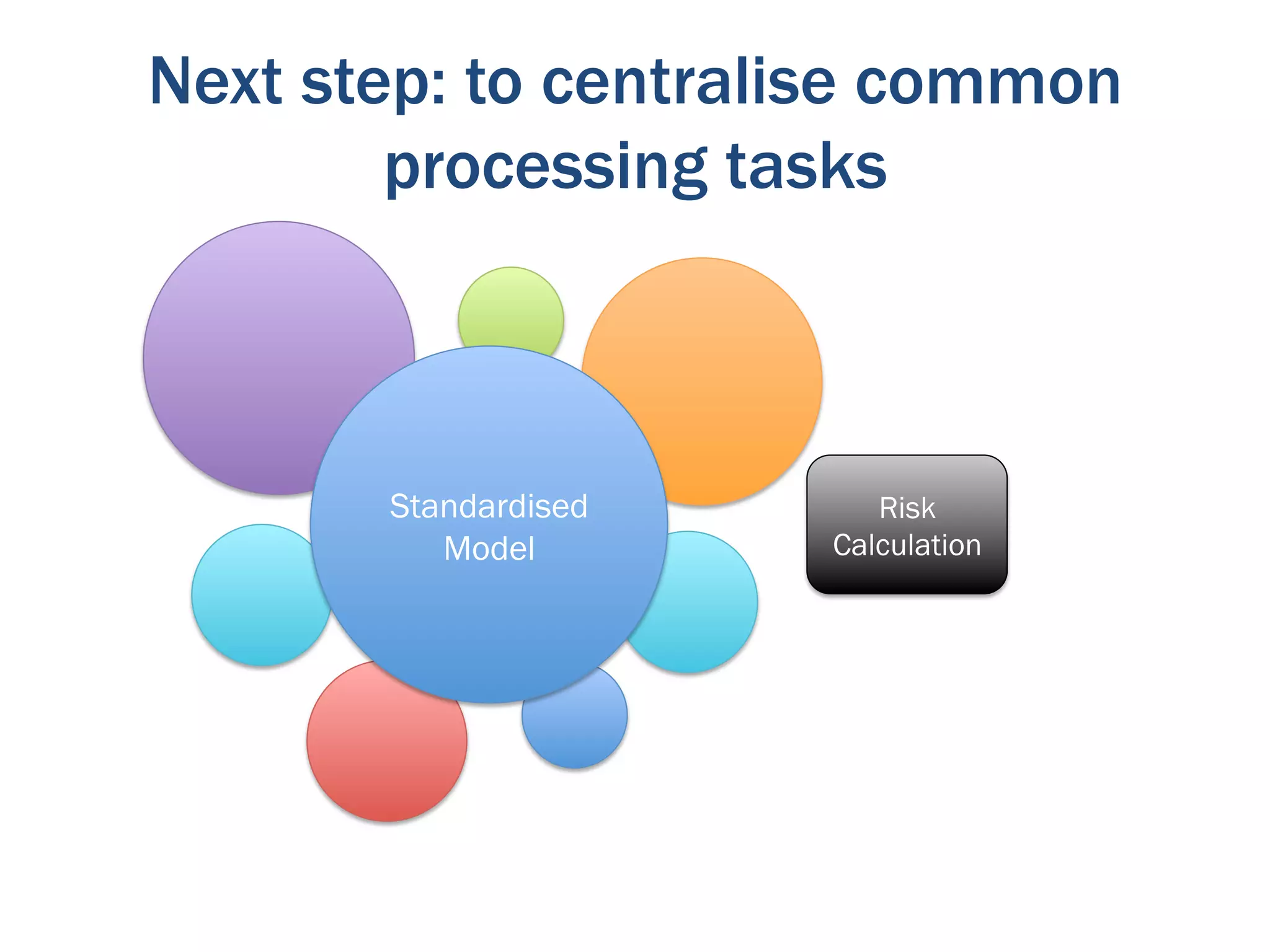

This document traces the evolution of databases from traditional relational systems to NoSQL and big data technologies. It notes that NoSQL databases emerged to address two problems: very large volumes of data and unreliable networks. Relational and NoSQL solutions each still have value. Most enterprise data is not highly structured and does not need the complexity of a relational database. A composite approach that layers relational and NoSQL technologies gives more flexibility for working with both structured and unstructured data.