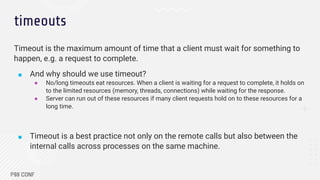

The document discusses strategies for building resilient distributed systems through techniques such as timeouts, retries, backoff, and jitter to handle node failures and optimize performance. It emphasizes the importance of setting appropriate timeouts to prevent resource hogging, implementing retries for transient errors, and employing backoff with jitter to reduce contention during retries. Additionally, it introduces adaptive retries using algorithms like the token bucket to manage request rates during high error scenarios.

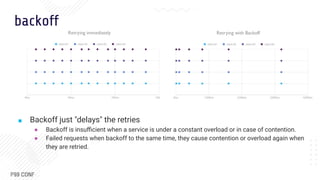

![jitter

Adds randomness to the backoff (wait time) when retrying a request to spread out the load

and reduce contention.

■ What jitter to use?

Add Jitter to backoff value (most common) Between zero and backoff value

Sleep

duration

■ (2^retries * delay) +/- random_number

■ (2^retries * delay) * randomization_interval

random_between(0, 2^retries *

delay)

Resource

Utilization

less resources because work is spread out due to randomization

Time to

complete

takes longer to complete, has longer sleep durations takes less time to complete, sleep duration

min value range is 0, i.e. [0, backoff]

When to use if backing-off retries help give downstream service time to

heal

if most failures are due to contention and

spreading out retries is just what we need.](https://image.slidesharecdn.com/omarelgabryp99conf2022slidetemplate-221012154807-72439eb2/85/Square-Engineering-s-Fail-Fast-Retry-Soon-Performance-Optimization-Technique-17-320.jpg)

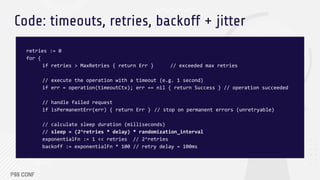

![Code: timeouts, retries, backoff + jitter

// … to be continued

// get a random sleep duration between interval [backoff*0.5, backoff*1.5] in ms

// for e.g. if backoff = 100ms, sleep is any number in the range [50ms, 150ms]

minInterval := backoff / 2 // backoff*0.5

maxInterval := backoff + (backoff / 2) // backoff*1.5

// rand.Intn() returns a rand number from 0 to N (exclusive) so we +1

sleep := time.Millisecond *

time.Duration(minInterval + rand.Intn(maxInterval - minInterval + 1))

time.Sleep(sleep) // wait until retry sleep duration has elapsed

retries++ // increment retries for the next retry attempt

}](https://image.slidesharecdn.com/omarelgabryp99conf2022slidetemplate-221012154807-72439eb2/85/Square-Engineering-s-Fail-Fast-Retry-Soon-Performance-Optimization-Technique-20-320.jpg)