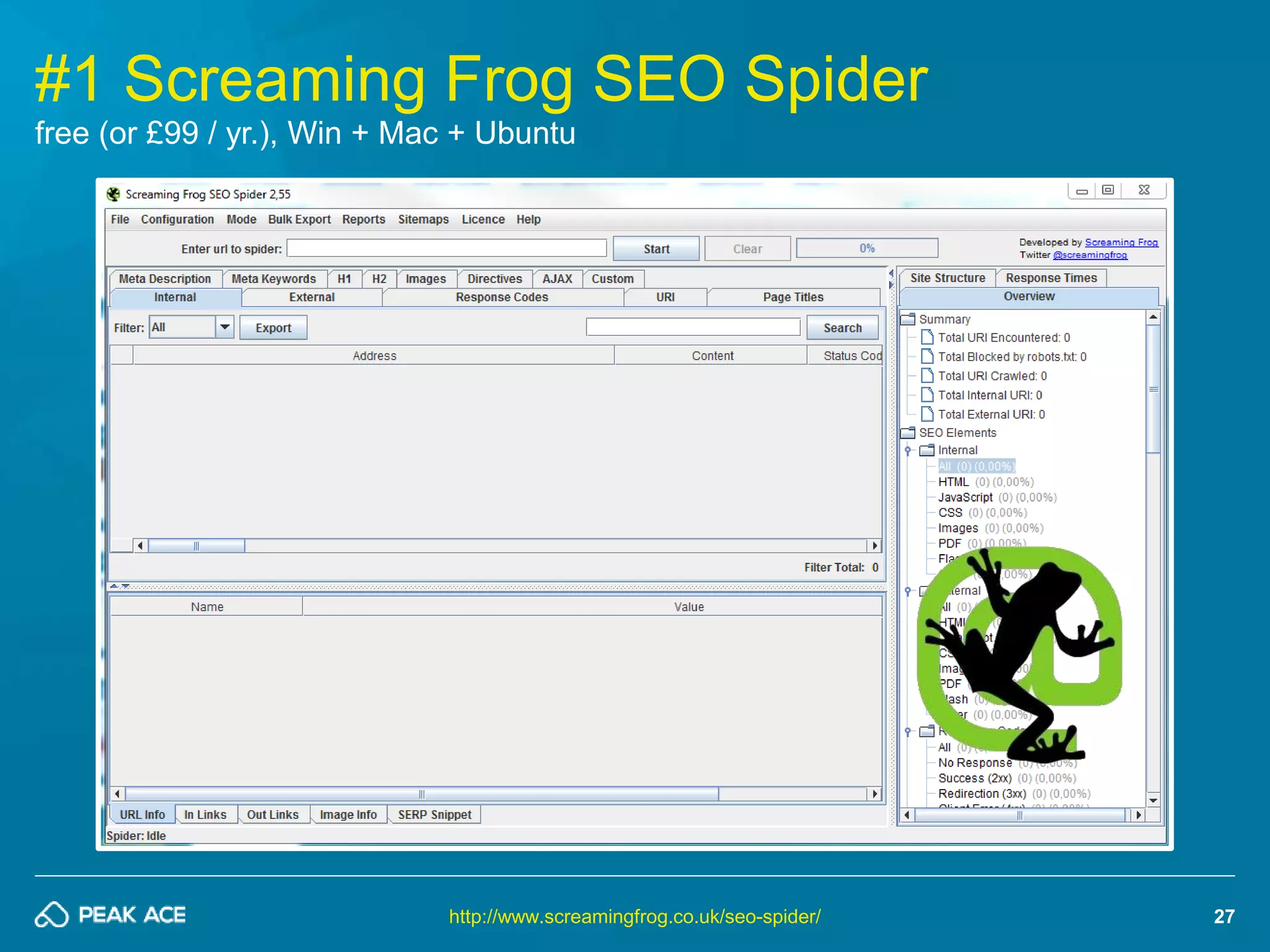

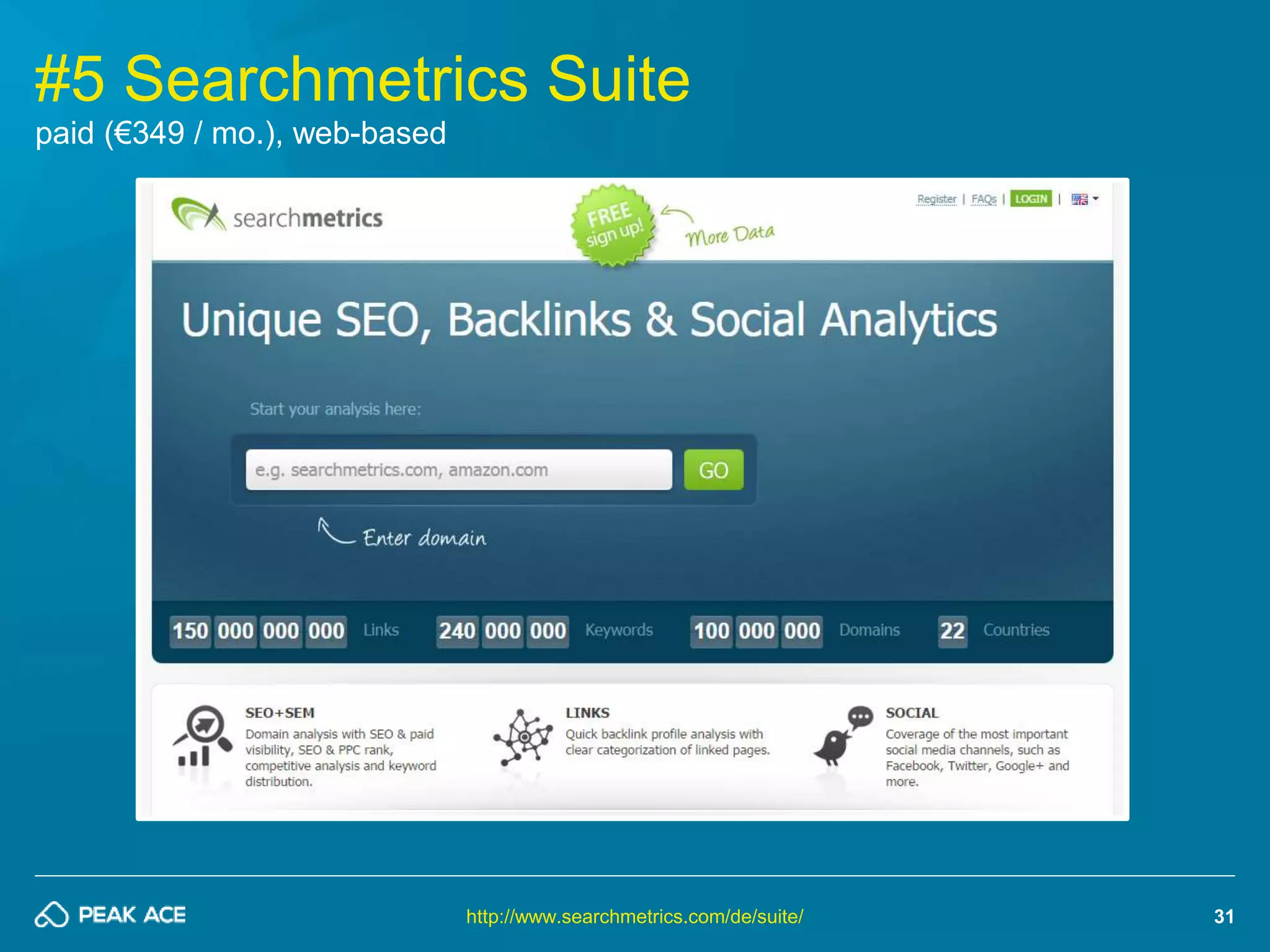

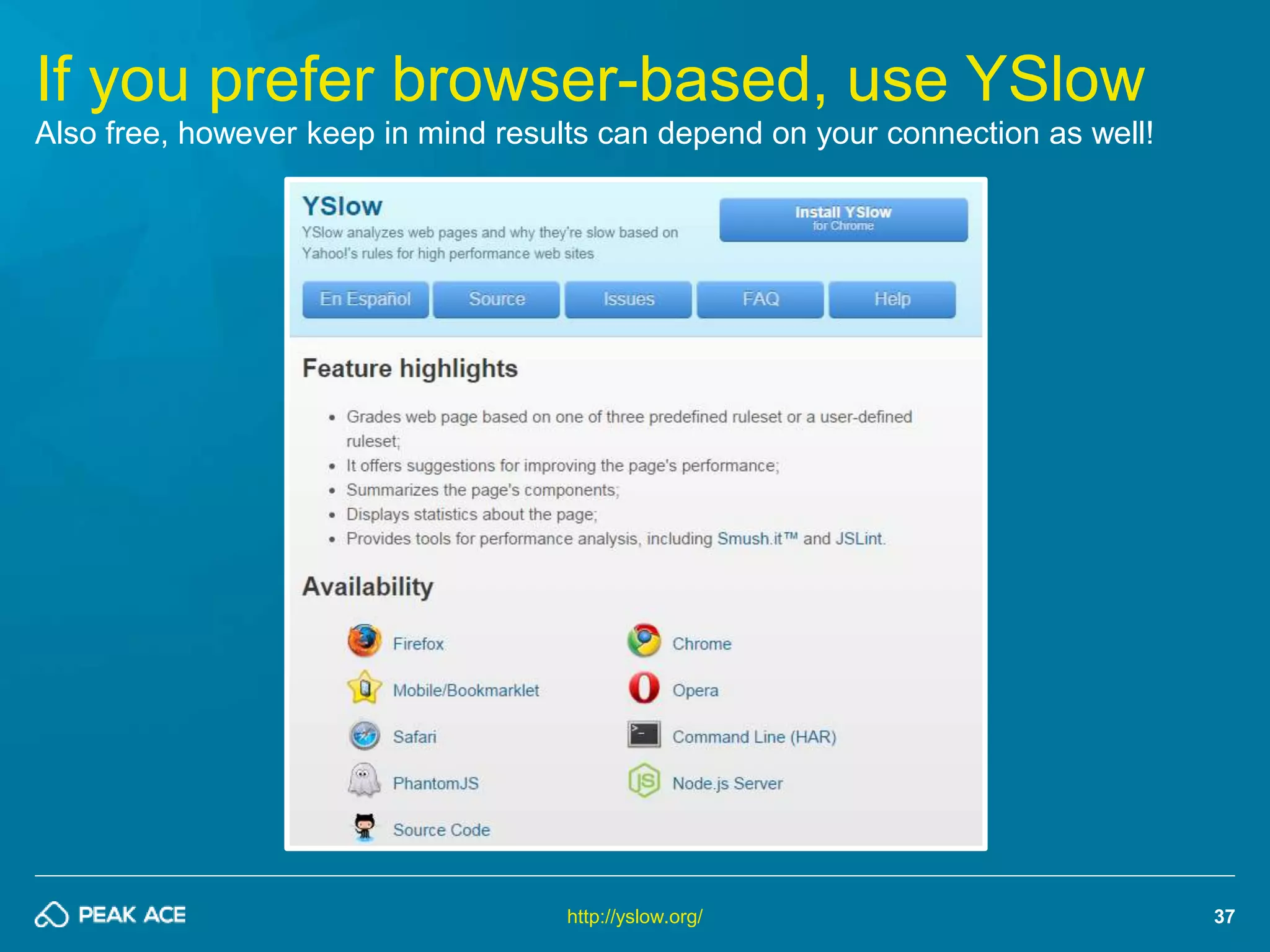

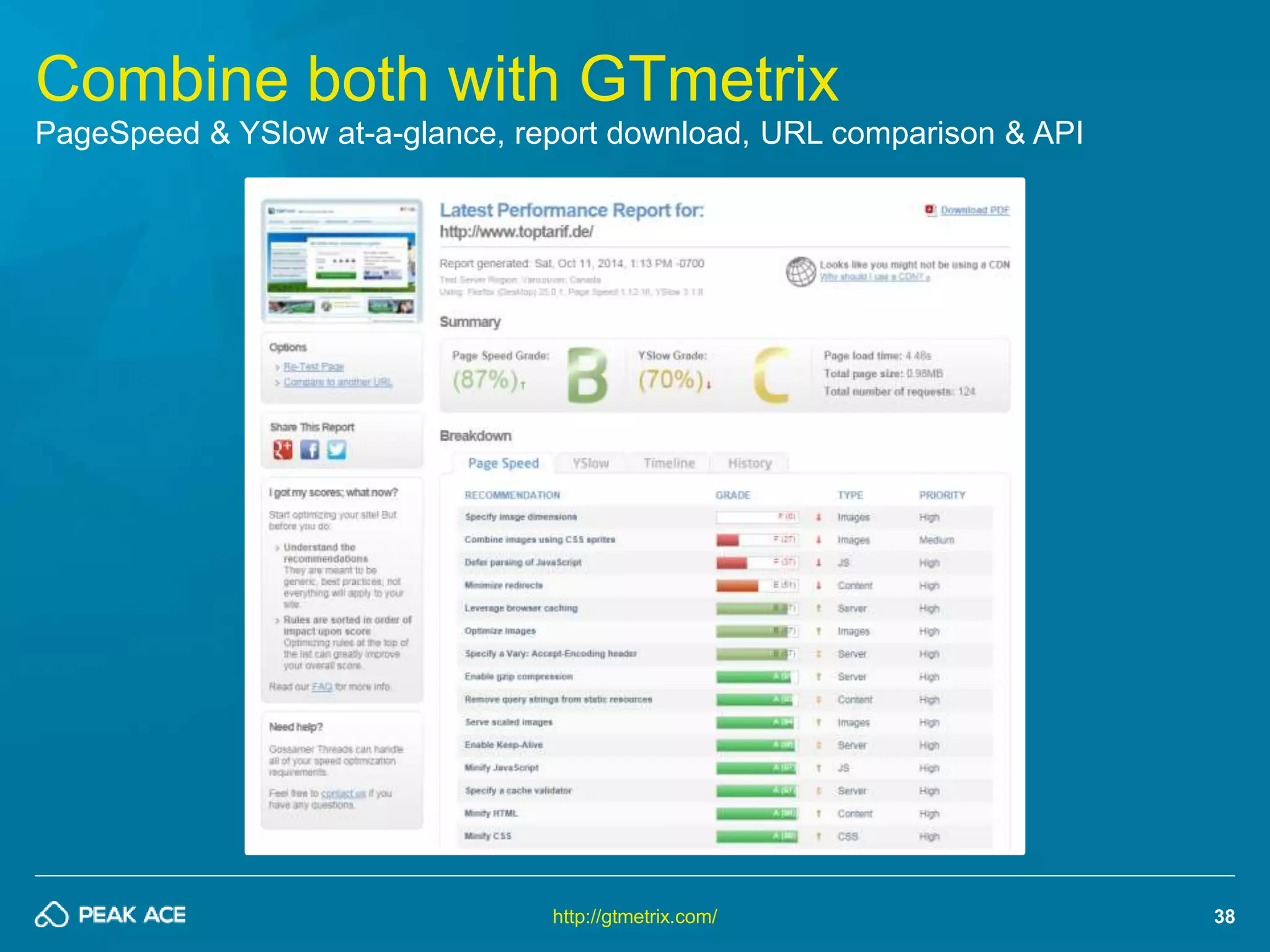

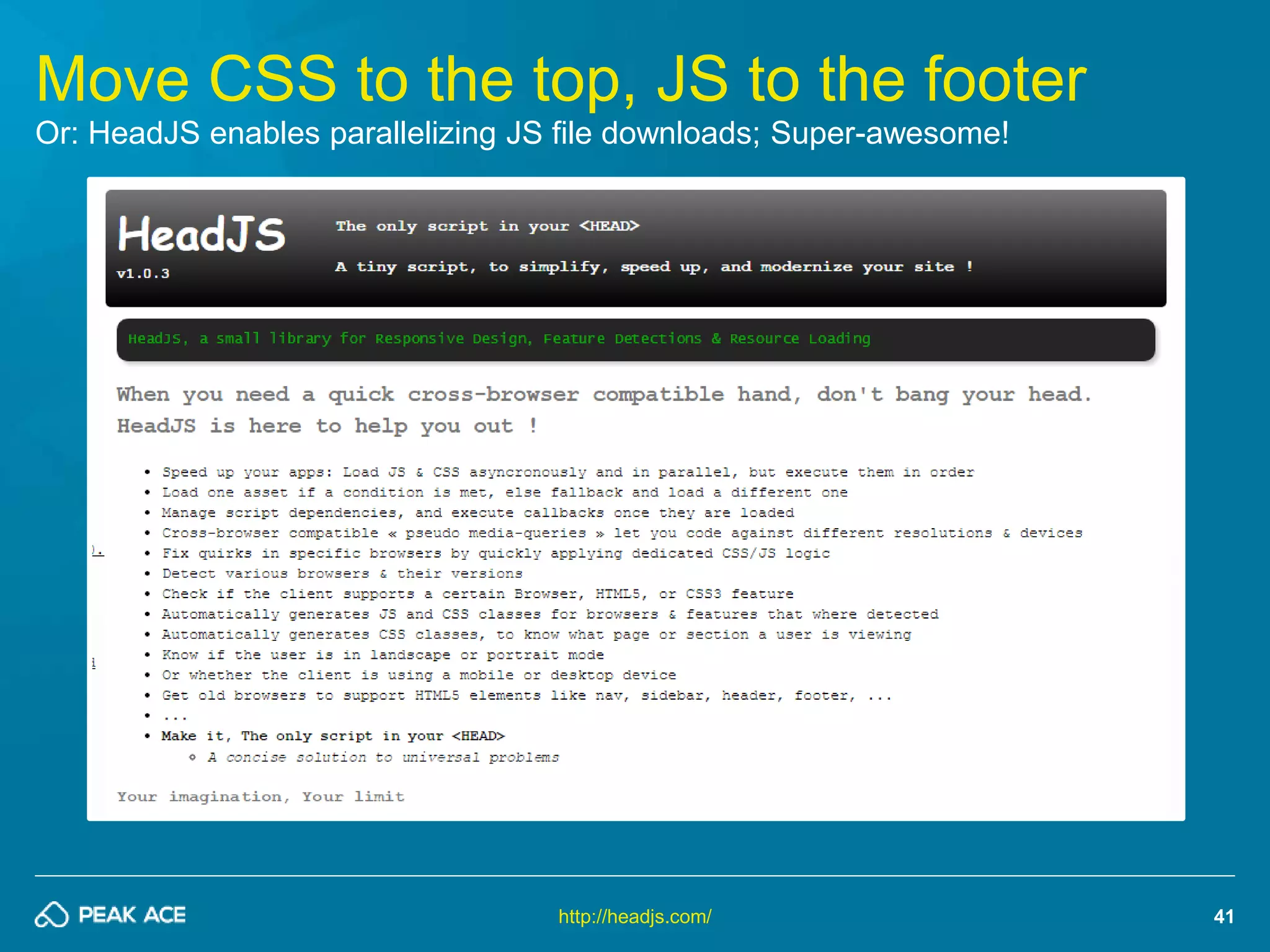

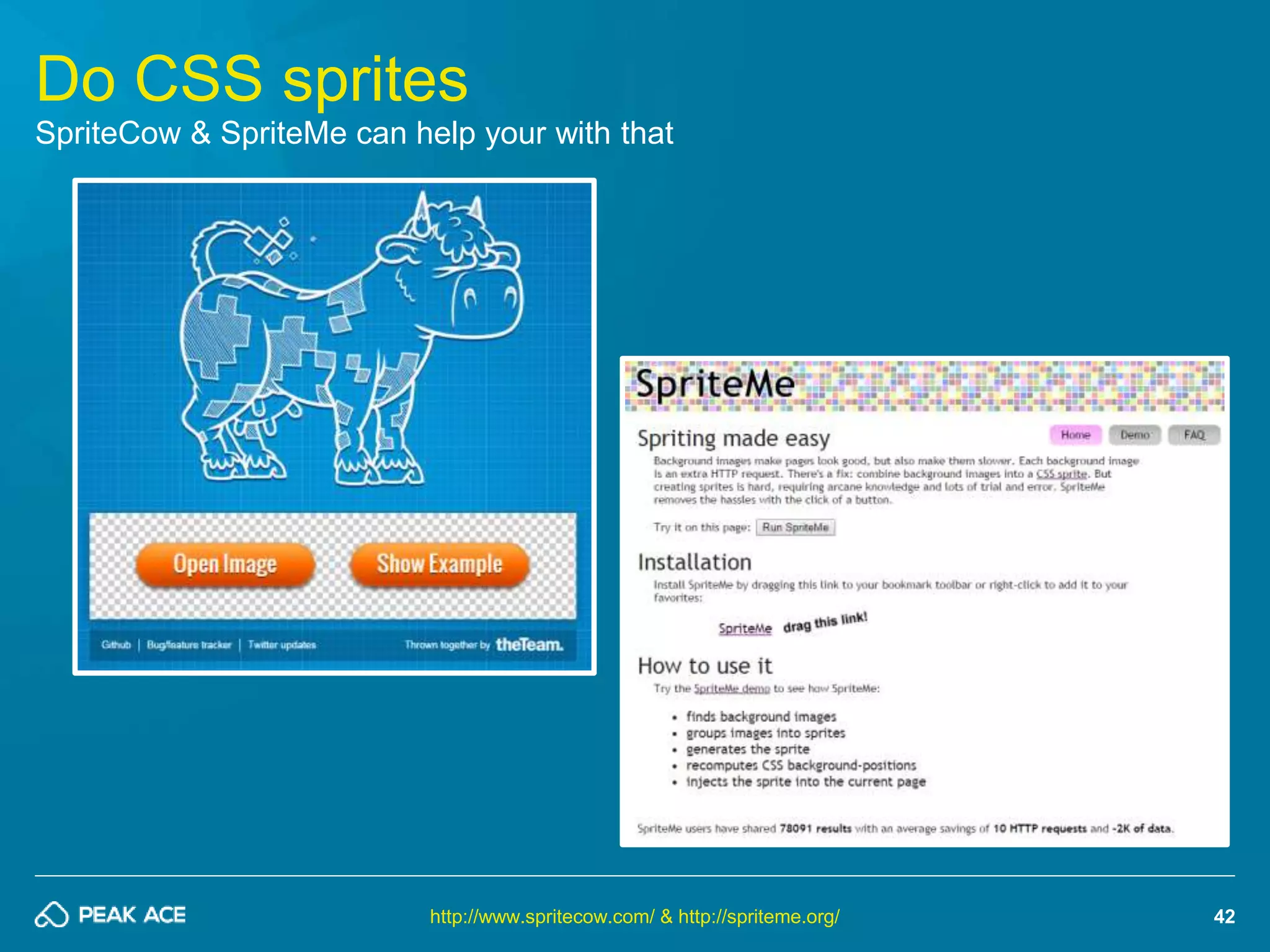

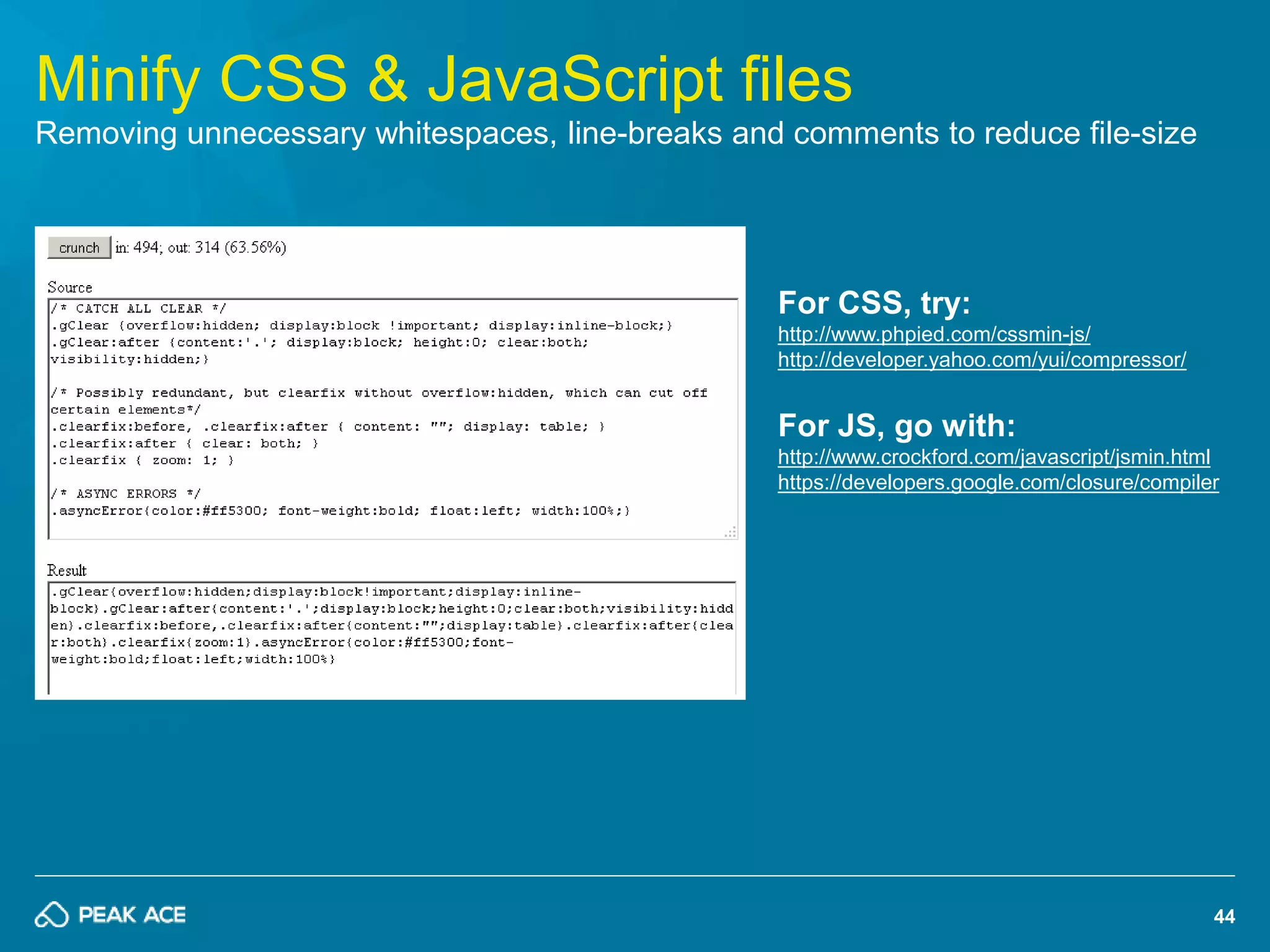

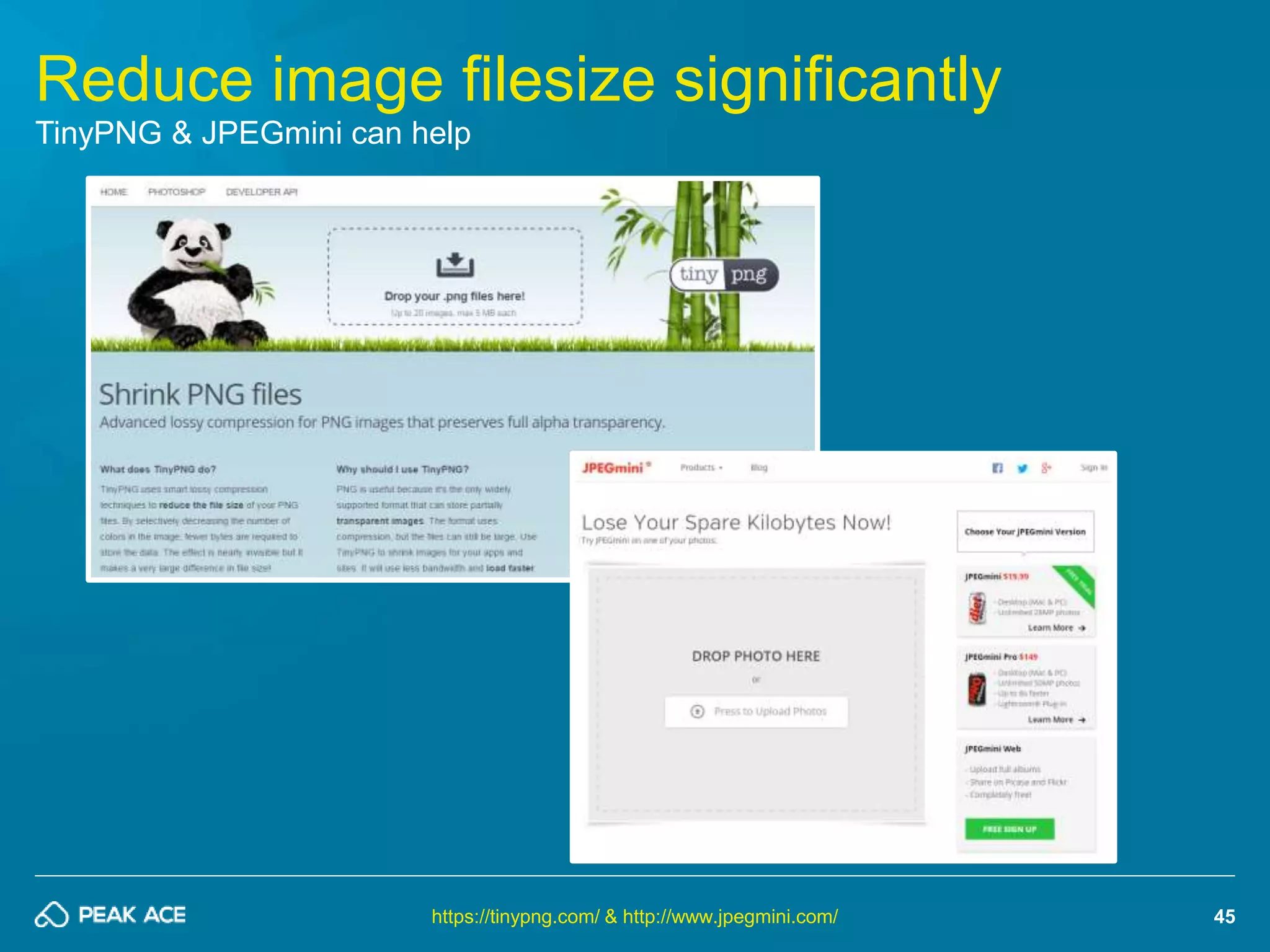

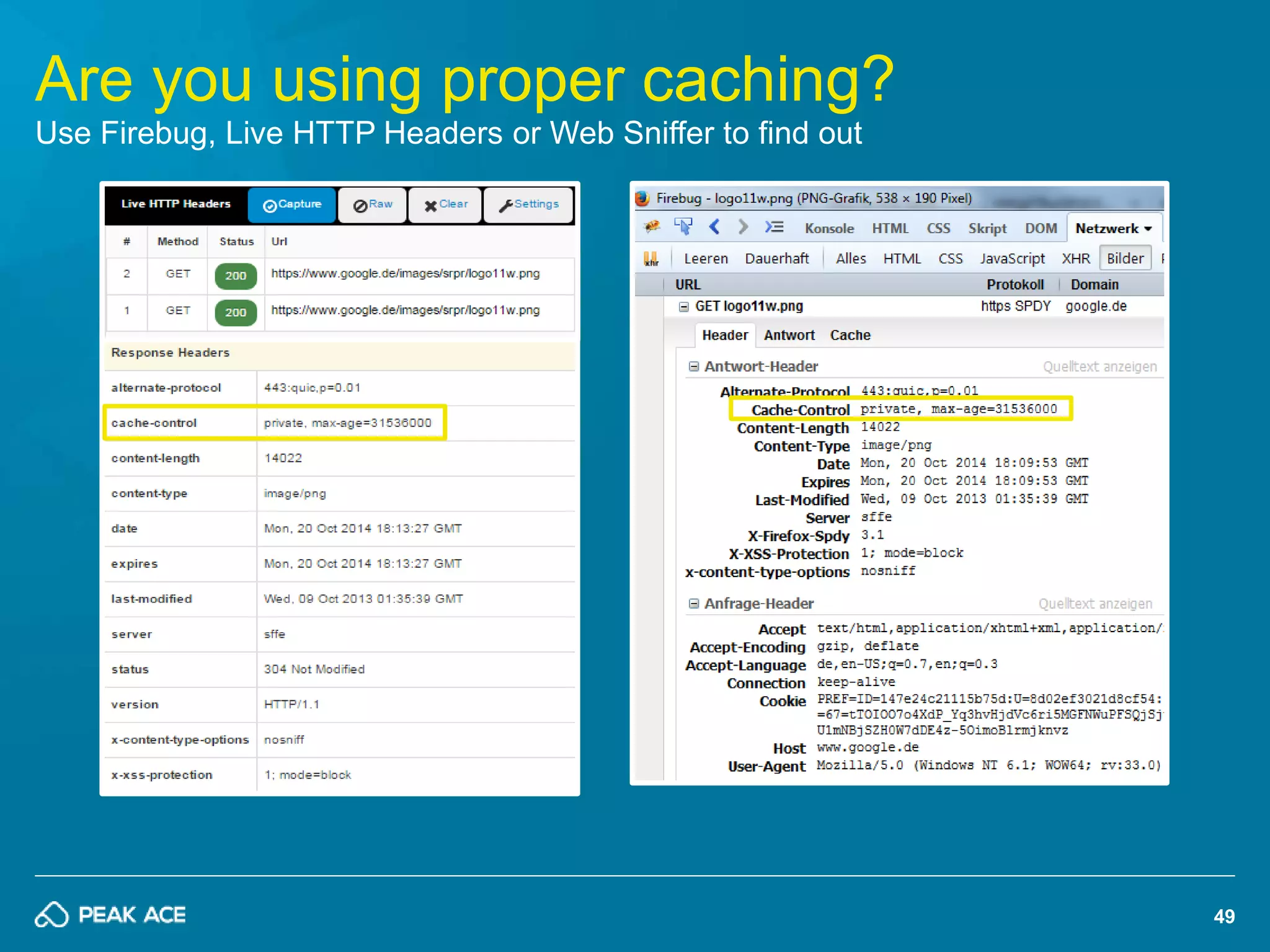

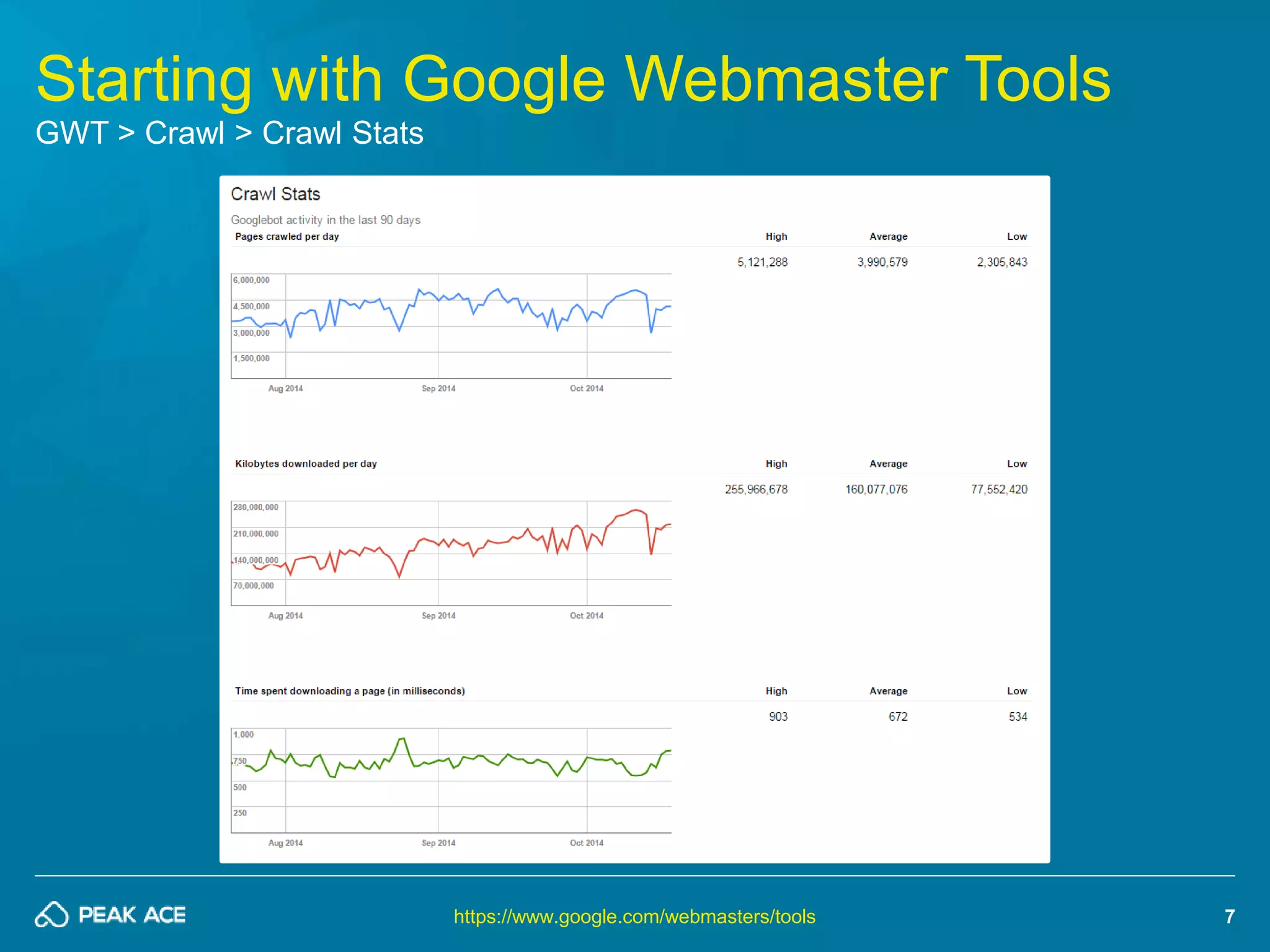

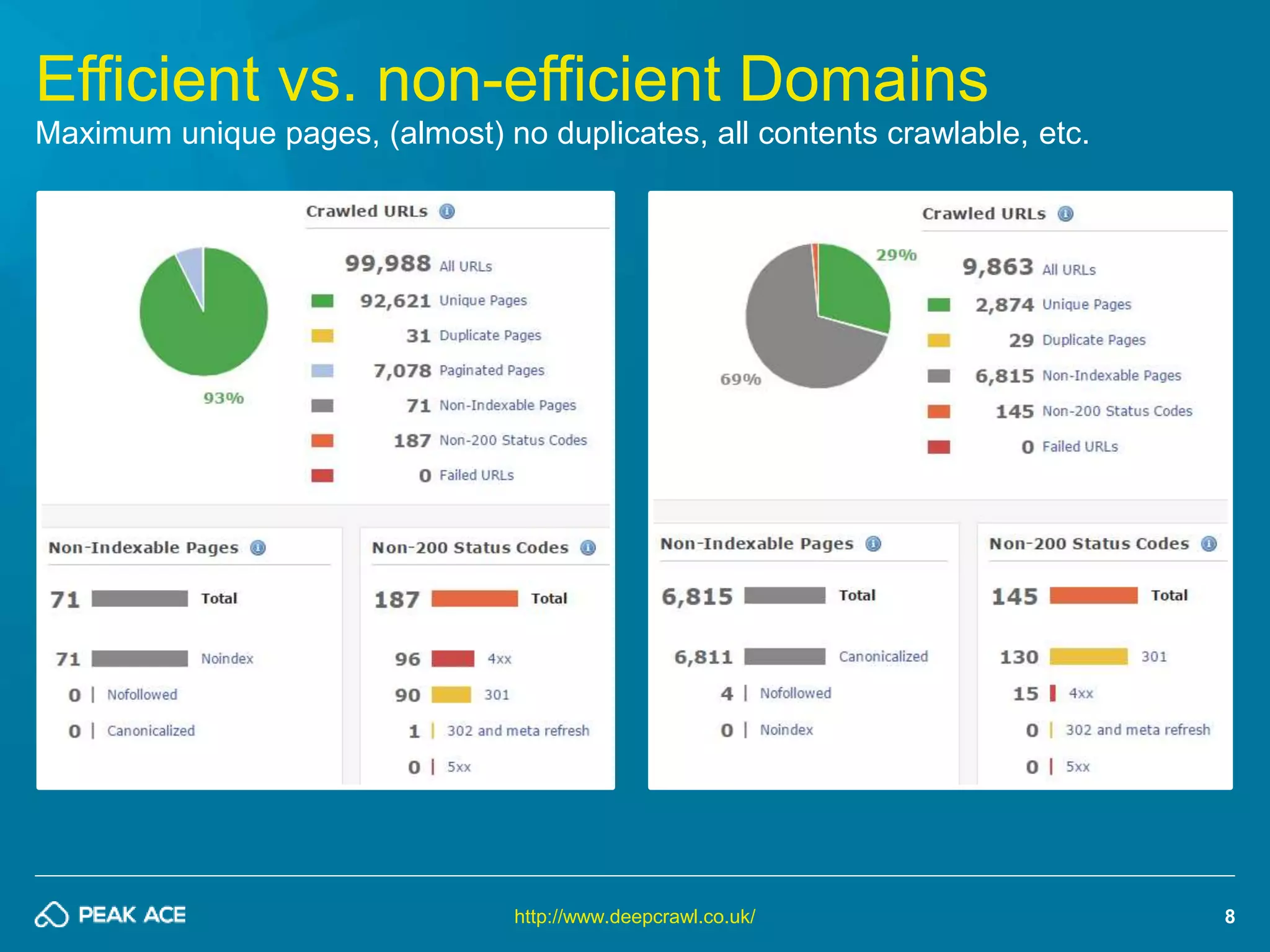

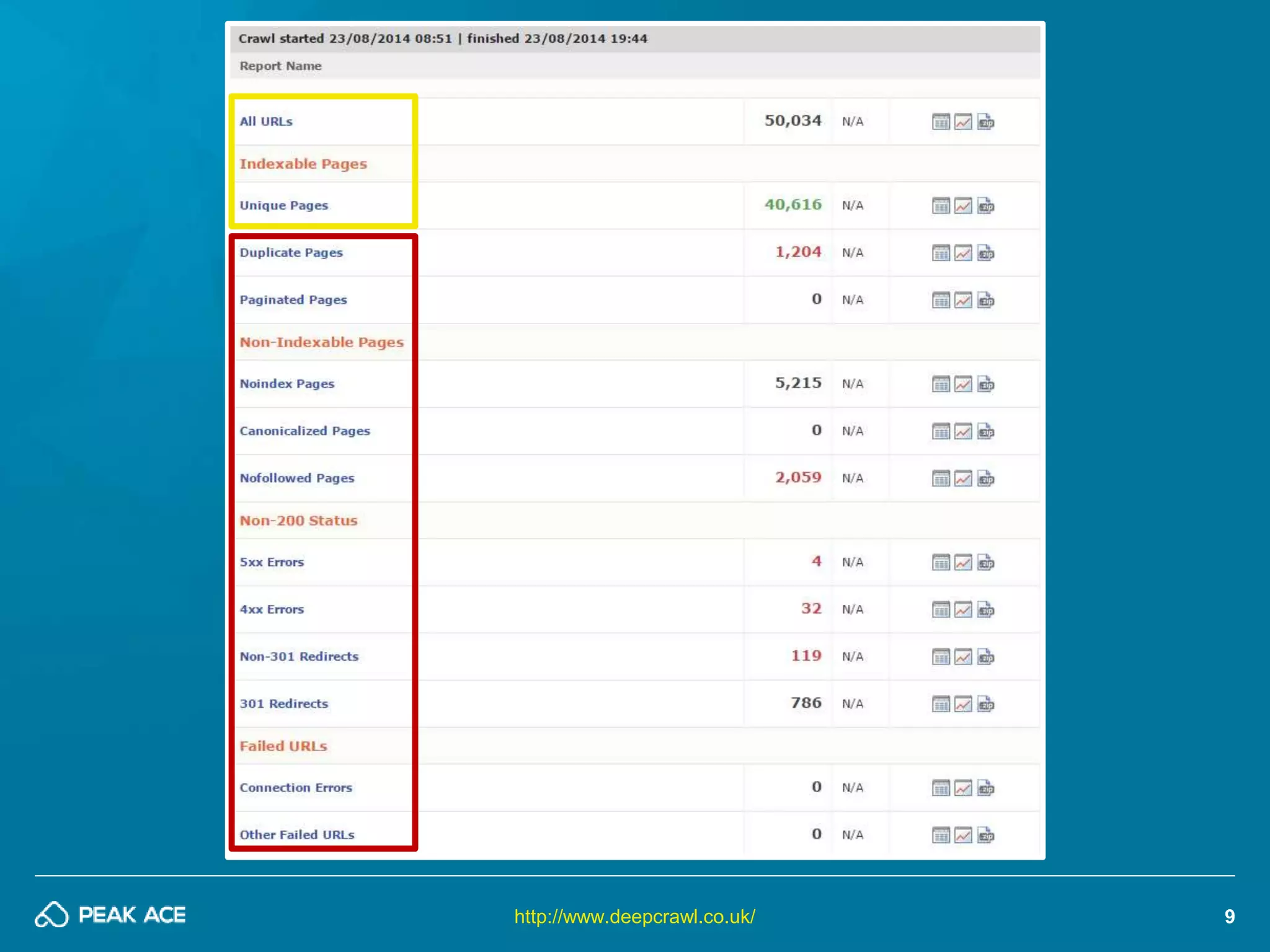

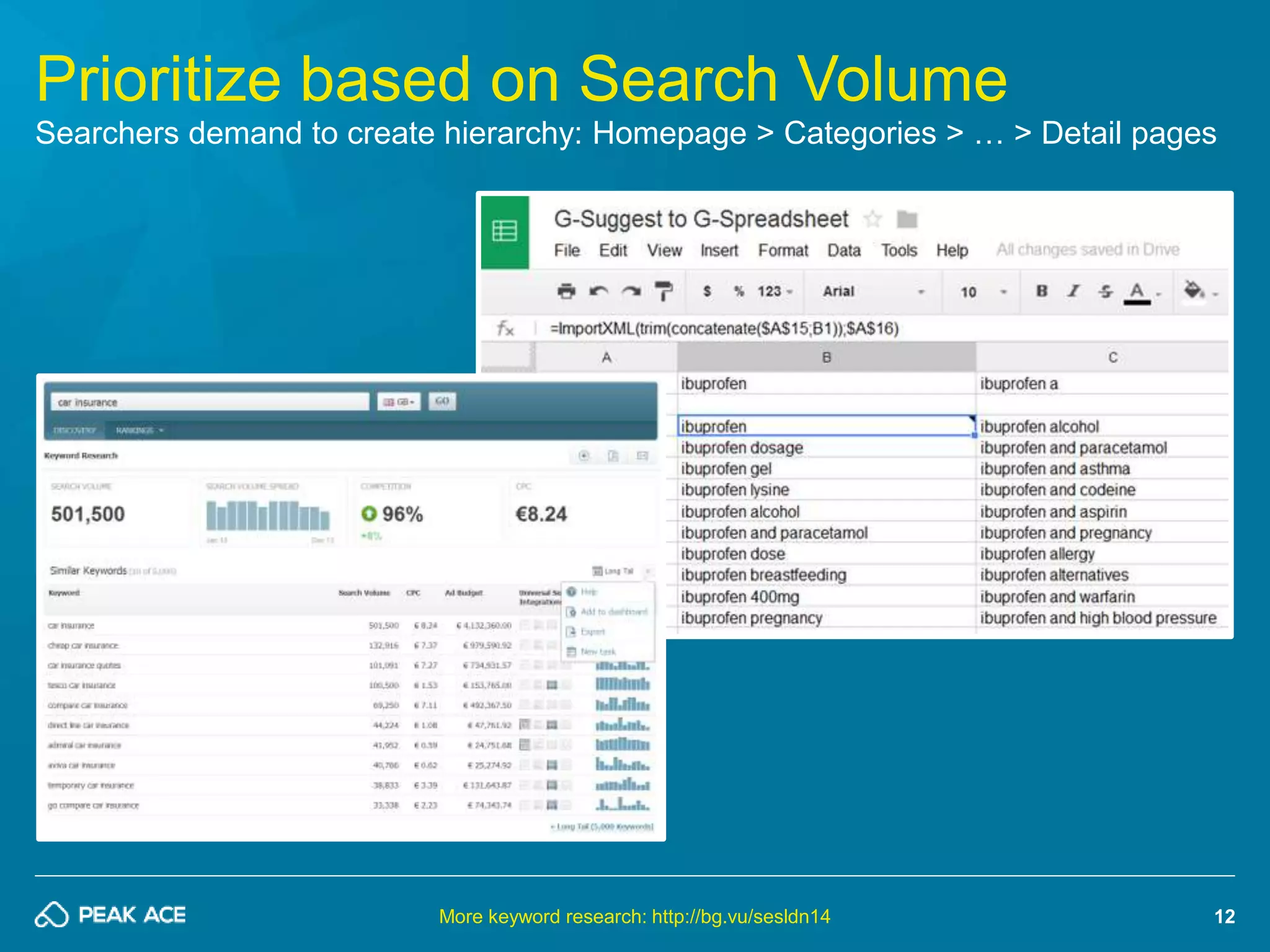

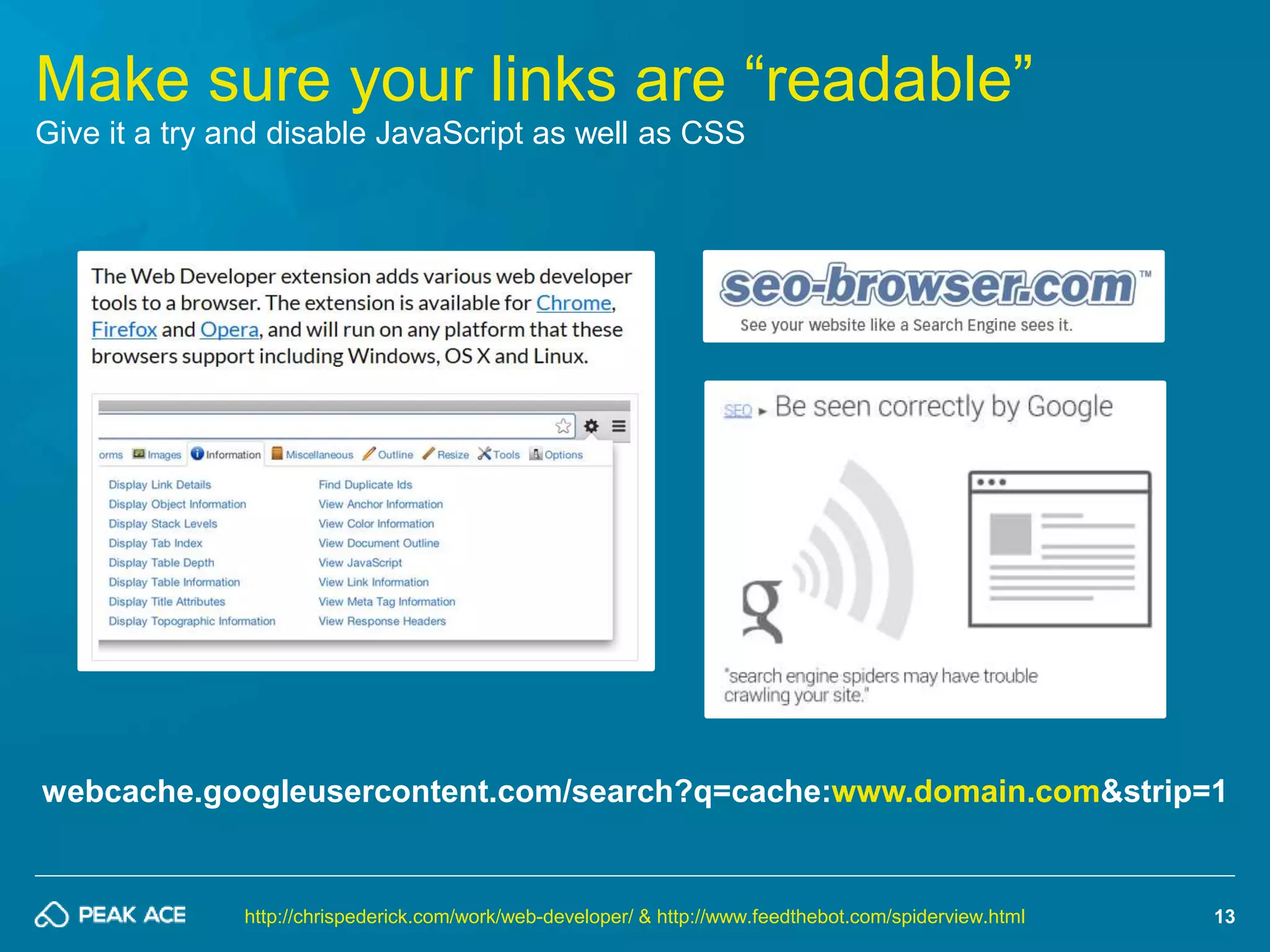

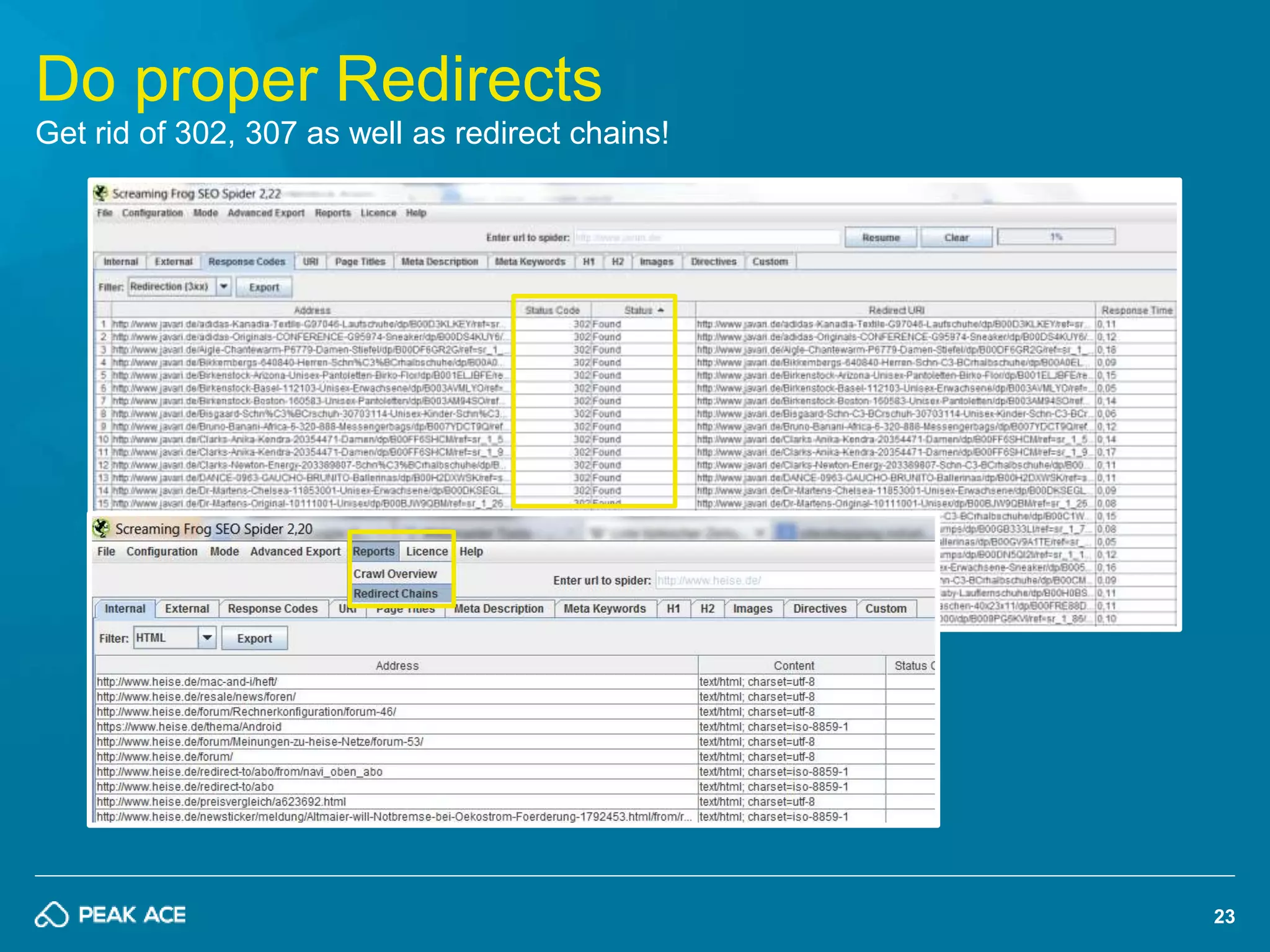

The document discusses strategies for optimizing technical SEO, focusing on making a site efficient for search engines to crawl and easy to access in order to improve search performance. Key points include structuring the site architecture, using internal links properly, managing redirects, and improving site speed with various tools and techniques. It also emphasizes ongoing performance auditing and user-experience optimization.

![25

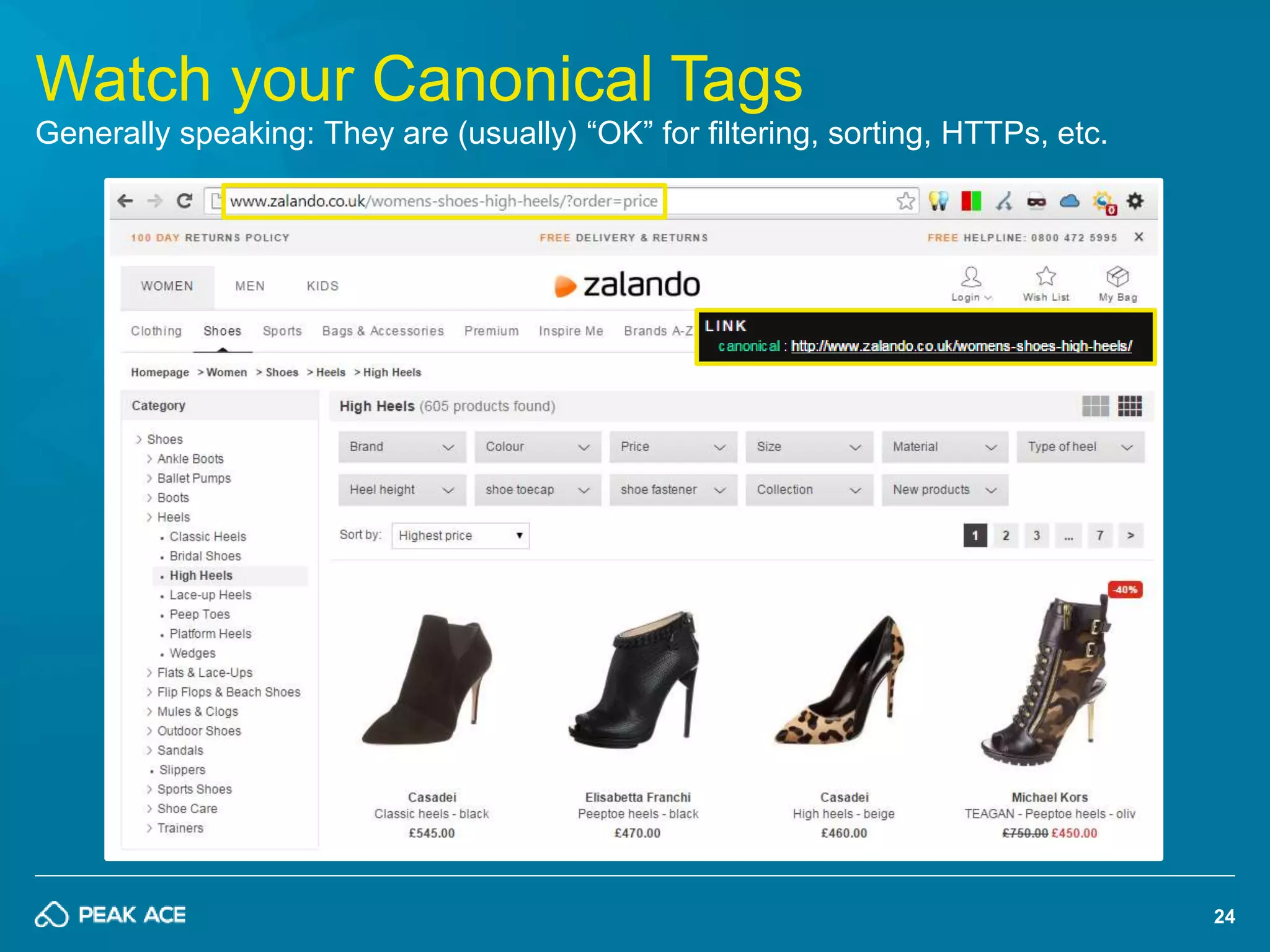

Google might ignore Canonicals…!

Don’t use Canonicals as excuse for poor architecture; do 301’s whenever possible!

Is rel="canonical" a hint or a directive?

It's a hint that we honor strongly. We'll

take your preference into account, in

conjunction with other signals, when

calculating the most relevant page […]

Full story: http://pa.ag/1FxYZEd](https://image.slidesharecdn.com/seozone-instanbul-2014grimmtechnical-seo-141024232614-conversion-gate01/75/Technical-SEO-Crawl-Space-Management-SEOZone-Istanbul-2014-25-2048.jpg)
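The slide's advice, fix duplicate URLs with 301 redirects and treat rel="canonical" only as a supporting hint, can be sketched as a small Python WSGI app. This is a minimal sketch under stated assumptions: the canonical host `www.example.com`, the list of tracking parameters, and the rule that strips a trailing `index.html` are hypothetical normalization choices, not rules from the talk. Any non-canonical variant gets a permanent redirect to the canonical URL, and the canonical page itself also sends a `Link` HTTP header with `rel="canonical"` as a secondary signal.

```python
from urllib.parse import parse_qsl, urlencode

# Hypothetical site settings; adjust to your own URL normalization rules.
CANONICAL_HOST = "www.example.com"
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}


def canonical_location(path, query):
    """Build the canonical URL for a request (assumed normalization rules)."""
    # Treat /dir/index.html as a duplicate of /dir/.
    if path.endswith("/index.html"):
        path = path[: -len("index.html")]
    # Drop tracking/session parameters that create duplicate URLs.
    kept = [(k, v) for k, v in parse_qsl(query, keep_blank_values=True)
            if k.lower() not in TRACKING_PARAMS]
    qs = urlencode(kept)
    return f"https://{CANONICAL_HOST}{path}" + (f"?{qs}" if qs else "")


def app(environ, start_response):
    """WSGI app: 301 duplicate variants instead of relying on canonicals."""
    scheme = environ.get("wsgi.url_scheme", "http")
    host = environ.get("HTTP_HOST", "").lower()
    path = environ.get("PATH_INFO", "/") or "/"
    query = environ.get("QUERY_STRING", "")

    target = canonical_location(path, query)
    requested = f"{scheme}://{host}{path}" + (f"?{query}" if query else "")

    if requested != target:
        # Preferred fix: a permanent redirect consolidates the duplicate URL.
        start_response("301 Moved Permanently", [("Location", target)])
        return [b""]

    # Canonical page: also send rel="canonical" as a (non-binding) hint.
    start_response("200 OK", [
        ("Content-Type", "text/html; charset=utf-8"),
        ("Link", f'<{target}>; rel="canonical"'),
    ])
    return [b"<html><body>Canonical page</body></html>"]
```

For example, a request for `http://example.com/shoes/index.html?utm_source=x&color=red` would be redirected to `https://www.example.com/shoes/?color=red`. The design choice mirrors the slide: the redirect does the real consolidation, while the canonical header is only a hint that search engines may weigh against other signals.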