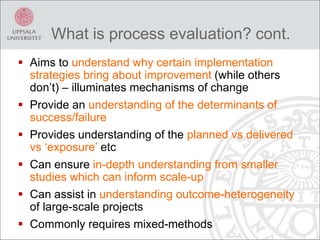

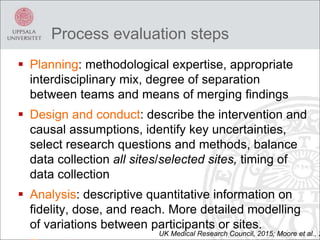

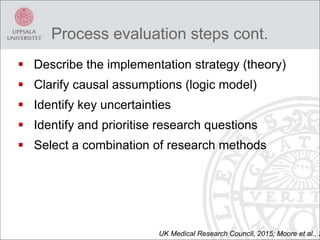

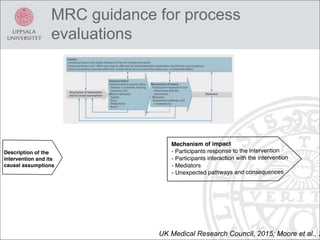

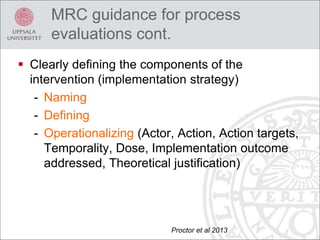

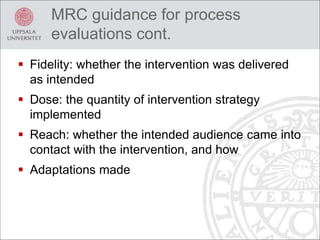

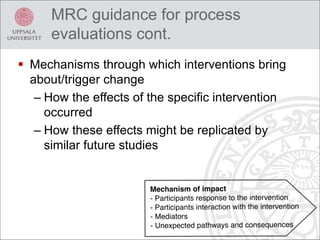

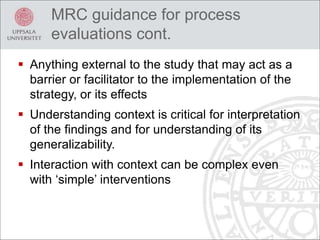

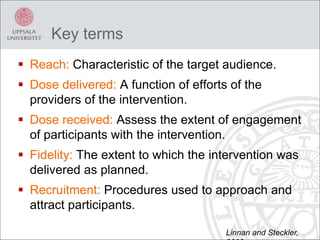

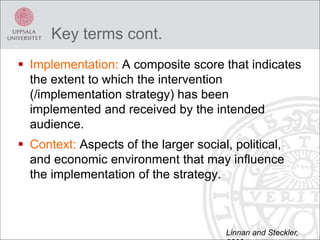

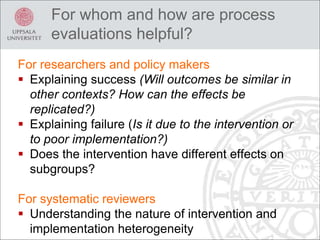

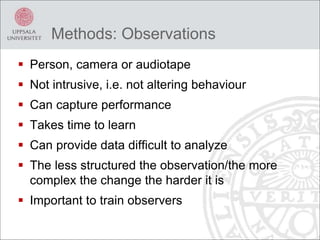

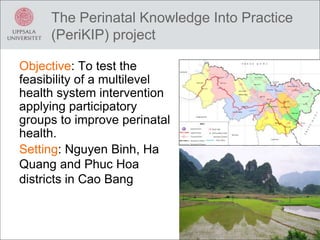

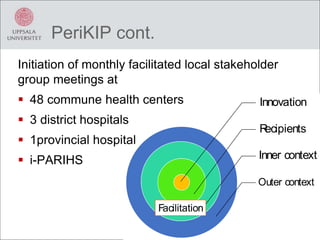

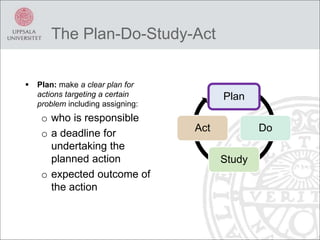

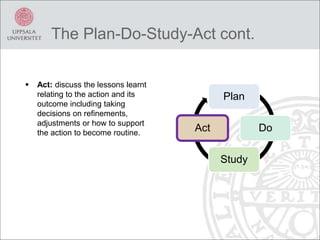

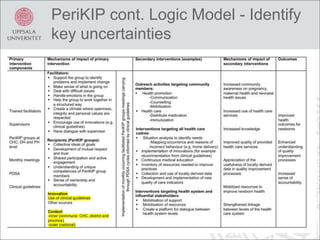

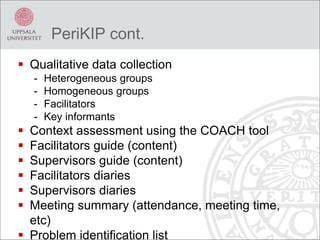

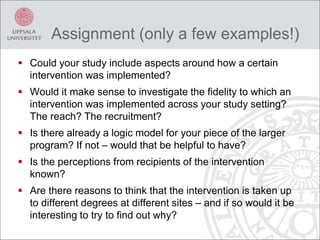

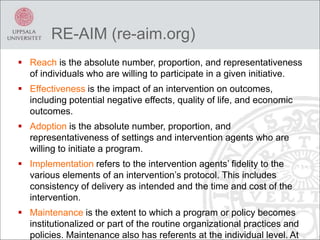

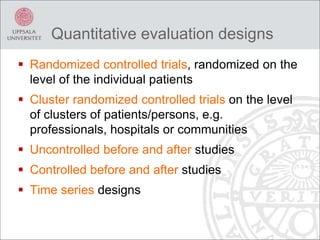

This document discusses process evaluation in implementation science projects. It defines process evaluation as the study of how and why an intervention works, carried out by examining how the intervention is implemented and the processes of change it sets in motion. Key aspects include fidelity, dose, reach, adaptations, and context. Process evaluation helps explain why an intervention succeeds or fails and why outcomes vary across settings. The document reviews the UK Medical Research Council guidance on process evaluation, including its recommendation to clarify the intervention's theory of change. It then describes the PeriKIP project, which aims to improve perinatal health in Vietnam through participatory stakeholder groups, and outlines the plans for its process evaluation.