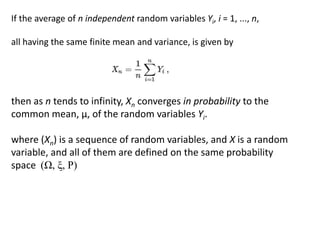

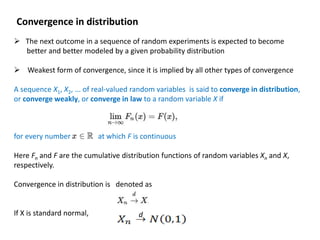

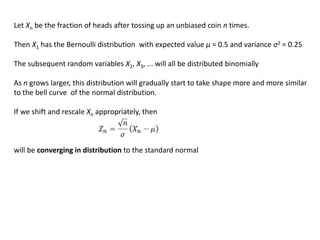

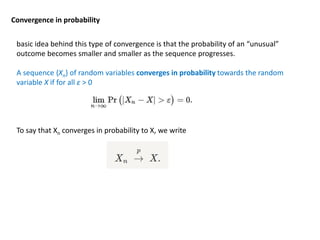

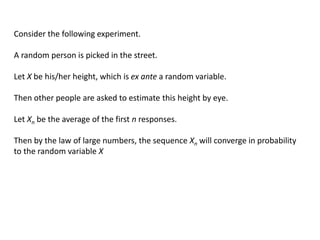

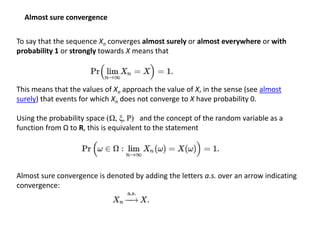

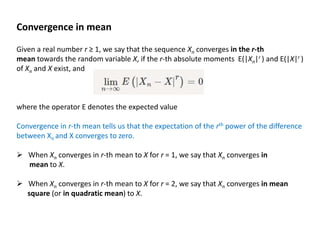

The document discusses convergence of random variables and covers four standard modes: convergence in distribution, in probability, in mean (r-th mean), and almost surely. It explains how a sequence of random variables can settle toward a limiting random variable or constant. It also shows how the modes relate to one another. Almost sure convergence and convergence in mean each imply convergence in probability, which in turn implies convergence in distribution. The reverse implications do not hold in general. Examples illustrate how the random variables behave as the sequence index grows, and the document emphasizes why these notions matter in probability theory and statistics.
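The document's own examples are in the images above. As a rough companion, here is a minimal Monte Carlo sketch of convergence in probability. It takes the sample mean of n independent Uniform(0, 1) draws, whose true mean is 0.5, and estimates P(|mean − 0.5| > ε) for increasing n. By the weak law of large numbers, this probability should shrink toward zero. The distribution, the tolerance ε = 0.05, the trial counts, and the function names `exceedance_probability` and `simulate` are illustrative assumptions rather than anything taken from the document. Note that the sketch only shows convergence in probability. Almost sure convergence concerns the behaviour of individual sample paths and cannot be read off these frequencies.

```python
import random


def exceedance_probability(n: int, eps: float, trials: int, rng: random.Random) -> float:
    """Estimate P(|mean of n Uniform(0,1) draws - 0.5| > eps) by simulation.

    Hypothetical helper for illustration only.
    """
    mu = 0.5  # true mean of Uniform(0, 1)
    hits = 0
    for _ in range(trials):
        # Sample mean of n independent Uniform(0, 1) draws
        sample_mean = sum(rng.random() for _ in range(n)) / n
        if abs(sample_mean - mu) > eps:
            hits += 1
    return hits / trials


def simulate(ns=(10, 100, 1000), eps=0.05, trials=500, seed=0):
    """Return {n: estimated exceedance probability} for each sample size n."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    return {n: exceedance_probability(n, eps, trials, rng) for n in ns}


if __name__ == "__main__":
    for n, p in simulate().items():
        print(f"n={n:5d}  P(|mean - 0.5| > 0.05) ~ {p:.3f}")
```

The estimates should fall as n grows. For n = 10 the probability is roughly one half. For n = 1000 it is essentially zero. This decreasing pattern is what convergence in probability of the sample mean to 0.5 means.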