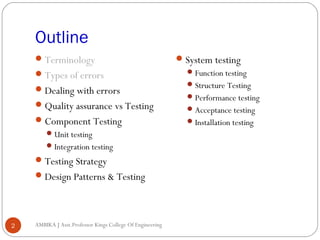

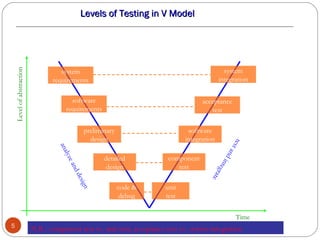

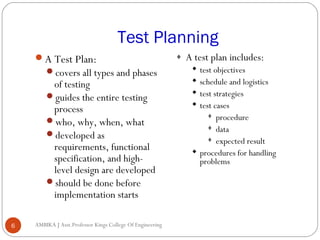

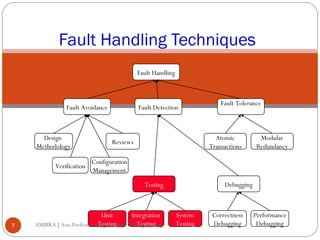

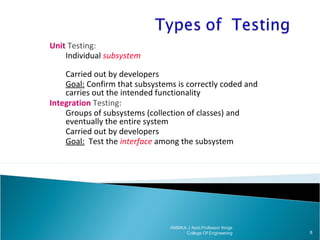

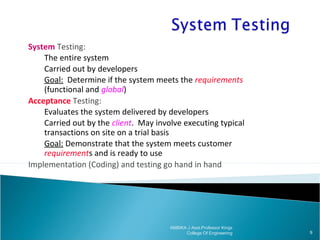

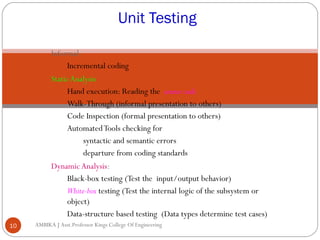

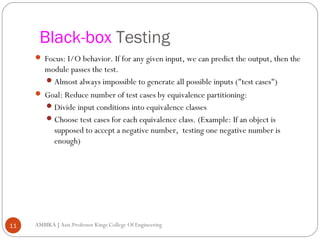

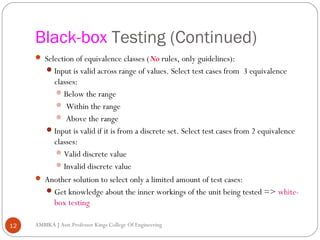

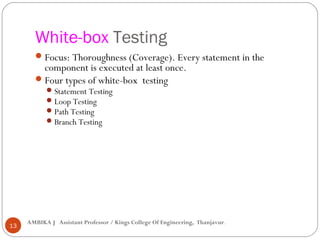

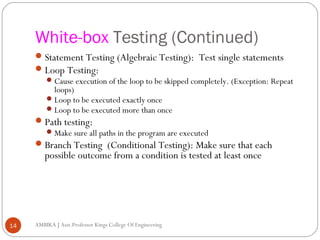

The document outlines various aspects of testing in software engineering, including definitions, types of testing (unit, integration, system, acceptance), and strategies involved in creating test plans. It emphasizes the practical and theoretical limitations of testing, noting that while testing can reveal bugs, it cannot definitively prove their absence. Additionally, it discusses methodologies such as black-box and white-box testing, focusing on the importance of structured testing processes and the planning required to ensure comprehensive quality assurance.
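The point that testing can reveal bugs but cannot prove their absence can be illustrated with a small sketch. The function `is_leap_year` below is a hypothetical example, not taken from the slides, and it is deliberately incomplete: it ignores the century rule. Every assertion in the sample suite passes, yet the defect is still there for an input the suite never tries.

```python
def is_leap_year(year):
    """Hypothetical function under test (deliberately buggy).

    It ignores the rule that century years must also be divisible by 400.
    """
    return year % 4 == 0


# A small test suite that passes in full.
assert is_leap_year(2024)
assert not is_leap_year(2023)
assert is_leap_year(2000)

# The suite above never checks 1900. The correct answer is "not a leap
# year", but this implementation reports it as one, so the bug goes unseen.
undetected_bug = is_leap_year(1900)
```

A passing suite only says that the chosen inputs behaved as expected; it says nothing about the inputs that were left out.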

![White-Box Testing: Loop Testing (Pressman). Loop classes: simple loops, nested loops, concatenated loops, unstructured loops. Why is loop testing important? Slide 15, AMBIKA J, Asst. Professor, Kings College Of Engineering](https://image.slidesharecdn.com/testingppt-140219003505-phpapp02/85/Software-testing-an-introduction-15-320.jpg)
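For the simple-loop class named on the slide, Pressman's usual guidance is to exercise a loop with at most n passes at these iteration counts: zero passes (skip the loop), one pass, two passes, some m passes with m < n, and then n - 1, n, and n + 1 passes. The following is a minimal sketch of that idea. The helper `simple_loop_pass_counts` and the loop under test `sum_first` are hypothetical names introduced for illustration, and the default choice m = n // 2 is an assumption.

```python
def simple_loop_pass_counts(n, m=None):
    """Return the pass counts to try for a simple loop with at most n passes.

    Follows the simple-loop guidance: 0, 1, 2, m (m < n), n - 1, n, n + 1.
    The default m = n // 2 is an assumption made for this sketch.
    """
    if n < 2:
        raise ValueError("n must be at least 2 for this sketch")
    if m is None:
        m = n // 2
    counts = [0, 1, 2, m, n - 1, n, n + 1]
    # Remove duplicates while keeping the original order.
    unique = []
    for c in counts:
        if c not in unique:
            unique.append(c)
    return unique


def sum_first(values, limit):
    """Hypothetical loop under test: sums at most `limit` leading items."""
    total = 0
    for i, v in enumerate(values):
        if i >= limit:
            break
        total += v
    return total


# Drive the loop with each pass count; with all-ones input the expected
# sum equals the number of passes actually made, capped at the limit.
LIMIT = 10
results = {k: sum_first([1] * k, LIMIT) for k in simple_loop_pass_counts(LIMIT)}
for k, total in results.items():
    assert total == min(k, LIMIT)
```

The n + 1 case matters most here: it checks that the loop stops at its bound rather than running one pass too many. Nested, concatenated, and unstructured loops need other strategies that this sketch does not cover.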