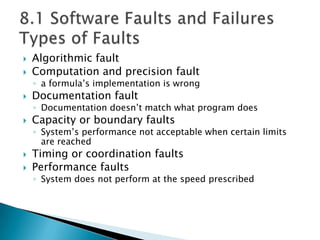

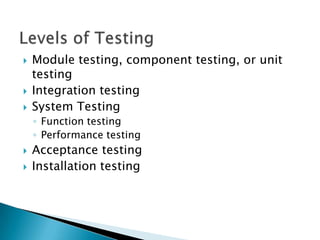

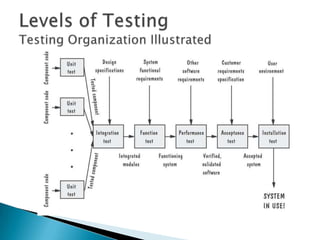

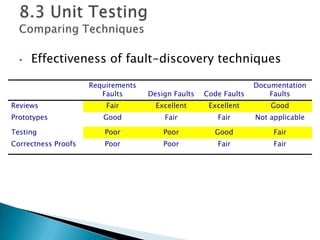

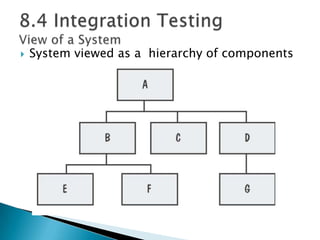

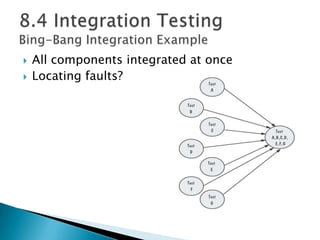

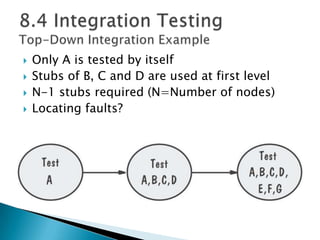

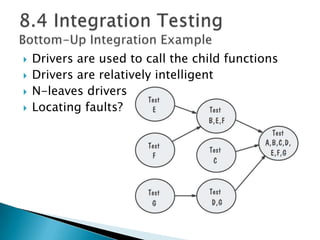

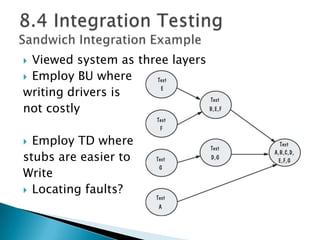

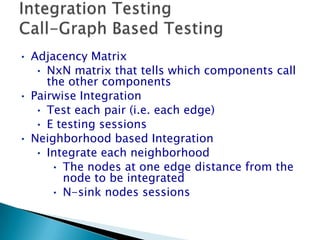

This document covers software testing, with a focus on unit testing and integration testing. It describes the kinds of faults that can occur, such as algorithmic faults and documentation faults, and the main integration testing strategies: big bang, bottom-up, and top-down. The purpose of testing is to discover faults in the system. Testing should continue until a predefined stopping criterion is met, such as a sufficiently low defect rate, or until time or money runs out. Thorough testing helps prevent failures like the loss of the Ariane 5 rocket.
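To make the difference between bottom-up and top-down integration concrete, here is a minimal Python sketch. It is not taken from the slides: the two-layer system, the function names (`compute_tax`, `report`, `stub_tax`), and the 20% rate are all hypothetical, chosen only for illustration. Bottom-up testing exercises the lower layer first through a test driver, while top-down testing exercises the upper layer first and replaces the lower layer with a stub.

```python
# Hypothetical two-layer system (illustrative names and values only).

def compute_tax(amount: float) -> float:
    """Lower-layer component: flat 20% tax (assumed rate for the example)."""
    return round(amount * 0.20, 2)


def report(amount: float, tax_fn=compute_tax) -> str:
    """Upper-layer component: formats the net amount using the lower layer."""
    return f"net={amount - tax_fn(amount):.2f}"


def driver_compute_tax() -> bool:
    """Bottom-up: a test driver calls the lower layer directly,
    before the upper layer is involved."""
    return compute_tax(100.0) == 20.0


def stub_tax(amount: float) -> float:
    """Stub: stands in for the lower layer and returns a canned answer."""
    return 10.0


def top_down_report() -> bool:
    """Top-down: the upper layer is tested first, with the stub
    replacing the real lower layer."""
    return report(100.0, tax_fn=stub_tax) == "net=90.00"


def integrated_report() -> bool:
    """Final step in either strategy: both real components together."""
    return report(100.0) == "net=80.00"


if __name__ == "__main__":
    print(driver_compute_tax(), top_down_report(), integrated_report())
```

A big bang approach would skip the driver and stub steps and run only the integrated check, which makes it harder to tell which component a failure comes from.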