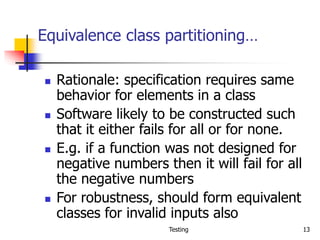

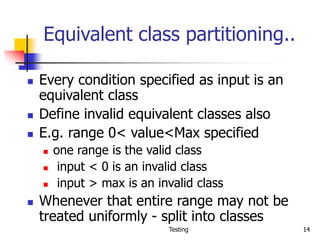

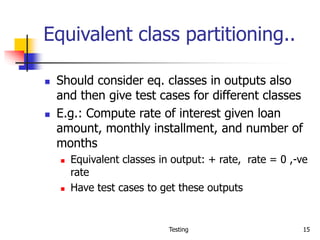

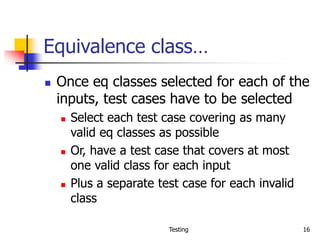

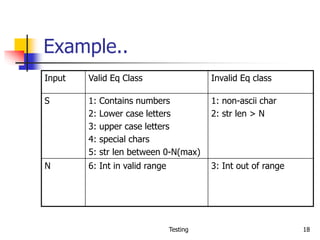

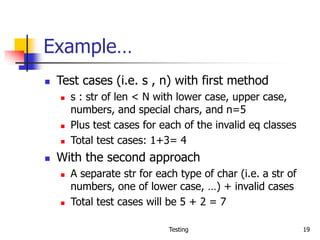

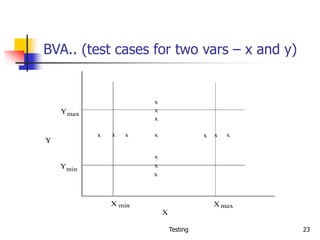

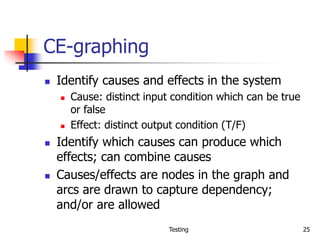

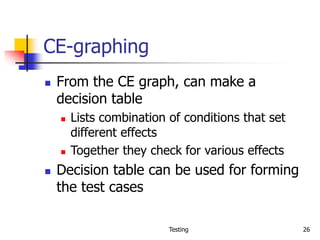

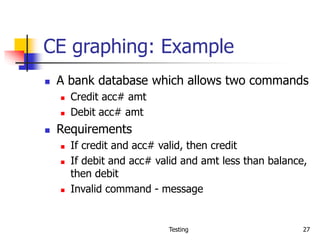

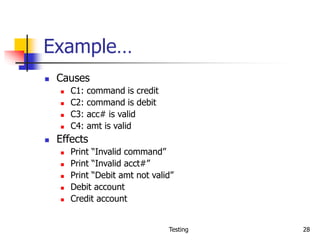

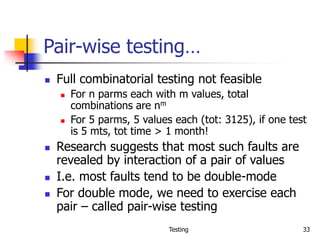

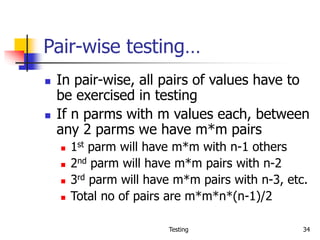

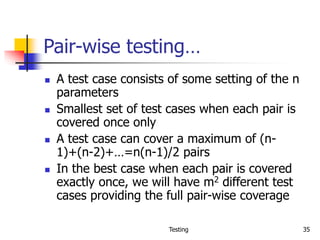

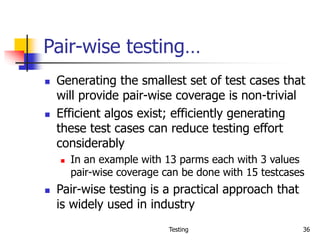

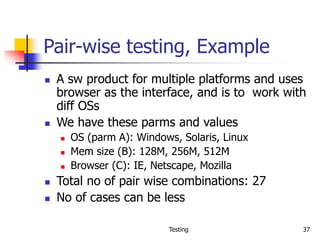

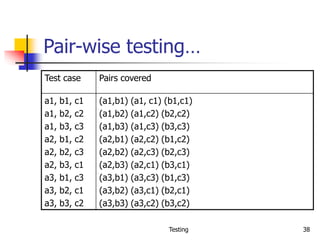

The document discusses software testing techniques, including black-box testing, equivalence partitioning, boundary value analysis, cause-effect graphing, pairwise testing, and special-case testing. The goal of testing is to identify defects by designing the test cases that are most likely to cause failures and so reveal faults in the software.
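As a rough illustration of how two of these techniques pick inputs, the sketch below derives test values for a single integer input with an inclusive valid range. Everything in it is assumed for the example and does not come from the slides: the function under test `is_valid_age`, its range of 18 to 65, and the helper names `equivalence_representatives` and `boundary_values`.

```python
def is_valid_age(age: int) -> bool:
    """Hypothetical function under test: accepts ages in [18, 65]."""
    return 18 <= age <= 65


def equivalence_representatives(low: int, high: int) -> dict:
    """Equivalence partitioning: one representative input per class.

    For an inclusive range there are three classes: values below the
    range, values inside it, and values above it.
    """
    return {
        "below": low - 1,
        "inside": (low + high) // 2,
        "above": high + 1,
    }


def boundary_values(low: int, high: int) -> list:
    """Boundary value analysis: inputs at and next to each edge."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


if __name__ == "__main__":
    # One test per equivalence class.
    for name, value in equivalence_representatives(18, 65).items():
        print(name, value, is_valid_age(value))

    # Tests concentrated at the edges, where off-by-one faults tend to sit.
    for value in boundary_values(18, 65):
        print(value, is_valid_age(value))
```

Equivalence partitioning keeps the number of test cases small by treating every input in a class as interchangeable, while boundary value analysis adds cases at the edges of each class, where a comparison written as `<` instead of `<=` would be exposed.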